Critical infrastructure and the cloud: Policy for emerging risk

Table of Contents

- Executive summary

- Introduction

- Critical sectors using the cloud

- Cloud as critical infrastructure

- Risk in the cloud

- Policy recommendations

- Authors and acknowledgements

Executive summary

Cloud computing is so ubiquitous in modern digital and internet infrastructure that it often, perversely, eludes our notice. Cloud’s benefits (cost savings, scalability, and outsourced management of infrastructure security and availability) have precipitated its rapid adoption. But, perhaps because the focus has been so strongly on these benefits, policy has lagged behind, both in reckoning with how essential cloud computing is to the functioning of the most critical systems and in developing oversight structures commensurate with that new centrality.

The cloud, just like its on-premises predecessors, faces risks. In the Sunburst hack, the compromise of core cloud services (in this case, Microsoft Azure’s Identity and Access Management services) was one vector that exposed multiple US government agencies to snooping by malicious actors.1Simon Handler, “Broken Trust: Lessons from Sunburst,” Atlantic Council (blog), March 29, 2021, https://www.atlanticcouncil.org/in-depth-research-reports/report/broken-trust-lessons-from-sunburst/. The cloud, too, is vulnerable to the perennial fallibility of software systems: in a 2019 Google Cloud outage, a misconfiguration cascaded into an hours-long brownout for services like YouTube and Snapchat, as Google’s network became congested and the very network management tools needed to resolve the issue were themselves throttled.2Brian Barrett, “How a Google Cloud Catch-22 Broke the Internet,” WIRED, June 17, 2019, https://www.wired.com/story/google-cloud-outage-catch-22/. The cloud’s growing role as fundamental infrastructure for many other services, combined with its status as a complex, technical system of systems, raises a simple follow-on question: are the policy tools at hand suited to govern the cloud’s increasing complexity and criticality?

This report zeroes in on an area where the stakes for cloud risk management are high: critical infrastructure (CI) sectors. The US government designates sectors as CI because their incapacity or destruction would have a “debilitating effect on security, national economic security, national public health or safety, or any combination of those matters.”3 42 U.S.C. § 5195c, “Critical Infrastructures Protection,” https://www.govinfo.gov/app/details/USCODE-2021-title42/USCODE-2021-title42-chap68-subchapIV-B-sec5195c. The potential for a cloud compromise or outage to incapacitate such a sector, even temporarily, is one that policymakers must take seriously.

The research draws on public information to examine cloud adoption in five specific CI sectors: healthcare, transportation and logistics, energy, defense, and financial services. In doing so, it pays particular attention to three factors that can make the cloud an operational benefit or necessity for the ongoing functionality of a sector.

1. Data storage and availability: How much data, and of what kind, has a given critical sector put into the cloud? Could the sector maintain operations without access to this data? Are there on-premises data backups and/or regulatory requirements mandating them?4To the reader, “on-premises data backups” refers to backups kept off the cloud and stored locally.

2. Scale and scalability: Has a given sector come to rely on scale that only cloud computing can enable, or upon the cloud’s ability to scale to larger workloads rapidly? Do the sector’s core services now rely on such capacity?

3. Continuous availability requirements: Has a given sector permanently moved systems that require constant availability into the cloud without local backups? And, if there are backups, how long would it take to resume operations from them in the case of a cloud compromise or outage?

This report aims to raise awareness of the risks that a potential cloud compromise or outage poses to CI and, in so doing, to make the case that these risks necessitate maturing current policy tools and creating new ones. It does not seek to vilify cloud adoption by CI sectors or to preach a return to on-premises data processing. Instead, it suggests that CI sector regulators must treat cloud security and resilience as a key question within their remit.

The report goes on to describe two features that make the risk profile of cloud computing markedly different from that of previous computing paradigms and that must inform the design of cloud risk management policy at a national level: compounded dependence and delegated control and visibility. Compounded dependence describes how widespread cloud adoption causes a huge range of organizations to depend upon a few shared linchpin technology systems, including unglamorous subsystems within the cloud, where the failure of one node could precipitate a cascading collapse. Delegated control and visibility describes how organizations that adopt cloud services cede control of and lose visibility into the operations and failure modes of these technology systems, posing challenges for both businesses and policymakers seeking to measure and manage cloud risks.

The factors of compounded dependence and delegated control and visibility pose challenges to managing potential risks to the cloud with existing policy tools, which remain more focused on end products and services than on their shared architecture and infrastructure. These risk factors will only become more pronounced as organizations accelerate their move to the cloud, and policy structures designed to manage them will be essential to smoothly navigating the ongoing transition toward cloud computing as the dominant computing paradigm.

The report concludes with policy recommendations to help policymakers gain more visibility into and eventually a better hold on cloud risks for CI sectors, building on the 2023 cloud security report from the US Department of the Treasury and the 2023 National Cybersecurity Strategy. These recommendations center on equipping Sector Risk Management Agencies (SRMAs)—the entities currently tasked with managing cybersecurity risk in CI sectors—with appropriate tools to understand cloud usage and risk within their sector, as well as mapping out the beginnings of a structure for cross-sector cloud risk management to facilitate greater transparency and oversight. These ideas are a start, rather than an end state, for cloud risk policy—visibility is a prerequisite for risk management, but other tools will be required in concert to fully confront the problem.

The conversation about cloud security is no longer just about the security of services, but about the durability of infrastructure underpinning fundamental economic and political activities. For policymakers, that recognition must now become as tangible as it is urgent.

Introduction

A risk to the security or availability of cloud computing is a risk to US economic and national security. Over 95 percent of Fortune 500 companies use cloud systems,5 To the reader, as of 2018, 95 percent of Fortune 500 companies were already using Microsoft Azure in some capacity. See: Arpan Shah, “Microsoft Azure: The Only Consistent, Comprehensive Hybrid Cloud,” September 25, 2018, https://azure.microsoft.com/en-us/blog/microsoft-azure-the-only-consistent-comprehensive-hybrid-cloud/. and many sectors considered critical infrastructure (CI), such as healthcare, transportation and logistics, energy, defense, and financial services, are increasingly using cloud computing to support their core functionality. The government, too, is adopting cloud computing, building more and more critical governmental functions in the cloud, from systems development at the US Department of Defense (DOD) to national public health crisis response systems.6“For DOD, Software Modernization and Cloud Adoption Go Hand-in-Hand,” Federal News Network, webinar announcement, September 26, 2022, https://federalnewsnetwork.com/cme-event/federal-insights/pushing-forward-on-dod-software-modernization/, 7GCN Staff, “HHS Protect: The Foundation of COVID Response,” Government Computer News (GCN), November 16, 2020, https://gcn.com/data-analytics/2020/11/hhs-protect-the-foundation-of-covid-response/315746/.

The widespread and increasing use of the cloud, especially for high-value computing workloads, has also raised the stakes for cloud security. The cloud’s centralization of data and computing capabilities has made it a target of, and battlefield for, creative, persistent threats engaging in economic espionage, offensive cyber operations, and even destructive attacks on civilian infrastructure, as well as a stage for arcane regulatory disputes and outmatched procurement processes. As a form of centralized infrastructure for computing, cloud deployments are exposed to both the security risks of their customers and the malintent of those customers’ adversaries. Cloud service providers (CSPs) thus make architectural, operational, and security decisions with potentially vast, cascading effects across sectors. And still, they must build and operate this cloud infrastructure under the strain of immense technical complexity while straddling a highly contested global marketplace that crisscrosses often-fraught political boundaries.

The aim of this paper is not, notably, to suggest that cloud adoption should be avoided or that cloud computing deployments innately bear more risk than their on-premises counterparts. Cloud computing offers real efficiency and cost benefits to organizations by obviating the need to maintain data centers and enabling flexible compute scaling in response to demand. Arguments can be made that on average cloud deployments are more secure than on-premises systems (though opinions are far from definitive8 Kevin Townsend, “More Than Half of Security Pros Say Risks Higher in Cloud Than On Premise,” SecurityWeek, September 29, 2022, https://www.securityweek.com/more-half-security-pros-say-risks-higher-cloud-premise/; Dan Geer and Wade Baker, “Is the Cloud Less Secure than On-Prem?,” Usenix (;login:), Fall 2019, https://www.usenix.org/system/files/login/articles/login_fall19_12_geer.pdf.). This paper instead seeks to illustrate that the risks posed by the widespread adoption of cloud computing are meaningfully different from the risks arising from a myriad of independent, organization-specific computing systems. Moreover, it is a call to attention to the ways in which existing policy is not yet well equipped to oversee and manage this novel risk landscape. In fact, it is precisely because cloud computing is so valuable that it is worth grappling with these new risks rather than simply fleeing back to on-premises data systems.

From a policy perspective, one of the most challenging aspects of attempting to understand the cloud’s role in CI is a lack of consistent visibility into the exact nature and depth of cloud adoption by individual organizations. There is great variety in how an organization might use cloud services—public, private, community, or hybrid clouds—as well as in the breadth of services and organization-specific usages of each service model, from software as a service (SaaS) to platform as a service (PaaS) and infrastructure as a service (IaaS). Different organizations have adopted the cloud at different rates and differ too in the degree to which they host operation-critical data and workloads in the cloud, versus merely using the cloud to host auxiliary data not necessary for their core operations.

This report makes use of what information is public to show that the cloud is already, and increasingly, embedded within CI sectors. It makes a series of policy recommendations intended primarily to help the government gain visibility into the complex, interdependent ecosystem of cloud risk and CI. These recommendations stop short of suggesting a holistic model for cloud regulation; the complexity of these products and how customers depend on different parts of them is still growing and too poorly understood across industry sectors for a one-size-fits-all approach.9Scott Piper and Amitai Cohen, “The State of the Cloud 2023,” WIZ (blog), February 6, 2023, https://www.wiz.io/blog/the-top-cloud-security-threats-to-be-aware-of-in-2023. But policymakers will not be able to arrive at a workable model without more visibility into and consideration of the landscape of cloud use and cloud risk.

The recent US National Cybersecurity Strategy invokes cloud services and calls for policy to “shift the burden of responsibility” for better cybersecurity.10President Biden, “National Cybersecurity Strategy,” The White House, March 1, 2023, https://www.whitehouse.gov/wp-content/uploads/2023/03/National-Cybersecurity-Strategy-2023.pdf. This is an important and timely debate that must involve the largest CSPs, who shoulder so much risk and must be central in any renewed effort to govern the security of cloud services and infrastructure. One of the themes of this report is that policymakers will need to “shift the burden of transparency” onto CSPs, who are the only entities well-positioned to provide insight into their dependencies and the risks they face. Now, before a catastrophic incident, is the time for the government, with industry, to accelerate toward a healthier and more risk-informed regulatory model for cloud computing.

Cloud computing and cloud risks

In cloud computing, CSPs offer customers the ability to connect, over the Internet, to data center servers and other computing resources for on-demand data storage, specialized services, and big data processing.11Simon Handler, “Dude, Where’s My Cloud? A Guide for Wonks and Users,” Atlantic Council (blog), September 28, 2020, https://www.atlanticcouncil.org/in-depth-research-reports/report/dude-wheres-my-cloud-a-guide-for-wonks-and-users/. Cloud computing gives organizations, from non-profits and government agencies to the Fortune 500, the ability to run applications and work with data without building and operating their own physical data centers or buying computer hardware, as well as the flexibility to increase or decrease the amount of these services they pay for based on real-time needs.12Peter Mell and Timothy Grance, “The NIST Definition of Cloud Computing,” NIST Special Publication (SP) 800-145, National Institute of Standards and Technology (NIST), US Department of Commerce, September 2011, https://nvlpubs.nist.gov/nistpubs/legacy/sp/nistspecialpublication800-145.pdf. Cloud computing allows organizations to offload many of the challenges that come with ensuring the security and ongoing operations of data infrastructure to a (nominally) well-resourced, technically mature provider.

The average internet user depends on cloud computing to edit documents on Google Drive, talk over a Zoom video call, or access their favorite retail or social media websites. Companies and organizations accelerated their adoption of enterprise cloud computing during the COVID-19 pandemic, as employees could use the Internet to interact with cloud-hosted organizational resources, regardless of their physical location. Cloud computing is an increasingly dominant component of the entire information technology (IT) ecosystem, even if its presence is functionally invisible to most end users.

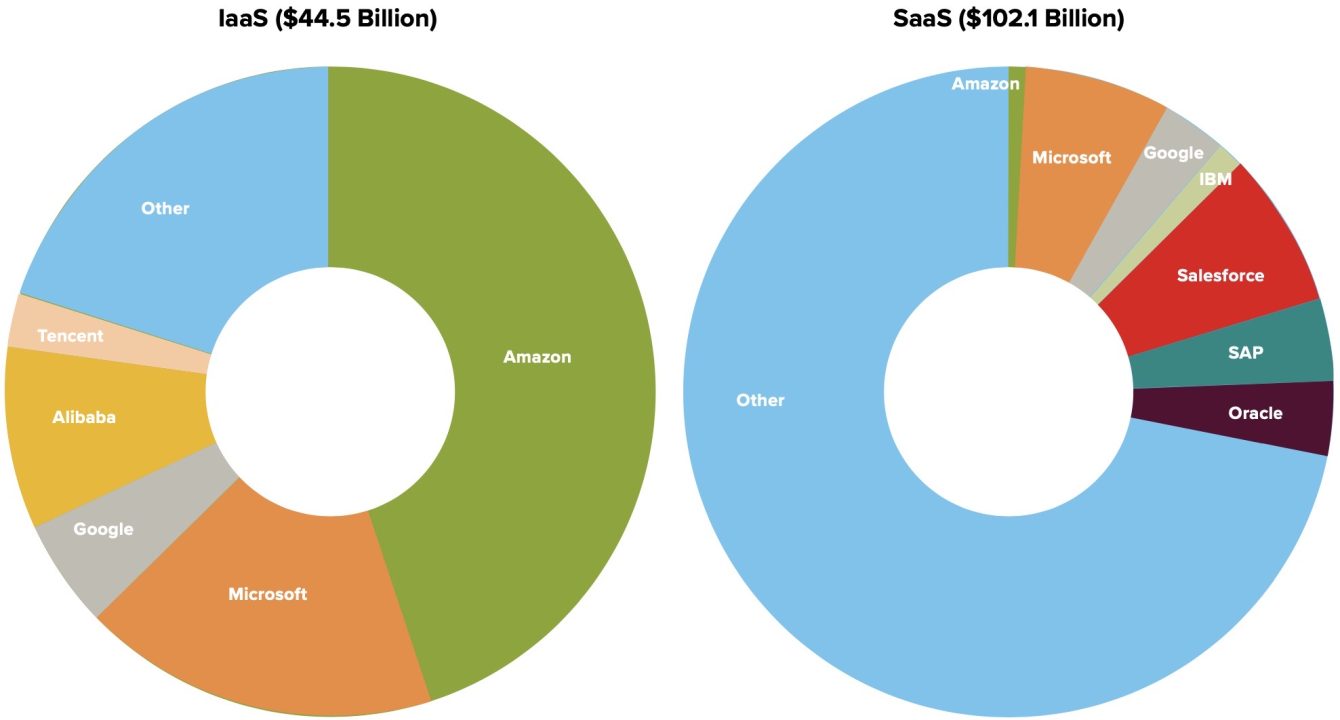

Three CSPs dominate the market: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. Together, they make up over 65 percent of the global cloud market. These “hyperscalers” benefit from economies of scale: each operates hundreds of massive data centers and allocates and redistributes customer compute demands across them to use computing resources optimally. These economies of scale provide richer suites of functionality, and more robust security features, than many small customers could ever build in-house. But the reliance of vast numbers of online services on cloud computing, combined with the highly concentrated nature of the CSP market, means that an outage at a single CSP can have strange and cascading effects across a wide range of applications. For example, a single AWS outage in 2021 stopped operations at Amazon delivery warehouses, blocked access to online education testing services, and immobilized smart home devices such as robot vacuums and app-controlled automatic cat feeders.13Annie Palmer, “Dead Roombas, Stranded Packages and Delayed Exams: How the AWS Outage Wreaked Havoc across the U.S.,” CNBC, December 9, 2021, https://www.cnbc.com/2021/12/09/how-the-aws-outage-wreaked-havoc-across-the-us.html.

The increasing reliance of many organizations on a few CSPs creates increasingly concentrated forms of systemic risk. When a CSP’s distributed computing functions fail, the interruptions to service availability can cascade across the firm’s services and clients. Most CSPs build their infrastructure from common, modular architectures.14James Hamilton, “Architecture for Modular Data Centers,” Cornell University, arXiv platform, December 21, 2006, https://doi.org/10.48550/arXiv.cs/0612110,15“Seven Principles of Cloud-Native Architecture,” Alibaba Cloud Community, January 5, 2022, https://www.alibabacloud.com/blog/seven-principles-of-cloud-native-architecture_598431. The basic hardware and software packages that make up the cloud often share significant similarities, or are wholly identical, meaning one flaw can be present in many diverse locations. For instance, on December 14, 2020, Google Cloud suffered a widespread outage that made Gmail, Google Drive, YouTube, and many other Google services inaccessible globally for about 45 minutes.16Alex Hern, “Google Suffers Global Outage with Gmail, YouTube and Majority of Services Affected,” The Guardian, December 14, 2020, https://www.theguardian.com/technology/2020/dec/14/google-suffers-worldwide-outage-with-gmail-youtube-and-other-services-down. The outage occurred because of an error in allocating storage resources for a User ID Service, which authenticates users before they can interact with Google services.17“Google Cloud Infrastructure Components Incident #20013,” Google Cloud, accessed April 27, 2023, https://status.cloud.google.com/incident/zall/20013. Because many different Google products rely on the same authentication service, this error brought down several major cloud applications worldwide. Even Google Nest smart home devices, like speakers and thermostats, went offline, triggering a fail-safe mode in which users could not access the device settings.18Hern, “Google Suffers Global Outage.” The number of systems and actors affected by this outage, all stemming from a single source, demonstrates the interconnectedness of cloud computing infrastructure.
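The shared-dependency problem in the Google incident can be made concrete by treating a cloud platform as a dependency graph and computing the “blast radius” of one failed component. The sketch below is a hypothetical, heavily simplified model: the component names are illustrative labels rather than Google’s actual service architecture, and it assumes a component fails whenever any one of its dependencies fails, with no fallback paths.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical, simplified map of "who depends on whom," loosely modeled on the
# December 2020 incident. Each key is a component; its value lists the
# components that depend on it directly. All names are illustrative labels.
DEPENDENTS: Dict[str, List[str]] = {
    "user_id_service": ["gmail", "drive", "youtube", "nest_cloud"],
    "nest_cloud": ["nest_thermostat", "nest_speaker"],
    "gmail": [],
    "drive": [],
    "youtube": [],
    "nest_thermostat": [],
    "nest_speaker": [],
}


def blast_radius(failed: str, dependents: Dict[str, List[str]]) -> Set[str]:
    """Return the set of components that lose service when `failed` goes down.

    Breadth-first search over dependency edges. Assumes a component fails
    whenever any one of its upstream dependencies fails (no fallback paths).
    """
    affected = {failed}
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return affected


# A leaf failure stays contained; a shared-service failure reaches everything.
print(sorted(blast_radius("gmail", DEPENDENTS)))
print(sorted(blast_radius("user_id_service", DEPENDENTS)))
```

In this toy graph, losing Gmail affects only Gmail, while losing the shared authentication service reaches every product and, through an intermediate cloud service, the physical devices as well. That asymmetry is the essence of compounded dependence.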

Cloud incidents do not result only from internal software failures. Physical incidents such as a flood or storm can take out a cloud data center. For example, in 2012 a severe storm near Washington, DC, cut power to an AWS data center, resulting in multi-hour downtime for sites such as Instagram and Heroku.19Rich Miller, “Amazon Data Center Loses Power During Storm,” Data Center Knowledge (Informa), June 30, 2012, https://www.datacenterknowledge.com/archives/2012/06/30/amazon-data-center-loses-power-during-storm. The failure of Heroku, itself a hosting service provider, caused further cascading failures for the websites built on its platform.20Nati Shalom, “Lessons From The Heroku Amazon Outage,” Cloudify, June 18, 2012, https://cloudify.co/blog/lessons-from-heroku-amazon-outage/. Many CSPs have protocols, called “failover,” for rerouting traffic to other data centers in the event of an outage, but this event demonstrated that failing over safely and seamlessly is a significant challenge. The outage affected Netflix even though the company explicitly pays to spread traffic across multiple data centers to avoid just such a failure, because, per Netflix’s Director of Architecture, AWS traffic routing “was broken across all zones” during the incident.21Miller, “Amazon Data Center Loses Power During Storm.” Additionally, because cloud services rely on the internet to connect customers to computing resources, attacks against underlying internet infrastructure can have ripple effects on the availability of cloud services. For example, a 2016 distributed denial-of-service (DDoS) attack against Dyn, a Domain Name System (DNS) provider, caused outages at cloud services such as AWS.22Sebastian Moss, “Major DDoS Attack on Dyn Disrupts AWS, Twitter, Spotify and More,” DCD Media Center, October 21, 2016, https://www.datacenterdynamics.com/en/news/major-ddos-attack-on-dyn-disrupts-aws-twitter-spotify-and-more/.
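Why redundancy across zones did not protect Netflix can be shown with a small model. The sketch below is hypothetical and simplified: it assumes a single shared routing layer in front of several zones and treats failover as “send traffic to the first healthy zone.” The zone names are invented, and the code does not describe how AWS actually routes traffic.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Zone:
    """One hypothetical availability zone (group of data centers)."""
    name: str
    healthy: bool


def route_request(zones: List[Zone], routing_layer_up: bool) -> Optional[str]:
    """Return the name of the zone that would serve a request, or None.

    Simplified model: one shared routing layer sits in front of every zone,
    and failover means "send traffic to the first healthy zone."
    """
    if not routing_layer_up:
        # A fault in the shared routing layer affects every zone at once,
        # so the redundant zones behind it cannot be reached.
        return None
    for zone in zones:
        if zone.healthy:
            return zone.name  # failover: first healthy zone takes the traffic
    return None  # every zone is down


zones = [
    Zone("zone-a", healthy=False),  # the zone that lost power
    Zone("zone-b", healthy=True),
    Zone("zone-c", healthy=True),
]
print(route_request(zones, routing_layer_up=True))   # failover succeeds
print(route_request(zones, routing_layer_up=False))  # redundancy is moot
```

With routing intact, traffic shifts from the failed zone to a healthy one; with the shared routing layer broken, healthy zones exist but nothing reaches them. Paying for redundancy protects only against failures that the redundant copies do not share.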

Cloud platforms can also suffer from unique cybersecurity risks. Because cloud services are generally multitenant environments (that is, a single instance of the software or infrastructure serves multiple, unrelated organizations at the same time), a malicious actor who can escape the bounds of tenant isolation can access the data and resources of other customers. Security researchers who identified multiple bugs that allowed them to access other tenants’ data called out a “problematic pattern” in which CSPs are often non-standardized and non-transparent about their tenant-isolation practices, making risk management more challenging for customers.23Amitai Cohen, “Introducing PEACH, a Tenant Isolation Framework for Cloud Applications,” WIZ (blog), December 14, 2022, https://www.wiz.io/blog/introducing-peach-a-tenant-isolation-framework-for-cloud-applications. And, because a single CSP typically serves many customers, cloud platforms are appealing targets for hackers seeking to compromise many different organizations, including through software supply chain attacks. These attacks involve implanting and/or exploiting vulnerabilities in less secure software that the target software depends on. During the SolarWinds/Sunburst campaign, discovered in 2020, Russian groups managed to access Microsoft Azure’s identity and access management (IAM) service, Azure AD. Critical applications, such as Office 365, Workday, AWS Single Sign-On, and Salesforce, are commonly integrated with Azure AD. As one of several techniques, the actors abused this access to move between different Office 365 user accounts and access highly confidential documents, emails, and calendars.24Handler, “Broken Trust.”
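Tenant isolation can be pictured as a check that every request must pass before touching shared resources. The sketch below is a hypothetical toy model, not any CSP’s implementation: real isolation relies on many layers (hypervisors, network segmentation, IAM policy), and the class and tenant names are invented for illustration. It shows records from unrelated customers living in one shared store, with each read checked against the caller’s tenant; in this model, an isolation “escape” corresponds to any code path that skips or weakens that check.

```python
from typing import Dict, Tuple


class TenantIsolationError(PermissionError):
    """Raised when a caller asks for a record owned by another tenant."""


class MultiTenantStore:
    """Toy multitenant key-value store (hypothetical, for illustration).

    Records from unrelated customers share one underlying dictionary, the
    way tenants share one software or infrastructure instance in the cloud.
    """

    def __init__(self) -> None:
        # Maps record key -> (owning tenant, value).
        self._records: Dict[str, Tuple[str, str]] = {}

    def put(self, tenant: str, key: str, value: str) -> None:
        existing = self._records.get(key)
        if existing is not None and existing[0] != tenant:
            raise TenantIsolationError(f"{tenant} may not overwrite {key}")
        self._records[key] = (tenant, value)

    def get(self, tenant: str, key: str) -> str:
        owner, value = self._records[key]
        # The isolation boundary: every read is checked against the caller.
        if owner != tenant:
            raise TenantIsolationError(f"{tenant} may not read {key}")
        return value


store = MultiTenantStore()
store.put("hospital-a", "patient-42", "record A")
print(store.get("hospital-a", "patient-42"))
try:
    store.get("other-tenant", "patient-42")
except TenantIsolationError as err:
    print("blocked:", err)
```

Because every tenant’s safety hinges on the same shared check, a single flaw in it exposes all customers at once, which is why opaque, non-standardized isolation practices complicate customers’ risk management.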

Cloud computing risks have different characteristics than those of on-premises computing. Increasing adoption of cloud services by CI operators, therefore, necessitates more active involvement from the policy community to adapt to this new risk landscape. Accordingly, this report brings attention to five CI sectors that are in the process of forming deep dependencies on the cloud and provides guidance for how policymakers can create visibility into the cloud ecosystem to begin adapting policy to address the compounded dependence and delegated control and visibility prevalent within cloud infrastructure.

Critical sectors using the cloud

This section examines cloud computing’s proliferation across five critical sectors: healthcare, transportation and logistics, energy, defense, and financial services. It highlights how cloud computing already supports the maintenance of everything from patient data to home energy supplies. The sensitivity of data and services stored in the cloud varies among these sectors, yet, within each, the cloud is already or soon will be critical to US economic, national security, and general societal interests.

Healthcare sector

The healthcare sector has quickly recognized cloud computing’s benefits.25US Cybersecurity and Infrastructure Security Agency (CISA), “Healthcare and Public Health Sector,” CISA, accessed April 27, 2023, https://www.cisa.gov/healthcare-and-public-health-sector; Vinati Kamani, “5 Ways Cloud Computing Is Impacting Healthcare,” Health IT Outcomes, October 2, 2019, https://www.healthitoutcomes.com/doc/ways-cloud-computing-is-impacting-healthcare-0001. One industry survey, for instance, reported that 35 percent of healthcare organization respondents already store more than half their data and infrastructure in the cloud.26Jessica Kim Cohen, “Report: Healthcare Industry Leads in Cloud Adoption,” Health IT: Becker’s Hospital Review, April 9, 2018, https://www.beckershospitalreview.com/healthcare-information-technology/report-healthcare-industry-leads-in-cloud-adoption.html. In 2020, companies spent $28.1 billion on healthcare cloud computing, with the number projected to increase to $64.7 billion by 2025.27Research and Markets, “Global Healthcare Cloud Computing Market (2020 to 2025) – Emergence of the Telecloud Presents Opportunities,” GlobeNewsWire, October 2, 2020, https://www.globenewswire.com/news-release/2020/10/02/2102876/0/en/Global-Healthcare-Cloud-Computing-Market-2020-to-2025-Emergence-of-the-Telecloud-Presents-Opportunities.html.

The healthcare sector generates enormous amounts of sensitive data, much of which it stores in the cloud. Electronic health records (EHRs), which contain data such as a patient’s medical history, diagnoses, and medications, are increasingly common in healthcare for their efficiency and interoperability, as are medical sensors and monitors that generate large amounts of data. CSPs have created healthcare-specific tools for the cloud storage of EHR data, such as Microsoft Azure’s API for “Fast Healthcare Interoperability Resources” (FHIR), an HL7 standard for exchanging EHRs and protected health information (PHI),28Gregory J. Moore, “Reimagining Healthcare: Partnering for a Better Future,” Microsoft, December 2, 2019, https://cloudblogs.microsoft.com/industry-blog/health/2019/12/02/reimagining-healthcare-partnering-for-a-better-future/. and AWS and Google Cloud have since launched comparable FHIR services.29Henner Dierks and Angus McAllister, “Using Open Source FHIR APIs with FHIR Works on AWS,” Amazon Web Services (blog), August 28, 2020, https://aws.amazon.com/blogs/opensource/using-open-source-fhir-apis-with-fhir-works-on-aws/; “Cloud Health API: FHIR,” Google Cloud, accessed April 27, 2023, https://cloud.google.com/healthcare-api/docs/concepts/fhir. A 2015 survey of small healthcare providers found that 82 percent of respondents in urban areas used a cloud-based EHR system (often because such systems were cheaper than on-premises alternatives).30John DeGaspari, “Cloud-Based EHRs Popularity Grows among Small Practices,” Fierce Healthcare, June 3, 2015, https://www.fiercehealthcare.com/ehr/cloud-based-ehrs-popularity-grows-among-small-practices. Moreover, major EHR software providers have begun making deals to move client EHR systems to the cloud, and some have been acquired wholesale by CSPs, as with Oracle’s $28.3 billion acquisition of Cerner, whose Millennium EHR platform Oracle plans to use to build a US national cloud database of EHRs.31Heather Landi, “Oracle, Cerner Plan to Build National Medical Records Database as Larry Ellison Pitches Bold Vision for Healthcare,” Fierce Healthcare, June 10, 2022, https://www.fiercehealthcare.com/health-tech/oracle-cerner-plan-build-national-medical-records-database-ellison-pitches-bold-vision; Heather Landi, “Google, Epic Ink Deal to Migrate EHRs to the Cloud,” Fierce Healthcare, November 16, 2022, https://www.fiercehealthcare.com/health-tech/google-epic-ink-deal-migrate-hospital-ehrs-cloud-ramp-use-ai-analytics; “A Cloud-Infrastructure Platform: Reimagining Remote Hosting Services,” Veradigm (formerly Allscripts), accessed April 27, 2023, https://www.allscripts.com/service/allscripts-cloud/; “The CareVue EHR,” Medsphere, accessed April 27, 2023, https://www.medsphere.com/resources/carevue-ehr-overview/.

Other healthcare-adjacent systems (insurance systems, Health Insurance Portability and Accountability Act (HIPAA) compliant communications, clinical and testing laboratories, crisis coordination networks, and supply-chain management practitioners) have also largely transitioned to the cloud. Healthcare.gov, the US government’s health insurance enrollment site, runs entirely on AWS.32“Managing the Healthcare.gov Cloud Migration,” Booz Allen Hamilton (case study), February 19, 2021, https://www.boozallen.com/s/insight/thought-leadership/managing-the-healthcare-gov-cloud-migration.html. HIPAA-compliant email solutions used in healthcare settings are typically extensions of cloud-based email systems such as Outlook and Gmail. Radiology facilities have moved to cloud computing to share images and reduce storage costs.33Amy Vreeland et al., “Considerations for Exchanging and Sharing Medical Images for Improved Collaboration and Patient Care,” HIMSS-SIIM Collaborative White Paper, Journal of Digital Imaging 5 (October 2016): 547–58, https://pubmed.ncbi.nlm.nih.gov/27351992/. Cloud computing helps healthcare providers translate great volumes of clinical information into “clinical decision support,” which was previously impossible due to the limitations of on-premises computing infrastructure.34Jennifer Bresnick, “Can Cloud Big Data Analytics Fix Healthcare’s Insight Problem?” Health IT Analytics, December 1, 2015, https://healthitanalytics.com/news/can-cloud-big-data-analytics-fix-healthcares-insight-problem. GE Healthcare uses Microsoft Azure to run its Edison Datalogue Connect service, which provides secure image and data exchange to physicians, reducing the need to duplicate tests at different facilities.35Moore, “Reimagining Healthcare.” Technologies such as Amazon Comprehend Medical standardize information from proprietary records so healthcare providers need not decipher external patient records themselves.36“Healthcare Interoperability: Creating a Clearer View of Patients,” Amazon Web Services, 2019, https://d1.awsstatic.com/Industries/HCLS/Resources/Healthcare%20Data%20Interoperability%20AWS%20Whitepaper.pdf.

The US Centers for Disease Control and Prevention (CDC) tapped AWS in 2014 to bolster its BioSense 2.0 program, an initiative to provide timely insight into the public health of US communities.37“US Centers for Disease Control and Prevention (CDC) Case Study,” Amazon Web Services, 2014, https://aws.amazon.com/solutions/case-studies/us-centers-for-disease-control-and-prevention/. BioSense links local, state, and federal public health institutions to respond to public health crises faster, which requires significant computing power and storage.38Kelley G. Chester, “BioSense 2.0,” Online Journal of Public Health Informatics 5, no. 1 (April 4, 2013): e100, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3692855/. In the United Kingdom, the National Health Service signed a deal with IBM for a secure public cloud to improve service delivery.39Tammy Lovell, “IBM Deal to Provide the NHS with Quicker Access to Cloud Services,” Healthcare IT News, August 3, 2020, https://www.healthcareitnews.com/news/emea/ibm-deal-provide-nhs-quicker-access-cloud-services. Novartis, one of the largest healthcare companies in Europe, uses cloud services to improve data analytics and manage decisions about a complex global supply chain of medicine manufacturing and distribution, among other functions.40Amazon Web Services, “AWS Announces Strategic Collaboration with Novartis to Accelerate Digital Transformation of Its Business Operations,” Business Wire, December 4, 2019, https://www.businesswire.com/news/home/20191204005238/en/AWS-Announces-Strategic-Collaboration-Novartis-Accelerate-Digital/.

Although it is challenging to determine how catastrophic the impacts of an outage of any single service or CSP would be, the cloud is increasingly critical to the efficient functioning of many healthcare organizations. Some practices may be able to revert to pen and paper in the event of a cloud outage, but others may not, and most will suffer from the change.41“Emergency Preparedness: Be Ready for Unanticipated Electronic Health Record (EHR) Downtime,” Institute For Safe Medication Practices, August 24, 2022, https://www.ismp.org/resources/emergency-preparedness-be-ready-unanticipated-electronic-health-record-ehr-downtime. In one incident, a ransomware attack on Allscripts’ cloud-based EHR system forced healthcare providers to fall back to paper prescriptions, possibly delaying life-saving care and raising the risk of fraud and abuse.42Evan Sweeney, “Physician Practices Forced to Use Paper Records Lash out at Allscripts over Ransomware Response,” Fierce Healthcare, January 22, 2018, https://www.fiercehealthcare.com/ehr/allscripts-ransomware-physician-practices-ehr-cybersecurity-e-prescribing.

The cloud offers real benefits, especially for small providers: cost savings, the ease of standing up functionality without an in-house IT team, and (potentially) stronger security than on-premises deployments. There is good reason why the various federal cloud strategies and policies, as well as the new National Cybersecurity Strategy, encourage cloud adoption. But adoption must be matched by more thorough, fine-grained, and effective scrutiny of CSPs and their infrastructure. Healthcare’s cloud transition will continue, so examining potential outage impacts and the sector’s degree of systemic vulnerability to a few points of failure are urgent priorities.

Transportation and logistics sector

The transportation and logistics sector plays a vital role in US and global supply chains. For instance, the freight shipping industry moves some $19 trillion of goods over land in the United States each year.43Bureau of Transportation Statistics, “2018 Freight Flow Estimates,” US Department of Transportation, December 19, 2019, https://www.bts.gov/newsroom/2018-freight-flow-estimates. The European Union, for its part, houses the world’s largest ocean shipping fleet and controls around 40 percent of the world’s tonnage, moving everything from oil and gas to cars and electrical appliances.44“Climate Change and Shipping ECSA Position Paper,” European Community Shipowners’ Associations (ECSA), January 2008, https://www.ecsa.eu/sites/default/files/publications/083.pdf. This report focuses on cloud adoption by logistics companies and airlines and finds that, at present, this sector tends to use the cloud to enhance and optimize existing business functions, with a select number of firms making more sweeping shifts.

Several transportation and logistics firms are transferring their data to the cloud to ease data management and prepare for future needs, though these are not generally “whole-of-business” shifts. The United Parcel Service (UPS) started its cloud transition in 2019 with Google Cloud, and it recently inked a deal to expand its cloud data storage.45Isabelle Bousquette, “UPS Expands Deal with Google Cloud to Prepare for Surge in Data,” Wall Street Journal, March 29, 2022, https://www.wsj.com/articles/ups-expands-deal-with-google-cloud-to-prepare-for-surge-in-data-11648551600. UPS uses cloud services to “see and control how packages move through [its] network,”46“UPS Extends Use of Google Cloud Data Analytics Technology,” United Parcel Service, March 25, 2022, https://about.ups.com/us/en/our-stories/innovation-driven/ups-and-google-cloud. which it cites as particularly critical functionality for high-volume periods such as the holidays and the COVID-19 pandemic, during which UPS needed to deliver more than a billion vaccine doses.47United Parcel Service, “UPS Extends Use of Google Cloud.” FedEx uses the cloud to plan its pickup and delivery routes worldwide.48“FedEx Uses Java on Azure to Modernize Route Planning for Pickup and Delivery Operations,” Microsoft for Java Developers (YouTube video), 2022, https://www.youtube.com/watch?v=fJ_OUNdFXHs. While these functions are important, without more information it is challenging to predict whether a significant cloud outage or compromise would be catastrophic or merely burdensome and inefficient for the ongoing operation of these entities.

American Airlines works with Microsoft Azure to host all its data and many software tools.49Tobias Mann, “American Airlines Decides to Cruise into Azure’s Cloud,” The Register, May 19, 2022, https://www.theregister.com/2022/05/19/american_airlines_azure/. The airline uses cloud services to manage aircraft operations, like airport taxiing decisions, flight planning, and gating decisions at Dallas Fort Worth, one of its main hubs, as well as to run mobile apps and airport kiosks.50“American Airlines and Microsoft Partnership Takes Flight to Create a Smoother Travel Experience for Customers and Better Technology Tools for Team Members,” American Airlines Newsroom, May 18, 2022, https://news.aa.com/news/news-details/2022/American-Airlines-and-Microsoft-Partnership-Takes-Flight-to-Create-a-Smoother-Travel-Experience-for-Customers-and-Better-Technology-Tools-for-Team-Members-MKG-OTH-05/default.aspx. Other partnerships hint at future plans to use the cloud for more safety-critical tasks: NASA announced a partnership with General Electric Company on a project to integrate cloud computing into air traffic management systems,51“Air Traffic Management Set To Meet Cloud Technology,” CloudTweaks, September 28, 2012, https://cloudtweaks.com/2012/09/air-traffic-management-technology/. and the Federal Aviation Administration (FAA) entered into a partnership in 2020 to work on cloud modernization for its safety systems.52“FAA Selects Leidos to Modernize Safety System,” Leidos (news release), December 15, 2020, https://www.leidos.com/insights/faa-selects-leidos-modernize-safety-system. A short-term cloud outage might not cause catastrophic outcomes in any of these functions, but it could cause delays or stoppages that trigger subsequent effects in downstream systems reliant on the smooth functioning of air transportation.

The transportation and logistics sector must contend with seasonal swings and weather emergencies, which can call for additional computing power to solve challenging optimization problems on the fly. For example, Rolls-Royce’s (RR) engine maintenance program downloads terabytes of data from airline fleets globally. RR relies on cloud computing to store and analyze this quantity of data, and the volume of data flowing in and out is volatile, subject to fluctuations in global travel demand.53Susanna Ray, “From Airplane Engines to Streetlights, Transportation Is Becoming More Intelligent,” Microsoft, May 2, 2016, https://news.microsoft.com/transform/from-airplane-engines-to-street-lights-transportation-is-becoming-more-intelligent. American Airlines depends on the cloud’s elasticity to quickly rebook passengers during massive flight disruptions using services hosted by International Business Machines (IBM).54“American Airlines: The Route to Customer Experience Transformation Is through the Cloud,” IBM (case study), March 2018, https://www.ibm.com/case-studies/american-airlines.

New technology initiatives also demonstrate the sector’s reliance on elasticity. UPS has attached radio-frequency identification (RFID) chips to packages for efficiency and optimization, which will significantly increase data storage and processing demands. The United States Postal Service’s (USPS) rollout of machine learning tools depends on the capture of terabytes of package data from its processing centers, necessitating elastic data storage.55Jory Heckman, “USPS Gets Ahead of Missing Packages with AI Edge Computing,” Federal News Network, May 7, 2021, https://federalnewsnetwork.com/artificial-intelligence/2021/05/usps-rolls-out-edge-ai-tools-at-195-sites-to-track-down-missing-packages-faster/. A report by international courier DHL states that more than 50 percent of logistics providers currently use cloud-based services and that an additional 20 percent will adopt them in the near future.

In sum, the transportation and logistics sector currently appears to use the cloud more for planning than for real-time operational decisions, where failure could have devastating effects. However, even short-lived delays in shipping and transportation can have costly economic effects. Moreover, industry projections and cloud-feature development suggest that the cloud will become more critical to the sector’s safe functioning in the future.

Energy sector

The energy sector, as Presidential Policy Directive 21 (PPD-21) puts it, is “uniquely critical because it provides an ‘enabling function’ across all CI sectors.”56President Barack Obama, “Presidential Policy Directive 21 – Critical Infrastructure Security and Resilience,” The White House, February 12, 2013, https://obamawhitehouse.archives.gov/the-press-office/2013/02/12/presidential-policy-directive-critical-infrastructure-security-and-resil. Increasingly, the energy sector has moved away from manual systems toward automated ones that rely on the cloud to manage and make use of data.57Vince Dawkins, “How the Energy Industry Is Embracing Cloud Computing: Three Key Areas of Success,” Cloud Tech, August 7, 2019, https://cloudcomputing-news.net/news/2019/aug/07/how-energy-industry-embracing-cloud-computing-three-key-areas-success/. Energy companies look to the cloud to update aging interfaces and increase data-transmission efficiency.58Dawkins, “How the Energy Industry Is Embracing Cloud.”

Smart grids are an example of critical energy-related infrastructure partially or wholly reliant on the cloud. Smart grids increase the resilience and capacity of the grid through activities such as dynamic load balancing and additional visibility into grid operations. The US government continues to fund smart-grid development activities, with up to $3 billion for the task included in the 2021 infrastructure law.59Grid Deployment Office, “Smart Grid Grants,” US Department of Energy, accessed April 27, 2023, https://www.energy.gov/gdo/smart-grid-grants. Smart grids often rely on the cloud for part or all of their functionality,60Enterprise.nxt, “How Edge-to-Cloud Computing Powers Smart Grids and Smart Cities,” Hewlett Packard Enterprise (HPE), April 12, 2022, https://www.hpe.com/us/en/insights/articles/how-edge-to-cloud-computing-powers-smart-grids-and-smart-cities-2204.html. meaning that, as smart grid projects get underway in more cities, more and more Americans will implicitly rely on the cloud to keep the lights on and to provide power to other CI such as hospitals, financial systems, and, ironically, data centers hosting some of these same cloud services.

The cloud today appears to already host certain functions critical to energy delivery. Duke Energy, a major US provider, has contracted with IBM to operate its Gas Transportation Management System (GTMS) on cloud infrastructure.61“Duke Energy: Keeping Energy Flowing to Hundreds of Thousands of Customers with IBM and Oracle,” IBM (case study), January 2015, https://www.ibm.com/case-studies/duke-energy. Duke Energy provides natural gas distribution to approximately half a million customers in Ohio and Kentucky, and the GTMS is essential for this distribution network’s safety and efficiency. Southern Company, the second-largest US power provider, uses Microsoft Azure to analyze real-time data from its energy equipment and, more critically, relies on the cloud’s scalability to handle the influx of messages and alerts during storms to better marshal its repair crews.62“Southern Company,” Microsoft, March 19, 2018, https://customers.microsoft.com/en-us/story/southern-company-power-utilities-azure. Portland General Electric (PGE) serves nine hundred thousand customers in Oregon and recently transitioned to a hybrid cloud service to store documents and data, as well as run software that assists with energy-loss detection, data analytics, and object storage.63“PGE Migrates to AWS, Significantly Improves Energy Loss Detection Performance,” Amazon Web Services, 2021, https://aws.amazon.com/solutions/case-studies/portland-general-electric/. Like PGE, General Electric’s (GE) Renewable Energy division uses cloud services to analyze performance and maintenance information on a global network of wind turbines.64Don McDonnell and Scot Wlodarczak, “AWS Is How: GE Renewable Energy Increases Wind Energy Production,” Amazon Web Services, June 21, 2021, https://aws.amazon.com/blogs/industries/aws-is-how-ge-renewable-energy-increases-wind-energy-production/. 
This data will eventually inform machine-learning and artificial intelligence (AI) applications but does not currently seem critical to the wind turbines’ day-to-day functioning. Southern California Edison, one of the largest utility providers in the United States, uses cloud services to aggregate drone data for fighting wildfires.65Katherine Noyes, “Fighting Fire With Tech at Southern California Edison,” Wall Street Journal, April 20, 2021, https://deloitte.wsj.com/cio/2021/04/20/fighting-fire-with-tech-at-southern-california-edison/. Again, the functionality appears useful but not critical to keeping the grid running. This is a common trend across sectors: clear examples of increased cloud usage, but with ambiguous degrees of criticality for providing core services.

ENGIE, one of the largest power utilities in France, moved to cloud-based storage to improve business and power-delivery efficiency, building a company-wide data storage system that uses cloud services to ingest and store energy consumption data and inputs from a range of small physical sensors.66“ENGIE Builds the Common Data Hub on AWS, Accelerates Zero-Carbon Transition,” Amazon Web Services, 2021, https://aws.amazon.com/solutions/case-studies/engie-aws-analytics-case-study/. While these functions factor into ENGIE’s day-to-day activities, the data stored on the cloud does not appear critical for the actual power delivery. PGE and ENGIE both depend on the cloud’s elasticity, though in different ways. During unprecedented wildfires and catastrophic wind events, PGE relied on the cloud’s ability to scale, helping the company mitigate widespread power outages.67“PGE Migrates to AWS, Significantly Improves Energy Loss Detection Performance,” Amazon Web Services, 2021, https://aws.amazon.com/solutions/case-studies/portland-general-electric/. During a mass service disruption, PGE’s communications channels remained online thanks to its cloud infrastructure. ENGIE’s growing wind-turbine data collection relies on ever-increasing cloud storage, though, unlike PGE’s elasticity use case, such data may not be necessary for core operations. The Electric Power Research Institute (EPRI) reported in 2020 that, of the twenty-two US utilities surveyed, half said that they expect to adopt “cloud-hosted transmission and distribution planning applications” within the next five years.68Michael Matz, “The Grid is Moving to the Cloud,” EPRI Journal, May 24, 2021, https://eprijournal.com/the-grid-is-moving-to-the-cloud/.

The cloud plays a role not only in energy delivery but also in upstream processes like oil and gas extraction. Some of the largest US and European Union (EU) oil-and-gas firms use the cloud for data storage and processing. ExxonMobil, the largest US publicly traded oil-and-gas company, uses Microsoft Azure to collect and store sensor data from its Permian Basin operations.69Reuters Staff, “Exxon, Microsoft Strike Cloud Computing Agreement for U.S. Shale,” Reuters, February 22, 2019, https://www.reuters.com/article/ctech-us-exxon-mobil-microsoft-cloud-idCAKCN1QB1N8-OCATC. TotalEnergies, a French multinational oil company and the world’s fifth largest, works with Nutanix to host and secure large SAP HANA and Oracle databases in the cloud and has said it will eventually move all IT functionality to the cloud.70“Total powers Digital Transformation across Energy Production with Nutanix,” Nutanix (case study), accessed April 27, 2023, https://www.nutanix.com/company/customers/total. British Petroleum (BP) has gone “all in” on cloud data storage and availability, shutting down two of its largest on-premises data centers in London’s Canary Wharf in favor of a package of Amazon services as well as an SAP product to host the oil company’s AVEVA Unified Supply Chain decision-making software.71Computer Business Review (CBR) Staff, “‘You’ve Got to Have Courage!’ BP on Going ‘All-In’ on the Cloud,” TechMonitor, June 29, 2022, https://techmonitor.ai/technology/cloud/bp-cloud-migration-interview; “BP Goes All-in on AWS for Its European Mega Data Centers,” British Petroleum (news release), December 4, 2019, https://www.bp.com/en/global/corporate/news-and-insights/press-releases/bp-goes-all-in-on-aws-for-its-european-mega-data-centers.html. 
Marathon Oil centralized much of its data collection onto the cloud,72“Helping Marathon Oil Create a Next-Generation Cloud Native Data Platform,” EPAM Systems (case study), accessed April 27, 2023, https://www.epam.com/services/client-work/helping-marathon-oil-create-a-next-gen-cloud-native-data-platform; “Marathon Oil Reduces Intelligent Alert Creation Time from Months to Hours Using AWS Partner Seeq,” Amazon Web Services (case study), March 2023, https://aws.amazon.com/partners/success/marathon-oil-seeq/. while Chevron developed a cloud-based tool for oil-well data management.73Mary Branscombe, “How Microsoft Is Extending Its Cloud to Chevron’s Oil Fields,” Data Center Knowledge, November 21, 2017, http://www.datacenterknowledge.com/microsoft/how-microsoft-extending-its-cloud-chevron-s-oil-fields. These companies store immense quantities of data in the cloud and, by increasingly shuttering on-premises data centers, will find it ever more costly to reverse course. Depending on the specific functions for which each relies on the cloud, a cloud outage could have impacts ranging from simply delaying data reporting to shutting down operational facilities or stalling supply chains.

As in healthcare, major energy players, from oil-and-gas companies to electricity-delivery utilities, are adopting the cloud for functions ranging from auxiliary data processing to core operational capabilities. The impacts of potential cloud compromises on energy availability are hard to predict, especially as the interconnected nature of the energy supply chain and grid could magnify the unavailability of one component or system into widespread cascading effects. Policymakers have begun to grapple with the interconnection of cyber and energy. For example, the recent National Cybersecurity Strategy notes that cybersecurity will grow increasingly important for next-generation energy technologies such as “advanced cloud-based grid management platforms,” and pledges to “build in cybersecurity proactively through implementation of the Congressionally-directed National Cyber-Informed Engineering Strategy.”74Biden White House, “National Cybersecurity Strategy.” Still, more work is required to fully map out the energy sector’s cloud dependence as well as the potential impacts of a devastating cloud compromise for the sector.

Defense sector

The defense sector appears to be the slowest in adopting the cloud among the CI sectors surveyed here, perhaps for understandable reasons: defense-related data systems are subject to more stringent and slow-to-change security requirements than any civilian infrastructure sector. However, both the military and large defense contractors have gingerly started placing auxiliary and systems development functions on the cloud. Policymakers have increasingly identified cloud adoption as a linchpin technology for the future of defense information systems: the Acting Chief Information Officer of the Department of Defense, John Sherman, stated in his 2021 Congressional testimony that “[DOD has] made cloud computing a fundamental component of our global IT infrastructure and modernization strategy. With battlefield success increasingly reliant on digital capabilities, cloud computing satisfies the warfighters’ requirements for rapid access to data, innovative capabilities, and assured support.”75House Armed Services Committee, “[H.A.S.C. No. 117-50] Department of Defense Information Technology, Cybersecurity, and Information Assurance for Fiscal Year 2022,” 117th Congress House Hearing text, June 29, 2021, https://www.congress.gov/event/117th-congress/house-event/LC67110/text?s=1&r=25.

Cloud adoption by the military and defense contractors has been largely facilitated through government-led programs such as Cloud One, which aims to make the cloud accessible across the DOD by acting as a “one-stop-shop” for procuring cloud services from all the hyperscalers.76“Cloud One: Enabling Cloud for Almost Any Department of Defense Use Case,” Air and Space Forces Magazine, July 2, 2021, https://www.airandspaceforces.com/cloud-one-enabling-cloud-for-almost-any-department-of-defense-use-case/. All Cloud One services have been accredited to comply with stringent DOD security requirements, lowering contractual barriers that have traditionally precluded military cloud use. Platform One is a similar initiative providing tooling, development pipelines, and a Kubernetes platform for DOD operators.77“Platform One,” US Air Force, accessed April 27, 2023, https://p1.dso.mil. Platform One aims to help military personnel deploy ready-made, almost-fully-configured cloud products. These initiatives signify an endorsement of cloud in the military, with pathways built out for greater reliance in the future.

While national security considerations often make it impossible to know exactly what kinds of defense data and defense workloads migrate to the cloud, some public information is available. For example, Lockheed Martin has begun moving its test and development instances of SAP HANA, a database used by a variety of applications, onto AWS.78“Lockheed Martin Case Study,” Amazon Web Services, 2017, https://aws.amazon.com/solutions/case-studies/Lockheed-martin/. Small and medium defense contractors are also transitioning, aided by expertise from the DOD.79Laura Long, “Defense Industrial Base Secure Cloud Managed Services Pilot,” EZGSA, April 3, 2019, https://ezgsa.com/tag/defense-industrial-base-secure-cloud-managed-services-pilot/.

In the US military, the Navy stands out as the chief adopter of cloud computing. In 2020, the Navy began moving its planning and tracking tools, which monitor hundreds of ships and aircraft, their repair logs, and other operational details, to the cloud.80AWS Public Sector Blog Team, “Readying the Warfighter: U.S. Navy ERP Migrates to AWS,” Amazon Web Services, January 22, 2020, https://aws.amazon.com/blogs/publicsector/readying-warfighter-navy-erp-migrates-aws/. The Naval Information Warfare Center Pacific shifted its DevSecOps (development, security, and operations) environment, called the Overmatch Software Armory, to the cloud, while other cloud services deliver over-the-air updates to software on some naval vessels, maintain contact between sailors and their families onshore, and deliver personnel services and programming to deployed sailors.81Liz Martin, “US Navy Deploys DevSecOps Environment in AWS Secret Region to Deliver New Capabilities to Its Sailors,” Amazon Web Services, June 29, 2021, https://aws.amazon.com/blogs/publicsector/us-navy-deploys-devsecops-environment-aws-secret-region-deliver-new-capabilities-sailors/. Apart from the Navy, the US Defense Logistics Agency (DLA) has migrated some of its applications to the cloud, including its procurement management software and a new training suite.82AWS Public Sector Blog Team, “Defense Logistics Agency Migrates Five Applications to AWS GovCloud (US) Ahead of schedule,” Amazon Web Services, January 16, 2020, https://aws.amazon.com/blogs/publicsector/defense-logistics-agency-migrates-five-applications-ahead-schedule/. In the defense intelligence community, some agencies use cloud services to analyze satellite imagery and encrypt communications.83“Oracle Cloud for the Defense Department,” Oracle, accessed April 27, 2023, https://www.oracle.com/industries/government/us-defense/.

For large defense contractors, cloud deployments have mainly augmented existing on-premises infrastructure: the cloud provides additional computing resources but generally operates alongside on-premises infrastructure rather than as a wholesale replacement. In a momentous 2022 decision, Boeing announced that it would use cloud services from multiple CSPs while maintaining a mostly on-premises infrastructure in the interim.84Sebastian Moss, “Boeing Announces Cloud Partnerships with Microsoft, Google, and AWS,” Data Center Dynamics, April 6, 2022, https://www.datacenterdynamics.com/en/news/boeing-announces-cloud-partnerships-with-microsoft-and-google/; Aaron Raj, “Boeing Takes to the Cloud with AWS, Google, and Microsoft,” TechWire Asia, April 8, 2022, https://techwireasia.com/2022/04/boeing-expands-cloud-services-with-aws-google-and-microsoft/. One reason behind Boeing’s decision is the cloud’s ability to easily scale test environments and store the immense datasets a jet’s sensors generate on each flight. The company cited a Boeing 787’s need to download up to 500 gigabytes (GB) of data per flight, and Raytheon has made similar arguments about scalability.85Jay Greene and Jon Ostrower, “Boeing Shifts to Microsoft’s Azure Cloud Platform,” Wall Street Journal, July 18, 2016, https://www.wsj.com/articles/boeing-shifts-to-microsofts-azure-cloud-platform-1468861541. Lockheed Martin has recently begun to use cloud computing to supplement its on-premises capacity for sensitive workloads.86Jeff Morin and Dan Zotter, “Lockheed Martin’s Journey to the Cloud,” ASUG Annual Conference, May 7, 2019, https://blog.asug.com/hubfs/2019%20AC%20Slide%20Decks%20Thursday/ASUG82404%20-%20Lockheed%20Martin’s%20Journey%20to%20the%20Cloud.pdf.

Rates of cloud use in defense seem likely to increase as defense contractors become more familiar with its risks and benefits. In its 2018 Cloud Strategy, DOD notes that the “episodic nature” of its mission makes the cloud’s scaling capabilities an attractive feature.87“Department of Defense Cloud Strategy,” US Department of Defense, December 2018, https://media.defense.gov/2019/Feb/04/2002085866/-1/-1/1/DOD-CLOUD-STRATEGY.PDF. Because the US military and its contractors have been slow to migrate critical systems to the cloud, a cloud compromise would likely not wholly hobble national defense. Less clear is how significant the impacts of such an event would be on important processes such as supply chain and logistics planning. If current defense sector cloud partnerships are successful, the cloud may soon grow much more critical to US national defense.

Financial services industry

Financial institutions were among the earliest cloud adopters, but their early experimentation has not yet translated into widespread migration of critical workloads, due at least in part to the financial industry’s substantial data-handling and security regulations. Many US-incorporated financial institutions must abide by the requirements of the Basel Accords, Sarbanes-Oxley Act,88Sarbanes-Oxley Act of 2002, Public Law No: 107-204, Government Publishing Office, July 30, 2002, https://www.govinfo.gov/content/pkg/PLAW-107publ204/pdf/PLAW-107publ204.pdf. Payment Card Industry Data Security Standards (PCI DSS), Gramm-Leach-Bliley Act, bank secrecy acts, and other legal frameworks.89See for example, “Cloud Security Implications for Financial Services,” Avanade (White paper), 2017, https://www.avanade.com/-/media/asset/white-paper/cloud-security-implications-for-finanical-services.pdf?la=en&ver=1&hash=51A7A54F67E900ADDE743F89AAA96233. The February 2023 report on cloud use in the financial sector from the US Department of the Treasury (or Treasury) said that more than 90 percent of banks had some data or processes in the cloud, but that only 24 percent of North American banks had begun migrating critical workloads to the cloud.90The Financial Services Sector’s Adoption of Cloud Services, US Department of the Treasury (February 2023): 27–28, https://home.treasury.gov/system/files/136/Treasury-Cloud-Report.pdf. The Treasury report suggests that non-bank financial institutions, such as investment advisors and broker-dealers, are also migrating to the cloud relatively cautiously. It noted that cloud adoption has been faster among small institutions, which often rely on third-party service providers that might themselves rely on the cloud. 
Adoption has also been faster in financial institutions focused on artificial intelligence and machine learning, whose massive computing requirements often make the cloud a practical necessity.91US Department of the Treasury, Financial Services Sector’s Adoption of Cloud Services, 19.

Select financial institutions have more rapidly embraced the cloud as their primary infrastructure for core digital workloads. Capital One, among the largest banks in the United States, announced in 2022 that it had closed all eight of its private data centers and now runs major services entirely in the cloud, including applications working with client data and backup services, an unprecedented move for a financial institution.92Lananh Nguyen, “Banks Tiptoe Toward Their Cloud Based Future,” New York Times, January 3, 2022, https://www.nytimes.com/2022/01/03/business/wall-street-cloud-computing.html. A catastrophic cloud event leading to the temporary or permanent unavailability of this data would undoubtedly disrupt functionality at Capital One and prove difficult to recover from without cloud-based tools. A massive data breach in 2019 exposed one of the recurring challenges in cloud computing—the trust boundary between a cloud-consuming organization and its CSP. An attacker compromised the firm’s AWS-hosted data stores and gained access to personal data for more than 100 million people, in an incident later attributed to a misconfiguration by Capital One that left it vulnerable to a common attack against cloud services.93Emily Flitter and Karen Weise, “Capital One Data Breach Compromises Data of Over 100 Million,” New York Times, July 29, 2019, https://www.nytimes.com/2019/07/29/business/capital-one-data-breach-hacked.html; Brian Krebs, “What We Can Learn from the Capital One Hack,” Krebs on Security, August 5, 2019, https://krebsonsecurity.com/2019/08/what-we-can-learn-from-the-capital-one-hack/.

Nasdaq, the world’s largest securities exchange, responsible for matching buyers and sellers across billions of orders, cancellations, and trades each day, moved from on-premises data centers to the cloud in 2014.94Nguyen, “Banks Tiptoe”; “Nasdaq Uses AWS to Pioneer Stock Exchange Data Storage in the Cloud,” Amazon Web Services, 2020, https://aws.amazon.com/solutions/case-studies/nasdaq-case-study/; “Trading and Matching Technology,” Nasdaq, accessed April 27, 2023, https://www.nasdaq.com/solutions/trading-and-matching-technology. The stock market’s unpredictable trade volume created a need for elasticity best provided by cloud services. Nasdaq’s cloud transition proved timely during the COVID-19 pandemic, when the number of transaction records surged to 113 billion a day in March 2020. Because standing up enough on-premises infrastructure for such quantities of data would be virtually impossible in the short term, losing this capability risks undermining core market functions.95Nguyen, “Banks Tiptoe”; Amazon Web Services, “Nasdaq Uses AWS to Pioneer”; Nasdaq, “Trading and Matching.” A major cloud compromise or disruption could impact the exchange’s ability to accurately store the day’s transactions, bill customers, and comply with regulatory requirements.

Other major financial institutions, including Goldman Sachs and the Deutsche Börse Group, have increasingly moved sensitive data to the cloud, though few at the same pace as Capital One or Nasdaq. The Deutsche Börse Group, which runs the Frankfurt Stock Exchange, uses a cloud-based tool to analyze investor behavior and offer guidance on better trading strategies, rather than for any core functions of the exchange.96“Goldman Sachs and AWS Collaborate to Create New Data Management and Analytics Solutions for Financial Services Organizations,” Goldman Sachs, November 30, 2021, https://www.goldmansachs.com/media-relations/press-releases/2021/goldman-sachs-aws-announcement-30-nov-2021.html; “Deutsche Borse Group Launches Data Analytics Platform in Rapid Time using AWS,” Amazon Web Services, 2022, https://aws.amazon.com/solutions/case-studies/deutsche-boerse-case-study1/.

The financial sector increasingly relies on the ability to rapidly scale its use of cloud computing resources up and down to keep pace with unpredictable volumes of financial data. In addition, the increasing complexity of the machine learning models that financial institutions use to make decisions about everything from whether a transaction is fraudulent to loan interest rates often necessitates cloud-scale resources. NetApp, a leading cloud data management company, helped an unnamed “hedge fund division of a major investment bank headquartered in the US” transition its risk modeling functions into Google Cloud to take advantage of its ability to rapidly scale up compute on demand.97“Cloud Computing in Finance with NetApp Cloud Volumes ONTAP: Case Studies,” NetApp, January 18, 2021, https://cloud.netapp.com/blog/cloud-computing-in-financial-services. Capital One relies on the cloud’s scalability to manage seasonal transaction surges.98David Andrzejek, “Becoming a Fintech: Capital One’s Move from Mainframes to the Cloud,” CIO, May 17, 2022, https://www.cio.com/article/350288/becoming-a-fintech-capital-ones-move-from-mainframes-to-the-cloud.html. Robinhood, a retail investor platform, relied on cloud services to support hundreds of thousands of users at launch.99“Robinhood Case Study,” Amazon Web Services, 2016, https://aws.amazon.com/solutions/case-studies/robinhood/. Other banking firms like HSBC and Standard Chartered report using cloud services for customer analytics and even some customer transactions.100“Standard Chartered Cuts Risk Grid Costs 60% on Amazon EC2 Spot Instances,” Amazon Web Services (YouTube video), November 12, 2019, https://www.youtube.com/watch?v=o-sw9CLY6Go; “HSBC on AWS: Case Studies, Videos and Customer Stories,” Amazon Web Services, accessed April 28, 2023, https://aws.amazon.com/solutions/case-studies/innovators/hsbc/.

Other core and critical financial applications have moved to the cloud, too: Wells Fargo reportedly uses Microsoft Azure as the foundation of its “strategic business workloads,” and Capital One has shifted its disaster-recovery and business-continuity functionality to the cloud.101“Wells Fargo Announces New Digital Infrastructure Strategy and Strategic Partnerships with Microsoft, Google Cloud,” Wells Fargo (Business Wire), September 15, 2021, https://newsroom.wf.com/English/news-releases/news-release-details/2021/Wells-Fargo-Announces-New-Digital-Infrastructure-Strategy-and-Strategic-Partnerships-With-Microsoft-Google-Cloud/default.aspx. Vanguard, a leading American investment advisor, relies on a suite of cloud services similar to Capital One’s, reporting near-total cloud adoption across more than 850 production software applications in 2021.102Jeff Dowds, “AWS re:Invent 2019 – Jeff Dowds of Vanguard Talks About the Journey to the AWS Cloud,” Amazon Web Services (YouTube video), December 12, 2019, https://www.youtube.com/watch?v=8kzOj9cStGo; “Vanguard Increases Investor Value Using Amazon ECS and AWS Fargate,” Amazon Web Services, 2021, https://aws.amazon.com/solutions/case-studies/vanguard-ecs-fargate-case-study/.

Cloud use across financial sector firms is growing and attracting the notice of policymakers. The Financial Stability Board (FSB), an international body of central banks, finance ministries, and financial regulators, expects financial institutions to face strong commercial and efficiency incentives to move to the cloud, given the considerable improvements to operational efficiency it offers.103“FinTech and Market Structure in Financial Services,” Financial Stability Board, February 14, 2019, https://www.fsb.org/wp-content/uploads/P140219.pdf. In August 2019, Reps. Katie Porter (D-CA) and Nydia Velazquez (D-NY) sent a letter to the Treasury Department strongly urging the Financial Stability Oversight Council to designate the leading CSPs (i.e., AWS, Azure, and Google Cloud) as “systemically important financial market utilities” (SIFMUs),104To the reader, SIFMUs are organizations that, if they fail, would have a catastrophic impact on the stability of financial markets, such as financial clearinghouses. It is important to note that SIFMUs themselves, such as the Options Clearing Corporation, increasingly rely upon cloud services, making cloud providers an even more important part of the financial system. See: “OCC Launches Renaissance Initiative to Modernize Technology Infrastructure,” The Foundation for Secure Markets, January 14, 2019, https://www.theocc.com/Newsroom/Press-Releases/2019/01-14-OCC-Launches-Renaissance-Initiative-to-Moder. a designation that would allow the Federal Reserve to more directly examine and regulate CSPs to prevent potential risks to the stability of the financial system.105Katie Porter and Nydia Velazquez, “Letter to Secretary Mnuchin,” US Congress, August 22, 2019, https://velazquez.house.gov/sites/velazquez.house.gov/files/FSOC%20cloud%20.pdf.
The Dodd-Frank Act created the SIFMU designation in recognition of the fact that the financial sector itself is intricately interconnected and that the availability and functionality of certain components are integral to the continued health and functioning of the financial system as a whole.106“Designations: Financial Market Utility Designations,” US Department of the Treasury, accessed May 9, 2023, https://home.treasury.gov/policy-issues/financial-markets-financial-institutions-and-fiscal-service/fsoc/designations. These interdependencies compound the systemic risk created by shared reliance on a handful of CSPs: an outage at one CSP could trigger a domino effect of cascading failures at other institutions through their financial relationships, even if those institutions rely on different technologies.

Treasury’s 2023 report, The Financial Services Sector’s Adoption of Cloud Services, was a welcome step toward mapping the complex landscape of cloud service models. It noted that “a lack of aggregated data to assess concentration is a key impediment to understanding the potential impact of a severe, but plausible operational incident at a CSP on the financial sector.”107US Department of the Treasury, Financial Services Sector’s Adoption of Cloud Services, 57. The report identified as key barriers “(i) the lack of common definitions or identification approaches for critical or material cloud services used by financial institutions, (ii) the lack of a common and reliable method to measure concentration, (iii) different data collection authorities and mandates across FBIIC-member agencies.”108US Department of the Treasury, Financial Services Sector’s Adoption of Cloud Services, 57–58. 109To the reader, FBIIC is the acronym for the Financial and Banking Information Infrastructure Committee. It further noted the increased difficulty of assessing risk due to “‘nth party’ dependencies…[as] CSPs provide services to many other third-party service providers that a financial institution may rely on, and also use many sub-contractors, creating indirect dependencies for financial institutions that are more difficult to assess.”110US Department of the Treasury, Financial Services Sector’s Adoption of Cloud Services, 50.

The difficulties that even the Treasury Department, an experienced sector risk management agency, faces in assessing the financial sector’s systemic vulnerability to cloud incidents exemplify broader measurement challenges common to CI sector regulators attempting to understand the impact of cloud technology on sector risk.

Cloud as critical infrastructure

The goal of this report is not to evaluate the cloud as a new CI sector. Instead, it addresses the nature of the cloud’s criticality, both on its own merits and to other sectors, in service of specific policy actions that could better govern that criticality. As illustrated above, CI sectors increasingly look to cloud computing to host important workloads. The narrative of the cloud’s economic, operational, and security advantages appears uniformly persuasive, even if the pace of adoption, and the operational criticality of the workloads moved to the cloud, vary greatly among sectors. As cloud adoption ramps up, so too will the potential harm to CI from any significant outage, compromise, or cascading failure. Does this mean the cloud services industry itself ought to be considered CI?

PPD-21’s CI definition is subjective: no quantitative threshold determines criticality to national and economic security, either directly or transitively. Regulatory authorities rely on common knowledge and intuition to make that classification, often focusing on physical, tangible sectors. Most of the sixteen CI sectors are ones with which citizens interact daily (either directly or transitively), such as water, transportation, financial services, and food. Absent a strict methodology, one way to determine criticality is to hypothesize the consequences of an infrastructure’s sudden unavailability. The healthcare, transportation and logistics, energy, defense, and financial services sectors increasingly rely on cloud technology for critical workloads, so a sudden loss of cloud availability could have cascading consequences of the kind that policymakers sought to avoid by designating these sectors as CI in the first place. The cloud therefore ought itself to be considered CI, if for no other reason than that it is ever more critical to the operation of already designated CI sectors. The intent of this acknowledgment is not to argue for adding cloud as another CI sector (indeed, IT is already a CI sector) but to highlight the need for increased scrutiny of cloud computing by existing CI sector regulators and policymakers, given its growing role as a critical dependency for CI.

Mainstream discussion often glorifies cloud computing as “next-generation” technology, citing cost efficiency, speed, and scalability. Press releases by major CSPs contain myriad references to “transforming” industries,111“AWS and Atos Strengthen Collaboration with New Strategic Partnership to Transform the Infrastructure Outsourcing Industry,” Amazon Press Center, November 30, 2022, https://press.aboutamazon.com/2022/11/aws-and-atos-strengthen-collaboration-with-new-strategic-partnership-to-transform-the-infrastructure-outsourcing-industry; “The University of California, Riverside Enters Into First-of-Its-Kind Subscription-Based Service with Google Cloud to Transform Research and IT,” Google Cloud (news release), March 9, 2023, https://www.googlecloudpresscorner.com/2023-03-09-The-University-of-California,-Riverside-Enters-Into-First-of-its-kind-Subscription-based-Service-with-Google-Cloud-to-Transform-Research-and-IT. going “all-in” on the cloud,112“Wallbox Goes All-In on AWS,” Amazon Press Center, November 30, 2022, https://press.aboutamazon.com/2022/11/wallbox-goes-all-in-on-aws; “AGL Transforms 200+ Applications, Goes All in on Cloud, and Sets up for Sustained Success,” Microsoft News Center, June 9, 2020, https://news.microsoft.com/en-au/features/agl-transforms-200-applications-goes-all-in-on-cloud-and-sets-up-for-sustained-success/. and building next-generation technology.113“AWS and NVIDIA Collaborate on Next-Generation Infrastructure for Training Large Machine Learning Models and Building Generative AI Applications,” Amazon Press Center, March 21, 2023, https://press.aboutamazon.com/2023/3/aws-and-nvidia-collaborate-on-next-generation-infrastructure-for-training-large-machine-learning-models-and-building-generative-ai-applications; “Mercedes-Benz and Google Join Forces to Create Next-Generation Navigation Experience,” Google Cloud (news release), February 22, 2023, https://www.googlecloudpresscorner.com/2023-02-22-Mercedes-Benz-and-Google-Join-Forces-to-Create-Next-Generation-Navigation-Experience; “Empowering the Future of Financial Markets with London Stock Exchange Group,” Official Microsoft Blog, December 12, 2022, https://blogs.microsoft.com/blog/2022/12/11/empowering-the-future-of-financial-markets-with-london-stock-exchange-group/. Much policy has sought to speed and streamline government cloud adoption to harness these potential efficiency and cost benefits.114Vivek Kundra, “Federal Cloud Computing Strategy,” The White House, February 8, 2011, https://obamawhitehouse.archives.gov/sites/default/files/omb/assets/egov_docs/federal-cloud-computing-strategy.pdf; “Strategic Plan to Advance Cloud Computing in the Intelligence Community,” Office of the Director of National Intelligence, June 26, 2019, https://www.dni.gov/files/documents/CIO/Cloud_Computing_Strategy.pdf. These benefits are real. This report does not seek to dissuade cloud adoption but argues that realizing those benefits requires measured consideration of the unique risk landscape that widespread cloud dependence creates, not just in individual cloud services but also in the common infrastructure and architectures that power them.

Even so, policy discussions about managing cloud risk as critical infrastructure adopts the cloud are lagging. One reason might be that cloud computing remains an opaque topic for many: the technology integrates a mix of old and new computer science concepts, and cloud product offerings are often designed to offload complexity from customers, presenting a computing paradigm that looks familiar on the surface even though it is vastly different under the hood.115Handler, “Dude, Where’s My Cloud?” Cloud computing is therefore not intuitively recognized as critical, because its ubiquity and complexity are hidden by design. Where cloud policy discussions are underway, they often focus on the security of specific services rather than on the macro-level choices CSPs make in designing this infrastructure and the emergent properties of widespread adoption. The next section unpacks these properties, specifically compounded dependence and delegated control and visibility, and how they combine to create systemic risk.

Risk in the cloud

Cloud computing systems display two properties that create unique risk characteristics: compounded dependence and delegated control and visibility. These properties are the central concern of this report, and they arise from cloud architecture and the behavior of cloud infrastructure far more than from the security properties of any single cloud service.