Online tool helps social media accounts amplify pro-Israel messages

“Words of Iron” represents a shift in the evolving approach to digital propaganda during times of war

Banner: Screenshots from a Words of Iron promo video explaining how social media users can participate in the campaign. (Source: Words of Iron/archive)

Following the October 7, 2023 Hamas terrorist attack on Israel, the website known as Words of Iron began helping social media users amplify pre-written pro-Israel messages and mass-report content that the site deemed false or anti-Israel. Thousands of accounts on X used the site during the first days of the war to post copy-paste replies targeting other platform users, including politicians from the United States and Europe.

Words of Iron is among a new generation of information warfare tools and techniques deployed by Israel since the start of the conflict, some of which are now receiving additional scrutiny. On June 5, the New York Times reported on an inauthentic network of social media accounts previously investigated by the DFRLab and other open-source researchers for targeting US lawmakers on the platform X. According to reporting by the Times, as well as by Ha’aretz and FakeReporter, the network’s operation was funded by Israel’s Ministry of Diaspora Affairs, which allocated approximately $2 million for the effort, run by the Israeli marketing company Stoic. Last week, Meta announced it had also discovered Stoic’s presence on its Facebook platform following DFRLab’s initial investigation, subsequently banning hundreds of fake accounts and issuing a cease-and-desist order against the marketing company. Similarly, OpenAI announced it had determined that the campaign had utilized its AI tools to generate fictitious user personas.

The current online information battle began immediately following the October 2023 Hamas attack, with Hamas and other supporting entities relying on Telegram and X to promote their messaging. Subsequently, Israel’s digital strategy used the country’s official social media accounts, in addition to ads placed across multiple social media platforms, on the streaming platform Hulu, and in mobile games, to gain public support and spotlight Hamas crimes. Within this new digital space, various pro-Israel groups launched digital tools supporting Israel’s public diplomacy efforts, highlighting a shift in propaganda approaches during wartime.

Following the 2019 war in Gaza, the DFRLab reported on the limited impacts of the smartphone app Act.IL, which served a purpose similar to Words of Iron by coordinating the spread of pro-Israel messages on social media platforms. After October 7, volunteer groups created initiatives like Israeli Spirit to increase the online reach of content supportive of Israel and projects such as Digital Dome and the Telegram bot Iron Truth to assist volunteers in mass reporting social media content they considered harmful or false. The Washington Post reported that another tool resembling Words of Iron known as Moovers “is directly tied to Israel,” with a Tel Aviv-based marketing firm promoting the tool as “endorsed by Israel’s Government Advertising Agency.” Like Words of Iron, Moovers also encourages users to promote and report content. Another tool reported on by the Post, Project T.R.U.T.H., provides users with AI-generated text to use in replies to social media content.

The evolution of Words of Iron

Words of Iron (WOI), a name likely derived from the Israel Defense Forces’ Operation Swords of Iron in Gaza, is an easy-to-use dashboard and tool created by Israel Tech Guard, a volunteer group formed after the October 7 attack. The group’s current website, registered on the day of the October 7 attacks, states that its team is “a coalition of Israeli developers, AI specialists, tech founders, project managers, and coders.” Though the current version of the group’s website does not show WOI as part of its featured projects, it appeared as such in an older archived version of the website. The archived site also states that the group is “working in close cooperation with the IDF and security services.” Subsequent iterations of Israel Tech Guard’s website no longer include this statement.

According to an interview with the tool’s founder by Israeli tech website CTech, WOI is “a joint initiative between the National Information Directorate, the Ministry for Diaspora Affairs and Combating Antisemitism,” and other government offices along with Israel Tech Guard. The tool aims to shape the war’s social media narrative by empowering individuals to promote positive content through “automating the generation of source-based materials in response to hateful media.”

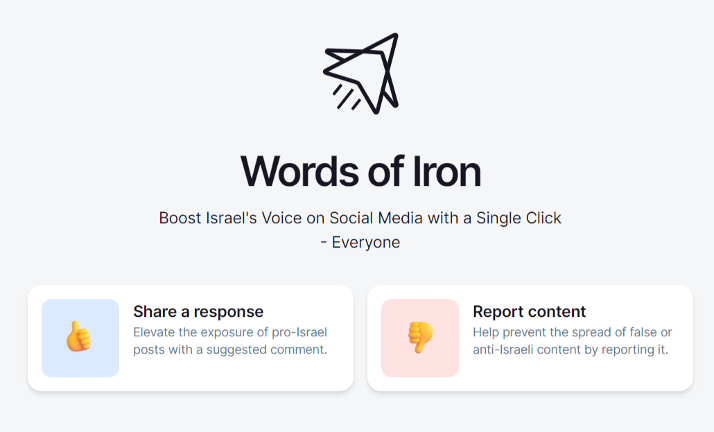

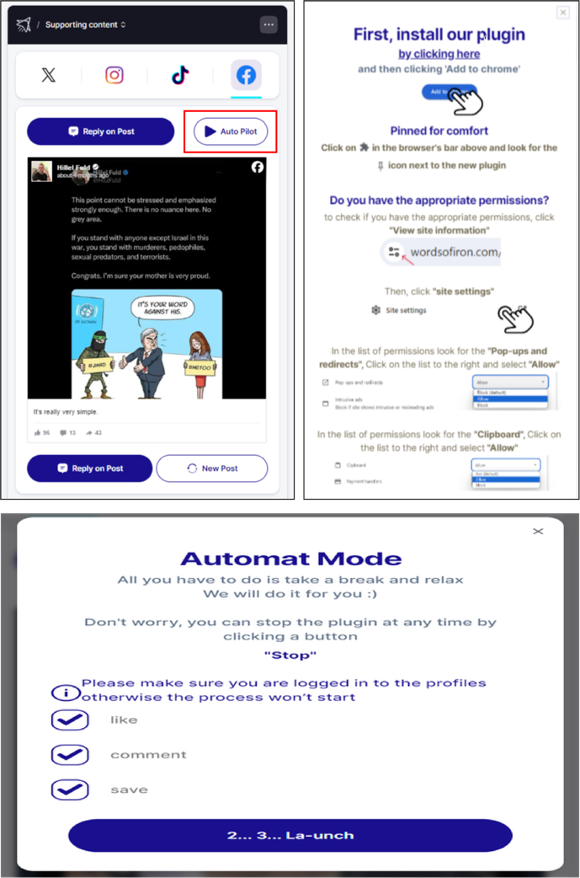

Supporting and reporting

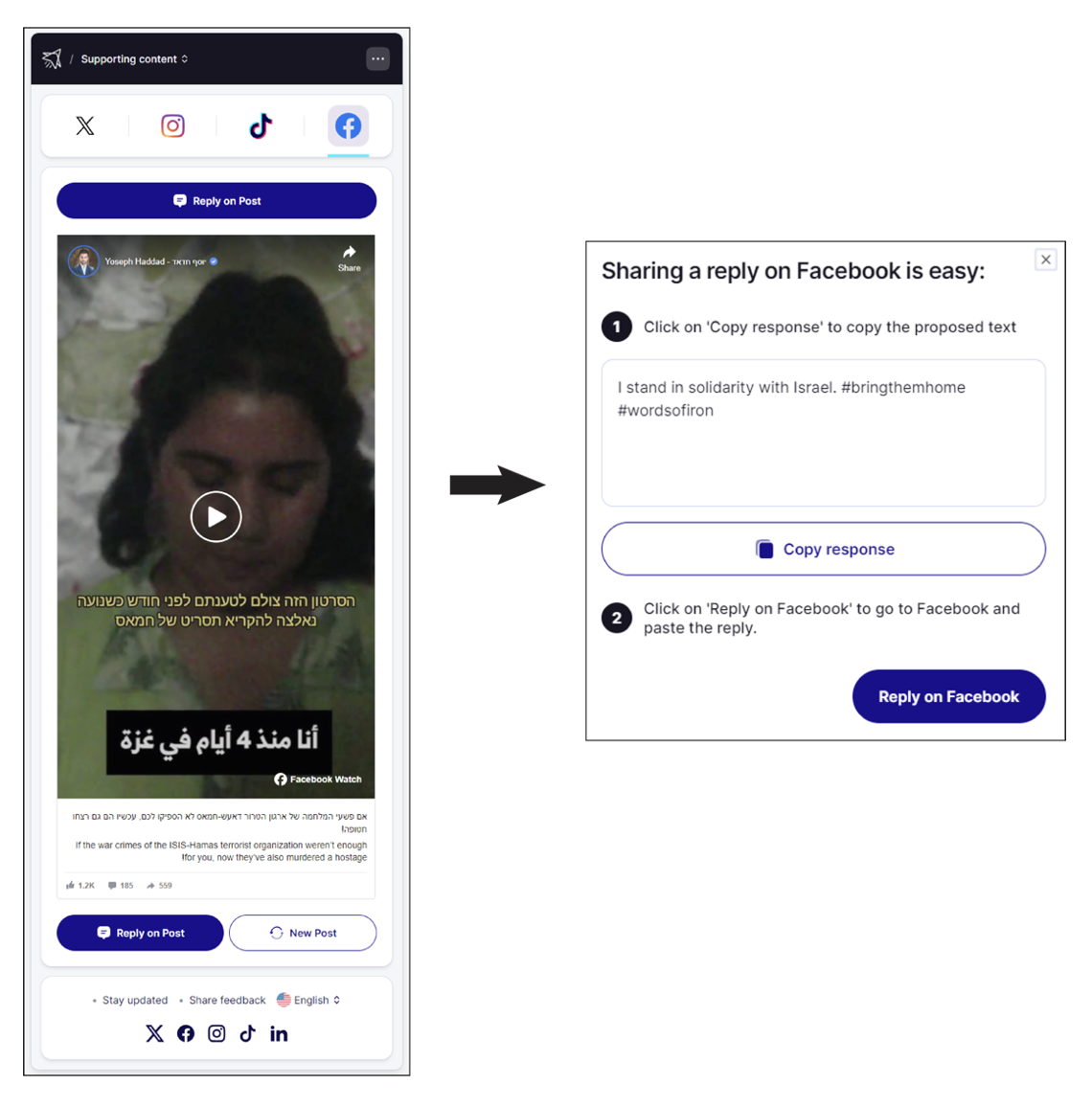

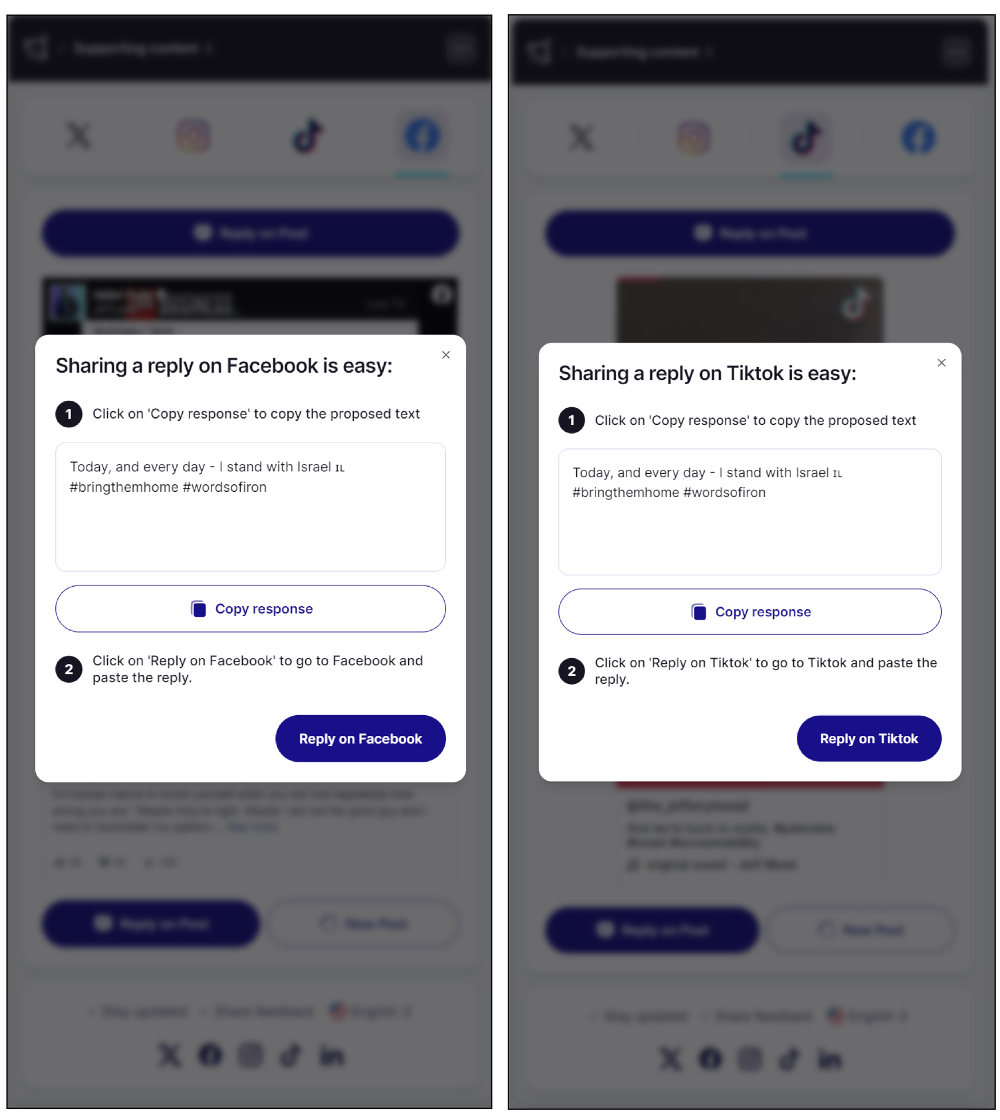

WOI helps coordinate and semi-automate social media users’ communication efforts by providing two options: either to “Share a response” to pre-selected pro-Israeli posts or to “Report content” that the site describes as “false” or “anti-Israel.” If a user decides to share a response, they are then provided copies of predetermined posts on Facebook, X, Instagram, and TikTok, alongside a feature that generates automated text and hashtags for the user to copy, paste, and post as a reply. Users can then press a button that takes them to the post. On X, if the user is logged in, the tool can automatically create replies that only require the user to press the publish button.

The tool identifies specific pro-Israel social media posts for users to reply to, with a focus on politicians, journalists, and social media influencers. The generated text includes language that encourages solidarity with Israel and Israeli hostages in Gaza, thanks supporters, counters claims against Israel, and targets Hamas, among other content. The site also generates hashtags appropriate to particular messaging, including #wordsofiron, #bringthemhome, #HamasisISIS, and #standwithIsrael. Sometimes the generated text also includes emojis.

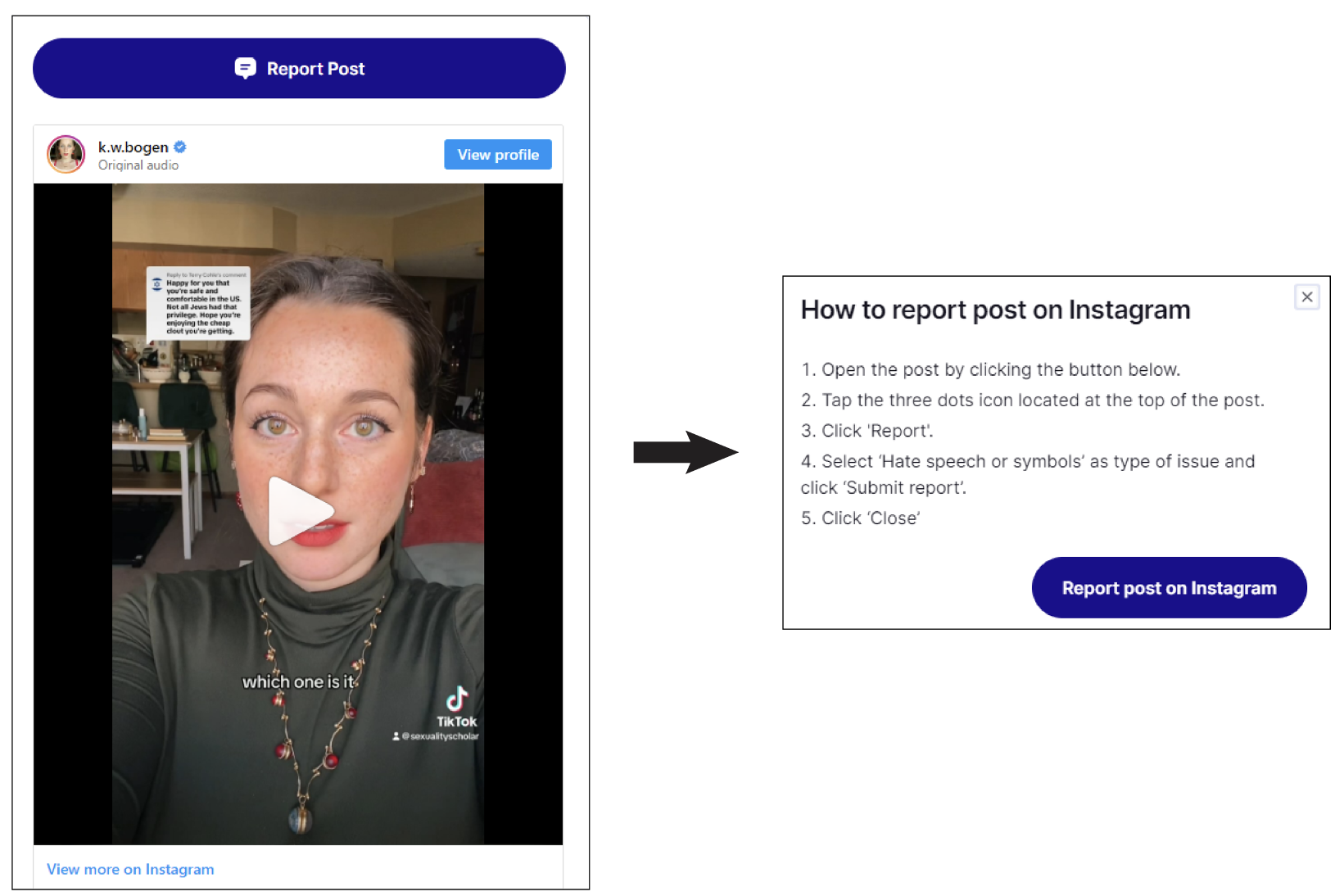

WOI works in a similar way to report content, labeling posts it claims to be spreading anti-Israel or false content and generating guidelines on how to submit such reports.

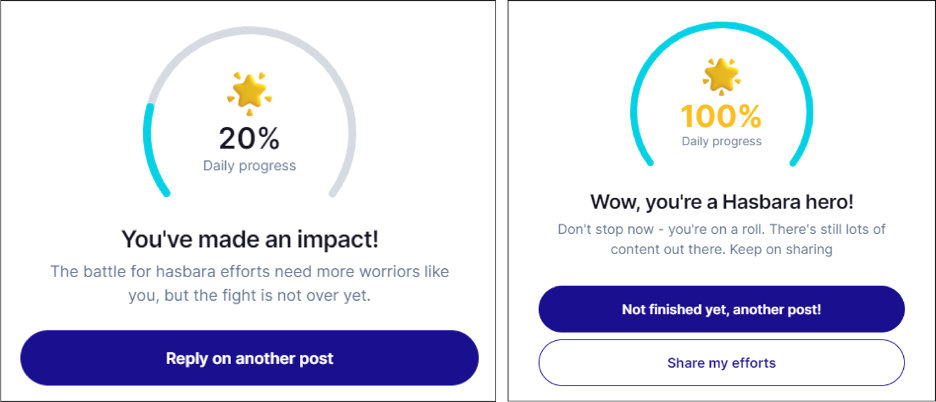

Gamification and automation

The tool also incorporates gaming elements in the form of a scoring system that appears after users click the reply button to support or report a post. This functionality incentivizes repeated use of the tool to reach daily participation targets, employing a percentage-based points system that increases after each click until the user reaches their goal and receives a “Hasbara hero” badge.

In January 2024, WOI added an “Auto Pilot” option for the “supporting content” feature by providing a Google Chrome extension. According to the website’s Chrome Web Store page, users can download the extension to further automate the process “with just one click.” The DFRLab did not test this feature.

Targeting politicians and influencers

Among the accounts featured on the tool for users to support are Instagram, Facebook, X, and TikTok accounts of various American and European politicians; the Israeli Embassy in Washington, DC; controversial news aggregator Visegrad24; influencers and activists such as Ella Kenan, Hen Mazzig, and Yoseph Haddad; and the Bring Them Home Now accounts, in addition to many others.

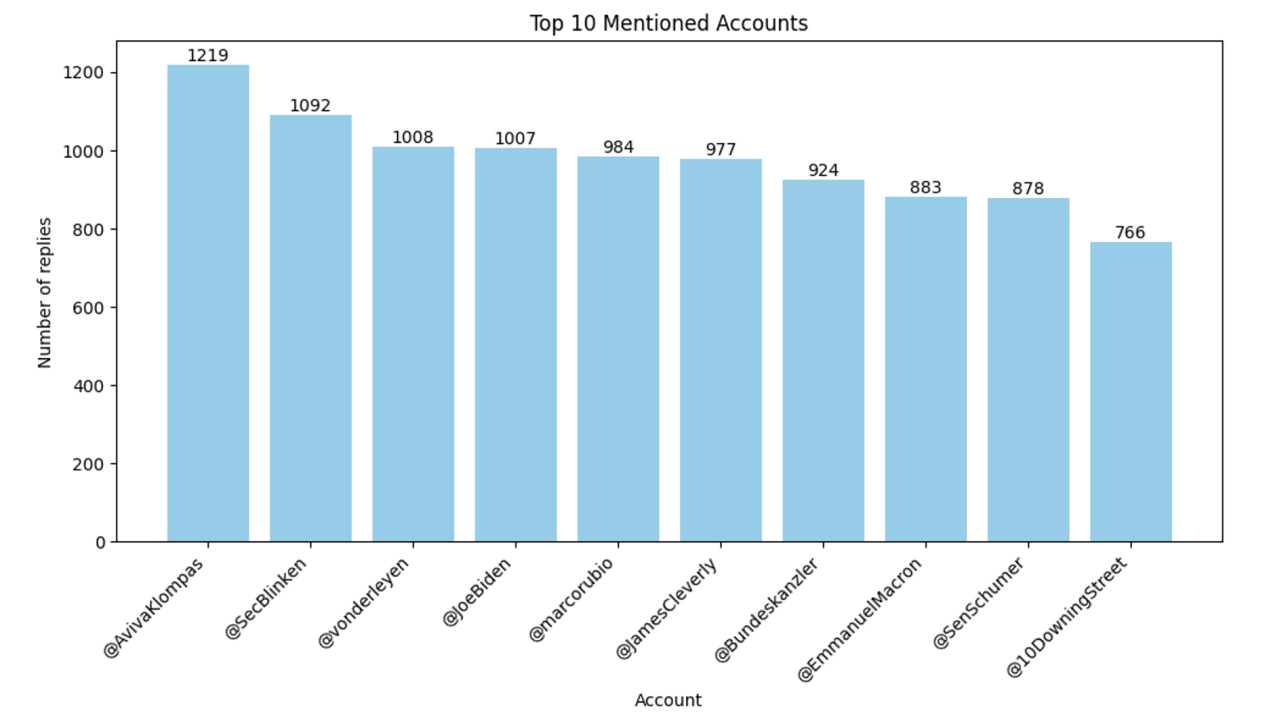

A review of Meltwater Explore data analyzing the use of the hashtag #wordsofiron on X between October 10, 2023 and January 7, 2024, revealed that among the top ten recipients of replies featuring the hashtag, nine were American and European leaders and politicians. Aviva Klompas, former Director of Speechwriting for Israel’s Permanent Mission to the United Nations, received the most replies. After Klompas, the accounts that received the most replies belonged to US Secretary of State Antony Blinken, President of the European Commission Ursula von der Leyen, United States President Joe Biden, US Senator Marco Rubio, Home Secretary of the United Kingdom James Cleverly, Chancellor of Germany Olaf Scholz, President of France Emmanuel Macron, US Senator Chuck Schumer, and the official account for Prime Minister Rishi Sunak’s office.

An analysis of the flagged posts for users to report en masse demonstrated a range of content about the war. Some posts were by accounts known for sharing false information, while other posts were by accounts critical of Israel. On Instagram, the tool featured accounts belonging to Palestinian journalists Yara Eid and Bisan Owda, as well as content creator Katherine Wela Bogen. Among those featured many times on Facebook were the political group Scottish Jews Against Zionism and journalist Daizy Gedeon. The site also targeted posts by creators Venus Bleeds and Aussia Bibiyaan on TikTok, and on X, accounts such as physician Dr Mads Gilbert and influencers known for posting disinformation such as Sulaiman Ahmed and Jackson Hinkle. Some accounts were targeted on multiple platforms, such as the accounts of Guy Christensen on both TikTok and Instagram, Sally Buxbaum Hunt on Instagram and Facebook, and Lucas Gage on X and Instagram.

As the DFRLab examined posts featured by the tool during different periods from December 2023 to March 2024, we observed that some social media posts across various platforms included in the tool’s reporting feature were no longer available. However, it is difficult to determine whether such content was removed because of mass reporting by Words of Iron users or if the platforms themselves took independent steps to remove the content. For example, the DFRLab noted that WOI featured at least fourteen posts by journalist Daizy Gedeon’s Facebook page for users to report. Two of the posts were later removed from Facebook.

Measuring impact on X

As previously noted, Words of Iron automatically generates text for users who are already logged into X so they can post without the need to copy and paste content. The generated text included the hashtag #wordsofiron, which enabled the DFRLab to track thousands of replies containing the hashtag.

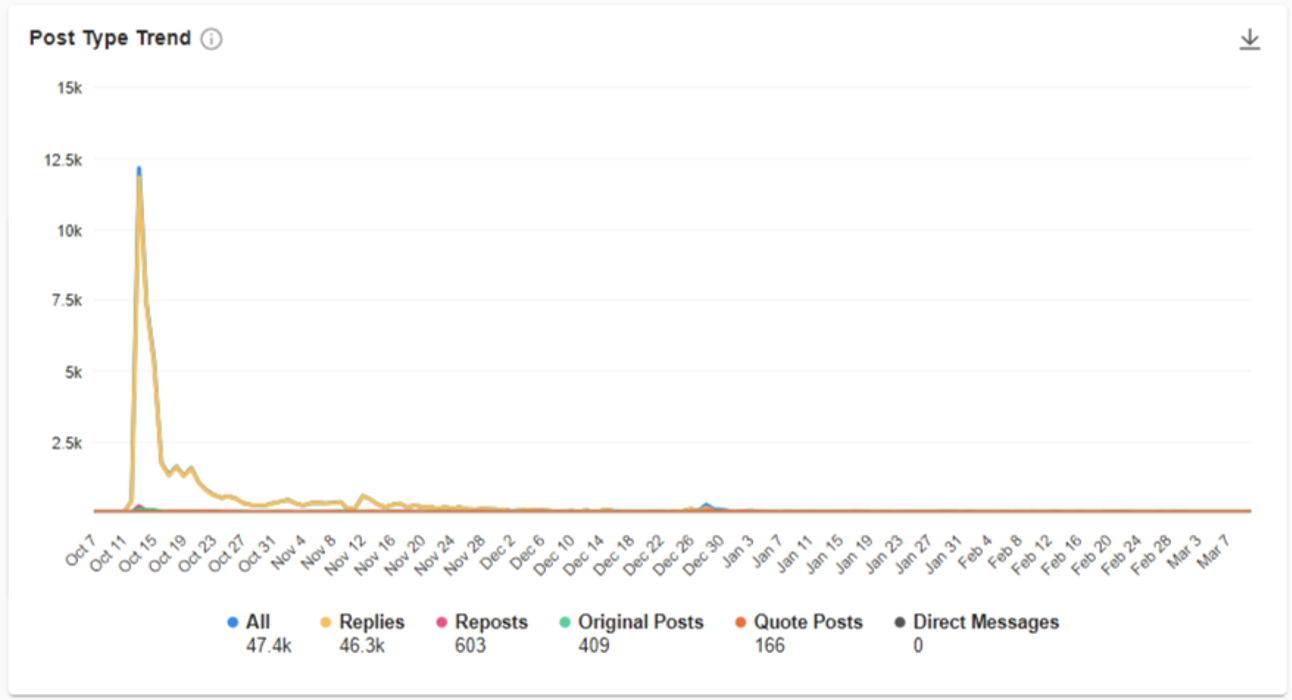

Using the monitoring tool Meltwater Explore, the DFRLab found that between October 10, 2023 and March 10, 2024, the hashtag #wordsofiron appeared over 40,000 times in posts by more than 5,000 unique users, with an estimated reach of about 1.63 million users. The data showed a spike in engagement on October 13, mostly replies, which then fell off quickly, suggesting that Words of Iron achieved only temporary success as a mass messaging tool.

Approximately 98 percent of instances of the hashtag on the platform were in the form of replies, as compared to original posts or reposts. This aligns with the tool’s focus on amplifying posts through replies, and further suggests that most of the engagement on the hashtag came from accounts using the online tool. Yet it is difficult to measure and confirm whether most users employing the hashtag also utilized the tool, as some users could have used the hashtag without being guided by Words of Iron to do so.

Thousands of users used automatically generated replies directed towards selected posts, which resulted in a high volume of duplicate pro-Israel messages in replies to the same and different posts. Moreover, the automatic generation of identical text suggests the curation of a pre-set list of replies created by Words of Iron.

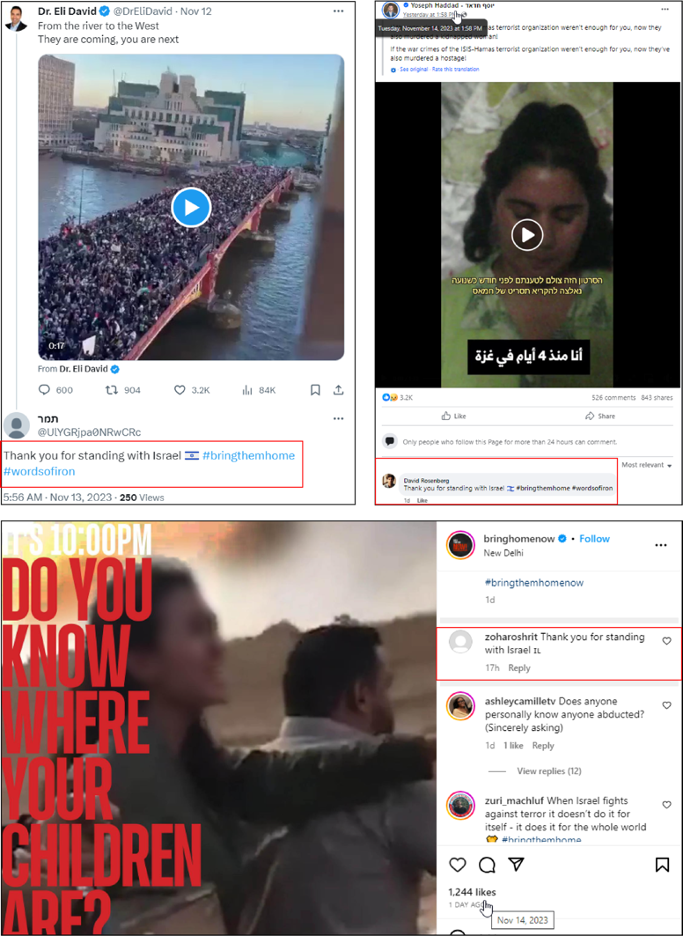

The DFRLab observed that some accounts had a high posting rate. In one example, the X account @offerrossman posted more than three hundred copy-paste replies on October 13 that included #wordsofiron, over four hundred replies the next day, and over five hundred on October 15. In another example on X, there were more than one hundred replies using #wordsofiron on a post from @USConspiracies that included a TikTok video calling for the support of Israel, including one reply from the WOI account. Eight accounts used the same text to reply “Today, and every day – I stand with Israel 🇮🇱 #bringthemhome #wordsofiron,” while more than ten accounts replied with the text “I stand in solidarity with Israel. #bringthemhome #wordsofiron” during different times.

Between October 12-26, 2023, more than six hundred accounts published the same verbatim reply more than 1,400 times: “Our safety lies in our unity, as we stand together to protect our beloved nation. #hamasisISIS #standwithisrael #bringthemhome #wordsofiron.”
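Counts like these can be reproduced from any export of posts by grouping on the reply text. The following is a minimal sketch, not the DFRLab's actual workflow: it assumes the export has been reduced to `(author, text)` pairs, and the function name and whitespace/case normalization are illustrative choices.

```python
from collections import defaultdict

def duplicate_reply_stats(posts):
    """Group posts by normalized text.

    `posts` is an iterable of (author, text) pairs (an assumed input shape).
    Returns {first-seen text: (number of distinct accounts, number of posts)}.
    """
    first_seen = {}                 # normalized key -> original text
    accounts = defaultdict(set)     # normalized key -> set of authors
    counts = defaultdict(int)       # normalized key -> total posts
    for author, text in posts:
        # Collapse whitespace and ignore case so trivial variations still match.
        key = " ".join(text.split()).casefold()
        first_seen.setdefault(key, text)
        accounts[key].add(author)
        counts[key] += 1
    return {first_seen[k]: (len(accounts[k]), counts[k]) for k in counts}

# Illustrative data only; the account names are made up.
sample = [
    ("user_a", "I stand in solidarity with Israel. #bringthemhome #wordsofiron"),
    ("user_b", "I stand in solidarity with Israel.  #bringthemhome #wordsofiron"),
    ("user_a", "I stand in solidarity with Israel. #bringthemhome #wordsofiron"),
    ("user_c", "Thank you for standing with Israel 🇮🇱 #bringthemhome #wordsofiron"),
]
stats = duplicate_reply_stats(sample)
```

Sorting the result by the number of distinct accounts surfaces the messages most likely to come from a shared template, though identical text alone does not prove that a particular tool produced it.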

Monitoring of the tool showed that its pre-set replies changed over time, as some messages were not generated after specific dates; new hashtags were also added. This aligns with data from Meltwater Explore showing sudden decreases in the amplification of specific messages following certain dates. For example, from October 13-25, 2023, around two hundred accounts posted 285 replies saying, “False narrative: Israel ignores the Palestinian people. Truth: Many Israelis and Palestinians work together for coexistence and understanding. Our common enemy is Hamas.#HamasisISIS #standwithIsrael #bringthemhome #wordsofiron#HamasisISIS #standwithIsrael #bringthemhome.” However, this exact post was not used by any accounts after October 25, according to Meltwater data, suggesting the text prompt had been removed from the system.

Newly created accounts and suspicious activities

Analysis of X accounts that appear to have used the tool revealed the presence of accounts that exhibited suspicious behavior, particularly those accounts created after October 7. Moreover, the DFRLab observed several accounts increasing their posting activity after long dormant periods.

Using the Twitter ID Find and Converter tool, the DFRLab identified at least two hundred accounts created after the October 7 attacks, some created minutes apart from each other, that amplified WOI messaging. More than thirty accounts were created on October 12, while more than twenty were established the following day.
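One way creation times can be recovered from numeric account IDs is by decoding the timestamp embedded in X's "snowflake" ID format. The sketch below is an illustration of that general technique and is not a description of how the converter tool used by the DFRLab works. It relies on the publicly documented snowflake layout (timestamp in the bits above the lowest 22, offset from an epoch of 1288834974657 milliseconds) and only applies to IDs issued in that format; older accounts carry sequential IDs that cannot be decoded this way. The helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

TWITTER_EPOCH_MS = 1288834974657  # snowflake epoch, in ms since the Unix epoch
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def snowflake_to_datetime(snowflake_id: int) -> datetime:
    """Decode the UTC creation time embedded in a snowflake-format ID."""
    ms = (snowflake_id >> 22) + TWITTER_EPOCH_MS
    return UNIX_EPOCH + timedelta(milliseconds=ms)

def created_after(ids, cutoff: datetime):
    """Return the snowflake IDs whose embedded timestamp is later than `cutoff`."""
    return [i for i in ids if snowflake_to_datetime(i) > cutoff]

def datetime_to_snowflake_floor(moment: datetime) -> int:
    """Smallest snowflake ID that could have been issued at `moment` (for testing)."""
    ms = (moment - UNIX_EPOCH) // timedelta(milliseconds=1)
    return (ms - TWITTER_EPOCH_MS) << 22

# Cutoff chosen to match the article's framing; the time of day is an assumption.
cutoff = datetime(2023, 10, 7, tzinfo=timezone.utc)
```

Sorting the decoded timestamps also makes clusters of accounts created minutes apart easy to spot.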

Some of these newly created accounts exhibited suspicious activity; more than one hundred accounts did not have an avatar image and used alphanumeric handles at the time of reporting. Alphanumeric handles are usernames generated by the platform’s algorithm that consist of a combination of letters and numbers.
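A simple heuristic can flag accounts matching this description for manual review. The sketch below is an assumption-laden illustration: the pattern (letters or underscores followed by at least five trailing digits) is a guess at what default-style handles look like, not X's actual generation rule, and the function and field names are hypothetical.

```python
import re

# Assumed pattern: a name-like prefix followed by a run of five or more digits.
DEFAULT_STYLE_HANDLE = re.compile(r"^[A-Za-z_]+\d{5,}$")

def looks_auto_generated(handle: str) -> bool:
    """Return True if the handle matches the assumed default-handle pattern."""
    return bool(DEFAULT_STYLE_HANDLE.match(handle.lstrip("@")))

def flag_for_review(accounts):
    """Return handles that match the pattern and have no avatar.

    `accounts` is an iterable of (handle, has_avatar) pairs, an assumed shape.
    """
    return [h for h, has_avatar in accounts
            if looks_auto_generated(h) and not has_avatar]
```

A match is only a prompt for closer inspection, since real users often keep the handle the platform suggested at sign-up.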

Several accounts also had the same display names and sometimes the same avatars. For example, the accounts @OmerMazor, created in August 2009 and dormant from September 2009 until becoming active again on October 8, 2023, and @OMazor72680, created after the October 7 attack, employed the same name and avatar source photo. It is unclear whether the accounts belong to the same person or whether the new account used an existing name and avatar.

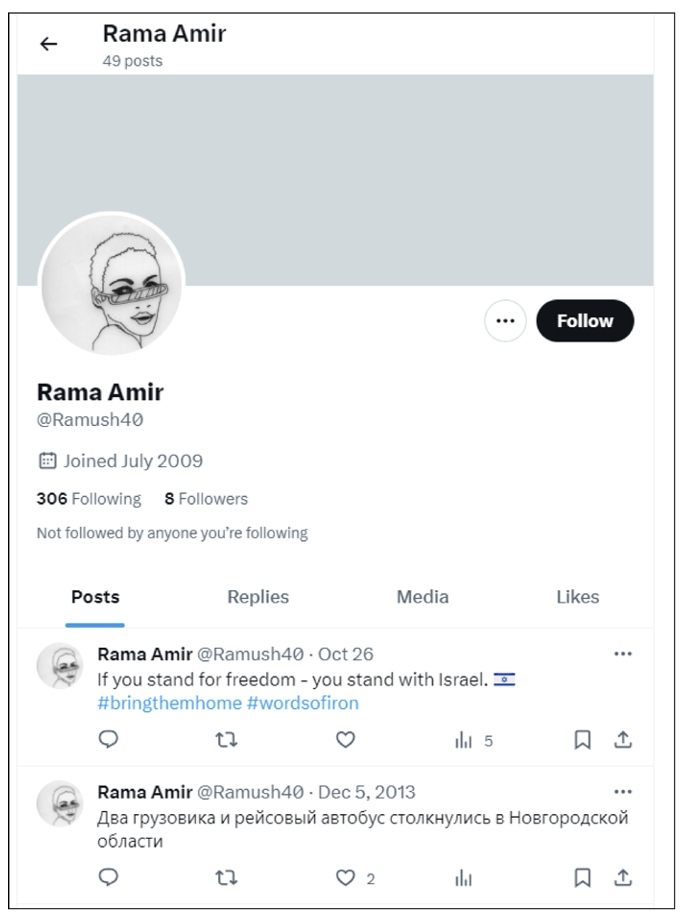

Many accounts that amplified WOI messaging were dormant for years before resuming activity to post WOI content. For example, the account @Ramush40, created in July 2009, was dormant from December 5, 2013, until it became active again on October 23, 2023. Almost all the new tweets posted by the account used copy and paste text from Words of Iron. Other dormant accounts, such as @smadaror1968, created in July 2012, or @haranarbel, created in July 2022, had no posts aside from one single post using WOI text to reply to another account.
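Dormancy of this kind can be measured as the longest gap between consecutive posts in an account's timeline. The following is a minimal sketch assuming a list of post timestamps is available for the account; the function name is hypothetical, and the early 2013 dates in the example are invented for illustration, while the December 5, 2013 and October 23, 2023 endpoints come from the @Ramush40 case above.

```python
from datetime import datetime, timedelta

def longest_gap(timestamps):
    """Return (gap, last_post_before, first_post_after) for the longest silence.

    Returns None when fewer than two timestamps are supplied.
    """
    ordered = sorted(timestamps)
    if len(ordered) < 2:
        return None
    # Compare each post with the one that follows it and keep the widest gap.
    return max(
        ((later - earlier, earlier, later)
         for earlier, later in zip(ordered, ordered[1:])),
        key=lambda item: item[0],
    )

# Illustrative timeline: the first two dates are invented.
timeline = [
    datetime(2013, 11, 2),
    datetime(2013, 11, 20),
    datetime(2013, 12, 5),
    datetime(2023, 10, 23),
    datetime(2023, 10, 24),
]
gap, went_quiet, resumed = longest_gap(timeline)
```

Filtering for accounts whose longest gap spans several years and ends shortly after October 7, 2023 would surface candidates like those described here for manual review.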

While many accounts using the tool were suspicious or anonymous, many others appeared to be real users. Notably, the account of General Mike Driquez, Israel’s Deputy Consul in Miami, published text consistent with WOI messaging in eight replies on October 14 and 15. Another politician, Israel’s former Minister of Diaspora Affairs Nachman Shai, also may have used the tool, as he posted a reply on October 16 that incorporated WOI messaging.

Words of Iron was also likely used to reply to posts on different platforms such as Facebook and Instagram. It is possible that the tool was also used to post comments on TikTok, but the platform’s limited search functionality makes it difficult to confirm. In one example, users posted the same reply to different posts on X, Instagram, and Facebook stating, “Thank you for standing with Israel 🇮🇱 #bringthemhome #wordsofiron.”

Counter-campaign

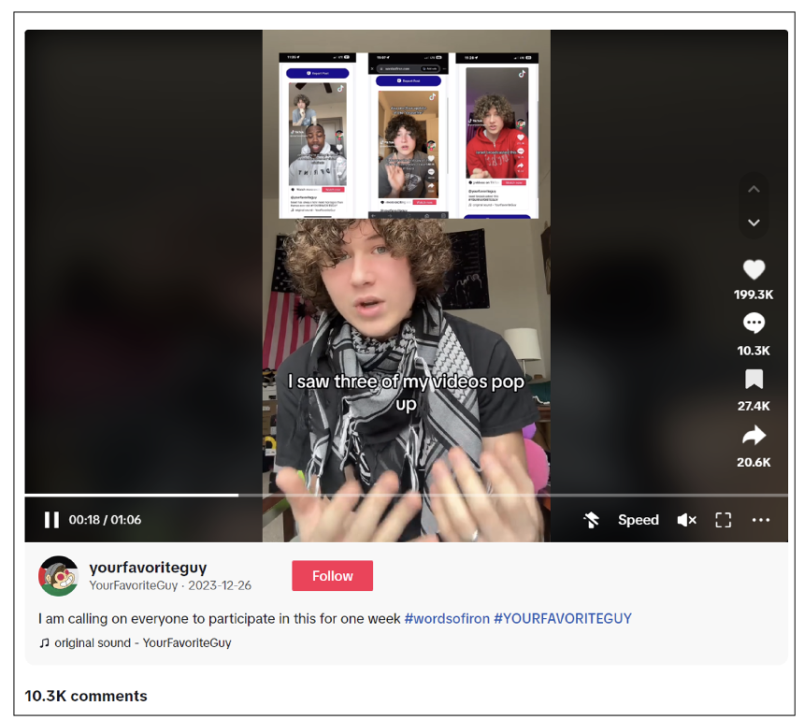

American TikTok influencer Guy Christensen (@yourfavoriteguy), known for his posts expressing support for Palestine, was featured on WOI’s reporting feed many times. The DFRLab observed at least seventeen TikTok posts and seven Instagram posts by the influencer featured on WOI’s reporting mechanism. In response, Christensen initiated a counter-campaign against WOI by reversing its intended use. On December 26, 2023, Christensen asked his TikTok followers to report pro-Israel posts featured on the website for one week and to support pro-Palestine posts the tool is trying to report. Christensen also encouraged his fans in January to use the website melonflood.com, which he said operated in a similar but opposite capacity by boosting pro-Palestine content and reporting pro-Israel content. The website, which was registered on January 14, 2024, is no longer operating, as it takes users to a page by reversecanarymission.org, which announced that “Operation Melon Flood has been halted for now.”

The counter-campaign also encouraged some pro-Palestine users on X to repurpose the tool’s autogenerated text to show support for Palestine instead of Israel, altering the text to convey viewpoints other than those originally intended by the tool. For example, in a reply to a post about the Israeli hostages by @Hamasis_ISIS, the account @TheFlowerBro posted a reply saying, “If you stand for freedom – you stand with Palestine. #FromTheRiverToTheSea #wordsofiron.”

Despite their limited impact, Words of Iron and similar coordination tools represent an evolution of third-party tools that rely on volunteers to shape narratives. Such tools constitute a content moderation quandary for social media platforms, with automation features that encourage users to perform certain actions. Individual users of WOI might also be in violation of some content moderation policies. X prohibits activities that result in artificially boosting content, which includes coordinated activity, automation, and “duplicative content resulting in spammy, inauthentic engagement.” Similarly, Meta’s Inauthentic Behavior policy forbids using Facebook or Instagram to mislead the platform or users “about the popularity of Facebook or Instagram content or assets,” while TikTok’s integrity and authenticity policies “do not allow coordinated attempts to influence or sway public opinion.”

Cite this case study:

“Online tool helps social media accounts amplify pro-Israel messages,” Digital Forensic Research Lab (DFRLab), June 11, 2024, https://dfrlab.org/2024/06/11/online-tool-helps-social-media-accounts-amplify-pro-israel-messages/.