#ElectionWatch: Alabama Twitter War

Activists raise a storm in a coffee cup over Roy Moore allegations

With the explosive accusations against Republican candidate Roy Moore in the special election to fill the United States Senate seat vacated by Attorney General Jeff Sessions, Twitter users have gone to war.

Activists launched hashtag campaigns to attack or defend Moore, and those supporting or opposing him. Russian “bots” (automated accounts which masquerade as humans) reportedly entered the fray.

Bots, indeed, made an appearance, as they do in the vast majority of political debates on Twitter. However, more traffic appears to have been generated organically by coordinated activism, with groups of apparently human users, many of them anonymous, posting and re-posting furiously to give their hashtags the appearance of popularity.

This pattern of activity, which is considerably more sophisticated than the simple use of bots, shows the likely way in which Twitter users will seek to influence voters in Alabama, and in next year’s mid-term elections.

It also reveals the American Twittersphere’s ongoing vulnerability to outside interference. Much of the traffic was carried by anonymous, hyper-partisan accounts. As recent events have shown, this is the (dis)information space in which Russian operatives flourished throughout the 2016 election cycle. It remains wide open to potential abuse.

Hashtags

A number of hashtags emerged after the Washington Post quoted an Alabama woman named Leigh Corfman, who accused Moore of a nonconsensual sexual encounter with her when she was 14. The online campaign against this act, which used the hashtag #MeAt14, launched on November 10.

The traffic intensified after another woman, Beverly Young Nelson, accused Moore of a sexual assault when she was 16. Quickly, traffic using her name exploded; defenders of Moore began posting #StandWithMoore in reply.

Critics of Moore then called for a boycott of coffee maker Keurig for running commercials on Fox host Sean Hannity’s show, after Hannity was seen as defending Moore.

Under pressure from such posts, Keurig pulled its commercials from Hannity’s show.

Moore supporters reacted with outrage, taking over the #BoycottKeurig hashtag and generating massive traffic on it.

As the #BoycottKeurig hashtag snowballed, Moore’s opponents pushed out #DrinkKeurig. Then, as reports emerged that Moore’s challenger, Democrat Doug Jones, was climbing in the polls, #DigDoug also picked up traffic.

These hashtags performed very differently. #StandWithMoore was the least successful, generating just over 6,500 tweets from November 11–17. By far the most successful was #BoycottKeurig, with almost 440,000 tweets.

Putin, popular, or pushed?

While the traffic was flowing, Newsweek reported that “Russian bots” were amplifying #BoycottKeurig. The basis for its claim was the Hamilton68 dashboard, which tracks “600 Twitter accounts linked to Russian influence efforts online”.

This claim should be viewed with caution. Hamilton68 tracks a range of sources, from Kremlin-run outlets such as RT (which registered as a “foreign agent” in the US on November 10), through self-proclaimed Kremlin supporters, to users who amplify Kremlin themes without necessarily realizing that they are doing so. The fact that a hashtag appears on the dashboard could simply mean that American users with a history of amplifying Kremlin tropes are pushing it.

It is, however, possible to verify whether the hashtags were genuinely popular, or artificially amplified. To do so, @DFRLab conducted machine scans of the launch phase (the first 100,000 tweets) of #BoycottKeurig and “Beverly Young Nelson” and full scans of the other hashtags.

We looked for the presence of bots, and broader signs of “astroturfing”, a process by which small groups try to make themselves look larger through coordinated tweeting and retweeting, the selective use of bots, and very high rates of posting.

The scans suggested that both sides used astroturfing techniques — not just bots but also coordinated human activity.

Bots give way to cyborgs

We identify bots by looking at individual accounts’ activity, anonymity, and amplification. If an account posts many dozens of times a day, shows no verifiable personal information, and only shares other users’ posts, it is botlike.
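The three criteria above can be sketched as a simple check. This is an illustration only, not the tooling used for the scans: the `AccountSample` fields, the function name, and both thresholds are assumptions, since the text says only "many dozens of times a day" and "only shares other users' posts".

```python
from dataclasses import dataclass


@dataclass
class AccountSample:
    """Hypothetical summary of one account; field names are illustrative."""
    posts_per_day: float           # average daily posts (activity)
    has_verifiable_identity: bool  # any checkable personal details (anonymity)
    recent_posts: int              # number of recent posts inspected
    recent_retweets: int           # how many of those were retweets (amplification)


def is_botlike(acct, min_posts_per_day=72.0, min_retweet_share=1.0):
    """Return True only when all three criteria are met.

    Both thresholds are assumptions chosen for illustration.
    """
    if acct.recent_posts == 0:
        return False  # nothing to judge amplification on
    high_activity = acct.posts_per_day >= min_posts_per_day
    anonymous = not acct.has_verifiable_identity
    retweet_share = acct.recent_retweets / acct.recent_posts
    amplifier = retweet_share >= min_retweet_share
    return high_activity and anonymous and amplifier


# A made-up account: 150 posts a day, no personal details, 100 of 100 retweets.
print(is_botlike(AccountSample(150.0, False, 100, 100)))
```

Requiring all three criteria together keeps a prolific but identifiable human, or an anonymous but quiet account, from being flagged on one signal alone.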

Botlike behavior showed up on both sides of the debate.

For example, @whenyourastar posted the hashtag #DrinkKeurig 236 times in 34 minutes on November 12 (local time). Every single one was a retweet. The account was set up on October 29; by November 17, it had posted 3,257 tweets and likes, at an average rate of 163 engagements per day. As of the same date, all of its last 100 posts were retweets. This is botlike behavior.

On the other side, @EHFoundation237 posted six retweets of #BoycottKeurig on November 12. Created in January, this account had posted 658,000 times by November 17, at an average rate of 2,253 posts a day — clearly botlike.
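The average rates quoted for these accounts are total engagements divided by the number of days the account has existed. A minimal sketch of that arithmetic for @whenyourastar follows; the function name is hypothetical, the year is 2017 (the year of the Alabama special election), and counting both end dates is an assumption that happens to reproduce the quoted figure.

```python
from datetime import date


def average_daily_rate(total_engagements, created, observed):
    """Average engagements per day, counting both end dates.

    Inclusive counting is an assumption, not a documented convention.
    """
    days_active = (observed - created).days + 1
    if days_active <= 0:
        raise ValueError("observed date must not precede creation date")
    return total_engagements / days_active


# @whenyourastar: 3,257 tweets and likes between October 29 and November 17.
rate = average_daily_rate(3257, date(2017, 10, 29), date(2017, 11, 17))
print(round(rate))
```

Run in reverse on the second account, 658,000 posts at 2,253 a day implies about 292 days of activity, which is consistent with a creation date in late January.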

These both behave like bots, but there is no evidence to suggest that they are Russian bots. @whenyourastar focuses on anti-Trump U.S. politics; @EHFoundation237 shares a wide range of apparently commercial posts, often with an African angle.

Much more of the traffic on these hashtags appears to have been generated by cyborgs — accounts which post at botlike rates, but periodically tweet authored content, in a sign that a human being is in control, at least temporarily.

For example, the most prolific tweeter in the scan on #BoycottKeurig, @KriensL, posted 252 times in a single hour. Of those, 251 were retweets and one was a reply to another user.

Overall, @KriensL has posted 40,000 tweets and 34,400 likes since its creation in September 2016, for a botlike average rate of 180 engagements per day. However, a significant number of posts appear to be authored. This is likely a semi-automated cyborg, used to artificially boost Twitter traffic.

On the other side, @Ryanjac01791467 posted 250 times on #MeAt14; every one was a retweet, which is botlike behavior. This account was created in October 2015, but, curiously, only appears to have begun posting in March 2017. It does, however, post a significant proportion of replies. This marks it as a cyborg or activist account, rather than a bot.

Throughout the sample scans, accounts such as this were far more active than simple bots. Such accounts would typically post 50–250 retweets on their chosen hashtag, interspersed with a handful of authored posts (often just one).
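The pattern described here, many retweets plus a handful of authored posts, can be tallied per user from scan output. The sketch below assumes a simplified input of `(username, is_retweet)` pairs; the function names and the cutoff of 50 posts (the low end of the range above) are illustrative. The label describes behavior inside one scan only, so an account's wider timeline would still need checking before calling it a bot or a cyborg.

```python
from collections import Counter


def summarize_users(tweets):
    """Tally (retweets, authored posts) per user.

    `tweets` is an iterable of (username, is_retweet) pairs, a
    hypothetical simplified form of scan output.
    """
    retweets, authored = Counter(), Counter()
    for user, is_retweet in tweets:
        if is_retweet:
            retweets[user] += 1
        else:
            authored[user] += 1
    users = set(retweets) | set(authored)
    return {u: (retweets[u], authored[u]) for u in users}


def label_user(n_retweets, n_authored, heavy=50):
    """Rough in-scan label; the cutoff is an assumption."""
    if n_retweets + n_authored < heavy:
        return "casual"
    return "botlike" if n_authored == 0 else "cyborg-like"


# Made-up scan: one heavy user with 251 retweets and 1 reply, one casual user.
sample = [("heavy_user", True)] * 251 + [("heavy_user", False)]
sample += [("casual_user", True)] * 3
for user, (rts, own) in sorted(summarize_users(sample).items()):
    print(user, rts, own, label_user(rts, own))
```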

This suggests that the traffic was not driven primarily by bots, but by groups of human users agreeing on a hashtag, posting their own tweets and then re-posting one another’s. This is known to be a favorite tactic of the far right in the United States; it appears to have been adopted by their opponents too.

Distortion by design

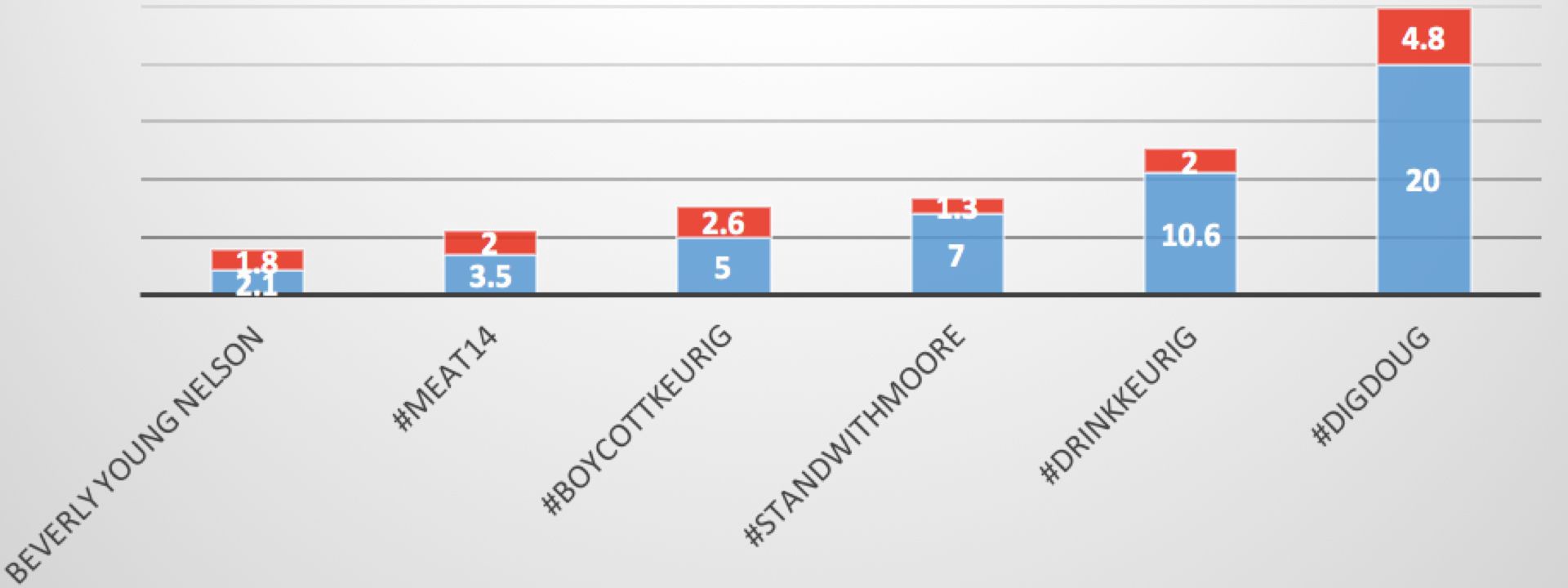

Some idea of the degree of coordination can be gathered by studying two factors: the percentage of tweets generated by the fifty most active users and the average number of tweets per user in the overall scan.

Analyzing the impact of the top fifty users shows how much a small group is driving or distorting traffic; analyzing the average number of posts per user shows whether the traffic is driven by (and thus, probably, seen by) a large number of casual users, or a small number of dedicated ones.

Taken together, these factors allow us to compare the relative extent to which traffic on different phrases is distorted. The comparison does not distinguish between bots, cyborgs, and real people, nor should it be taken as a binary “distorted or not distorted” judgement. Rather, it allows us to compare different Twitter movements. In general, a low score indicates a more organic pattern of traffic (fully organic movements typically score 5 or lower); a high score suggests a deliberate attempt to inflate traffic.
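The two underlying factors are straightforward to compute from a list of tweet authors. The sketch below is an illustration under assumptions: the function name and input format are hypothetical, and it deliberately stops short of a combined score, because the formula that merges the two factors is not given in the text.

```python
from collections import Counter


def distortion_factors(authors, top_n=50):
    """Return (share of tweets from the top_n users in percent,
    average tweets per user) for one scan.

    `authors` holds one username per tweet in the scan.
    """
    counts = Counter(authors)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no tweets in scan")
    top_total = sum(n for _, n in counts.most_common(top_n))
    top_share_pct = 100.0 * top_total / total
    avg_per_user = total / len(counts)
    return top_share_pct, avg_per_user


# Toy scan: one user posts 8 of 10 tweets; top_n=1 keeps the example small.
share, average = distortion_factors(["heavy"] * 8 + ["u1", "u2"], top_n=1)
print(share, average)
```

In a scan dominated by casual users, both numbers stay low; a small, dedicated group pushes both up at once.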

According to this scan, traffic on the name “Beverly Young Nelson” was entirely organic, with the top fifty tweeters representing just 2.1 percent of traffic, and an average of 1.8 tweets per user. #MeAt14 scored somewhat higher, at 5.5, suggesting a relatively minor attempt at distortion.

All the other hashtags, from both sides of the debate, showed significant signs of distortion. #BoycottKeurig scored 7.6, largely due to the very high activity of the top fifty users. Since the scan covered 100,000 tweets, these accounts posted almost 5,000 times, a clearly unnatural pattern. #StandWithMoore scored even higher, again largely due to the top fifty.

By far the most distortion, however, affected #DigDoug. Twenty percent of all the traffic — almost 8,500 tweets — came from just fifty accounts. On average, each user in the scan posted 4.8 times. These are classic indicators of distorted traffic, with a dedicated user group trying to make themselves look larger.
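A rough back-of-envelope check shows what these figures imply about the size of the scan. The inputs are the rounded numbers quoted above, so the derived totals are approximations rather than measured values.

```python
top_fifty_tweets = 8500      # "almost 8,500 tweets"
top_fifty_share = 0.20       # "twenty percent of all the traffic"
avg_tweets_per_user = 4.8    # average posts per user in the scan

# Implied size of the whole scan and of its user base.
total_tweets = top_fifty_tweets / top_fifty_share
total_users = total_tweets / avg_tweets_per_user

# Average output of each of the fifty most active accounts.
tweets_per_top_account = top_fifty_tweets / 50

print(f"total tweets: about {total_tweets:,.0f}")
print(f"total users: about {total_users:,.0f}")
print(f"tweets per top-fifty account: about {tweets_per_top_account:.0f}")
```

On these rounded inputs, the scan covers roughly 42,500 tweets from fewer than 9,000 users, and each of the fifty most active accounts posted about 170 times, against an overall average of 4.8.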

The tweets themselves explain why. The most-retweeted post on #DigDoug came from an account called @Pmaalai. It called for a “Twitter storm”, linked to a Facebook event and a “Tweetsheet”, and was retweeted 1,792 times.

The “tweet sheet” was a Google document with instructions on how to post pro-Jones tweets using a click-to-tweet URL shortener, together with advice to “average about one tweet per minute so Twitter doesn’t lock you out.”

Pasting one of the links into a browser does, indeed, provide a pre-written tweet; the user only needs to hit “Return” to post it.
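For readers unfamiliar with how a link can carry a pre-written tweet, the sketch below builds one using Twitter's web intent URL. This is an assumption about the general mechanism: the shortening service used by the tweet sheet is not named in the text, and the function name and message are made up.

```python
from urllib.parse import quote, urlencode


def prefilled_tweet_url(text, hashtags=()):
    """Build a link that opens Twitter's compose box with `text` filled in.

    Uses the web intent endpoint; the user still has to confirm the post.
    """
    params = {"text": text}
    if hashtags:
        # Web intents take hashtags as a comma-separated list without "#".
        params["hashtags"] = ",".join(hashtags)
    return "https://twitter.com/intent/tweet?" + urlencode(params, quote_via=quote)


url = prefilled_tweet_url("Example message", hashtags=["DigDoug"])
print(url)
```

Because the text travels in the link itself, every user who clicks it posts an identical message, which is one reason coordinated traffic of this kind is easy to spot in a scan.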

The account which promoted the message, @Pmaalai, gives no personal information, and its location is “Earth”. Since its creation in January, it has posted over 10,000 tweets and almost 9,000 likes, for an average engagement rate of 60 times a day — high, though not impossible, for a fully human user.

It intersperses retweets with apparently authored posts, many of which appear on the tweet sheet.

@Pmaalai appears to be an influence cyborg, partially automated, and designed to post political messages and to steer other users to do the same.

This confirms what the statistical analysis indicated: that #DigDoug was subject to wholesale distortion by a relatively small group of users, semi-automating their posts, and rallying supporters to create a “Twitter storm” in favor of their candidate.

Conclusion

Twitter traffic around the Alabama special election, and, in particular, the accusations against Moore, has not been uniform. Some phrases, notably Beverly Young Nelson’s name, were widely tweeted, but show no signs of distortion. The more political hashtags, however, all showed signs of distortion, as groups on both sides of the partisan gulf tried to make their message felt.

Two factors are of concern here. First, the traffic confirms (if that were needed) the sheer extent to which Twitter has become a partisan battleground, in which groups on both sides try to gain an advantage by coordinating and automating posts.

Attempts such as these are only likely to grow in frequency and sophistication as the special election approaches in December, and as next year’s mid-term elections loom.

Second, anonymous, partisan accounts still wield significant influence. Accounts such as @Pmaalai, @KriensL, and @Ryanjac01791467 helped to drive and shape traffic, without giving any indication of who was behind the accounts or even where they were based.

This is not to suggest that any of them is a foreign-based disinformation account; but Russia is known to have used exactly such accounts to exacerbate partisan divides in America in 2016 and 2017, with significant effect.

This is a real vulnerability. There is no indication that Russia has abandoned its efforts to win influence abroad, including on social media; nor is it likely to be the only country which is doing so. Anonymous, hyper-partisan accounts are a perfect vehicle for spreading disinformation and division.

Judging by current traffic in Alabama, American Twitter users on both sides of the divide remain wide open to foreign influencers.

Follow along for more in-depth analysis from our #DigitalSherlocks.