#InfluenceForSale: Not So Deep Fake

An increasing number of automatically generated YouTube videos are using scraped @DFRLab content

Automatically generated content has been a widely discussed topic on the internet for years. Despite the advantages of this automation for advertising purposes, videos generated with artificial intelligence (AI) pose new threats. Not only do they not generate original content, but in many ways they work similarly to Twitter bot networks. For example, a selection of @DFRLab content was used in automatically created videos that surged on YouTube, with no reference to the original articles. @DFRLab is not responsible for the creation of these videos but finds them somewhat amusing. Here is a quick look at the recent phenomenon through the lens of @DFRLab’s work.

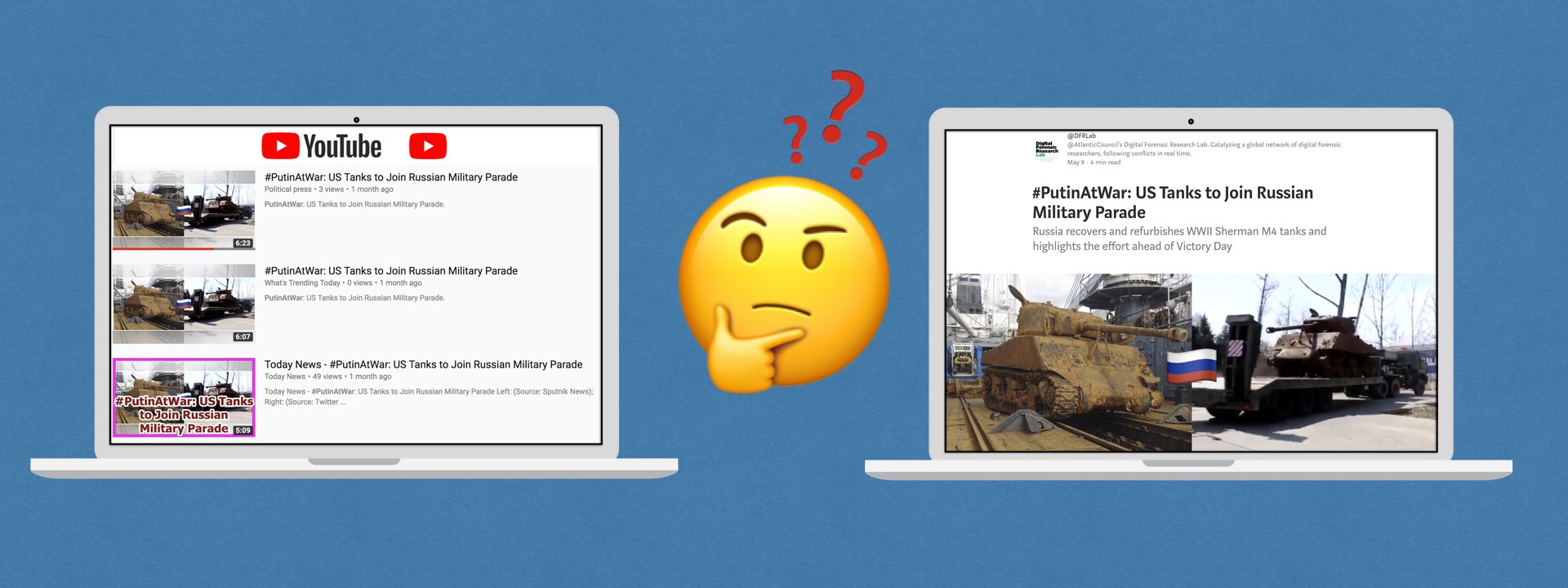

We observed a recent surge in these automated videos, mostly featuring @DFRLab’s hashtags #PutinAtWar, #MinskMonitor, and #BreakingSyria. The videos were created on the same day the articles were published and used exactly the same titles as the original sources. The accounts fall into two main categories: alleged media outlets and private personas. The alleged media outlets, such as NEWS 247, Military Times, Political Press, or DIE NEWS, had large numbers of subscribers and used their video descriptions to promote similar channels in other languages. Private personas, such as Mary A. Barnes, Dewitt S. Phelps, Lien Ti, and Bao Noi, had barely any subscribers and kept their video descriptions short.
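The two signals described above, an identical title and a same-day upload, can be expressed as a simple filter. The following is a minimal sketch rather than the method @DFRLab used: the `Video` record and the `find_copies` helper are hypothetical names, and the video metadata is assumed to have been collected beforehand.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Video:
    # Hypothetical record; the fields are assumed to come from
    # metadata that was gathered separately.
    channel: str
    title: str
    uploaded: date


def normalize(title: str) -> str:
    # Compare titles case-insensitively and ignore extra whitespace.
    return " ".join(title.lower().split())


def find_copies(article_title: str, published: date, videos: list[Video]) -> list[Video]:
    """Return videos that reuse the article title and were uploaded on the publication day."""
    target = normalize(article_title)
    return [
        v for v in videos
        if normalize(v.title) == target and v.uploaded == published
    ]
```

An exact title match is a deliberately strict test. It fits the behavior described here, but it would miss videos that alter the title even slightly.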

These generated videos scraped all the information from @DFRLab’s articles, including the text and pictures. The text was either narrated or subtitled on the bottom, and each video included generic music in the background.

https://www.youtube.com/watch?v=05UWVj-8oaI

It was clear that the information for the video was taken by an AI program, as it used all the available text, without differentiating between the actual story and the supporting hyperlinks.

These videos were usually published by different users/channels and mostly on the same day as the original article was published.

Most likely, different YouTube accounts were using different software to create these videos, as the style of the videos differed between accounts.

The accounts/channels posting these videos appeared to have large numbers of followers; nonetheless, each posted video was viewed only a few times. This suggested that the follower base was probably built up artificially.
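The mismatch between follower counts and views can be turned into a rough numeric check. This is a sketch under stated assumptions: the function names and the threshold values (`min_subscribers`, `max_ratio`) are illustrative choices, not figures from @DFRLab’s analysis.

```python
from __future__ import annotations


def views_per_subscriber(subscribers: int, video_views: list[int]) -> float:
    """Average views per video divided by the channel's subscriber count."""
    if subscribers <= 0 or not video_views:
        return 0.0
    average_views = sum(video_views) / len(video_views)
    return average_views / subscribers


def looks_inflated(
    subscribers: int,
    video_views: list[int],
    min_subscribers: int = 10_000,  # assumed cutoff for a "large" channel
    max_ratio: float = 0.001,       # assumed cutoff for "barely viewed"
) -> bool:
    """Flag large channels whose videos are viewed by almost none of their subscribers."""
    if subscribers < min_subscribers or not video_views:
        return False
    return views_per_subscriber(subscribers, video_views) < max_ratio
```

A flag from a check like this is only a prompt for closer manual review. A low ratio alone does not prove that subscribers were acquired artificially.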

Several companies offer to generate content automatically from a variety of sources. Most of these products, such as GliaCloud, Lumen5, and Wibbitz, appear to be professional paid services, though they also offer free versions that can be tried for a limited period. Companies such as Viomatic are very candid about their products, publicly offering: “Take advantage of the world’s second largest search engine — YouTube.”

Much of this automatically generated content is hard to find because most of the article’s text appears in the video itself rather than in the description, which makes it harder to trace a video back to its original source. Reverse image search was more helpful than a simple Google text search for finding many of these fake @DFRLab videos.

Conclusion

YouTube’s search function and autoplay algorithms have been covered at length as a hotbed for disinformation, but the discussion around the weaponization of automatically generated content has thankfully not had many tangible case studies to date. While we would not categorize the auto-generation of @DFRLab content into video as weaponization against anything but the quality of our content, it does remain a useful case study.

Neither the new methods nor the scope of these YouTube bots is fully understood. The exact purpose of these bot accounts remains unclear, but they are most likely used to grow an audience and create a critical mass of consistent content.

As YouTube bots provide videos automatically generated from many different media outlets and other sources, they are able to collect thousands of real and fake subscribers. It is possible that when these accounts grow to a certain size, they could be used for promotional or information campaigns, similar to Twitter bot networks. As public focus is currently concentrated on fake accounts on Twitter, YouTube continues to be less controlled in terms of malicious online activity. With Twitter increasingly cracking down on active fake accounts, YouTube could be the next battleground for information operations.

@DFRLab will continue to monitor malicious activity on YouTube and other social media platforms.

Follow along for more in-depth analysis from our #DigitalSherlocks.