#TrollTracker: Facebook Takes Down Fake Network in the United Kingdom

Pages targeted far-right users then posted pro-Islam, pro-Pakistan messages

BANNER: (Source: @DFRLab)

On March 7, Facebook removed 23 pages, 74 Facebook accounts, five groups, and 35 Instagram accounts that the company assessed to be “engaging in coordinated inauthentic behavior as part of a domestic-focused network in the UK.” These pages had approximately 175,000 followers, and approximately 4,500 accounts followed at least one of the removed Instagram accounts.

Although multiple assets were previously disabled, Facebook shared 15 of the active pages and two of the active public groups with @DFRLab prior to the takedown.

Overall, the network seemed designed to amplify pro-Islam and anti-extremist messaging, including by engaging with anti-Islam commentators. The pages and groups were administered by a cluster of accounts that claimed to be based in the United Kingdom, Pakistan, and Egypt and appeared to have a broader interest in Pakistan.

Facebook determined that individuals behind the accounts violated the platform’s ban on “coordinated inauthentic behavior” by running a network relying on “fake accounts to misrepresent themselves.” @DFRLab’s analysis of the accounts highlights open source evidence that a small group of users were running multiple pages to manage the network, in violation of Facebook’s policies.

Facebook also removed four pages, 26 Facebook accounts, and one group demonstrating highly biased content in favor of the ruling Social Democratic Party in Romania (PSD), on which @DFRLab also reported.

Anti-Islamophobic and Anti-Extremist Narrative Shared Among Pages and Groups

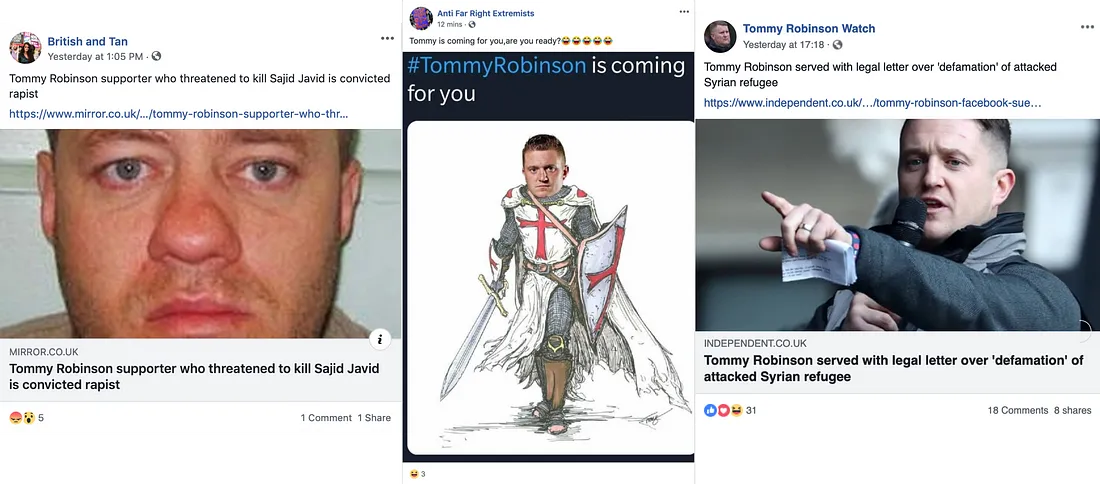

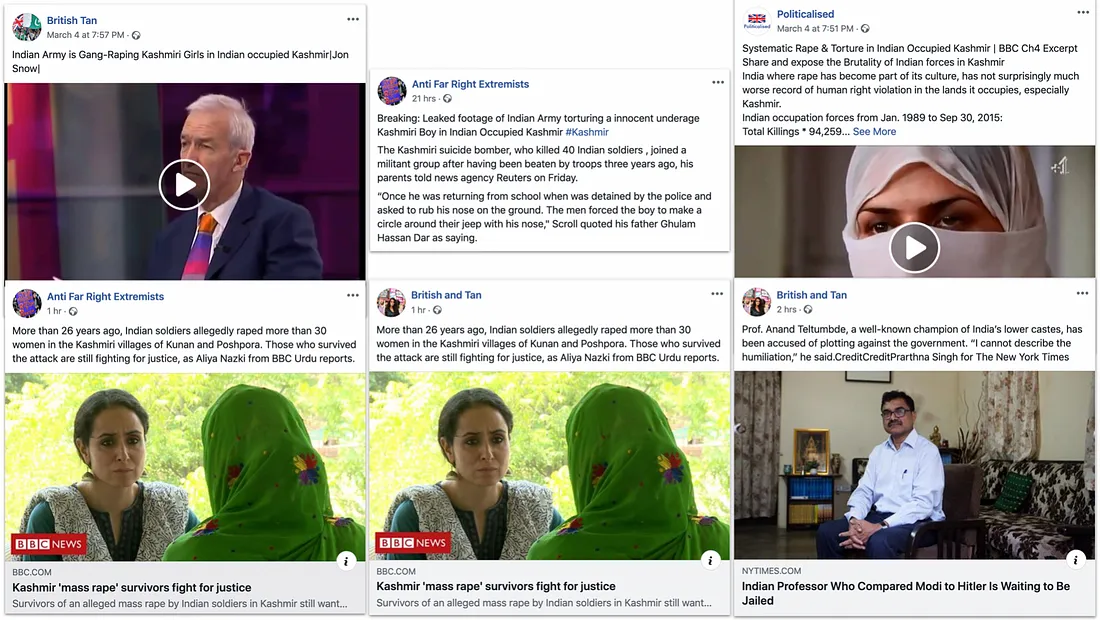

Over half the pages examined by @DFRLab defended the role and status of migrants and Muslims in Britain and criticized far-right, anti-migrant, or anti-Islam acts and comments, sometimes urging their readers to take action in response. These pages had names such as “Anti Far Right Extremists,” “British and Tan” and “Tommy Robinson Watch,” named after a far-right figure whose real name is Stephen Yaxley-Lennon. These pages were among the most popular in the takedown, with approximately 14,000 followers, 43,000 followers, and 11,000 followers, respectively.

In particular, these removed pages focused on politicians who spoke of Islam in extremist terms, such as referring to the religion as a “death cult” and calling for the prosecution of Muslims, while also reporting on the political consequences of this hate speech.

Several of the pages focused on highlighting hostile content related to Tommy Robinson, the former leader of the far-right extremist group English Defence League.

The pages frequently accompanied this content with a call for audience members to “expose” Robinson’s words and actions. Robinson has been recognized as an influential far-right personality whose videos have been watched or heard by almost four in ten Britons. At least seven of the 15 pages @DFRLab examined referred to Tommy Robinson.

Content from the pages that depicted and condemned violent acts against Muslims received support from some Facebook users and provoked anti-Islam epithets from others.

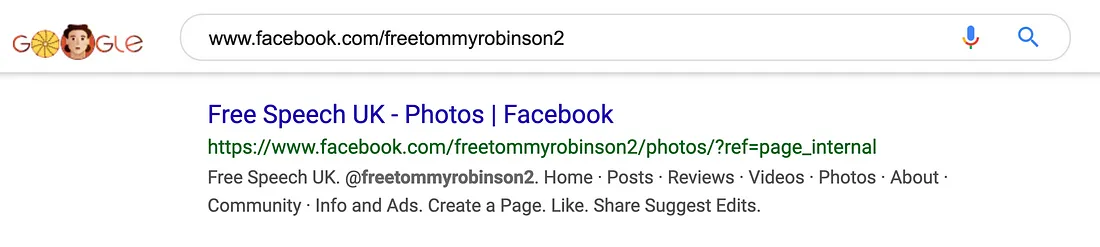

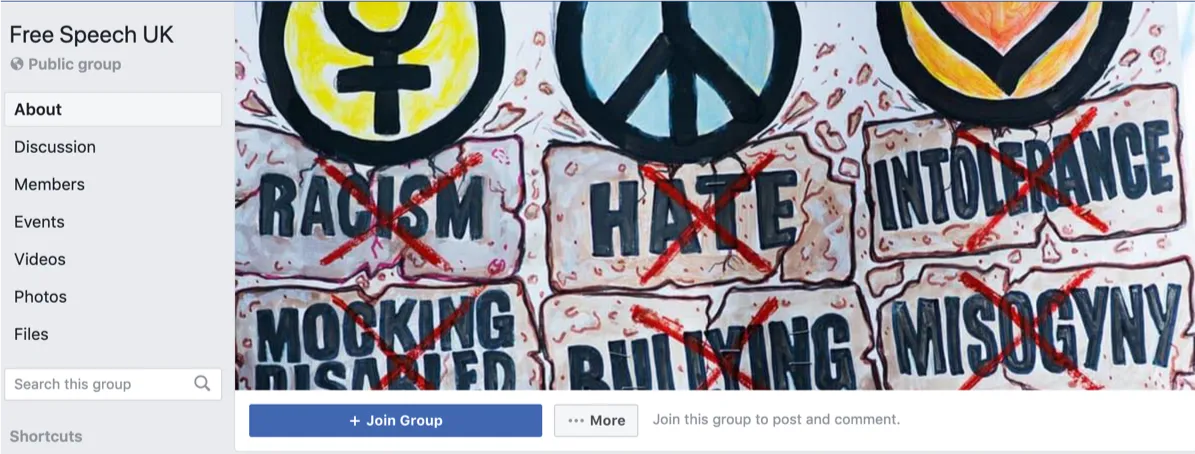

Other pages in the takedown also focused on Robinson but appeared designed to appeal to his supporters. One of these pages was deactivated before @DFRLab could view it but had the unique username “@freetommyrobinson2.” Although the page left little trace online, a Google search showed that its title was “Free Speech UK.”

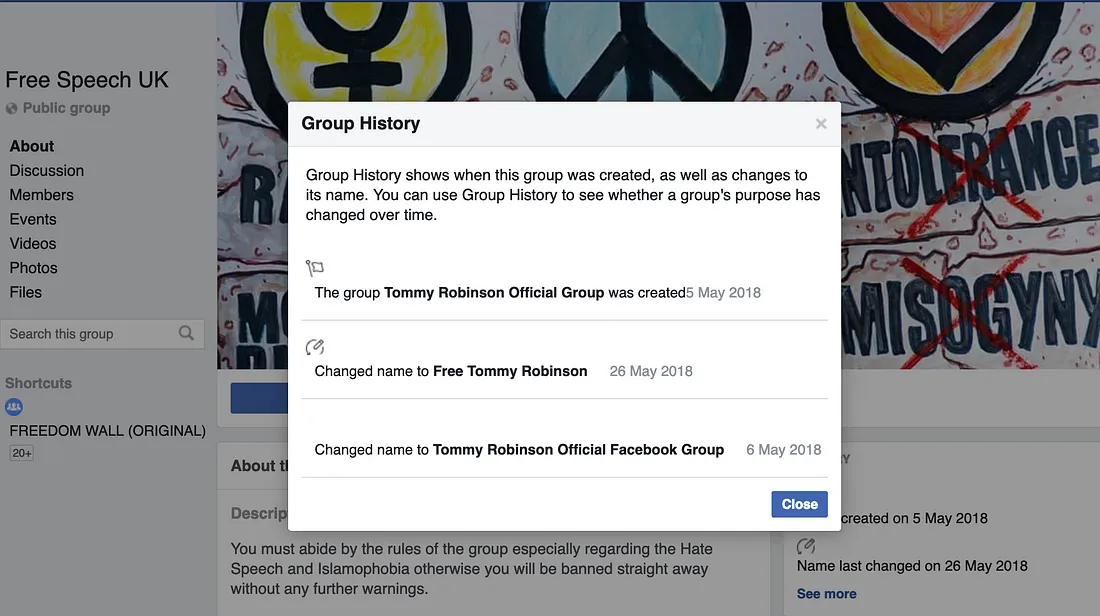

A similar group was active up to the moment of the takedown. It was created on May 4, 2018, as the “Tommy Robinson Official Group.” The name changed to “Tommy Robinson Official Facebook Group” the day after creation and then to “Free Tommy Robinson” on May 26, 2018. It was finally renamed “Free Speech UK.” The group’s various names suggest that it was likely designed to attract Robinson’s supporters.

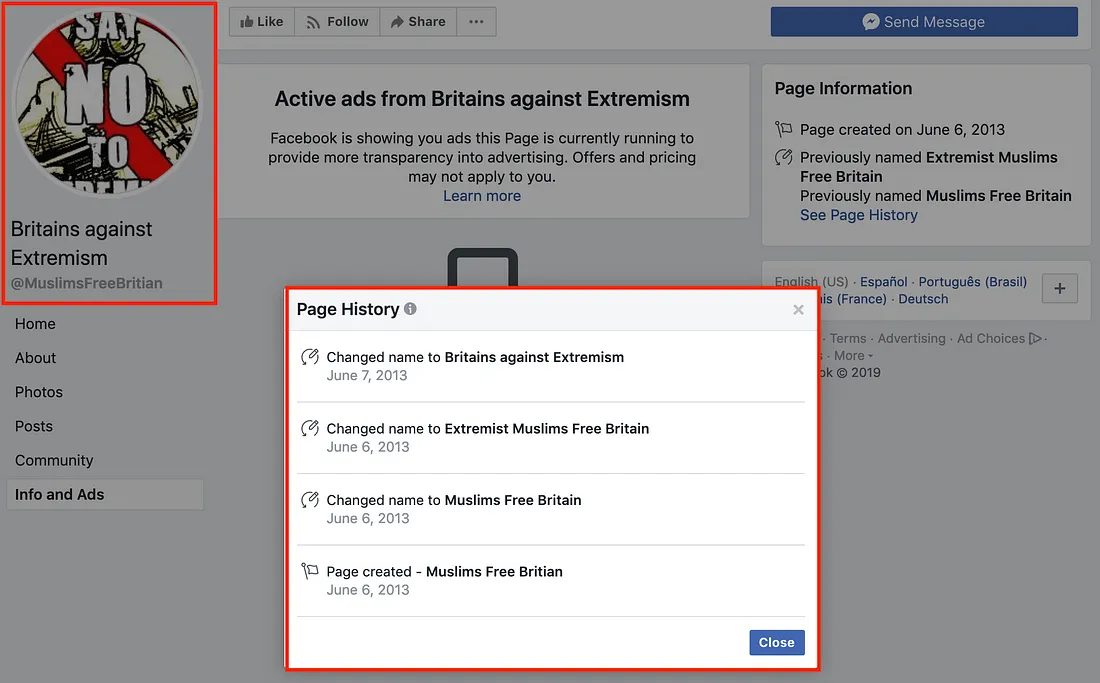

Another much older page underwent similar name changes with the same possible intention. This page was initially created on June 6, 2013, under the name “Muslims Free Britian” (note the misspelling of “Britain”), was later renamed to “Muslims Free Britain,” and finally renamed again to “Extremist Muslims Free Britain.” The intended meaning of the page title is ambiguous. On its face, the grammatical construction of the third title calls for “extremist Muslims” to “free Britain.” It is also possible, however, that the page administrator made a grammatical error and actually meant to call for an “Extremist Muslim-Free Britain” — that is, a Britain free of extremist Muslims.

Regardless, the page was ultimately renamed “Britains against Extremism.” Given the earlier reference to “Extremist Muslims,” this final name change was likely designed to attract anti-Muslim or anti-extremist users.

The pages and group that changed names hosted spirited debates between members with differing views on Tommy Robinson and other politically divisive topics, such as education about LGBT issues.

The “Britains against Extremism” page hosted discussions on Islam, with the anonymous administrator asking other accounts direct questions about their views on the religion. After the debates about its name that accompanied its creation in 2013, the page saw little further activity.

While @DFRLab cannot conclusively determine the intention of changing page and group names based on the open-source evidence, the three assets appeared designed to attract anti-Islam and far-right users with their names and then engage these users with pro-Islam and anti-prejudice messaging. @DFRLab found no indication that these assets displayed Islamic extremism, attempts at radicalization, or calls for violence between faith groups.

“Coordinated Inauthentic Behavior”

Facebook said that it took the pages offline because of “coordinated inauthentic behavior” — that is, the use of accounts or pages that misrepresented who was behind them.

A number of the user accounts listed as administrators, moderators, or managers of these groups and pages also appeared to violate Facebook’s authenticity rules. Under Facebook rules, each person is only allowed to operate one individual user account. In this case, however, two individuals (a woman and a man) each appeared to be represented by multiple accounts.

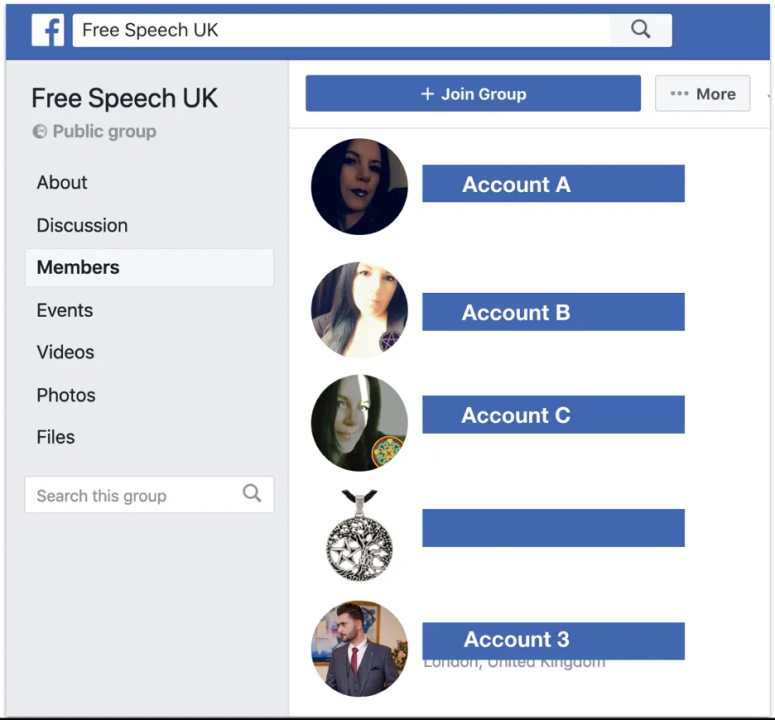

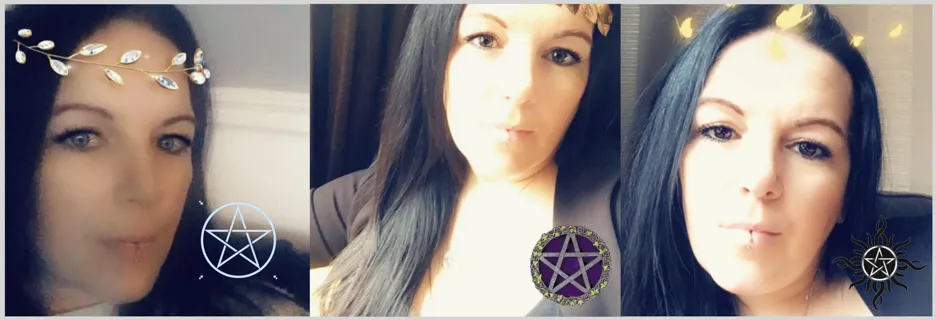

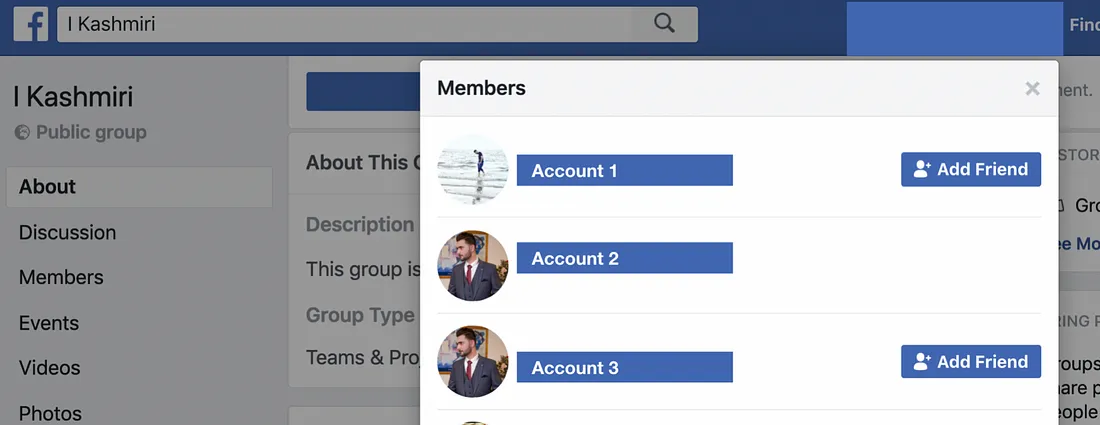

The groups “Free Speech UK” (formerly “Tommy Robinson Official Group”) and “Freedom of Speech UK” both featured three administrator accounts that had the same initials and almost identical surnames. @DFRLab obscured the names to avoid overly exposing the individual (or persona) behind the accounts and has instead referred to the administrator accounts as A, B, and C. Another member, Account 3, will be discussed below.

The three accounts posted their first profile pictures in September, October, and November 2018, respectively. The images did not appear to have been posted elsewhere online. This seems to have been a case of a single user running multiple accounts, in violation of Facebook’s rules.

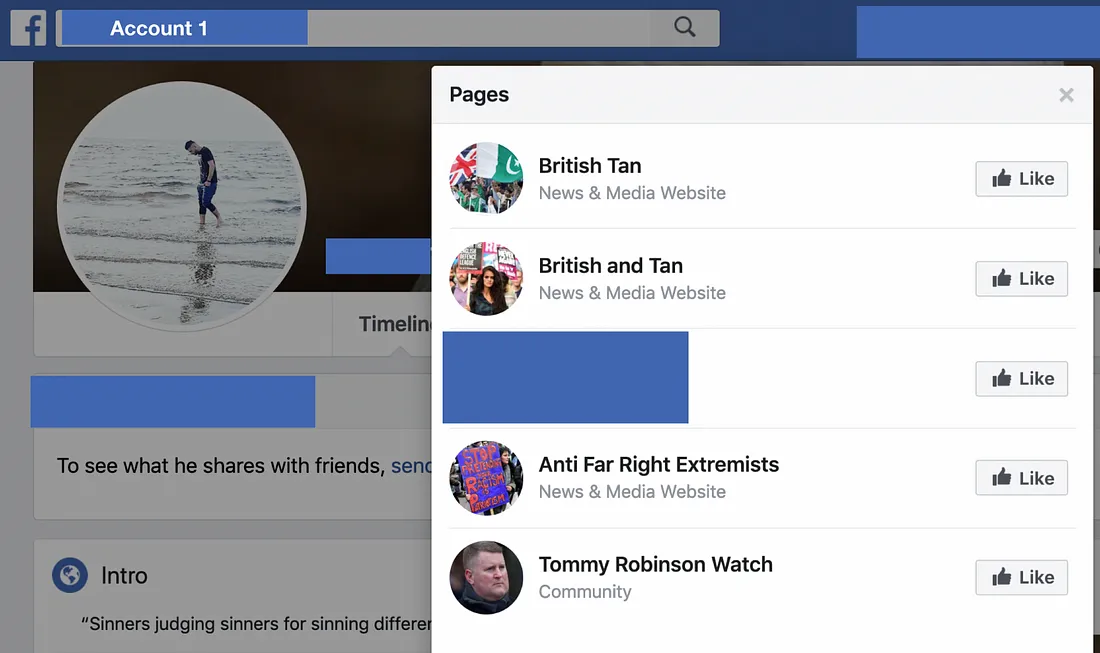

Similarly, pages such as “British Tan,” “British and Tan,” “Anti Far Right Extremists,” and “Tommy Robinson Watch” were all administered by a single account, Account 1. Again, @DFRLab obscured the name to protect the identity of the user.

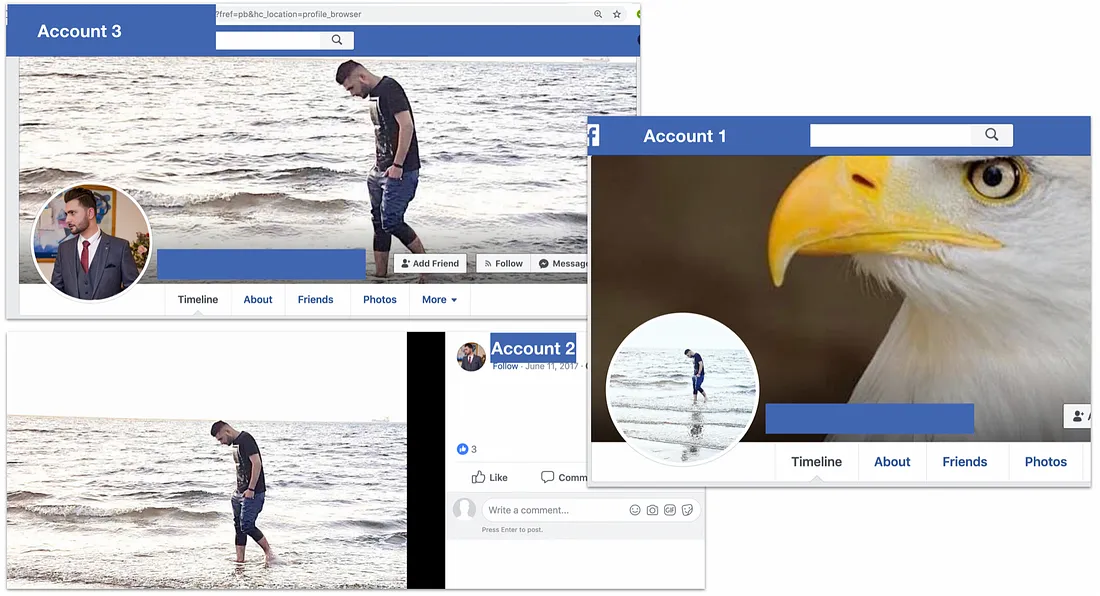

The same account was also a member of a group called “I Kashmiri,” alongside two further accounts that had similar names and shared the same profile picture. One of them, Account 3, was a member of the two “Free Speech” groups noted above.

All three accounts featured the same image of a young man standing in water, either as a post, profile picture, or background.

Again, the images did not appear to have been featured elsewhere online. These accounts appear to have been linked to a genuine person, but one who was running multiple accounts, in violation of Facebook’s rules.

Pakistan Ties

Beyond the focus on race and religion in the United Kingdom, the pages and groups showed some links to Pakistan and Britain’s large and long-established Pakistani community.

One page in the takedown featured South Asian dresses and other miscellaneous items. At least two posts from 2016 advertised “Delivery Facility All Over Pakistan.” Another page, called “Authentic Teachings of Islam,” shared quotes and trivia from the Quran. A page titled “Research the Search,” inactive since mid-2017, primarily contained YouTube links to a varied assortment of videos, the majority concerning Pakistan.

Eight of the 15 pages @DFRLab analyzed contained information about the geographic location of the page managers. Each of the eight pages had managers with a primary location in the United Kingdom, but five also had at least one manager with a primary location in Pakistan, and three pages had a manager with a primary location in Egypt.

In between posting on religious and integration issues, the Facebook pages posted content that criticized the behavior of Indian soldiers in Kashmir and accused India of intolerance more generally, in line with Pakistani perceptions of the conflict in the disputed area.

Some of the comments posted to the pages and communities on the recent hostilities between India and Pakistan were also anti-Indian.

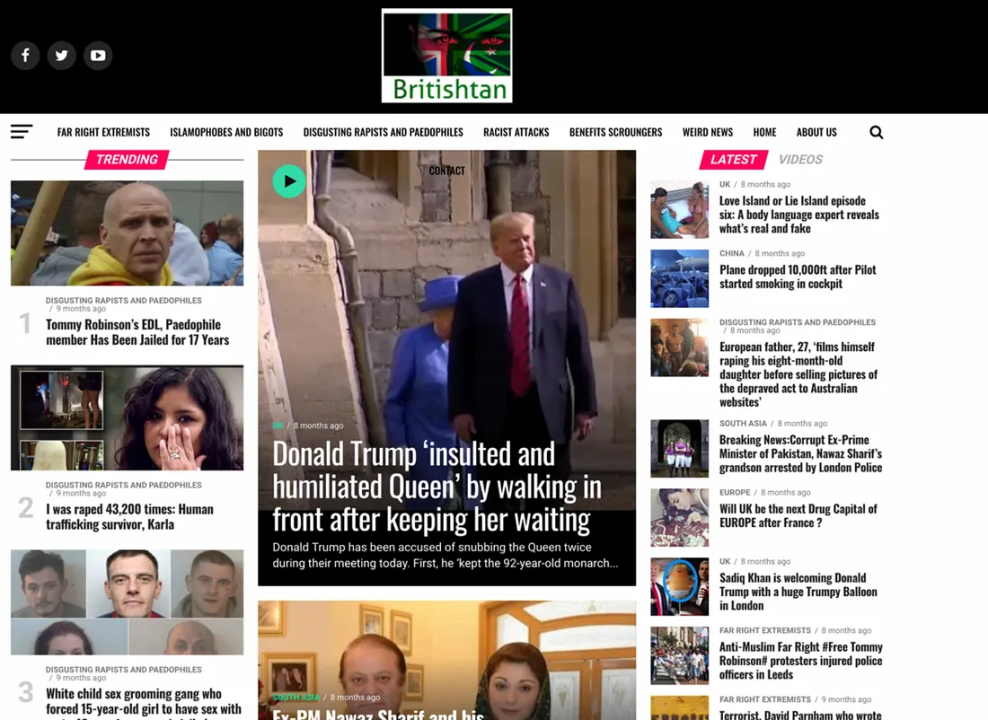

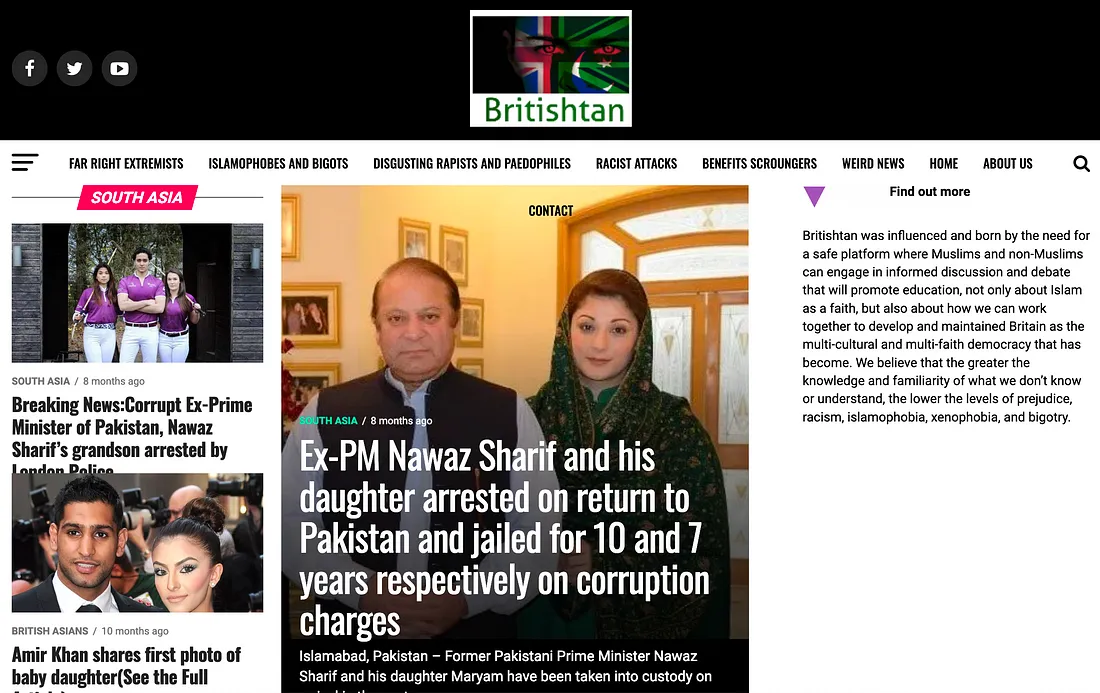

At least two of the pages contained a link to a website called BritishTan. The site’s homepage seemed infrequently updated and featured “Trending” and “Latest” content that was published at least eight months prior. The narratives shared on the site were similar to those shared on the pages, highlighting far-right and extremist activity.

The website combined clickbait categories such as “Weird news” with more focused categories, including “British Asians,” “Far Right Extremists,” “Islamophobes and Bigots,” “Muslims,” “Racist Attacks” and “South Asia.”

The South Asia section had a significant focus on Pakistani politics and on the British Pakistani community, including boxer Amir Khan.

Its “About” page called for dialogue and tolerance between Muslims and non-Muslims in Britain:

Britishtan was influenced and born by the need for a safe platform where Muslims and non-Muslims can engage in informed discussion and debate that will promote education, not only about Islam as a faith, but also about how we can work together to develop and maintained Britain as the multi-cultural and multi-faith democracy that has become.

Network Amplification

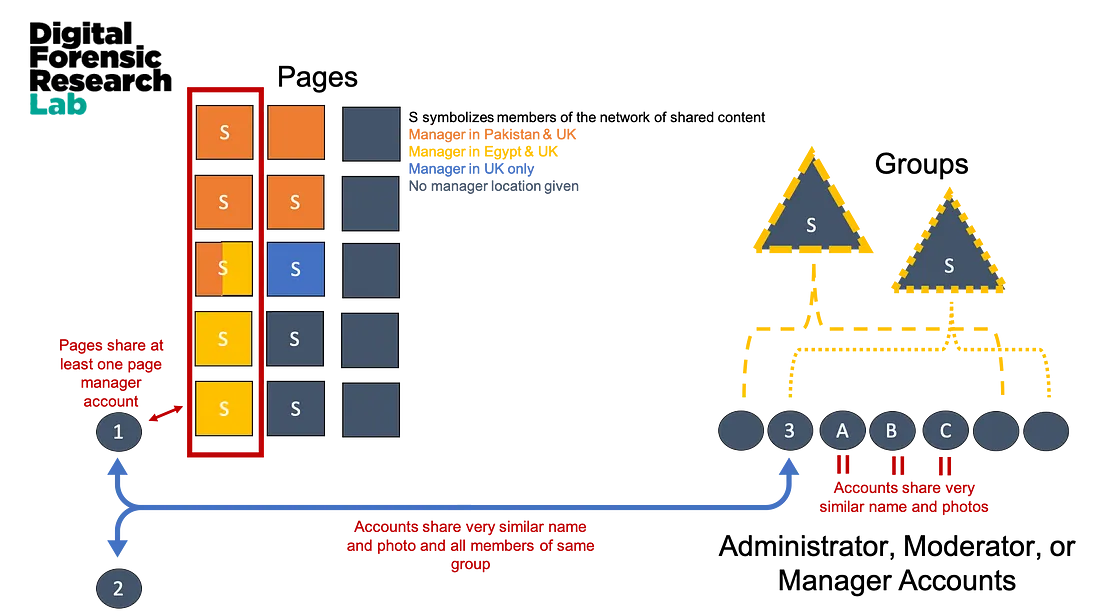

The pages and groups removed from Facebook had developed a complex and interconnected network of moderators, audience members, and discourse. @DFRLab mapped out the network to visualize the insights generated from this investigation.

Five of the pages, boxed in red in the map, shared one manager, Account 1. These five pages had a combined follower count of 131,000 accounts and had been active just days prior to the takedown.

At times, these pages also shared identical links or graphics within minutes of each other.

In addition to pages, Facebook also shut down the two “Free Speech UK” and “Freedom of Speech UK” groups, shown as triangles in the network map.

The “Free Speech UK” group was created in May 2018, and the “Freedom of Speech UK” group was created on March 22, 2018. These groups had a combined membership count of more than 18,000 accounts. In their group descriptions, they specifically outlined rules “regarding Hate Speech and Islamophobia” and included quotes from Article 10 of the European Convention on Human Rights, including “everyone has the right to freedom of expression.”

The two groups shared six of the seven administrators or moderators that ran them, as shown by the circles and dashed lines in the network map.

Two of the five pages managed by Account 1 were also members of the two groups removed by Facebook, further suggesting a connection between the groups and pages.
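The kind of overlap described above, where pages and groups are tied together by shared administrators, can be illustrated with a short sketch. This is not @DFRLab's actual tooling or data: the asset and account labels below are hypothetical placeholders, and the functions `shared_admin_links` and `clusters` are names invented for this illustration. The sketch assumes only that an investigator has a list of which accounts administer which assets.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical asset -> administrator mapping (placeholder labels, not real data).
ADMINS = {
    "page_1": {"account_1"},
    "page_2": {"account_1"},
    "group_x": {"admin_a", "admin_b", "admin_c"},
    "group_y": {"admin_a", "admin_b", "admin_d"},
    "page_3": {"account_9"},
}


def shared_admin_links(admins):
    """Return {(asset_a, asset_b): shared admins} for every pair that overlaps."""
    links = {}
    for a, b in combinations(sorted(admins), 2):
        common = admins[a] & admins[b]
        if common:
            links[(a, b)] = common
    return links


def clusters(admins):
    """Group assets into connected components linked by shared administrators."""
    adjacency = defaultdict(set)
    for a, b in shared_admin_links(admins):
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen, result = set(), []
    for asset in sorted(admins):
        if asset in seen:
            continue
        # Depth-first walk over assets reachable through shared admins.
        stack, component = [asset], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        seen |= component
        result.append(component)
    return result


if __name__ == "__main__":
    for pair, common in shared_admin_links(ADMINS).items():
        print(pair, "share", sorted(common))
    print(clusters(ADMINS))
```

In this toy data, the two pages run by the same account form one cluster, the two groups with overlapping administrators form another, and the unrelated page stands alone. Shared administration by itself is only one signal; it suggests a connection but does not establish who is behind the accounts.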

In addition to posting original content or linking to sources outside of the group of removed pages, the pages also amplified each other. The network of content amplification is represented by “S” in the map.

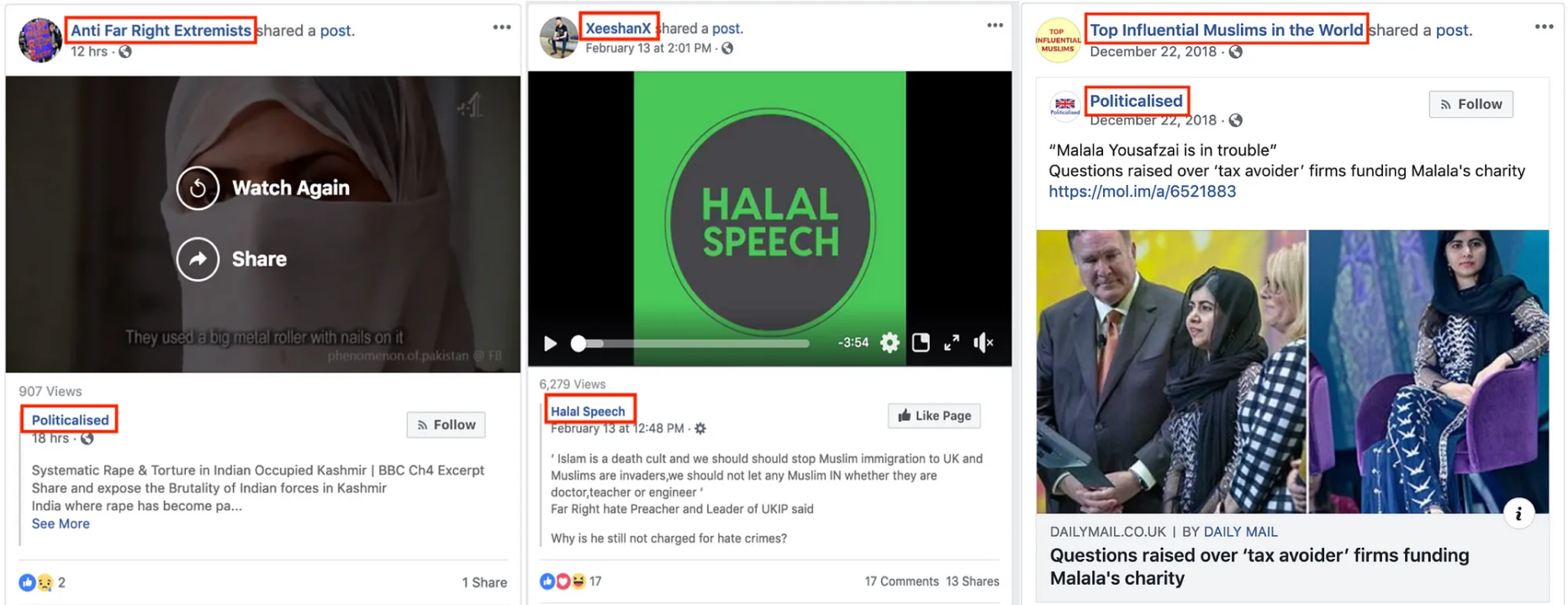

Of the 15 pages that @DFRLab analyzed, nine seemed to operate as a single content-sharing network. For example, two of the five pages managed by Account 1 (“Anti Far Right Extremists” and “XeeshanX”) amplified content from other pages in the takedown. In addition, a page with unknown managers called “Top Influential Muslims in the World” shared content from the same page that the page “Anti Far Right Extremists” shared. The content shared by these pages also included posts by the two “Free Speech” groups removed by Facebook, suggesting that the groups formed part of the network as well.

Conclusion

The pages, groups and accounts that Facebook took down formed a cohesive and dense network that focused on issues of interest to Muslim communities, especially in the United Kingdom.

Furthermore, the groups and pages did not operate in a segregated manner, with groups interacting only with other groups and pages only with other pages. On the contrary, the two types of assets operated interdependently: they shared the same content and, in some cases, likely the same administrators.

Some of these assets appeared to pose at times as far-right groups to lure genuine far-right users into a debate on Islam. These assets hosted heated debates, though neither side showed clear signs of shifting its position. Meanwhile, the main administrator accounts in the network appeared to be violating Facebook’s rules on individuals running multiple user accounts.

The network is thus best characterized as an attempt to promote the managers’ authentic beliefs, albeit through inauthentic means.
