Measuring the Near-term Success of Facebook’s Anti-Vaxx Crackdown

Almost two months into Facebook’s campaign, anti-vaccine content was still widely shared and used in ads

BANNER: (Source: @donara_barojan/DFRLab)

Despite Facebook’s effort to curb anti-vaccine misinformation, such content continued to spread on the platform almost two months after the company implemented intensified screening.

Health-related misinformation is one of the few forms of false reporting that can directly result in real-world tragedies, such as viral disease outbreaks and death. In particular, misinformation targeting vaccines has been directly linked to hundreds of measles outbreaks around the world, including its reemergence in the United States, which had previously declared the disease eradicated in 2000.

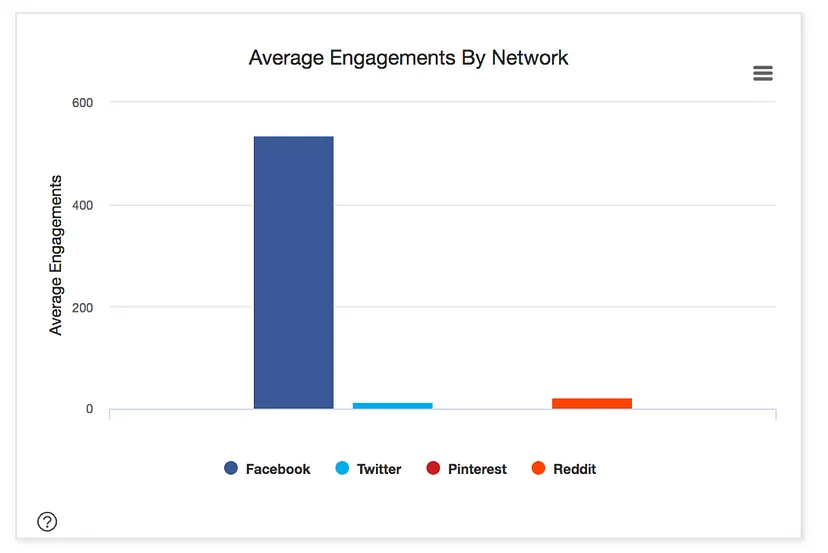

According to DFRLab analysis performed using BuzzSumo, an online tool that measures engagement across social-media platforms, Facebook in the past two years was the source of vaccine-related shared content 2,000 percent more often than Twitter, Pinterest, and Reddit combined. A lot of this content is anti-vaccine (or “anti-vaxx”) misinformation.

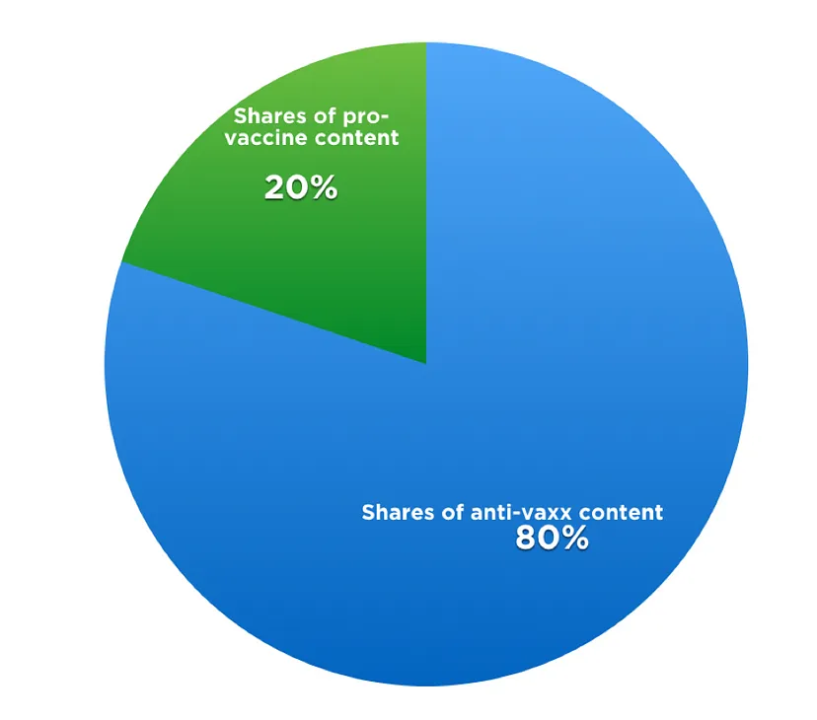

On March 7, 2019, Facebook announced that it would “reduce the ranking of groups and Pages that spread misinformation about vaccinations” and “reject ads” that include misinformation about vaccinations. A month and a half after Facebook’s announcement, the approach had achieved mixed results. On the one hand, shares of anti-vaxx content had decreased; however, anti-vaxx content still constituted 34 percent of all vaccine-related content shared on Facebook and at least three anti-vaxx ads were still active on the platform.

Over the last five years, anti-vaxx bloggers and influencers have weaponized Facebook to a much greater extent than they have other online platforms. In particular, the anti-vaxx community has used Facebook to target defined demographic groups, especially new parents, through tailored ads.

As a result, nine out of the top 14 most-shared articles — including the top three — on vaccines across Facebook, Twitter, Pinterest, and Reddit in the past two years were anti-vaxx. The nine anti-vaxx articles generated 6,660,000 engagements (i.e., shares, likes, and comments) in total; more than 95 percent of those engagements came from Facebook.

In contrast, the five pro-vaccination articles garnered only 1,631,000 engagements in total. Overall, the most trending anti-vaxx content outranked the most trending pro-vaccination content by a ratio of 4:1.
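As a rough check on that ratio, the arithmetic can be sketched in Python using only the two engagement totals reported above. The variable names are illustrative and are not part of the DFRLab's analysis.

```python
# Engagement totals (shares, likes, and comments) as reported in the article.
anti_vaxx_engagements = 6_660_000    # nine anti-vaxx articles
pro_vaccine_engagements = 1_631_000  # five pro-vaccination articles

# How many anti-vaxx engagements there were per pro-vaccination engagement.
ratio = anti_vaxx_engagements / pro_vaccine_engagements

# Anti-vaxx share of all engagements on the top 14 articles.
anti_share = anti_vaxx_engagements / (
    anti_vaxx_engagements + pro_vaccine_engagements
)

print(f"ratio: {ratio:.2f}:1")
print(f"anti-vaxx share of engagements: {anti_share:.1%}")
```

The result is slightly above 4:1, which is consistent with the rounded figure in the text.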

The vast majority of the most-shared articles were either outright false or highly misleading. The most-shared article on the topic of vaccines was from In Shape Today and was titled, “FDA Announced That Vaccines Are Causing Autism.” This article misrepresented an old vaccine label as evidence to support the claim that vaccines cause autism. The article has since been debunked numerous times, and In Shape Today removed it from its website. Despite that, the article was still shared more than 2,400,000 times (see the BuzzSumo results above).

The second most-shared article on vaccines was published by EWAO.com and titled “John Hopkins researcher releases shocking report on flu vaccines.” The article misrepresented a feature in a British medical journal as a study and the anthropologist behind it as a medical researcher from Johns Hopkins University. The anthropologist argued that the potential risks of the flu vaccine have not been highlighted sufficiently and that its benefits are overstated. The EWAO article, however, went further and added quotes from pseudo-scientists, practitioners of bogus theories, claiming that the flu vaccine is ineffective and unsafe. The article was shared 2,200,000 times across social media, predominantly on Facebook.

The third most-shared article was a conspiracy theory alleging that an alternative medicine doctor was murdered after discovering cancer enzymes in vaccines. The doctor in question, James Jeffrey Bradstreet, spent his career arguing that vaccines cause autism. There is no evidence to support the claim that Bradstreet was killed. On the contrary, local law enforcement ruled his death to be a suicide.

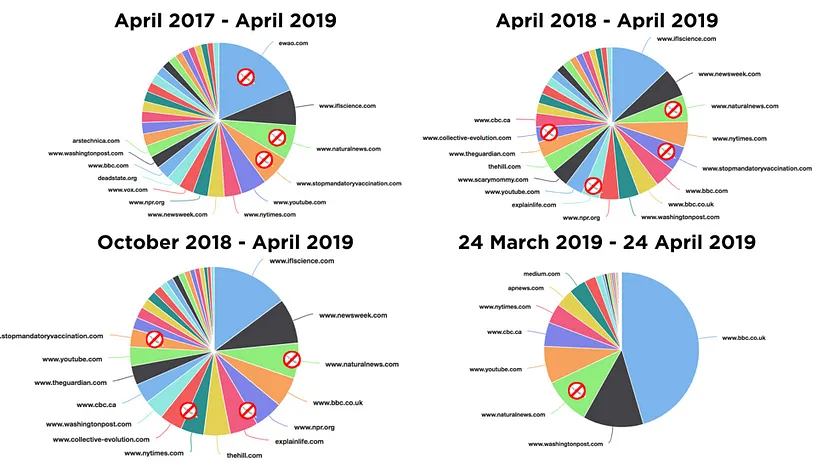

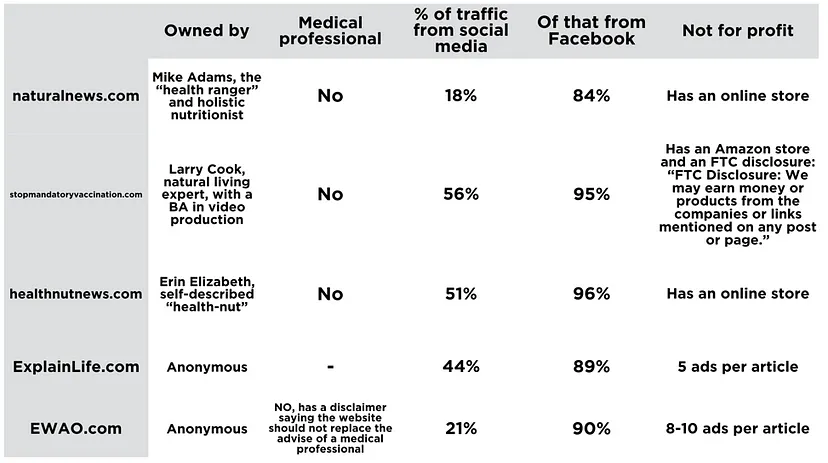

A BuzzSumo survey showed that the most-shared anti-vaxx sources are five alternative health websites: naturalnews.com, stopmandatoryvaccination.com, healthnutnews.com, explainlife.com, and EWAO.com.

None of these websites appears to be run by medical professionals, though at least one of them claims to be. In addition, most appear to be run for profit: three of the sites listed above (Natural News, Stop Mandatory Vaccination, and Health Nut News) operate online stores, either on their own sites or via Amazon.com, where they sell supplements, anti-vaxx books, and air filters, as well as “alternative medicines.”

Most of the sites listed above rely on social media, predominantly Facebook, for between 20 and 50 percent of their website traffic. In light of this, Facebook’s recent announcement that it would de-rank pages spreading anti-vaxx content has the potential to redefine the information environment on vaccine-related topics, on Facebook and also beyond the platform, as the downranking will likely reduce the number of visits to the anti-vaxx websites that rely on Facebook for a significant portion of their traffic.

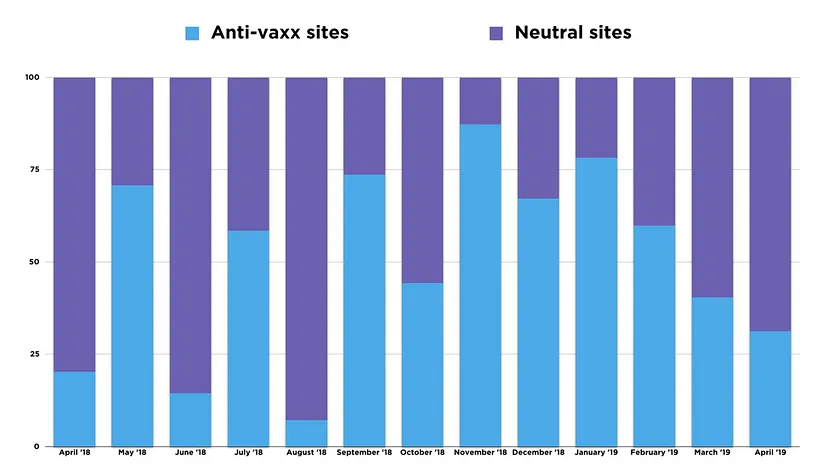

Thus far, Facebook’s approach seems to have achieved some limited success. The DFRLab surveyed the most-shared websites on the topic of vaccines on social media in the last 12 months. Since Facebook introduced new rules curbing anti-vaxx content in early March 2019, the share of content from anti-vaxx sites has dropped 20 percentage points overall (from 54 to 34 percent); over the course of March, content from these sites accounted for 34 percent of all shares of vaccine-related information.

The roughly 30 percent in April, however, is still a high proportion, as it suggests that one-third of the most-shared content on vaccines is coming from anti-vaxx sites.
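A drop measured in percentage points is not the same as a relative decline. The difference can be sketched as follows, using the 54 and 34 percent figures reported above. The variable names are illustrative, and the relative figure is derived here rather than stated by the DFRLab.

```python
# Share of vaccine-related shares coming from anti-vaxx sites, as reported.
share_before = 54.0  # percent, before Facebook's new rules
share_after = 34.0   # percent, after the rules took effect

# Absolute change, expressed in percentage points.
point_drop = share_before - share_after

# Relative change: how much of the original share disappeared.
relative_drop = point_drop / share_before

print(f"drop: {point_drop:.0f} percentage points")
print(f"relative decline: {relative_drop:.0%}")
```

On these figures, a 20-point drop corresponds to a relative decline of a little over one-third.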

Removing Anti-Vaxx Advertising

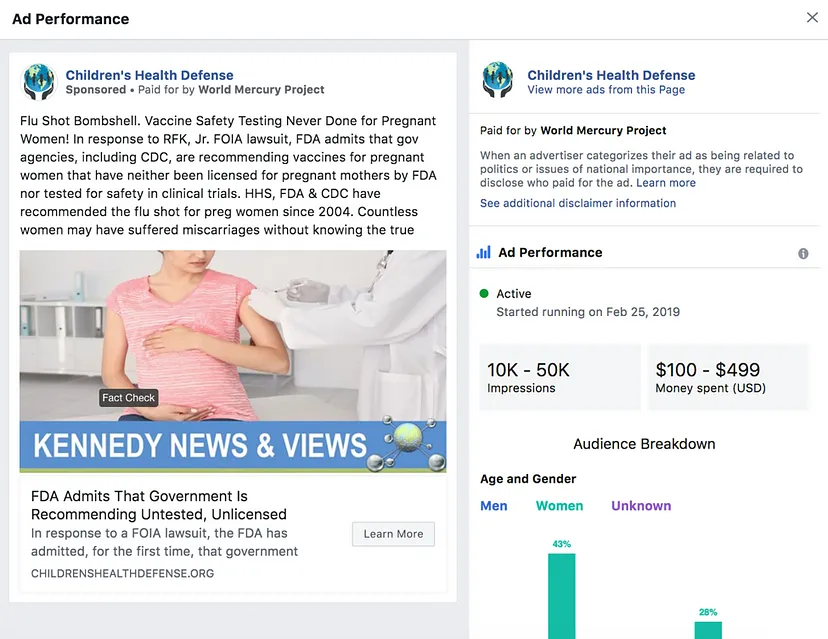

In addition to downranking anti-vaxx pages, Facebook also pledged to take down ads that spread misinformation about vaccinations. The DFRLab surveyed Facebook’s active ads and found at least three active ads that were spreading misinformation related to vaccines.

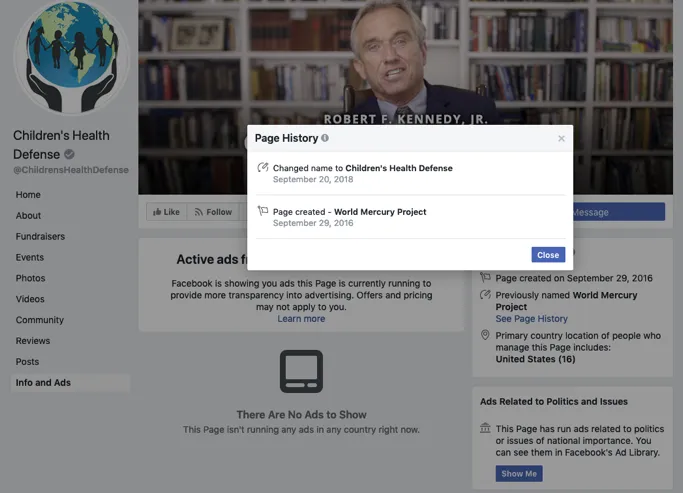

The first ad was posted by Children’s Health Defense (CHD), an anti-vaxx news site chaired by Robert F. Kennedy, Jr., known to share anti-vaxx hoaxes and conspiracies. The ad was a sponsored post (from February 11) — the organization has a history of promoting its own posts — with the related World Mercury Project paying to promote the post. (As paid promotions are searchable in Facebook’s advertisement library, the DFRLab considers them to be ads.) The page for CHD was originally titled “World Mercury Project,” and the website (www.worldmercuryproject.org) for the latter redirects to the former’s webpage.

According to the article linked in the advertisement, the FDA admitted that the government is recommending untested and unlicensed vaccines to pregnant women. That claim, however, is highly misleading, because the article relies on a Freedom of Information Act (FOIA) request concerning vaccines that are not actually in development. The claim has been debunked by several fact-checkers.

The paid promotion of the post started on February 15, 2019, shortly before Facebook’s announcement. It was still visible a month later as a paid promotion but was no longer being promoted at the end of April.

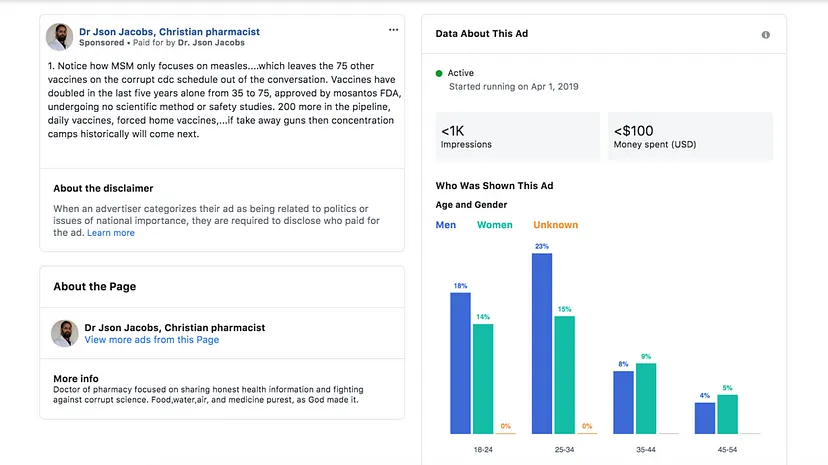

The second ad — a sponsored post — started running on April 1, 2019, and was paid for by “Dr. Json Jacobs,” a self-described “Christian pharmacist” fighting against “corrupt science.” A simple Google search shows no other results for a “Dr. Json Jacobs” outside of the Facebook page. The post falsely claimed that vaccine development does not adhere to the scientific method or involve safety studies.

Despite being misleading, the ad was still active on Facebook as of April 4 and had generated between 10,000 and 50,000 impressions. By April 29 it was no longer running as an ad, but because it was a sponsored post it remained available on “Dr. Jacobs’s” page.

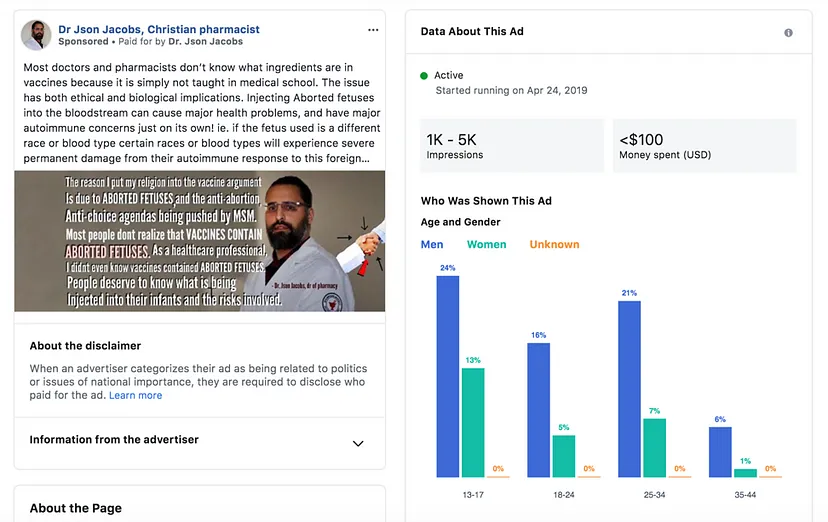

The same page published another ad on April 24, which was still active on April 29.

The third ad, another sponsored post, claims that vaccines contain aborted fetuses and therefore may cause a serious autoimmune response. This false claim has been debunked many times. Although some human cells were used to develop the vaccines in the 1960s, they are not used in the manufacturing process.

Conclusion

Since Facebook introduced the new initiative, shares of anti-vaxx content on the platform have dropped.

There is still more work to be done, however. Roughly a third of the most-shared content related to vaccines still spreads anti-vaxx misinformation. Furthermore, while Facebook has pledged to remove ads promoting anti-vaxx content, at least three such ads were active at the time of this analysis.

While the DFRLab has found the near-term results of Facebook’s efforts to be incomplete, at best, the long-term impact of the new measures will take several months to become apparent.