360/OS 2019: Evolving Trends in Disinformation and Platform Governance

Part of a series of posts highlighting key themes at the DFRLab’s 360/OS 2019 summit

BANNER: Nathaniel Gleicher, Head of Cybersecurity Policy at Facebook, speaks at the DFRLab’s 2019 360/OS event. (Source: @sarahphotovideo/SarahHalls.net)

The year was 2009, and social media platforms were desperate. “Content moderation” was no longer as straightforward as policing for nudity and graphic violence. Each deletion or suspension carried far-reaching political consequences, and Silicon Valley was unprepared to handle them. “Please, take this power away from us!” one senior executive at an unnamed tech company pleaded in a meeting with a U.S. government counterpart.

So recalled Nathaniel Gleicher, Head of Cybersecurity Policy at Facebook and former Cybersecurity Director for the U.S. National Security Council, as he spoke at the Digital Forensic Research Lab’s 360/OS event on June 20–21, 2019, in London, United Kingdom. Today, as Gleicher explained, the dynamic is very different. Content moderation and disinformation, with their multitude of political considerations, now represent a central focus of Facebook and other large social media platforms.

Gleicher’s remarks offered insight into how these issues will evolve in the years ahead:

1. Privacy Emphasis and Continuing Disruption of the Open-Source Community. As the DFRLab and the Atlantic Council’s Adrienne Arsht Latin America Center noted in a joint March 2019 report, the proliferation of WhatsApp and other end-to-end encrypted services made it very difficult to conduct open-source monitoring of the 2018 elections in Mexico, Colombia, and Brazil. While this closed design is good for user privacy, it makes it impossible to contextualize the reach and influence of disinformation trends. With Mark Zuckerberg’s stated intention of shifting Facebook and Instagram to a similar “privacy-focused” model, these challenges will grow steeper.

One consequence of these shifting priorities can be seen in the June 11 termination of Facebook Graph Search, which had long been an invaluable tool for the open-source research community. Gleicher offered some of the first on-the-record comments about the decision, explaining that the flexibility of Graph Search had left it prone to abuse. As an example, he described an adversarial data-scraping operation that had sought to infer the hidden sexual identity of Facebook users, leaving them open to intimidation and harassment.

2. The “Professionalization” of Information Operations. Since 2016, when interest in disinformation exploded across the open-source research community, it has been fairly straightforward to distinguish amateurish digital influence operations from the more sophisticated efforts of nation-states. As Gleicher observed, however, this is quickly changing.

A prime example can be seen in the DFRLab’s recent analysis of the Archimedes Group, a privately owned marketing firm based in Israel. The firm operated at least 265 inauthentic assets across Facebook and Twitter, working on behalf of paying clients to manipulate elections in Nigeria and to slander media outlets in Tunisia. The Archimedes Group’s sophisticated tactics were essentially identical to those previously attributed to Russia’s Internet Research Agency. It is a sign of things to come.

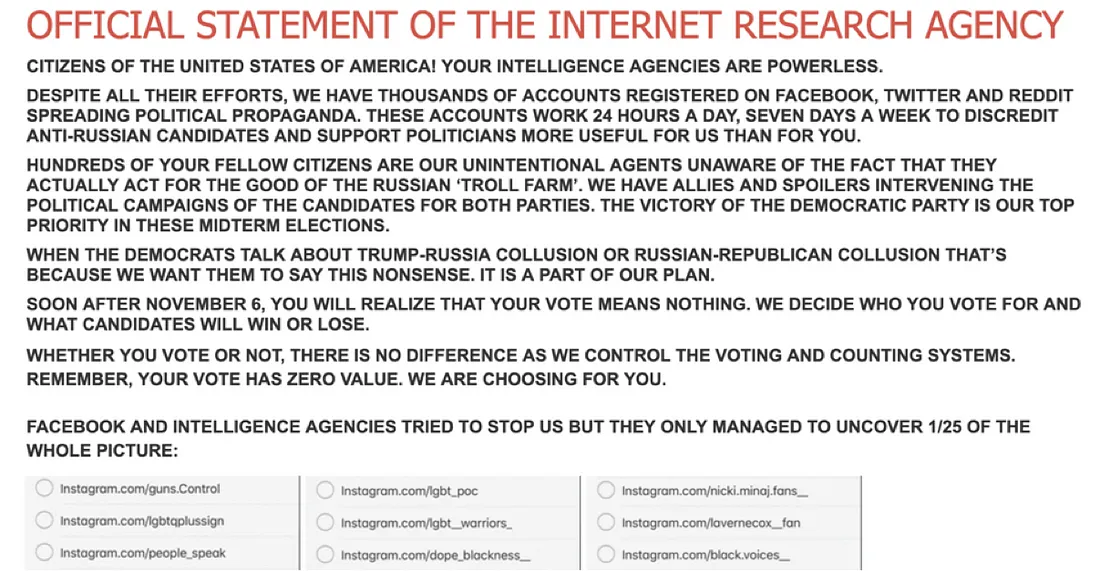

3. The Rise of Disinformation about Disinformation. Growing public awareness of disinformation campaigns has led to another new phenomenon: disinformation about disinformation. In Gleicher’s experience, each public announcement of a Facebook “takedown” must be weighed against the fact that far more users will be exposed to news about the disinformation campaign than were ever reached by the campaign itself. And as news of the takedown spreads, it becomes a vector for entirely new disinformation campaigns that seek to twist the facts in one direction or another.

An early, worrying example came in the final days before the 2018 U.S. midterm elections. A website purporting to be associated with Russia’s Internet Research Agency announced that it had launched a massive disinformation attack that had effectively rendered the results of the election moot. There was scant supporting evidence, however, and U.S. media covered the claim with appropriate skepticism. Nonetheless, there is a real danger that these sorts of meta-campaigns will grow more sophisticated. After all, if a disinformation attack is perceived to have taken place, it can carry political consequences as severe as the real thing.

Ultimately, the challenges surrounding disinformation operations and platform governance will only grow more complex in the years to come. They must be met by a new generation of #DigitalSherlocks equipped with the knowledge to identify disinformation campaigns and the means to combat them.

Follow along for more in-depth analysis from our #DigitalSherlocks.