Inauthentic anti-Rohingya Facebook assets in Myanmar removed

Posts portrayed Rohingya as terrorists, denied that atrocities against them took place, and amplified reports of violence to inflame ethnic tensions

On April 30, 2020, Facebook took down a network of pages and accounts the company attributed to members of the Myanmar Police Force (MPF) for coordinated inauthentic behavior. Many of these assets inflamed anti-Rohingya sentiment before, during, and after the ethnic cleansing of Rohingya Muslims by the Myanmar military in 2017.

Starting in August 2017, between 10,000 and 25,000 Rohingya Muslims were killed and 700,000 fled; the United Nations described the situation as “a textbook example of ethnic cleansing.” Social media use was rife during the crisis, both as a means of inciting further violence against the Rohingya and as a way for members of the Rohingya community to communicate while evading government censors.

In its monthly statement on May 5, 2020, Facebook said:

The individuals behind this network used fake and duplicate accounts to post in Groups and manage Pages posing as news entities. The Page admins and account owners shared content primarily in Burmese about local news and events such as the successes of the national police and military, stories about police officers providing assistance to local families, arrests and police raids, criticism of the Arakan Army and anti-Rohingya content. Most recently, some of these Pages posted about COVID-19. Although the people behind this activity attempted to conceal their identities and coordination, our investigation found links to members of the Myanmar Police Force.

The DFRLab examined 18 assets that Facebook stated were operated by members of the MPF. The DFRLab could not corroborate the direct links, though the assets it had access to did demonstrate a heavy pro-MPF bias. A portion of these assets inflamed anti-Rohingya sentiment by presenting the Rohingya as terrorists, denying that atrocities against the Rohingya took place, amplifying reports of violence by the Rohingya on other groups, and dismissing the existence of the Rohingya in Myanmar. Although there was little evidence of coordinated activity in the assets the DFRLab had access to, they displayed behavior intended to hide their identities while driving the anti-Rohingya narratives.

A Bloody Backdrop

Although the Myanmar military officially relinquished control of the state in 2011, allowing more independent elections after years of repression, the military (also called the Tatmadaw) still wields significant power and influence in the country. Unlike police in many other countries, which are under civilian control, the Myanmar Police Force operates under the military, along with the Myanmar Army, Navy, and Air Force.

For years before the 2017 ethnic cleansing, the Myanmar military used Facebook to stoke ethnic divisions through anti-Rohingya propaganda and coordinated troll campaigns. Facebook plays an outsize role in how Burmese citizens understand the news. An estimated 20 million of Myanmar’s 53 million people use Facebook, and for many of them it is synonymous with the internet; because of decisions made by the country’s main telecommunications providers, Facebook even comes preinstalled on most cellphones in Myanmar. The DFRLab previously examined inauthentic Facebook assets from a Burmese telecommunications company partially owned by the Myanmar military.

On August 28, 2018, Facebook took down accounts belonging to members of the Myanmar military and related individuals who had “committed or enabled serious human rights abuses,” amid criticism that it had acted too late to prevent the incitement of anti-Rohingya sentiment on its platform. Since then, Facebook has attempted to play a more proactive role in moderating Burmese content, paying special attention to hate speech. Some human rights activists, however, have criticized its decision to ban certain violent Burmese groups while still allowing the Myanmar military to operate on the platform. The banned groups include the Arakan Rohingya Salvation Army (ARSA), a Rohingya group dedicated to violent resistance against the Myanmar military.

Anti-Rohingya, pro-MPF pages

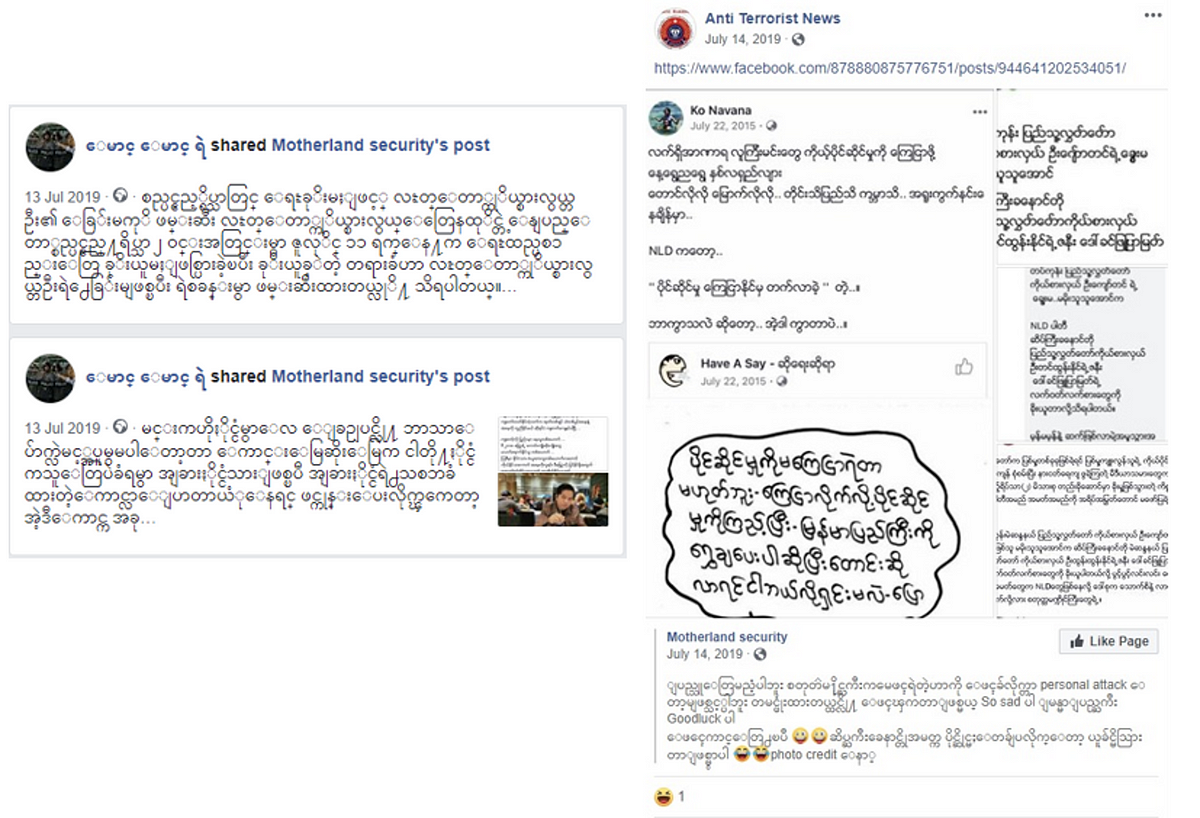

Facebook took down at least three pages it attributed to members of the MPF: “Motherland security,” “Anti Terrorist News,” and “ျမန္မာသတင္းအမွန္” (roughly, “Myanmar True News”), whose unique handle is “TrueNewsOfMM.”

“Motherland security” and “ျမန္မာသတင္းအမွန္” were newer pages, created on April 6, 2019, and January 23, 2019, respectively. In contrast, “Anti Terrorist News” was created on August 28, 2017, three days after ARSA’s August 25, 2017, attacks on Myanmar security forces. The attacks catalyzed days of atrocities by the Myanmar military against the Rohingya, leading to ethnic cleansing. According to human rights investigators, the worst days of the violence were between August 25, 2017, and September 1, 2017.

All three pages shared Burmese security-related news, including alleged terrorist activity, and some posts seemed similar to police dispatches with information on crimes and potential suspects.

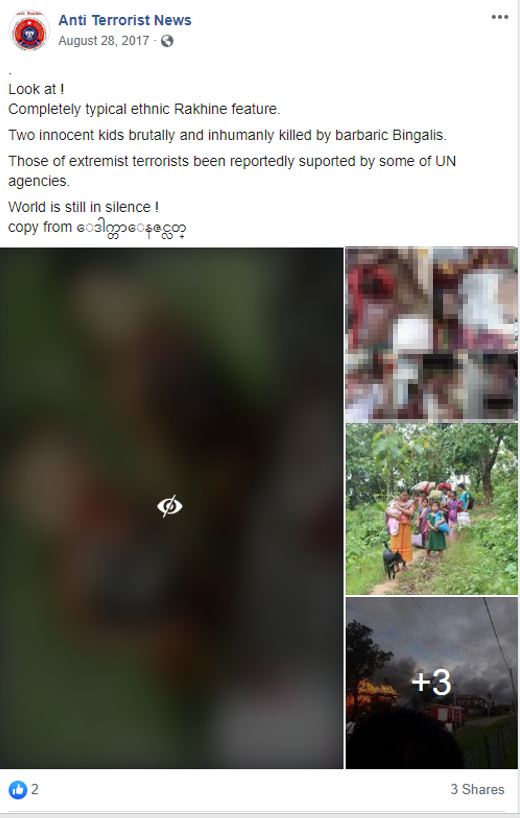

Anti Terrorist News’ content demonized the Rohingya, mischaracterized the violence by denying that atrocities against the Rohingya took place, amplified the deaths of other ethnic groups at the hands of the Rohingya through graphic imagery, and dismissed the existence of the Rohingya in general.

For example, the page’s very first post, on August 28, 2017, shared graphic, violent images, including the corpses of children, whom the post described in English as “Two innocent kids brutally and inhumanly killed by barbaric Bingalis [sic].” The term “Bengali” is used by some in Myanmar, including the government, to describe the Rohingya in an attempt to portray them as belonging in Bangladesh rather than Myanmar.

Another post on the same date shared a meme portraying ARSA as using babies as human shields, while depicting the Myanmar Army as protecting babies; the accompanying text included the hashtag “#No_Rohingya_in_Myanmar.” Multiple Rohingya survivors have accused the Myanmar military of murdering and stabbing Rohingya babies during the August 2017 violence. One Rohingya woman recounted that the Myanmar military killed her baby by throwing him into a fire on August 29, 2017, a day after this meme was posted.

Posts in early September detailed the atrocities faced by Hindus at the hands of “ARSA extremist terrorists,” implied that the Rohingya were pretending to need help while murdering Rakhine people, and denied that attacks on the Rohingya occurred. One image falsely stated that “HRW [Human Rights Watch] is announcing worng [sic] news on Rakhine State, Myanmar. All of the victims are indigenous ethnic group of Rakhine race villagers. They run away to escape Bengali extremist terrorists attack.”

These posts, which portrayed all Rohingya as terrorists, denied that the Rohingya existed, and falsely cast the sectarian violence as one-sided, were all published as the Myanmar military murdered and displaced the Rohingya in August and September 2017.

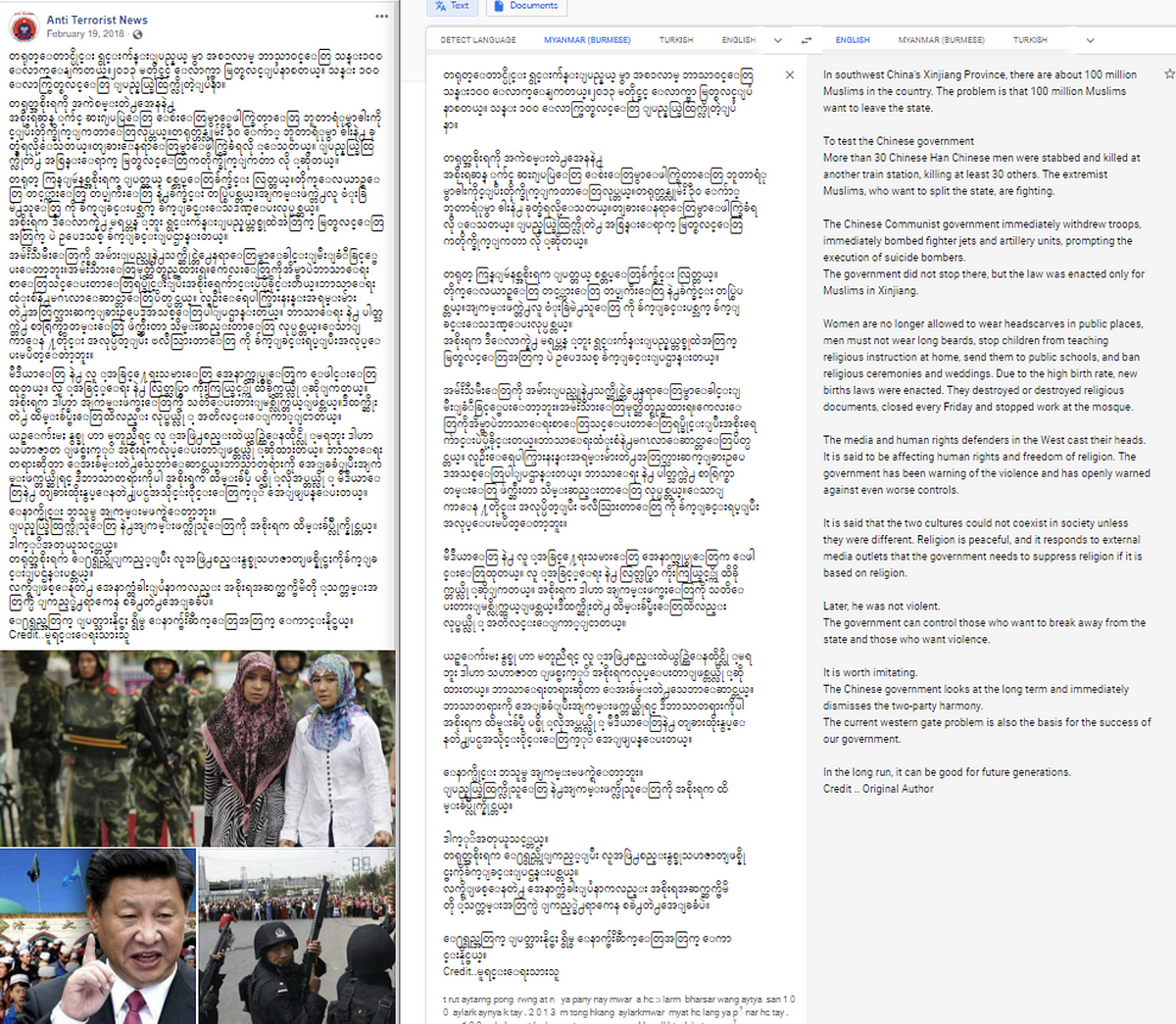

Other posts spread conspiracy theories about Muslims in general. A February 18, 2018, post notably lauded the oppression of Uighur Muslims in China’s Xinjiang region, justifying the Chinese government’s abusive, repressive tactics and, according to a Google translation, stating that “It is worth imitating. The Chinese government looks at the long term and immediately dismisses the two-party harmony… In the long run, it can be good for future generations.”

Ultimately, however, the Anti Terrorist News page gained little traction, as it had only 30 likes by the time it was taken down, and its posts generally garnered only one or two likes and/or shares.

The “Motherland security” page, which sported the logo of the Tatmadaw, had gained a total of 9,406 likes. The page linked to a low-effort off-platform website whose sole page displays graphic photos of corpses that it claims are Hindus killed by “Bengali extremists,” continuing the narrative that the sectarian violence was one-sided and can be attributed to the Rohingya.

The third page, “ျမန္မာသတင္းအမွန္,” also published many news-style posts about terrorism and security concerns. It had a similar number of likes to “Motherland security,” with 9,016 at the time of its removal.

A CrowdTangle analysis did not find any coordinated patterns in the pages’ engagement and likes, although posts from one page were sometimes shared by another. Some of the accounts removed in the same takedown, however, interacted with these pages by liking their posts, and some shared page posts on their timelines, including anti-Rohingya posts from the “Motherland security” page. Seven of the accounts liked the “Motherland security” page, and for two of them it was their sole Facebook like, according to their “About” pages.

Anti-Rohingya, pro-MPF accounts

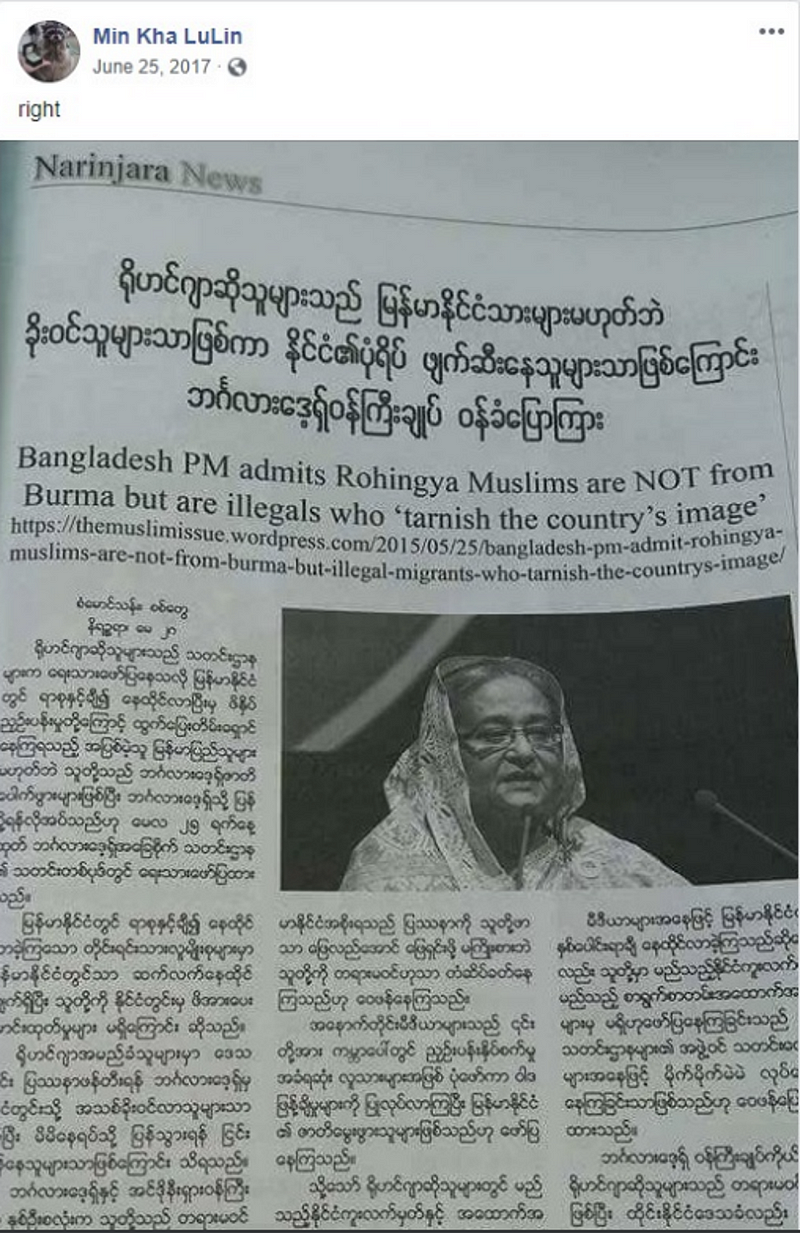

Accounts removed as part of this takedown shared the anti-Rohingya narratives seen on the pages. One such account, Min Kha LuLin, was publicly active from November 2016 until August 19, 2017, shortly before the ethnic cleansing of August and September 2017. The account regularly shared updates from other pages about the situation in Rakhine State. On June 25, 2017, it posted a picture of an article headlined “Bangladesh PM admits Rohingya Muslims are NOT from Burma but are illegals who ‘tarnish the country’s image,’” with the terse caption “right.”

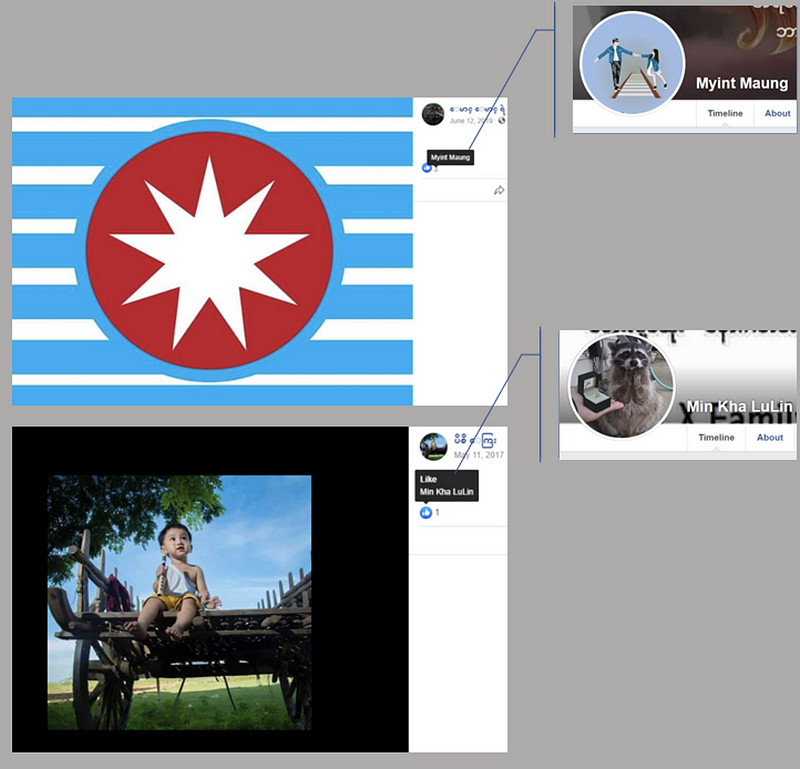

The DFRLab identified minor signs of potentially inauthentic activity and coordination between the assets in the dataset provided by Facebook. The DFRLab’s open source analysis, however, was not able to confirm Facebook’s assessment of inauthentic behavior, nor could it invalidate that same assessment. In other words, the DFRLab found no evidence that would cause it to doubt the company’s attribution, which likely relied on back-end information not available to open source researchers. All of the accounts identified appeared to shield their identities by using anonymous or stock photos as their profile pictures and cover photos. Two accounts used celebrities’ faces instead of their own for profile pictures.

Although behavior that points to the obfuscation of an identity is one potential sign of inauthentic activity, in and of itself it is not a conclusive indicator of an inauthentic account.

The accounts using celebrity photos, Ko Lu Chaw and Maylay Lay, were two of the 10 accounts that displayed clear pro-Myanmar military sentiment. Eight accounts featured military imagery in their profiles, either flags and insignia of the Myanmar Police Force, the Myanmar Army, and the specific Army flag “Badge of the Western Command,” or uploaded images of military forces.

Nine of the removed accounts liked Myanmar military pages, including the official MPF page, which was not among the removed assets. The Myanmar Police Force’s official Facebook page is an outlier compared with those of other countries: it has a staggering 1.3 million likes. In Indonesia, which has five times the population of Myanmar and 6.5 times as many active Facebook users, the national police force’s Facebook page has only 920,000 likes.
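To make the scale of that gap concrete, a rough per-user comparison helps. The short Python sketch below is illustrative arithmetic only: it assumes that the estimate of 20 million Facebook users in Myanmar cited earlier in this piece is comparable to the “active users” figure for Indonesia, and it derives Indonesia’s user count by applying the stated 6.5 multiplier. The constant and function names are hypothetical.

```python
# Rough per-user comparison of national police Facebook page likes.
# Assumptions (labeled): ~20 million Facebook users in Myanmar, as cited
# earlier, and Indonesia having 6.5 times as many active users.

MYANMAR_USERS = 20_000_000
INDONESIA_USERS = 6.5 * MYANMAR_USERS  # 130 million under this assumption

MPF_PAGE_LIKES = 1_300_000         # Myanmar Police Force official page
INDONESIA_POLICE_LIKES = 920_000   # Indonesian national police page


def likes_per_user(likes: float, users: float) -> float:
    """Return page likes as a fraction of a country's Facebook users."""
    return likes / users


myanmar_share = likes_per_user(MPF_PAGE_LIKES, MYANMAR_USERS)
indonesia_share = likes_per_user(INDONESIA_POLICE_LIKES, INDONESIA_USERS)

print(f"Myanmar:   {myanmar_share:.1%} of users")
print(f"Indonesia: {indonesia_share:.1%} of users")
print(f"Ratio:     {myanmar_share / indonesia_share:.1f}x")
```

Under these assumptions, the MPF page’s likes amount to about 6.5 percent of Myanmar’s Facebook users, compared with roughly 0.7 percent for the Indonesian police page, a per-user ratio of about nine to one. The comparison is crude, since a page like does not necessarily come from a user in that country, but it shows why the MPF page stands out.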

In terms of coordination, several of the accounts liked photos posted by other assets in the set. The dearth of strong evidence of coordination could be a result of the strict privacy settings on most of the identified accounts, which prevented the DFRLab from viewing content restricted to “friends only.”

Conclusion

While Facebook took down these assets belonging to members of the Myanmar Police Force for coordinated inauthentic behavior, little open source evidence conclusively linked them to the Myanmar military beyond the imagery in their profiles, and little pointed to significant inauthentic or coordinated behavior. This lack of open source evidence highlights the responsibility social media platforms bear to identify and moderate coordinated inauthentic activity, particularly when that activity incites violence along sectarian lines in a country with ongoing conflict and a history of ethnic repression. The DFRLab’s analysis indicated that some of the assets actively inflamed anti-Rohingya sentiment during the critical period of June through September 2017 in Myanmar, when tens of thousands of Rohingya were murdered and hundreds of thousands were displaced.

Alyssa Kann is a Research Assistant with the Digital Forensic Research Lab.

Kanishk Karan is a Research Associate with the Digital Forensic Research Lab.
