Anti-Taiwan influence operation shows shift in tactics

Inauthentic assets amplified content to more than 1,300 Facebook groups, with the apparent goal of dividing Taiwanese society and undermining its democracy

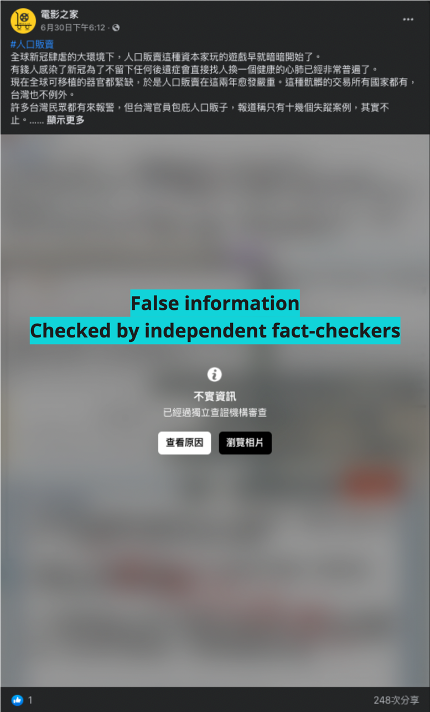

Screencap from a post by the Facebook page “Diss Lingering” about Taiwanese President Tsai Ing-wen and Vice President Lai Ching-te allegedly fighting at the president’s birthday banquet. (Source: Diss纏綿)

This report is published in partnership with Doublethink Lab.

Doublethink Lab has uncovered a batch of inauthentic assets on Facebook targeting Taiwanese users with disinformation and propaganda most consistent with the agenda of the Chinese Communist Party (CCP). These assets display a shift in tactics toward fewer identifiable indicators of inauthentic activity, making detection and attribution more difficult.

The CCP has long targeted Taiwan with influence operations aimed at weakening the country’s resistance to “unification,” and the content created and amplified by the inauthentic assets uncovered in this study is consistent with that aim. The campaign stokes domestic polarization by spreading narratives about government corruption and the failure of Taiwanese democracy, particularly in terms of the economy. To achieve its goal, the CCP seeks to reduce antipathy toward China, promote the benefits of its own governance model, and discredit Taiwanese democracy and the ruling Democratic Progressive Party (DPP) government. One example was the Kansai airport incident, in which a Taiwanese diplomat in Osaka was blamed for failing to help citizens during a typhoon while China’s embassy was credited with evacuating them to safety, an episode that led to the diplomat’s suicide. The CCP frequently attacks international diplomatic support for Taiwan as “dollar diplomacy,” paid for at the expense of less advantaged Taiwanese. For instance, the outlet Guancha described Taiwan’s purchase of Lithuanian rum as the “overpriced taste of democracy” after China imposed economic sanctions on Lithuania for allowing Taiwan to open a representative office.

Content amplified by the assets identified in this report attacked the Taiwanese government, manipulated public perceptions about vaccinations, showed China in a positive light, and attempted to interfere in Taiwan’s November 2022 election. Although the precise impact of this activity is difficult to ascertain, the potential reach of these assets was in the millions.

Similar inauthentic assets conducting influence operations to manipulate Taiwanese public opinion have been found previously on Facebook and other platforms. The assets uncovered in this study used tactics not unlike those unearthed by Taiwan’s Ministry of Justice Investigation Bureau (MJIB) in 2021. Although the MJIB was unable to make an air-tight attribution, Dr. Puma Shen, chairperson of Doublethink Lab and associate professor at National Taipei University, assessed the campaign’s characteristics as consistent with outsourced CCP information operations. These characteristics included the sloppy conversion of simplified to traditional Chinese characters; coordination with Chinese state media messaging at the time; Facebook pages operated from China, Hong Kong, and Cambodia; links to simplified Chinese content farms; and page names identical to those of Weibo influencers. The trend toward outsourced information operations has made attribution more difficult, leaving us to infer the perpetrator from similarity of tactics and content alignment with political agendas.

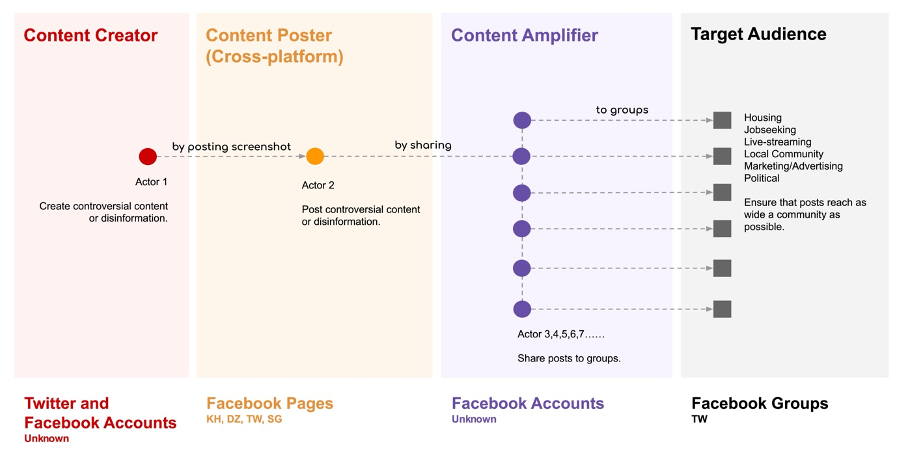

In this case study, we show how a number of inauthentic Facebook accounts cross-posted content from inauthentic Facebook pages into Taiwanese Facebook groups with large audiences. Based on strong indicators of inauthenticity, Doublethink Lab is highly confident these are influence operation assets. We argue that, among the known actors targeting Taiwanese with political messaging, the content is most consistent with the agenda of the CCP, and that the assets used tactics consistent with actors also highly suspected of being CCP assets.

Methodology

We started our investigation by looking at the assets comprising Dr. Shen’s 2021 investigation, of which we found ten that were either suspended or discontinued. We therefore looked for new pages with similar characteristics in order to see whether replacements had been created.

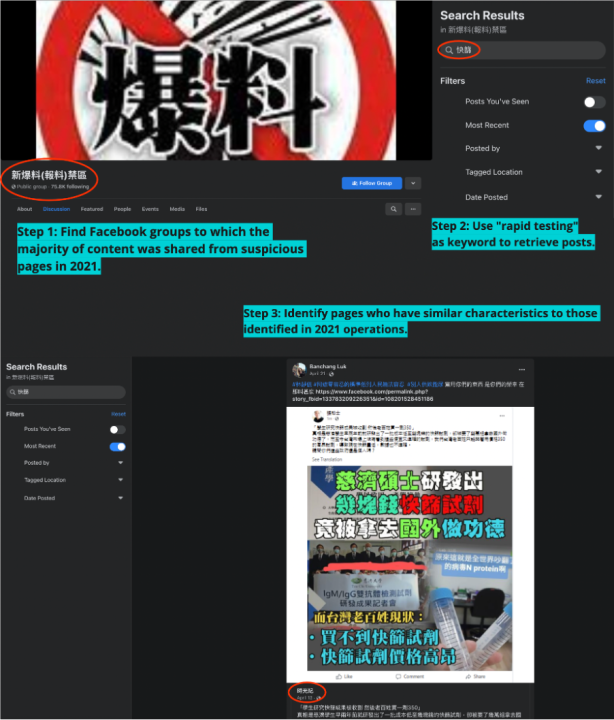

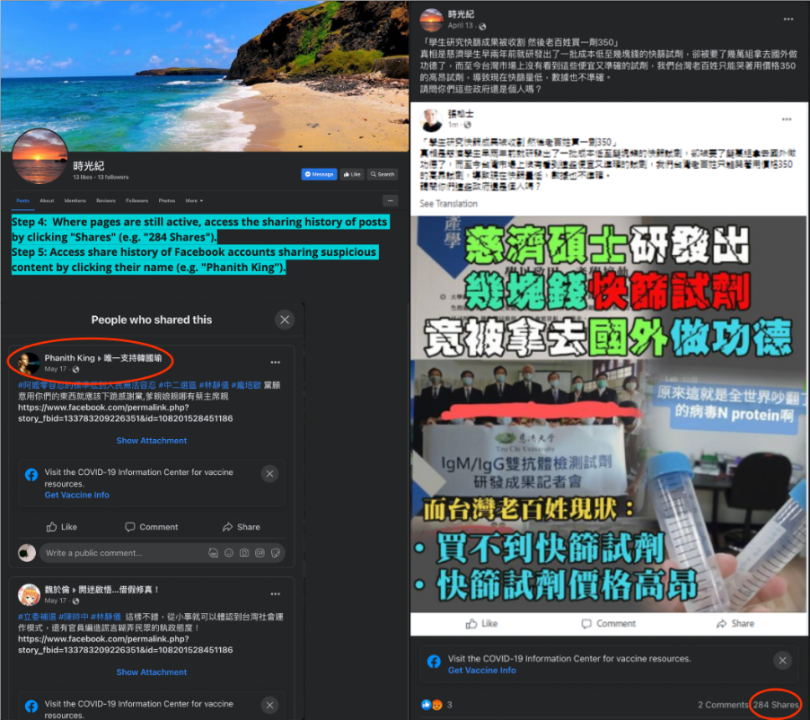

Since we had access to the data from the 2021 investigation, we first selected the ten Facebook groups to which a majority of content had been shared by suspicious pages in 2021. The identified 2021 pages had primarily posted disinformation criticizing Taiwan’s handling of COVID-19; operating under the hypothesis that pages connected with similar assets would do the same in 2022, we used the search term “rapid testing” (快篩) to look for similar content shared from the pages into select Facebook groups in 2022. Not every post that contained the keyword was connected to the inauthentic assets, so we only looked into those created by pages with specific signs of inauthenticity, including pages with few followers and low numbers of likes; controversial or unverified content; and posts with low like and comment counts alongside unusually high shares.
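The engagement-based screening signal described above (few likes and comments alongside unusually high shares) can be sketched in a few lines. This is only an illustration: the `Post` fields, the `is_share_heavy` helper, and both thresholds are assumptions chosen for the example, not values used in the investigation.

```python
from dataclasses import dataclass


@dataclass
class Post:
    page: str
    likes: int
    comments: int
    shares: int


def is_share_heavy(post, min_shares=20, ratio=5.0):
    """Flag posts whose shares dwarf likes and comments combined.

    min_shares and ratio are hypothetical thresholds for illustration.
    """
    engagement = post.likes + post.comments
    # Adding 1 avoids division by zero for posts with no likes or comments.
    return post.shares >= min_shares and post.shares / (engagement + 1) >= ratio


# Made-up sample data, not drawn from the dataset.
posts = [
    Post("page_a", likes=3, comments=1, shares=120),
    Post("page_b", likes=450, comments=80, shares=60),
    Post("page_c", likes=0, comments=0, shares=4),
]
flagged = [p.page for p in posts if is_share_heavy(p)]
print(flagged)
```

A flag from a heuristic like this would only mark a post for manual review; on its own it does not establish inauthenticity.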

We discovered that the Facebook accounts connected to the assets shared content into groups chiefly from pages with signs of inauthenticity. By examining other accounts sharing from those pages to groups, we found more suspicious pages with similar attributes. We then further expanded the list of pages by manually identifying the Facebook accounts and browsing their share history. This resulted in an additional eighteen pages on top of the ten pages from 2021. We then used CrowdTangle to collect data on these eighteen pages from January 1 to September 20, 2022, yielding 650 posts and 16,006 shares.

Findings

Facebook accounts with signs of inauthenticity shared controversial page content and disinformation to Taiwanese Facebook groups. Although we used “rapid testing” as a keyword to identify the pages, the resulting dataset covered a wider range of topics, including visits to Taiwan by foreign dignitaries and domestic Taiwanese politics.

The post content fell into three categories:

1. Re-shares or screenshots from well-known Facebook political pages, political figures, or news content

2. Screenshots from accounts of supposed Taiwanese citizens on Twitter and Facebook

3. Original meme graphics

When screenshots were shared, we traced the origin of the content based on visible usernames to identify “content-creating” accounts. The pages, content amplifiers, and content creators generated and disseminated controversial content and disinformation about foreign dignitaries visiting Taiwan, criticism of domestic epidemic control, ruling DPP politicians, and general governance.

In 2021, the MJIB found that a significant number of “content-creating” accounts in the assets were personal accounts on the Taiwan-based forum CK101. Also known as “卡提諾論壇” in Mandarin, CK101 was once a popular online forum among younger generations in Taiwan. These accounts were registered using overseas mobile phone numbers rather than numbers in Taiwan. However, during our 2022 investigation, we noticed a migration of “content-creating” accounts away from CK101 and toward Twitter and Facebook, as outlined below.

The assets in the MJIB investigation used hashtags and links in the messages that the content-amplifying accounts shared to groups. The assets in our 2022 investigation all stopped using hashtags and links in June 2022.

We also found that most of the Facebook pages in our 2022 investigation that did not show up in the MJIB’s original investigation also did not report administrator location. Seventeen of these eighteen pages were created in 2022, and only four reported administrator location.

Suspicious pages, content amplifiers, and content creators

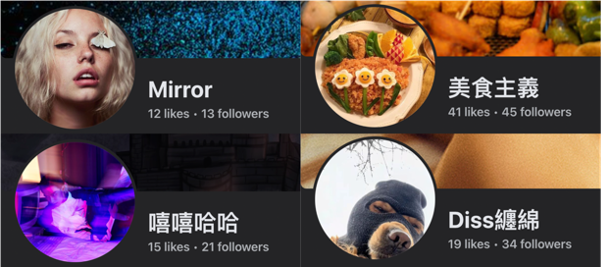

At the time of writing, three of the eighteen pages were inaccessible and were likely suspended. The remaining fifteen pages often exhibited suspicious behavior. Their content was often sourced from a narrow pool of content creators, which themselves displayed signs of inauthenticity, as well as coordination in some cases. They had few followers and low numbers of likes for the page itself, demonstrating low activity or an inability to attract an audience.

Their registration dates were often very close together, strongly suggesting that they were created in a batch.

Their posts were almost entirely dedicated to politics, even when the page category was a non-political topic such as “health/beauty” or “community.”

A majority of the posts on a given page contained controversial political claims, some of which fact-checking organizations identified as false.

Posts on the page received a low volume of likes and comments alongside unusually high shares, indicating possible manipulation through artificial amplification, such as the potential use of bots or fake accounts to share a selected post.

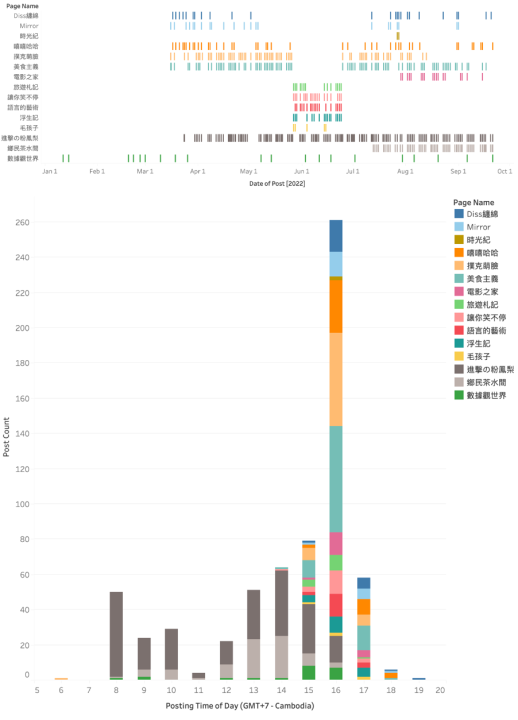

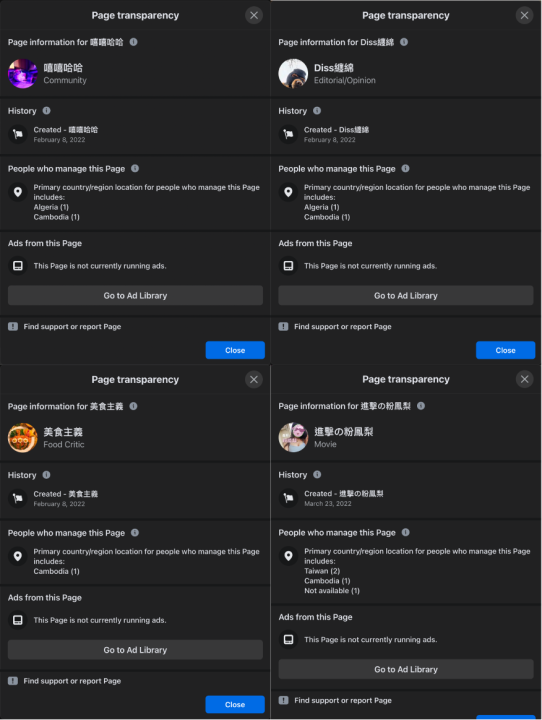

Finally, many of their posting times fell around the working hours in the GMT+7 timezone, which includes Cambodia.

Based on these indicators and the targeted focus on Taiwan, we suspect the pages to be influence operation assets.

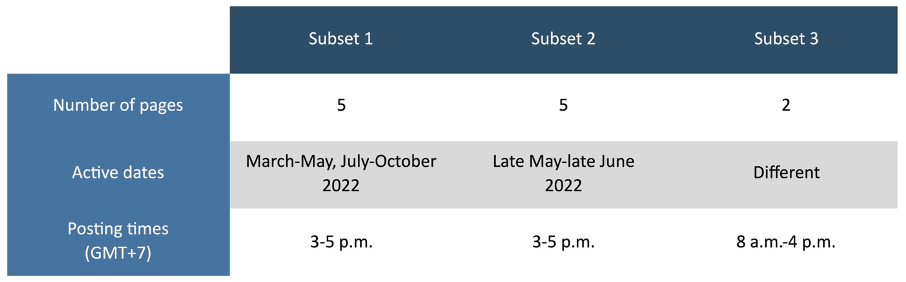

Although the pages did not post identical content in quick succession, most were active during the same date ranges and at the same times of day. Of the fifteen still-active pages, three deviated in terms of active dates and working hours, but the remaining twelve could be separated into three different subsets.

The first subset started to post in March 2022, took a break in late May, and posted again from July to October. The second subset was only active from late May to late June. The two pages in the third subset started posting at different points in the year, but they were closely synchronized in the dates on which they posted, and both only posted between 8 a.m. and 4 p.m. (GMT+7). The pages in the first and second subsets mostly posted between 3 p.m. and 5 p.m. (GMT+7). The posting time patterns for these pages mostly aligned with Cambodian business hours.
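The posting-hour comparison above amounts to converting each post timestamp to GMT+7 and counting posts per local hour. The sketch below assumes timestamps are available as UTC ISO 8601 strings; the function names and the sample timestamps are hypothetical and do not come from the dataset.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

GMT7 = timezone(timedelta(hours=7))


def local_hour_histogram(utc_timestamps, tz=GMT7):
    """Count posts per local hour after converting from UTC to tz."""
    hours = Counter()
    for ts in utc_timestamps:
        # Timestamps are assumed to be naive ISO strings expressed in UTC.
        dt = datetime.fromisoformat(ts).replace(tzinfo=timezone.utc)
        hours[dt.astimezone(tz).hour] += 1
    return hours


def share_in_window(hist, start_hour, end_hour):
    """Fraction of posts with start_hour <= local hour < end_hour."""
    total = sum(hist.values())
    inside = sum(n for h, n in hist.items() if start_hour <= h < end_hour)
    return inside / total if total else 0.0


# Made-up sample timestamps (UTC).
sample = [
    "2022-03-14T08:10:00",
    "2022-03-15T09:45:00",
    "2022-03-16T08:30:00",
    "2022-03-17T16:00:00",
]
hist = local_hour_histogram(sample)
print(share_in_window(hist, 15, 17))  # share posted between 3 p.m. and 5 p.m. GMT+7
```

Running the same histogram per page makes it easier to see which pages cluster in the same local-hour window.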

Content-amplifying Facebook accounts – those that mostly shared pages’ posts, often supplementing the share with additional text – also showed signs of coordinated inauthentic behavior (CIB). Within a sample of one hundred of the accounts’ posts to the groups, a majority only had between four and eight repeated variations of the same supplemental text per post, indicating a high probability of coordination.

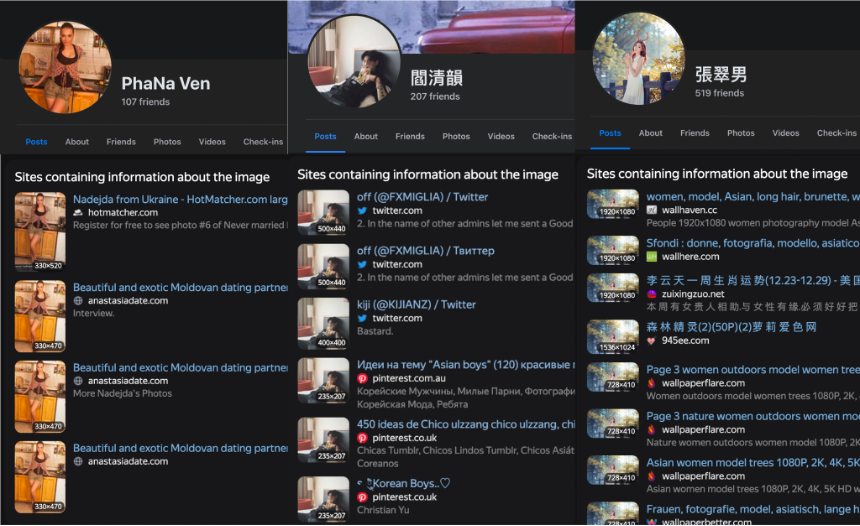

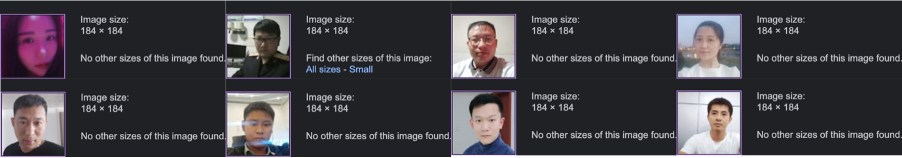
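One way to quantify the repeated supplemental text is to group shares by the post being shared and count how many distinct text variants appear, and how many of those variants were used by more than one account. The record layout `(post_id, account, text)`, the `normalize` step, and the sample rows below are assumptions made for this sketch.

```python
from collections import defaultdict


def normalize(text):
    """Strip whitespace and lowercase so trivial edits count as the same text."""
    return "".join(text.split()).lower()


def text_variations(shares):
    """shares: iterable of (post_id, account, supplemental_text) tuples."""
    by_post = defaultdict(lambda: defaultdict(set))
    for post_id, account, text in shares:
        by_post[post_id][normalize(text)].add(account)

    summary = {}
    for post_id, variants in by_post.items():
        # A variant is "reused" when at least two different accounts posted it.
        reused = [t for t, accounts in variants.items() if len(accounts) > 1]
        summary[post_id] = {
            "variants": len(variants),
            "reused_variants": len(reused),
        }
    return summary


# Made-up sample rows.
shares = [
    ("p1", "acct1", "Look at this!"),
    ("p1", "acct2", "Look at  this!"),
    ("p1", "acct3", "Unbelievable"),
    ("p1", "acct4", "unbelievable"),
    ("p2", "acct5", "My own take"),
]
summary = text_variations(shares)
print(summary)
```

A small number of variants shared verbatim across many accounts is the pattern of interest; organic sharing would be expected to produce more varied commentary.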

Many of the content-amplifying accounts reappropriated images taken from elsewhere online, another common indicator of inauthenticity. Of twelve accounts, seven used profile images sourced from elsewhere online.

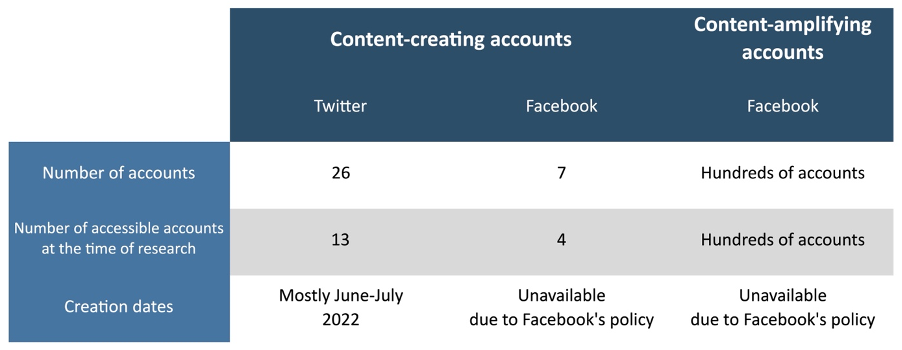

When posts by pages contained cross-posted screenshots, we traced the screenshots to thirty-three accounts which primarily posted original content that was later amplified. Of these accounts, twenty-six were on Twitter and seven were on Facebook. Half of them were inaccessible at the time of research.

Of the twenty-six Twitter accounts, ten were suspended and three no longer existed, while three of the seven content-creating Facebook accounts were inaccessible and had likely already been suspended.

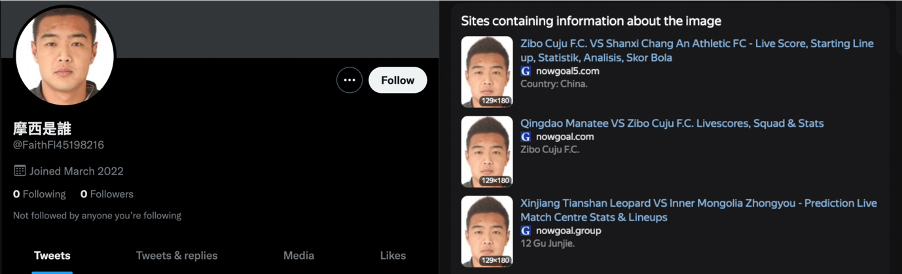

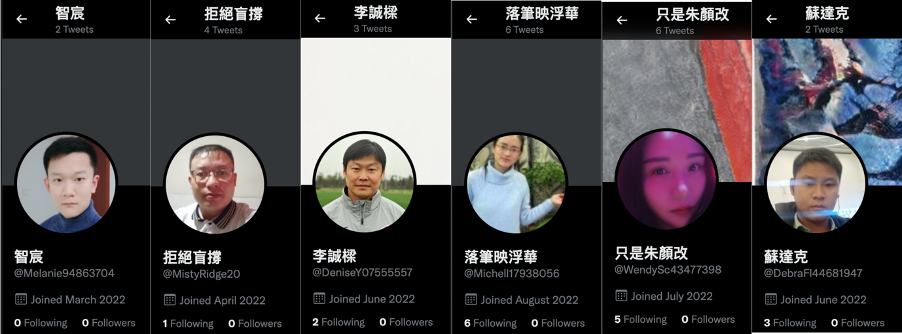

The thirteen content-creating Twitter accounts that were active at the time of research were mostly created in June-July 2022, posting content limited solely to politics. These accounts shared indicators of inauthenticity, including featuring identical profile photos.

Beyond the usage of identical photos, others took their profile photos from elsewhere on the internet without attribution, a second potential indicator of inauthenticity.

Notably, eight out of the thirteen accounts shared exactly the same profile image size (184×184 pixels).

In yet another indicator of inauthenticity, all of the Twitter accounts in question had following and follower counts of either zero or in the single digits.

Screenshots posted by the Facebook pages – it is unknown whether the operators of the pages captured them themselves or whether they were sent the screenshots – consisted of captures of two types of Facebook accounts: known politicians and political commentators, and seemingly normal Taiwanese citizens. We observed short intervals between the content published by those purporting to be regular Taiwanese citizens and the screenshots of posts published by the inauthentic Facebook pages. Amplifying accounts would then use the Facebook “share” function to repost these screenshots to Facebook groups.

The screenshots included the time that elapsed between the original post and when the screenshot itself was taken. When a user is active on Facebook, every post indicates to them the time at which its content was posted relative to the user’s current activity (e.g., “two minutes ago,” “five hours ago,” etc.). That number, as captured in the screenshots, therefore allowed us to investigate potential coordination.

For known politicians and political commentators, the time interval evident in the screenshots was never under two hours. For the personal accounts of the supposed Taiwanese citizens, however, the intervals were often within one or two minutes, strongly suggesting coordinated behavior between the account and the page posting the screencap.
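The interval comparison can be illustrated by parsing the relative-time label visible in a screenshot and checking it against a threshold. The label format (English text with numerals, such as "2 minutes ago"), the regular expression, and the two-minute threshold are assumptions for this sketch; labels in the actual screenshots may have been in another language or format and would need to be transcribed first.

```python
import re

UNIT_MINUTES = {"minute": 1, "hour": 60, "day": 1440}
PATTERN = re.compile(r"(\d+)\s*(minute|hour|day)s?\s+ago")


def minutes_elapsed(label):
    """Convert a label such as '5 hours ago' to minutes, or None if unparseable."""
    match = PATTERN.search(label.lower())
    if not match:
        return None
    return int(match.group(1)) * UNIT_MINUTES[match.group(2)]


def is_rapid_capture(label, threshold_minutes=2):
    """True when the screenshot was taken within threshold_minutes of the post."""
    elapsed = minutes_elapsed(label)
    return elapsed is not None and elapsed <= threshold_minutes


# Made-up labels for illustration.
for label in ["1 minute ago", "2 minutes ago", "5 hours ago", "just now"]:
    print(label, minutes_elapsed(label), is_rapid_capture(label))
```

Unparseable labels return `None` rather than being guessed at, so they can be set aside for manual review.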

Indications of foreign actor involvement

The pages at the center of MJIB’s and Dr. Shen’s research shared location information traced to Cambodia and China. In our follow-up 2022 research, several of the identified pages included their administrator locations: Cambodia, Algeria, and Taiwan.

A small proportion of the pages’ posts (6.57 percent) contained an awkward mix of simplified and traditional Chinese characters. Although there are a number of potential explanations for this, it points to their potential foreign origin: Taiwan, Hong Kong, and Macau officially use traditional Chinese characters, while China, Southeast Asia, and other regions use simplified Chinese characters. A mix of these two systems would likely indicate an attempt to convert from one to the other. Since we were observing the Taiwanese information space, the most likely direction of conversion would be from simplified characters (i.e., China, Southeast Asia, etc.) into traditional characters (i.e., Taiwan, Hong Kong, and Macau).
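A rough check for mixed scripts is to look for characters that exist only in the simplified set and characters that exist only in the traditional set within the same post. The two character sets below are a tiny hand-picked sample for illustration; a real check would need a full conversion table, such as the one maintained by the OpenCC project. The function names are hypothetical.

```python
# Tiny illustrative sample of character pairs (simplified / traditional).
SIMPLIFIED_ONLY = set("国这们时发说对湾")
TRADITIONAL_ONLY = set("國這們時發說對灣")


def script_profile(text):
    """Return the simplified-only and traditional-only characters found in text."""
    chars = set(text)
    return {
        "simplified": sorted(chars & SIMPLIFIED_ONLY),
        "traditional": sorted(chars & TRADITIONAL_ONLY),
    }


def is_mixed(text):
    """True when a text contains characters from both sample sets."""
    profile = script_profile(text)
    return bool(profile["simplified"]) and bool(profile["traditional"])


# Made-up sample strings.
samples = ["這是台灣", "这是台湾", "台灣的国家"]
for s in samples:
    print(s, is_mixed(s))

mixed_share = sum(is_mixed(s) for s in samples) / len(samples)
```

Because many characters are shared between the two systems, only characters unique to one set carry any signal, which is why the check ignores everything else.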

Content analysis

The posts by the inauthentic Facebook pages identified above were universally negative toward contemporary Taiwanese politicians and politics. Some focused on smearing DPP politicians or blamed the DPP government for problems in Taiwanese society, including COVID-19 testing policies, mysterious hepatitis infections in children, Chinese bans on Taiwanese products, and human trafficking from Taiwan to Cambodia. These attacks also appear in the political messaging of the opposition Chinese Nationalist Party (Kuomintang, or KMT), but they are consistent with CCP propaganda messaging as well.

Other posts pertained to international relations and sovereignty, including decrying visits by former US Secretary of State Mike Pompeo or then-Speaker of the US House of Representatives Nancy Pelosi to Taiwan. These messages were not observed in KMT messaging but were consistent with CCP information warfare objectives to generate concern about diplomatic isolation and fear that democratic allies would not assist Taiwan in the event of a Chinese invasion. When Speaker Pelosi visited, all domestic political parties issued separate statements welcoming the diplomatic breakthrough, as it was the first visit by a US Speaker of the House since 1997.

Other posts concerned Lee Ming-che, a Taiwanese human rights advocate whom the Chinese government controversially sentenced to five years in prison on charges of “subversion of state power” in 2017. The posts attempted to undermine public perceptions of him and promoted the idea that China had treated him well despite his supposedly “criminal” actions. Attacks on him, some of which accused his wife of having an affair, did not resonate strongly in Taiwan. When China released Lee from prison, he returned home in mid-April 2022; the Taiwanese public largely welcomed his return, while the traditionally China-leaning KMT and other opposition parties did not mention Lee’s case in their political messaging.

Impact and influence

In total, Facebook accounts distributed content from the analyzed pages to 1,302 Facebook groups, the vast majority of which were aimed at Taiwan. These groups had a combined membership of 22 million people, a figure that does not exclude double counting of users who belong to more than one group. Note that Taiwan has a population of 23 million. Judging by the names of these Facebook groups, about 8.5 percent of them appeared to cater for the Chinese diaspora in Southeast Asia.
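The figures above can be put in proportion with some simple arithmetic. The inputs are the numbers reported in this section; the variable names are illustrative, and the average group size is a derived figure that the report itself does not state.

```python
groups = 1302
combined_membership = 22_000_000   # sum of group sizes, users may be counted repeatedly
population = 23_000_000            # approximate population of Taiwan
diaspora_share = 0.085             # share of groups apparently aimed at the diaspora

diaspora_groups = round(groups * diaspora_share)
avg_members_per_group = combined_membership / groups
membership_to_population = combined_membership / population

print(diaspora_groups)                    # about 111 groups
print(round(avg_members_per_group))       # average group size
print(round(membership_to_population, 2)) # combined membership relative to population
```

A user who belongs to several groups is counted once per group, so the combined membership is an upper bound on unique accounts reached, not an estimate of them.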

It is important to note that reach is not a good measure of impact. Although potentially millions of Taiwanese could have seen the posts amplified by the inauthentic assets identified above, there is no way to know how many saw the posts, let alone were influenced by them. That said, in mid-September 2022, the Taiwanese Presidential Office was forced to rebut a false story that President Tsai Ing-wen and her deputy Lai Ching-te had a fight at the former’s birthday banquet. Fact-checkers at Taiwan FactCheck Center later identified the news as disinformation and sourced the content to the page “Diss Lingering” (Diss纏綿) and the account “Ju Bangwei” (鞠邦為), both of which appeared among the inauthentic assets comprising this study.

Conclusion

Facebook pages with similar inauthentic indicators to those detected in 2021 remained active in 2022. At the time of publication, however, only one Facebook page in our set is still active, with twelve now unavailable for unknown reasons, and five inactive since June 2022.

The accounts and pages comprising this research demonstrated an evolution in tactics. First, the account operating information was left blank. Although the stated administrator location is self-reported and checked by Facebook, it can still be faked. Not including any location at all leaves even less information with which to trace the operators, since when locations have been included, there is often overlap in the listed countries. Second, we observed cross-posting of screenshots from accounts with indicators of inauthenticity similar to those in MJIB’s 2021 study, but the originating platforms were different; no CK101 accounts were observed in 2022, for example, with Facebook and Twitter accounts serving as the primary source. Third, the Facebook accounts stopped using the hashtags and URLs that had been present in Dr. Shen’s and MJIB’s investigations, which could indicate a change in tactics intended to make their posts harder for investigators to find. URLs may also reduce the algorithmic ranking of a post, and therefore their exclusion may be a tactic to increase post rankings.

Based on these indicators of inauthenticity, we conclude that these accounts are likely engaged in influence operations. There was, however, insufficient evidence to make a confident attribution to any specific actor. The content supported some KMT interests but was more closely aligned with CCP objectives, specifically dividing Taiwanese society, undermining democratic values, and reducing antipathy toward China.

Feng-Kai Lin is an analyst at Doublethink Lab.

Cite this case study:

Feng-Kai Lin, “Anti-Taiwan influence operation shows shift in tactics,” Digital Forensic Research Lab (DFRLab), March 30, 2023, https://dfrlab.org/2023/03/30/anti-taiwan-influence-operation-shows-shift-in-tactics.