#BotSpot: How Bot-Makers Decorate Bots

Tracing the mind games of those who create fake accounts

When the world’s social media giants meet to discuss the most interesting jobs available online, creating fake accounts is not on the list. Fake social media profiles are the cannon-fodder of the propaganda wars. Automated and regimented, fake profiles can be deployed in tens of thousands within minutes and taken down almost as quickly.

But somebody has to create that cannon-fodder. As Twitter has grown more adept at preventing automated account creation, a whole industry has grown up, employing people to create, name, register, and verify fake accounts.

Judging by the accounts they make, those people range from the starstruck to the hyper-creative to the very, very bored. Some go to great lengths to give their throwaway accounts personality, and even a family. Others, frankly, can’t be bothered.

These are some of our favorite bots, gathered here to illustrate the different ways in which bot makers try to make their accounts stand out from the mass.

Girls and football

Many botnets use profile pictures of famous people, especially famous women. Bot creators seem to assume that more men than women use Twitter, that men are more likely to pay attention to a beautiful woman, and that, when not staring at women, men watch football. Marketing experts are unlikely to disagree.

Many botnets seem primarily made up of beautiful women. One network, active in countries in the Persian Gulf in September, used profile pictures of actresses Cameron Diaz, Scarlett Johansson, Keira Knightley, and others.

Actress Emma Watson appears to be a particular favorite, with her photo cropping up on bots in the Persian Gulf and the Russian-speaking world.

Another bot stole its avatar picture from German Instagram celebrity Lorena Rae, and used it to amplify far-right messaging around the Charlottesville riots in the United States.

A botnet that attacked @DFRLab’s colleagues in July and August used the photos of dozens of young women, but shared them out among hundreds of accounts, presumably in the hope that nobody would notice the same woman had five different names.

A botnet @DFRLab observed in Qatar in May took a different approach. Rather than actresses, it featured soccer players, including David Beckham and Lionel Messi (though not Messi’s great rival, Cristiano Ronaldo).

The same network, marked by simultaneous tweeting of the same pro-Qatar tweet, also used glamorous men from the world of film, such as George Clooney, Frank Sinatra, and Johnny Depp.

Given the Gulf context, in which genuine, home-grown accounts seldom feature glamorous women showing their hair, this appears to be a case of bot creators adapting to local styles, and shaping their accounts accordingly.

A further set of accounts used an image of a small girl, apparently taken from a collection of “cute baby images for WhatsApp”.

Dozens of accounts used this image, retweeting the same post almost simultaneously, as this screenshot of its retweets makes clear.

All these accounts were created in March 2017 and have identical biographies; many also give a location in Mecca.

Pink and blue

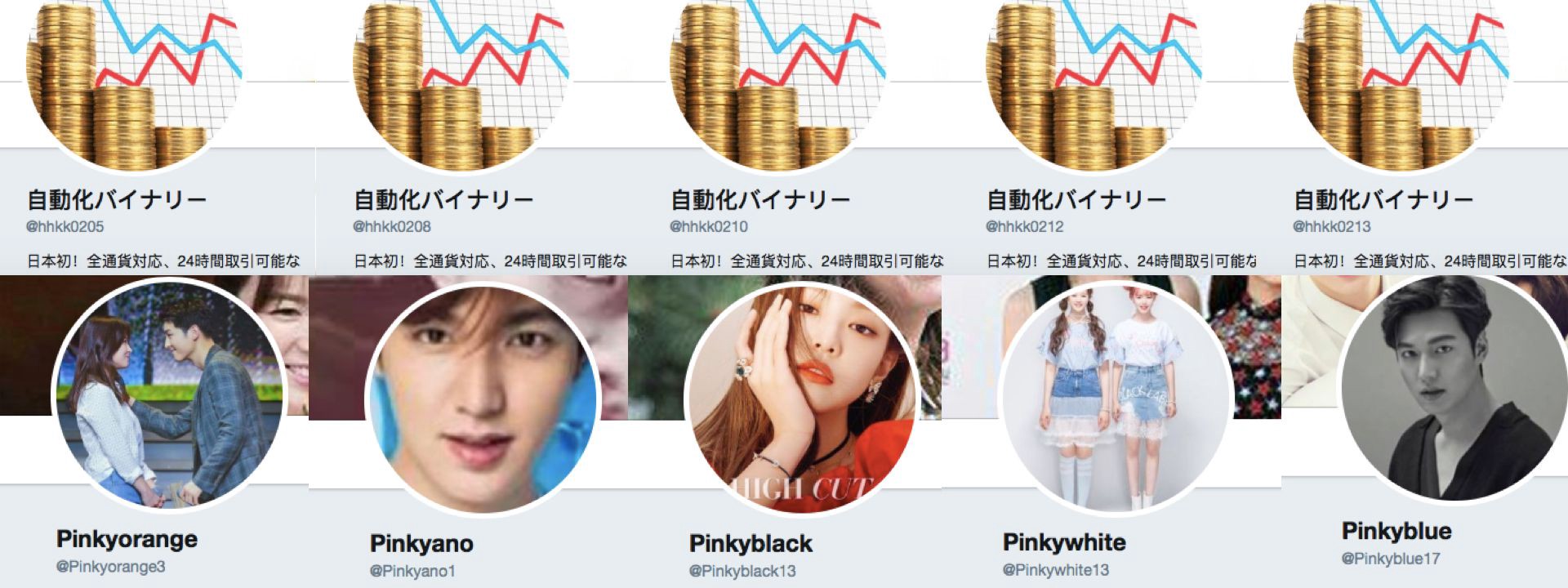

Some bot makers appear to have been more creative, building the identities of their accounts around a particular theme. One intriguing set, which also amplified traffic in the Gulf, was based around names starting with “Pinky”, and featured Korean pop profile images.

Sticking with the color theme, some of the accounts amplifying the same traffic called themselves “blue”, with handles based on a numerical sequence, but the same screen name.

Some bot creators lack even this degree of creativity. One small botnet we identified in May featured accounts whose screen names and handles appeared to have been generated by drumming the creator’s fingers over the keyboard. One such account’s handle began asdasda, together with an eight-digit number which was probably randomly generated; its screen name was asd asdasd. A, S and D are the first three keys on the middle row of a QWERTY keyboard; the image is of a bot user so bored they are simply rattling the keys.

Another bot in the same network had the Cyrillic screen name прврпр варвапва, which does not make a recognizable (or even pronounceable) name. Its handle was @S1UjVQTtIq2OOZc, a randomly-generated string.

The letters В, А, П, and Р are adjacent on the middle row of the standard Cyrillic keyboard (the Russian В being in the position of D on a QWERTY keyboard). The creator seems to have randomly generated the handle and done the bare minimum to give it a screen name.
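The keyboard-rattling pattern described above can be checked mechanically. The following is a minimal sketch, not a method @DFRLab describes using: it assumes the standard QWERTY and Russian ЙЦУКЕН middle rows, and the function name and thresholds (`max_span`, `min_letters`) are invented for illustration. It flags a name when every letter comes from a short run of neighboring keys on one row.

```python
# Letter keys of the middle row, left to right (assumed standard layouts).
MIDDLE_ROWS = {
    "qwerty": "asdfghjkl",
    "jcuken": "фывапролджэ",
}


def looks_like_key_mash(name: str, max_span: int = 3, min_letters: int = 6) -> bool:
    """Return True if all letters sit within a few adjacent keys of one row.

    max_span and min_letters are illustrative thresholds, not tuned values.
    """
    letters = [ch for ch in name.lower() if ch.isalpha()]
    if len(letters) < min_letters:
        return False
    for row in MIDDLE_ROWS.values():
        positions = [row.find(ch) for ch in letters]
        if min(positions) < 0:
            continue  # at least one letter is not on this row
        if max(positions) - min(positions) <= max_span:
            return True
    return False


# Screen names quoted in the article, plus one ordinary name for contrast.
print(looks_like_key_mash("asd asdasd"))
print(looks_like_key_mash("прврпр варвапва"))
print(looks_like_key_mash("Emma Watson"))
```

A check like this is crude: short real words typed from neighboring keys would also trip it, so it is best treated as a prompt for a closer look rather than as proof.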

These accounts, especially the ones which lack even a profile picture, suggest that the person who created them was singularly bored, and decided to stick with a minimalist recipe that worked, as long as nobody looked.

Humans copying bots, copying humans

Some bot creators appear to have gone for mass-production, to such an extent that they look like humans imitating automated creation software, which is creating imitation humans. Bot studies are full of this sort of paradox.

For example, one Japanese-language net features a very large number of accounts, all with sequential usernames, but with varying clusters of accounts using different avatar images.

The numerical sequence of still-active accounts starts with @vvjdthcs501, which had not yet tweeted as of December 12. @vvjdthcs506 was in the same position, while @vvjdthcs502, and similar accounts whose handles ended in 100, 200, 300 and 400, had all been suspended.

Suggesting the scale of this botnet, accounts whose handles ended in 999, 1234 and 1999 had also been suspended.

Some, whose handles ended in numbers between 500 and 800, shared this avatar and bio, with or without a background image.

Others, whose handles were above 1100, shared a different avatar.

Yet a third set, whose serial numbers ran between 1880 and 1902, used this set of avatar and background.

Many of the accounts in this series appear not to have been activated yet: they have a primitive screen name (the Japanese hiragana sound for “a”), no avatar or background, and no posts. This suggests a dormant capability, perhaps a backup as the active accounts are taken offline. Their serial numbers run all the way up to 2099.

The network largely follows verified, blue-check accounts, a technique often used by bot herders to give their accounts the appearance of humanity.
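Serial-numbered handles of this kind are easy to group once a list of handles is in hand. The sketch below is an illustration only: the function name, the regular expression, and the `min_size` threshold are assumptions, not part of any tool mentioned in this article. It splits each handle into a letter prefix and a trailing number, then keeps the prefixes shared by several accounts.

```python
import re
from collections import defaultdict

# A letter/underscore prefix followed by a trailing run of digits.
SERIAL_HANDLE = re.compile(r"^@?([A-Za-z_]+)(\d+)$")


def group_serial_handles(handles, min_size=3):
    """Map each shared prefix to the sorted serial numbers seen with it."""
    groups = defaultdict(list)
    for handle in handles:
        match = SERIAL_HANDLE.match(handle)
        if match:
            groups[match.group(1).lower()].append(int(match.group(2)))
    return {
        prefix: sorted(numbers)
        for prefix, numbers in groups.items()
        if len(numbers) >= min_size
    }


# Handles quoted in the article; the last one has no serial suffix.
sample = ["@vvjdthcs501", "@vvjdthcs506", "@vvjdthcs502", "@S1UjVQTtIq2OOZc"]
print(group_serial_handles(sample))
```

Gaps in the resulting number sequence can then be compared against suspended or dormant accounts by hand.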

This is not the only botnet to behave in this way. An even more obvious one is built with a serial starting @hhkk, then a four-digit number. These accounts seem to be divided into clusters sharing the same avatar, bio and background, as the following examples indicate.

Yet a third series took a similar approach, but used whisky-related imagery.

All these appear to be semi-automated, production-line creations. In each series, some accounts had been suspended, some restricted, and some left untouched, indicating that they had been created carefully enough to pass Twitter’s initial barriers.

They are obvious to the eye, however, as when the @hhkk network all liked the same tweet at the same time.
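Simultaneous activity of this sort can also be surfaced from timestamps. The following sketch assumes a list of (account, time) pairs for likes or retweets of a single tweet has already been collected by some means; the function name, the ten-second window, and the sample data are all invented for illustration. It finds the largest group of accounts that acted within one window.

```python
from datetime import datetime, timedelta


def largest_burst(events, window=timedelta(seconds=10)):
    """Return the accounts in the biggest cluster of events inside `window`.

    events: iterable of (account_name, datetime) pairs for one tweet.
    """
    ordered = sorted(events, key=lambda event: event[1])
    best = []
    start = 0
    for end in range(len(ordered)):
        # Shrink the window from the left until it spans at most `window`.
        while ordered[end][1] - ordered[start][1] > window:
            start += 1
        if end - start + 1 > len(best):
            best = ordered[start:end + 1]
    return [account for account, _ in best]


# Invented sample data: three accounts act within seconds, one much later.
base = datetime(2017, 1, 1, 12, 0, 0)
sample = [
    ("account_a", base),
    ("account_b", base + timedelta(seconds=2)),
    ("account_c", base + timedelta(seconds=3)),
    ("account_d", base + timedelta(minutes=5)),
]
print(largest_burst(sample))
```

A burst alone is not conclusive, since genuine users also pile onto popular posts, but combined with shared avatars or serial handles it strengthens the case.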

Works of art

Occasionally, botnets appear like works of art, and their creators appear to have devoted significant time to making them. One botnet @DFRLab uncovered amplifying posts on South African politics appeared especially high-quality. Many of the accounts in the network were copycats of apparently genuine ones, but swapped the letters “i” and “o” for “l” and “0” in the usernames.

Many of them were created in April 2014.

Some of the apparent fakes had creation dates earlier than the accounts they imitated, but still exchanged an “i” for an “l”, or an “o” for a “0”, in the handle, which suggests these bot accounts originally had different names.
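The letter-swapping trick can be undone by reducing each handle to a common "skeleton" and looking for collisions. This is a minimal sketch under stated assumptions: it covers only the two substitutions mentioned above, the function names are hypothetical, and the sample handles are invented rather than taken from the network.

```python
from collections import defaultdict

# Map each look-alike character back to the letter it imitates.
LOOKALIKES = str.maketrans({"l": "i", "0": "o"})


def skeleton(handle: str) -> str:
    """Lowercase a handle, drop the leading @, and undo look-alike swaps."""
    return handle.lstrip("@").lower().translate(LOOKALIKES)


def find_lookalikes(handles):
    """Return groups of distinct handles that share one skeleton."""
    by_skeleton = defaultdict(set)
    for handle in handles:
        by_skeleton[skeleton(handle)].add(handle.lstrip("@").lower())
    return [sorted(group) for group in by_skeleton.values() if len(group) > 1]


# Invented handles for illustration only.
print(find_lookalikes(["@civic_news", "@clvlc_news", "@other"]))
```

Because genuine handles containing "l" or "0" are rewritten too, a collision only shows that two handles are confusable; deciding which one is the copy still means comparing creation dates and content.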

This botnet was remarkable for its craftsmanship, and the way in which its constituent accounts only posted at low rates — apparently in a bid to evade automated detection.

Our favorite botnet took a similar approach, but with a fatal flaw. Its creation appears to have been automated to copy and paste the account biographies of real users, giving it the appearance of credibility, but whoever set up the accounts did not cross-reference the bios with the names and images.

For example, @hehDelanababnor’s biography reads, “Micah Challenge is a global campaign of Christians speaking out against poverty & injustice in support of the MDGs.” It seems to be copied from an account called Micah Challenge Aust, handle @micaheaxa:

Curiously, a number of other accounts which appear botlike have the same bio, suggesting that this may be a popular approach among bot makers.

Other bios from accounts in the network, which were suspended before @DFRLab could archive them, included a comments editor at the Guardian newspaper; a sports journalist for the New York Times; the largest media firm in Africa; a student at Alcorn State University; a luxurious island sanctuary; and a world expert on silver.

One which we did manage to archive is the charming account Erik Young, a shy and bearded young man who describes himself as “just a woman who loves Jesus”.

Bot creators have many skills. It may be that a sense of humor is one of them.

Follow along for more in-depth analysis from our #DigitalSherlocks.