Twitter’s Ethiopian interventions may not have worked

Twitter’s decision to deactivate trending topics in Ethiopia did not reduce the volume or toxicity of tweets about the civil war.

BANNER: A man holds the Ethiopian flag in Addis Ababa’s Meskel Square during a pro-government rally to denounce what the organizers say is the Tigray People’s Liberation Front (TPLF) and Western countries’ interference in the internal affairs of the country, November 7, 2021. (Source: REUTERS/Tiska Negeri)

This piece first appeared in Tech Policy Press. This piece was coauthored by Megan A. Brown, a research scientist at the New York University Center for Social Media and Politics.

On November 6, 2021, Twitter announced it was halting trending topics in Ethiopia due to the ongoing threat of violence in the country, which has been embroiled in a year-long civil war. The escalating conflict, originally between Ethiopia’s northern Tigray region and the national government, has left thousands dead and displaced millions. Previous reports, and a trove of documents leaked by Facebook whistleblower Frances Haugen, have illustrated how social media is fueling ethnic-based violence in Ethiopia.

Twitter Trends have, in fact, played a significant role in the communications strategies of both parties since fighting broke out in November 2020. Although the predominant language in Ethiopia is Amharic, English-language tweets about the war have frequently trended in-country. Groups from across the political spectrum created click-to-tweet campaigns to ensure that hashtags such as #TigrayGenocide and #NoMore trended. These campaigns primarily targeted diaspora members, because telecommunications access in Tigray was initially restricted. Similar operations have also successfully manipulated Twitter Trends in Nigeria and Kenya.

Taken together, this evidence suggests removing Trends in Ethiopia could make a difference and “reduce the risks of coordination that could incite violence or cause harm,” as Twitter stated. However, our investigation into English-language conversations on Twitter about the conflict before and after Trends were removed found no discernible change in the volume of tweets or the prevalence of toxic and threatening speech, meaning the Twitter intervention may not have worked as intended.

How we did our research

The NYU Center for Social Media and Politics and the DFRLab collected tweets posted from November 1 through November 8, 2021, that mentioned various popular hashtags related to the conflict, including #nomore and #tigray.
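The collection step amounts to a hashtag filter over a fixed date window. The Python sketch below is a minimal illustration, not the collection code the authors used: `TRACKED_HASHTAGS` is a hypothetical set containing only the two hashtags named above (the full list is not given here), matching is case-insensitive and exact, and the window bounds are assumed to be in UTC.

```python
import re
from datetime import datetime, timezone

# Hypothetical subset of the tracked hashtags: only the two named in the text.
TRACKED_HASHTAGS = {"nomore", "tigray"}

# Collection window, November 1 through November 8, 2021 (UTC assumed).
WINDOW_START = datetime(2021, 11, 1, tzinfo=timezone.utc)
WINDOW_END = datetime(2021, 11, 9, tzinfo=timezone.utc)  # exclusive bound

# A hashtag is "#" followed by one or more word characters.
HASHTAG_RE = re.compile(r"#(\w+)")


def hashtags_in(text: str) -> set[str]:
    """Return the lowercased hashtags that appear in a tweet's text."""
    return {tag.lower() for tag in HASHTAG_RE.findall(text)}


def in_collection(text: str, created_at: datetime) -> bool:
    """True if a tweet was posted inside the window and uses a tracked hashtag."""
    in_window = WINDOW_START <= created_at < WINDOW_END
    return in_window and bool(hashtags_in(text) & TRACKED_HASHTAGS)
```

Because matching is exact, a longer hashtag such as #TigrayGenocide would need its own entry in `TRACKED_HASHTAGS`; it is not caught by the `tigray` entry.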

Although there are several potential ways in which Twitter’s intervention could work, our investigation focused on two possibilities based on the platform’s hope that removing Trends would reduce coordination leading to incitement of violence:

· An overall reduction in tweets about the conflict, because individuals would not be able to find new hashtags via trending topics;

· A reduction in the level of toxicity and threat in conversation about the conflict.

To measure the former, we counted the hourly number of tweets using hashtags about the conflict before and after the intervention. To measure the latter, we labeled all tweets for toxicity and threat using Perspective, an open source API created by Google’s Jigsaw unit and its Counter Abuse Technology team to enable the classification of harmful speech online. Perspective defines severe toxicity as “very hateful, aggressive, disrespectful” speech, and threats as describing “an intention to inflict pain, injury, or violence against an individual or group.”
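For readers unfamiliar with Perspective, scoring a tweet is a single JSON request to its `comments:analyze` method, which returns a probability for each requested attribute. The Python sketch below is illustrative only and is not the authors' pipeline. It assumes the `SEVERE_TOXICITY` and `THREAT` attributes (chosen to match the two definitions quoted above), English as the request language, and a caller-supplied API key; batching, rate limiting, and retries are omitted.

```python
import json
import urllib.request

# Perspective API endpoint for scoring a single comment.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"

# Assumed attributes, matching the definitions of severe toxicity and threat.
ATTRIBUTES = ("SEVERE_TOXICITY", "THREAT")


def build_request(text: str) -> dict:
    """Build the JSON body for one comments:analyze call on an English tweet."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {attr: {} for attr in ATTRIBUTES},
    }


def parse_scores(response: dict) -> dict[str, float]:
    """Extract the summary probability for each requested attribute."""
    scores = response["attributeScores"]
    return {attr: scores[attr]["summaryScore"]["value"] for attr in ATTRIBUTES}


def score_tweet(text: str, api_key: str) -> dict[str, float]:
    """Send one tweet to Perspective and return its attribute probabilities.

    Requires a valid API key and network access.
    """
    req = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return parse_scores(json.load(resp))
```

Keeping request construction and response parsing separate from the network call makes both easy to check offline against a saved response.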

Given our reliance on Perspective, however, it was not possible to replicate these analyses in Amharic or in Tigrinya, the language of Tigray. The shortcomings of automatic classification for languages spoken outside the US and Western Europe are well documented and pose a significant challenge to research.

Results

Our research found no evidence for either of the predicted possibilities.

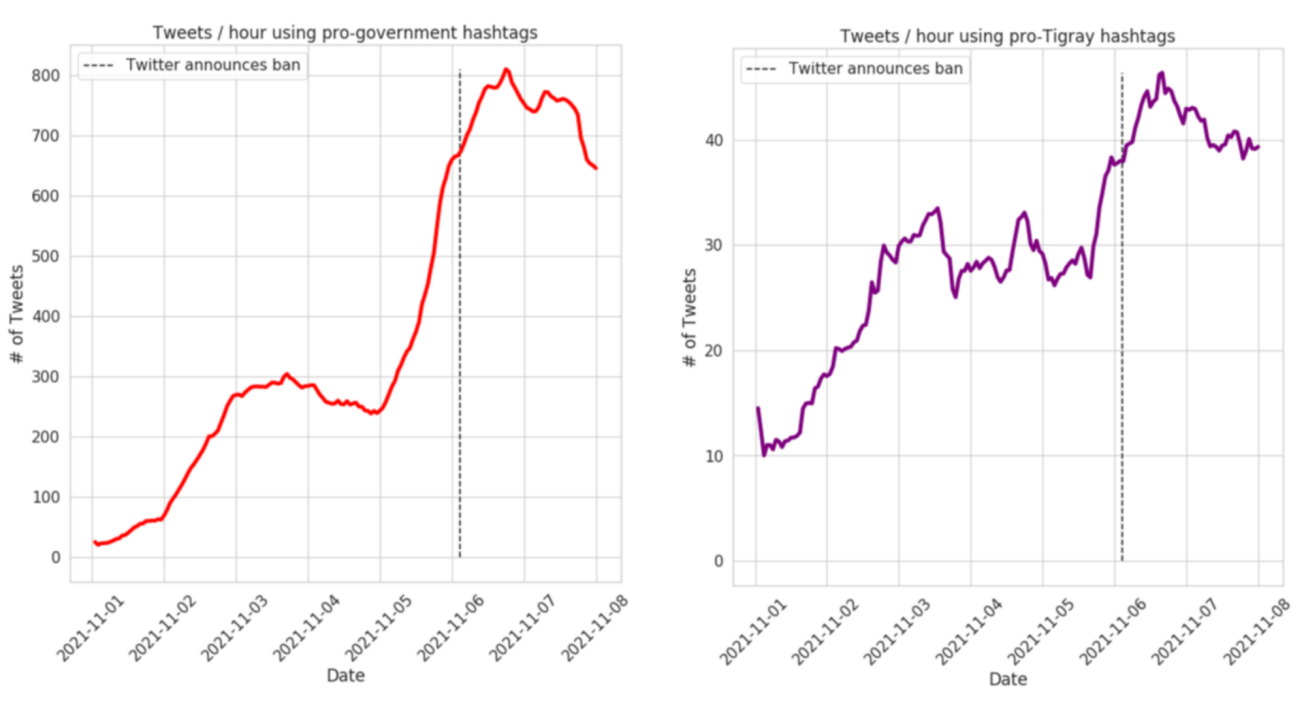

We first considered whether the overall number of tweets about the conflict went down following Twitter’s intervention. The two charts above show the total number of tweets per hour, split by pro-government hashtags (left) and pro-Tigray hashtags (right). Twitter’s announcement of the moratorium on trending topics is marked with a dotted gray line. Notably, there were far fewer tweets using pro-Tigray hashtags than pro-government hashtags both before and after the removal of Trends.
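The per-hour series behind these charts can be built by flooring each tweet's timestamp to the hour and counting within each hashtag group. The Python sketch below is a hypothetical illustration: it assumes tweets have already been labeled `"pro-government"` or `"pro-Tigray"` (the labeling rule is not described here), and, because the article gives only the date of the announcement, `ANNOUNCEMENT` is a placeholder set to midnight UTC on November 6, 2021.

```python
from collections import Counter
from datetime import datetime, timezone

# Placeholder cutoff: the article gives only the date of the announcement.
ANNOUNCEMENT = datetime(2021, 11, 6, tzinfo=timezone.utc)


def hourly_counts(tweets):
    """Count tweets per (group, hour); each tweet is a (group, created_at) pair."""
    counts = Counter()
    for group, created_at in tweets:
        # Floor the timestamp to the start of its hour.
        hour = created_at.replace(minute=0, second=0, microsecond=0)
        counts[(group, hour)] += 1
    return counts


def _mean(values):
    """Arithmetic mean, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def mean_before_after(counts, group):
    """Mean hourly count for one group before and after the announcement.

    Only hours containing at least one tweet are included; a fuller analysis
    would fill empty hours with zeros.
    """
    before = [n for (g, hour), n in counts.items() if g == group and hour < ANNOUNCEMENT]
    after = [n for (g, hour), n in counts.items() if g == group and hour >= ANNOUNCEMENT]
    return _mean(before), _mean(after)
```

A before/after comparison of means is the simplest possible check. It ignores any trend already under way before the cutoff, which a segmented time-series model would account for.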

The data show no reduction in the hourly volume of tweets using conflict-related hashtags on either side of the political spectrum. In fact, the volume had been increasing before the intervention and continued to increase after it. (We performed the same analysis on retweets, replies, and favorites, and it yielded similar results.) It is possible that the rate of increase would have been even greater without Twitter’s intervention; based on the observational data, though, there is no reason to believe that the intervention led to any decrease in the number of tweets about the conflict. It is also possible that the intervention provoked a backlash from users who were upset that Trends had been removed in the region. Indeed, in the aftermath of the announcement, Twitter was the fifth most-mentioned account in pro-government tweets and the eighth most-mentioned account in pro-Tigray tweets.
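Ranking the most-mentioned accounts, as in the last sentence above, reduces to counting @-handles across a group's tweets. This Python sketch is an assumption about how such a ranking could be computed, not the authors' method. It extracts handles with a simple regular expression (which will also pick up the domain part of email addresses), lowercases them, counts each account at most once per tweet, and does not break ties.

```python
import re
from collections import Counter

# Twitter handles are 1 to 15 characters: letters, digits, or underscore.
MENTION_RE = re.compile(r"@([A-Za-z0-9_]{1,15})")


def mention_ranking(texts):
    """Rank accounts by the number of tweets that mention them.

    Handles are lowercased and each account is counted at most once per tweet.
    """
    counts = Counter()
    for text in texts:
        counts.update({handle.lower() for handle in MENTION_RE.findall(text)})
    return counts.most_common()


def rank_of(account, ranking):
    """1-based position of an account in the ranking, or None if absent."""
    for position, (name, _) in enumerate(ranking, start=1):
        if name == account.lower():
            return position
    return None
```

Running `mention_ranking` separately on pro-government and pro-Tigray tweets posted after the announcement, then calling `rank_of("Twitter", ...)` on each result, gives positions comparable to those reported above.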

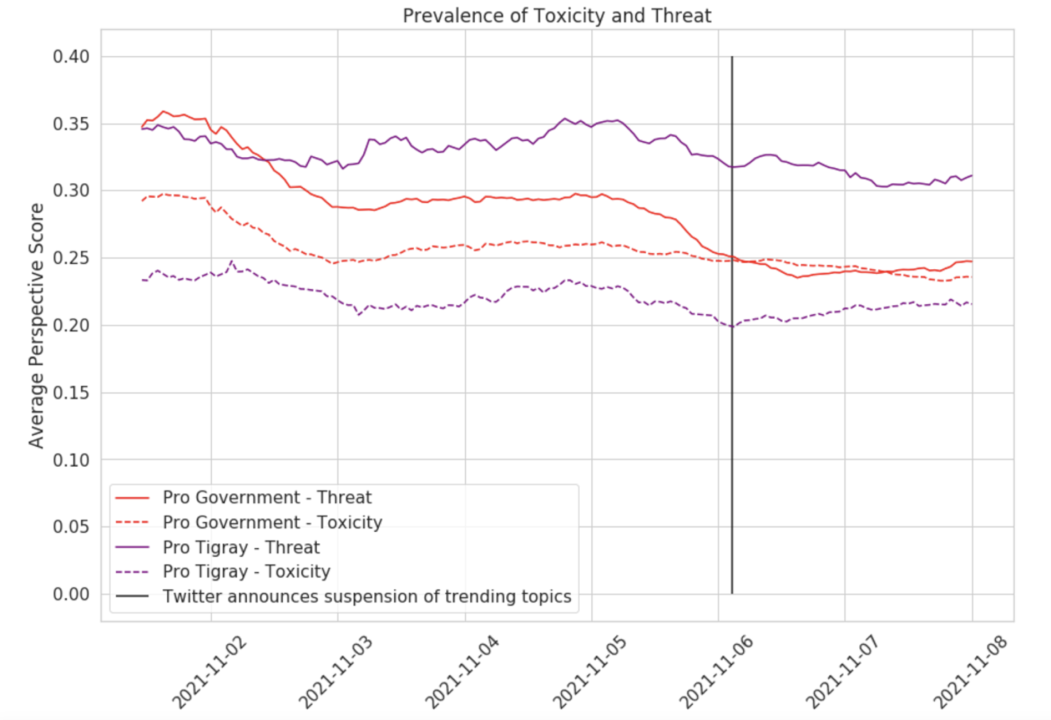

A second analysis, using the Perspective API, shows the average probability, by hour, that tweets contain toxic or threatening content, separated into pro-government (red) and pro-Tigray (purple) tweets.

The data show an initial decrease in the prevalence of harmful content between November 2 and 3, before Trends were removed. This could potentially align with a Twitter intervention that was not made public. But after Twitter announced its intervention, there was no discernible decrease in the prevalence of threatening or toxic content.
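The hourly averages plotted above can be sketched as grouping Perspective scores by hashtag group and by the hour in which each tweet was posted, then taking the mean of each bucket. The Python sketch below is illustrative, not the authors' code: it assumes each tweet has already been scored (for example with a `SEVERE_TOXICITY` or `THREAT` probability) and labeled with a group, and the `(group, created_at, scores)` input layout is a hypothetical choice.

```python
from collections import defaultdict
from collections.abc import Iterable
from datetime import datetime
from statistics import fmean


def hourly_mean_scores(
    scored_tweets: Iterable[tuple[str, datetime, dict[str, float]]],
    attribute: str,
) -> dict[tuple[str, datetime], float]:
    """Average one Perspective probability per (group, hour).

    Each input item is (group, created_at, scores), where scores maps an
    attribute name such as "THREAT" to a probability between 0 and 1.
    """
    buckets = defaultdict(list)
    for group, created_at, scores in scored_tweets:
        # Floor the timestamp to the start of its hour.
        hour = created_at.replace(minute=0, second=0, microsecond=0)
        buckets[(group, hour)].append(scores[attribute])
    # Sort by (group, hour) so each group's series comes out in time order.
    return {key: fmean(values) for key, values in sorted(buckets.items())}
```

Averaging probabilities, rather than counting tweets above a fixed cutoff, avoids choosing an arbitrary threshold for what counts as toxic.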

It is entirely possible that the Twitter intervention had minimal effects because many of the users tweeting about the conflict in English, using pre-determined hashtags aimed at the diaspora, were not based in Ethiopia, and so would not have been affected by the removal of Trends there.

Without reliable information on the effects of interventions made by social media platforms, it is impossible to determine whether such policies have any tangible impact. While further research should replicate these findings in local languages, we consider these results a preliminary indication that the intervention may not have had the impact Twitter intended.

Our open-source data set is available on GitHub for anyone who wants to examine the data further.

Cite this case study:

Megan A. Brown and Tessa Knight, “Twitter’s Ethiopian interventions may not have worked,” Digital Forensic Research Lab (DFRLab), January 13, 2022, https://medium.com/dfrlab/twitters-ethiopian-interventions-may-not-have-worked-f7c433d7a8d8.