Inauthentic campaign amplifying Islamophobic content targeting Canadians

Network used AI-generated photos and inauthentic accounts to amplify an Islamophobic account claiming to be a new Canadian nonprofit


The DFRLab identified a suspicious network operating across multiple social media platforms to amplify a recently created account named United Citizens for Canada (UCC). The network, which included at least fifty accounts on Facebook, eighteen on Instagram, and more than one hundred on X, boosted anti-Muslim and Islamophobic narratives directed at Canadian audiences. Accounts on both Facebook and X used AI-generated profile pictures, posted identical or similar replies and comments, and possibly hijacked existing accounts. On X, the network also relied on alphanumeric handles and created accounts on similar dates. Additionally, some accounts tried to bring UCC’s content to the attention of Canadian media and journalists.

Describing the campaign as “cross-internet inauthentic behavior,” Meta confirmed to the DFRLab that it had “taken initial action to remove these assets” following its own internal review. Meta added it is continuing to investigate for any additional violations.

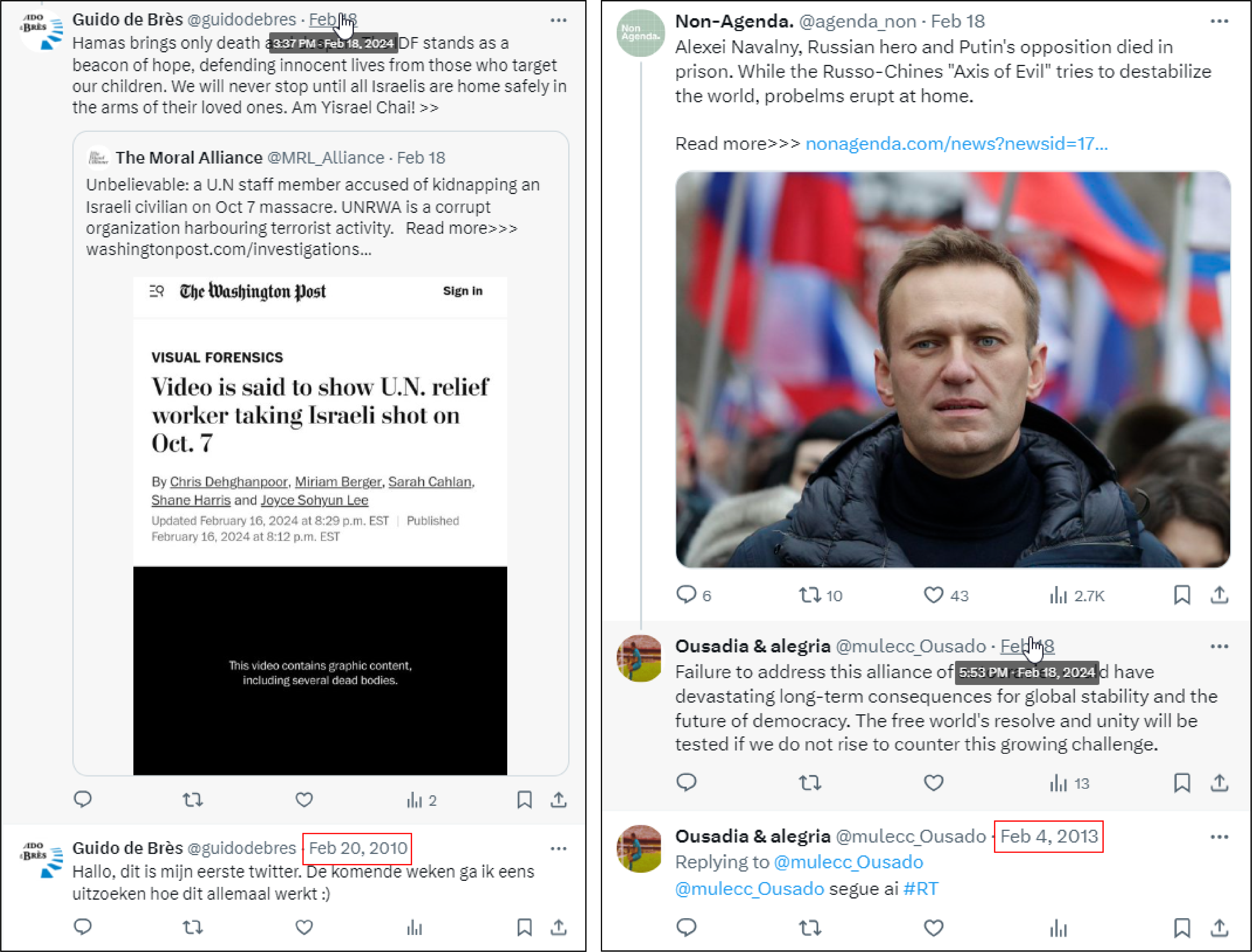

The DFRLab discovered these accounts while analyzing another suspicious network we previously investigated, consisting of more than 130 accounts on X that amplified Israel’s allegations against the United Nations Relief and Works Agency (UNRWA) in a similar manner, targeting politicians in the United States. As accounts in this network continued their suspicious activities on X, some reposted and liked posts by the United Citizens for Canada (UCC) X account, @Uni_Citizens_Ca. The majority of engagements with the UCC account came from similarly suspicious accounts that reposted and replied to UCC’s content. Reviewing UCC’s other social media profiles also revealed the presence of Facebook and Instagram accounts boosting UCC content. The network targeting UNRWA was first reported by open-source researcher Marc Owen Jones in early February 2024, with Fake Reporter and Haaretz conducting subsequent investigations.

The use of inauthentic coordination to spread hate speech comes at a time when “acts of harassment, intimidation, violence and incitement based on religion or belief” have reached “alarming levels” across the world, as the United Nations noted on March 15. In Canada, last November, a Senate human rights committee described Islamophobia as “a daily reality for many Muslims.” Moreover, Canada’s Anti-Racism Strategy states that Islamophobia “can unfairly lead to viewing and treating Muslims as a greater security threat on an institutional, systemic and societal level.”

A ‘vigilant’ non-profit

United Citizens for Canada described itself as a “vigilant” non-profit organization created by Canadian citizens worried about the “possible future Canada is heading.” Prior to being deplatformed by Meta, the page stated that it aimed to identify and address “the increasing presence and support for anti-liberal, aggressive, and violent Islamic movements and organizations in Canada,” which, according to the group, is increasing because of Canada’s liberal immigration policy. UCC promoted such views through its X account and now-defunct Facebook page, both created on February 18, 2024, as well as its YouTube account and now-removed Instagram page. It also maintains a website that was registered on February 26, 2024. UCC’s Instagram account was first created in August 2019 but was likely repurposed from its original use, as it previously had five other usernames at various points in time.

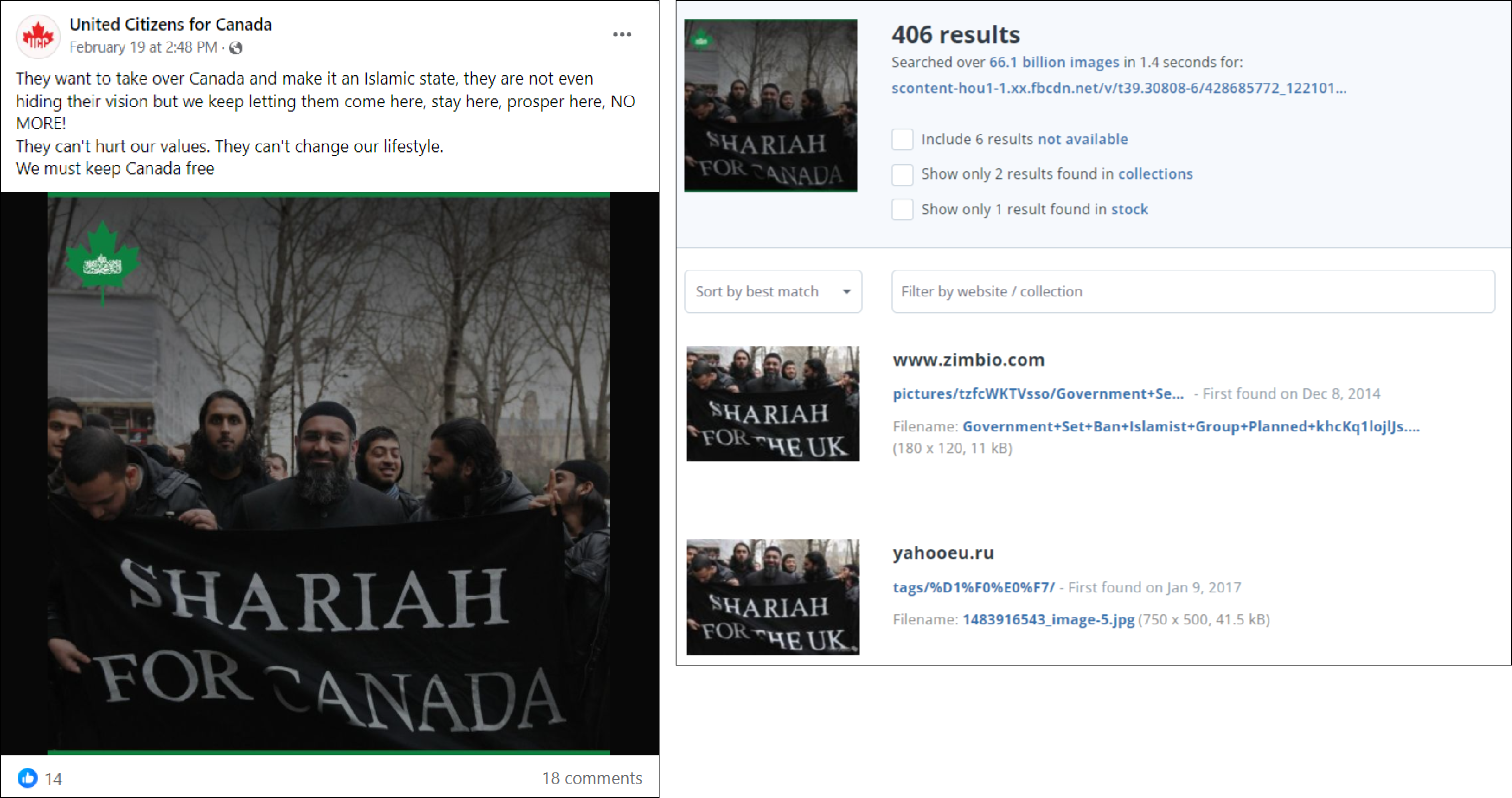

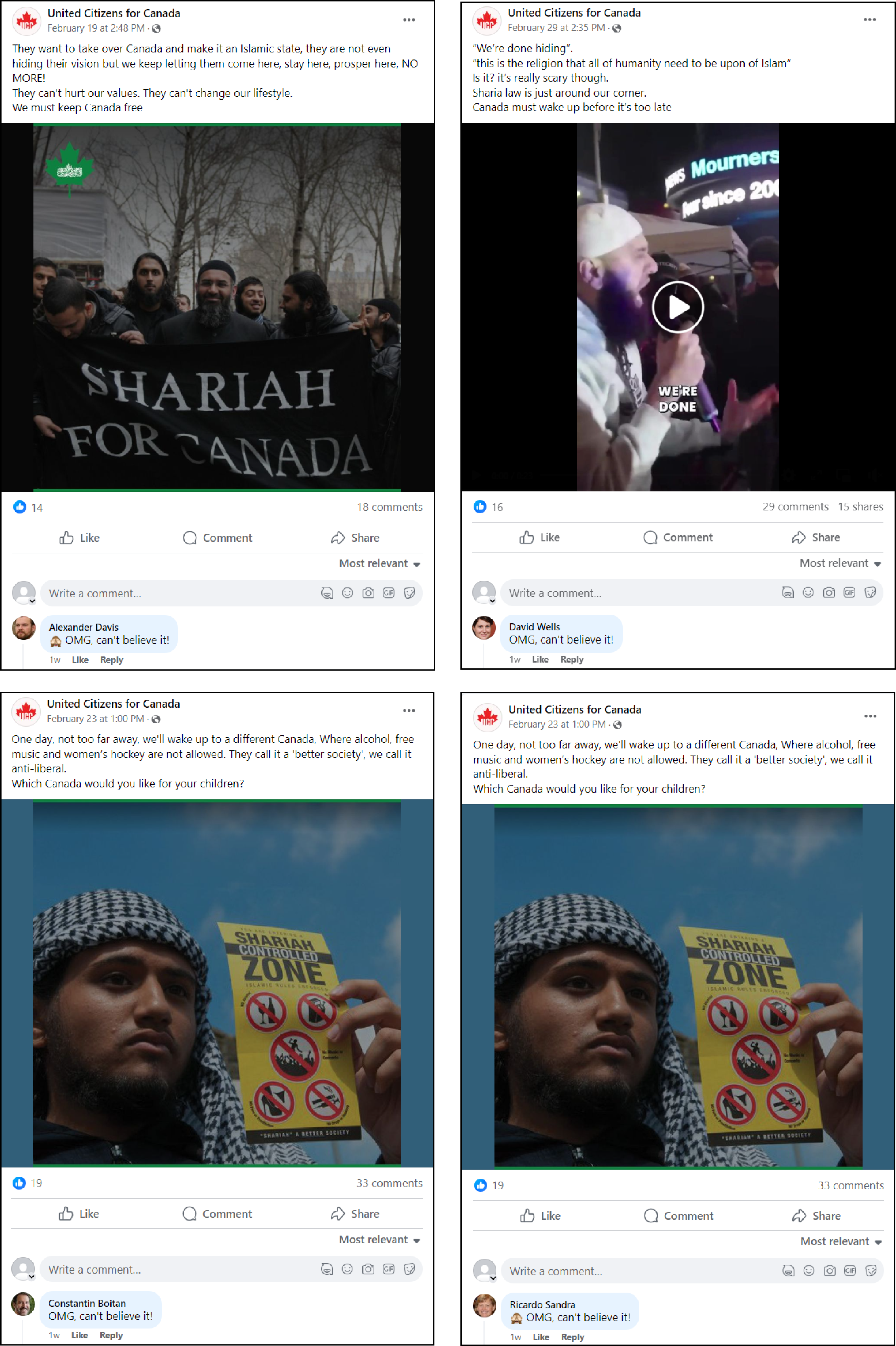

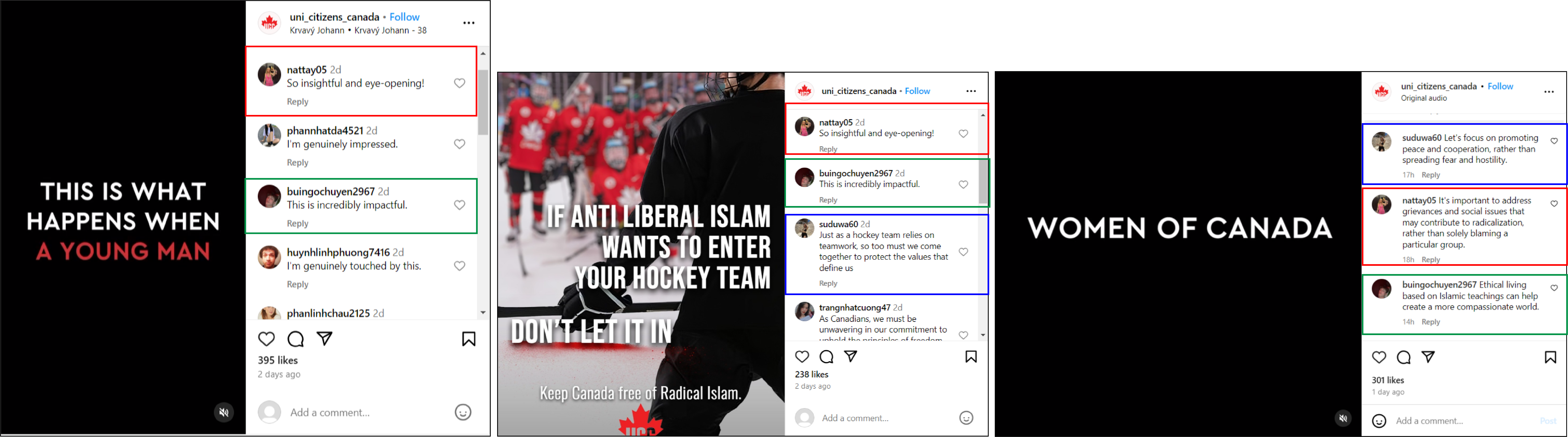

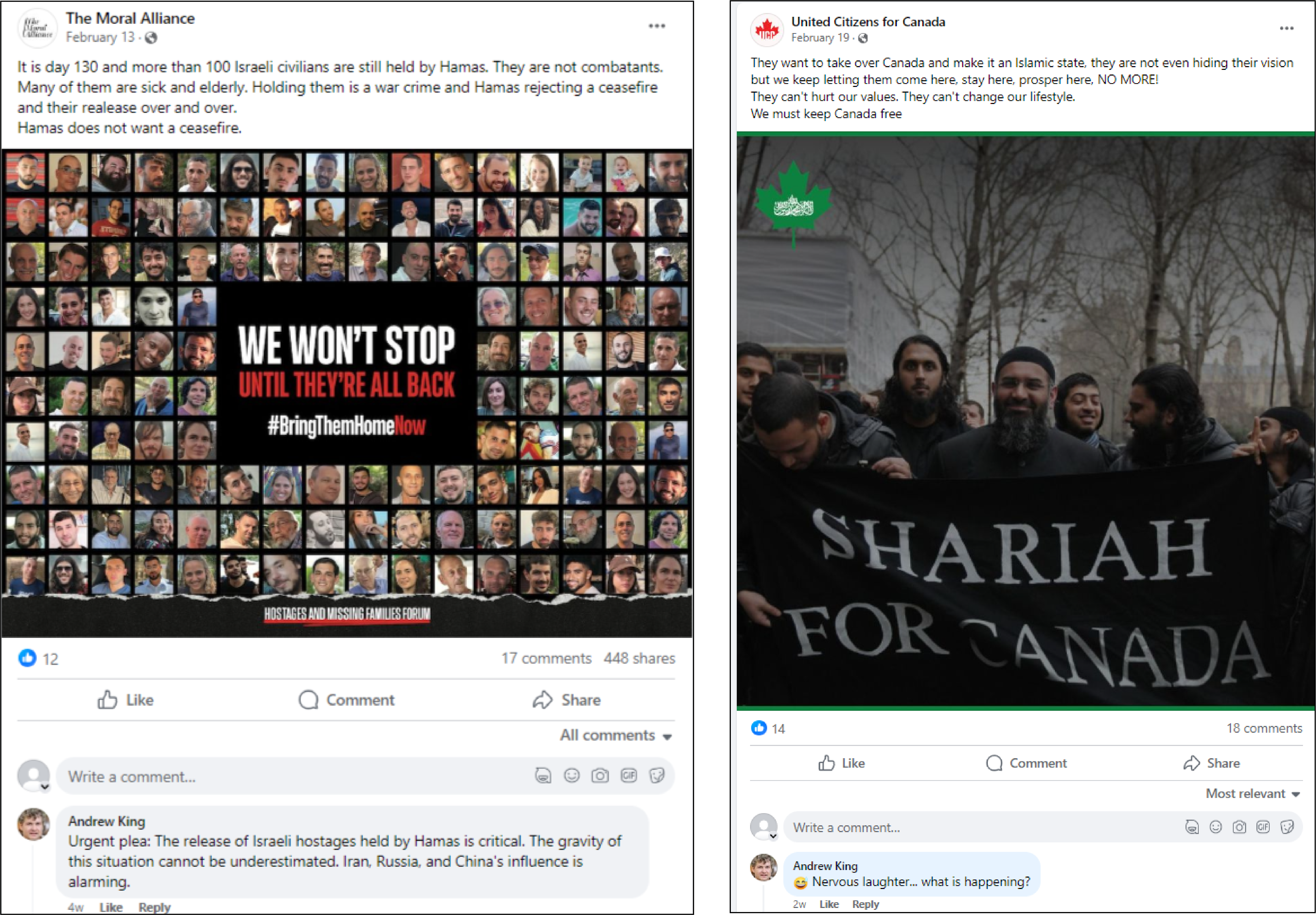

The group’s posts reflected its stated purpose: they spread Islamophobic narratives, often altering existing online images and videos to misleadingly present the content as being about Canada. They chiefly warned Canadians about the claimed dangers of radical Islam in their country and the need to preserve Canada’s liberalism.

Targeting Canadian media and journalists

Notably, on X, some accounts directly targeted Canadian media and journalists by replying to their posts with links to UCC posts. This behavior is similar to actions observed in the network amplifying claims against UNRWA, which targeted US politicians in replies to their tweets. For instance, the now-suspended account @mulecc_Ousada replied to unrelated posts by Canada’s National Observer, journalist Jen St. Denis, and CBC News with identical text drawing attention to a post by UCC. Similarly, two other accounts in the network replied to a post by Rosemary Barton, CBC’s chief political correspondent, asking Barton if she noticed a UCC post about “Sharia in Canada.”

Similar and identical comments

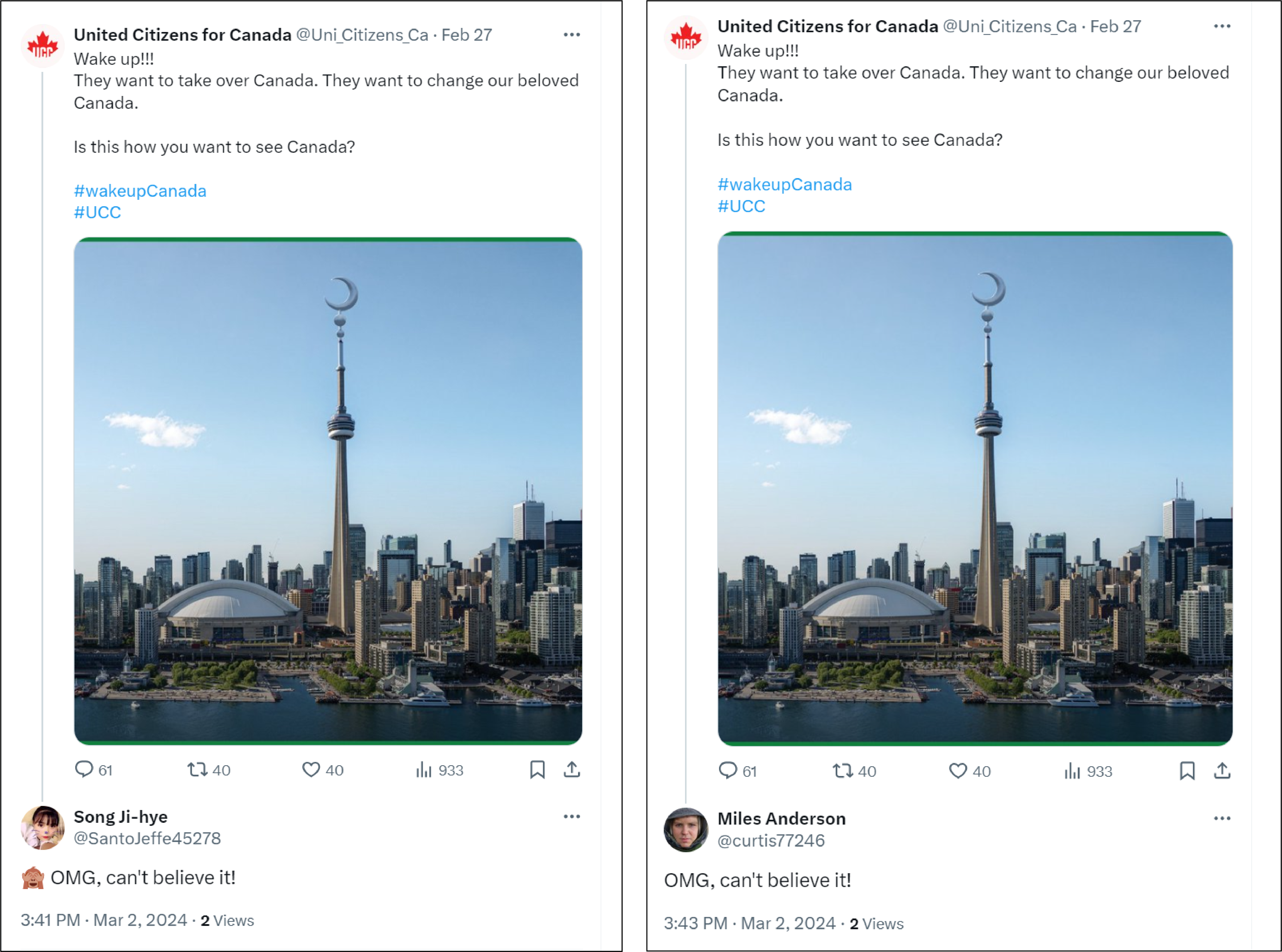

On Facebook, Instagram, and X, many accounts engaging with posts by UCC used verbatim or very similar language to amplify posts. Some of these were brief; for example, four accounts on Facebook used the phrase, “OMG can’t believe it!” while commenting on three UCC posts. Two of them also added the same “see no evil” monkey emoji at the start of the comment.

Three X accounts also employed the same phrase while replying to a UCC post, one of which featured the monkey emoji.

On Instagram, accounts also amplified UCC posts with verbatim or similar comments. In many instances, the same eighteen accounts left similar comments on posts. Each post also had a similar number of total comments, ranging from fifteen to eighteen.

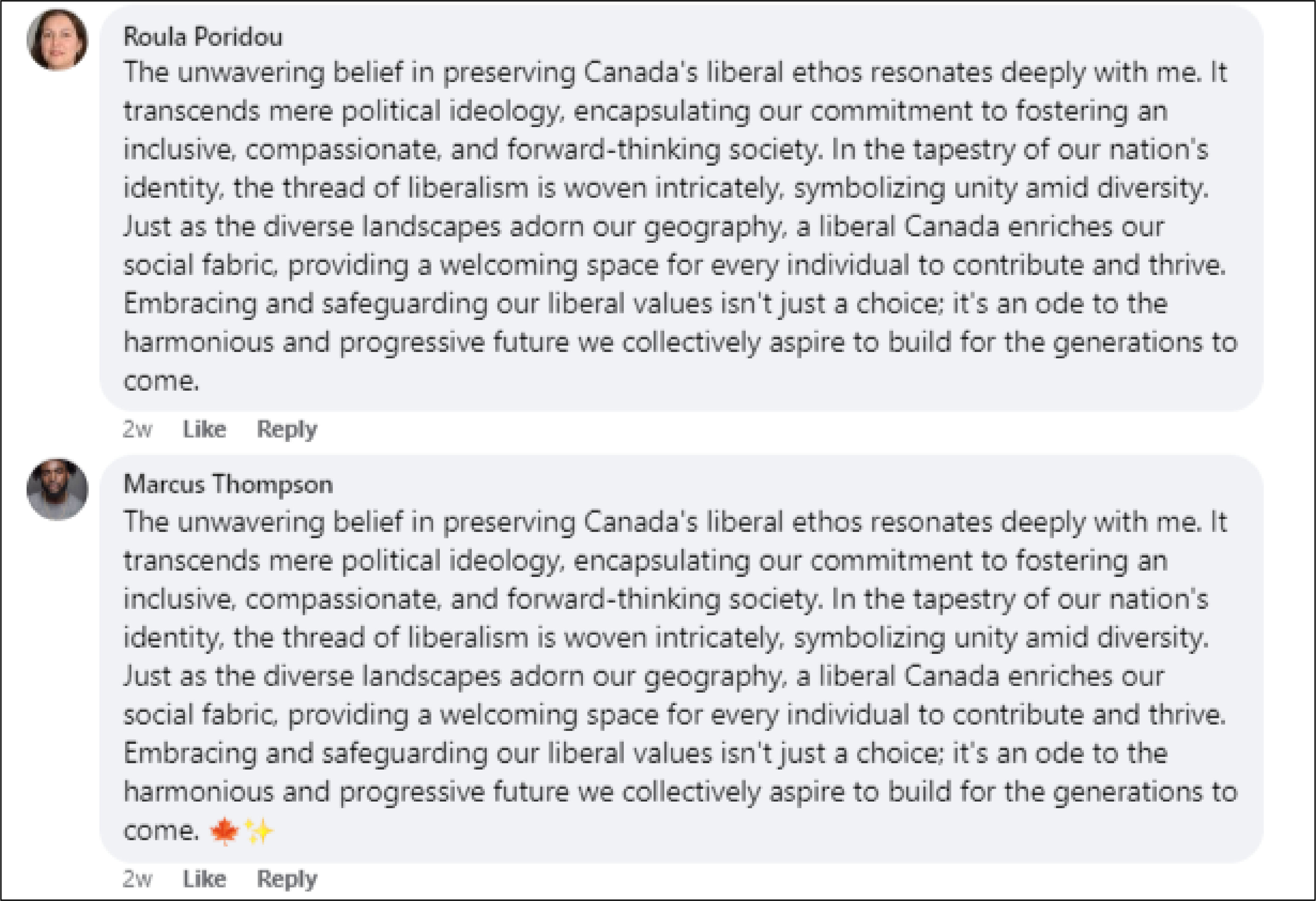

In another instance, two Facebook accounts replied to a UCC post within fifteen minutes of each other, this time using much longer overlapping text about “preserving Canada’s liberal ethos,” with one comment adding two emojis at the end of the text.

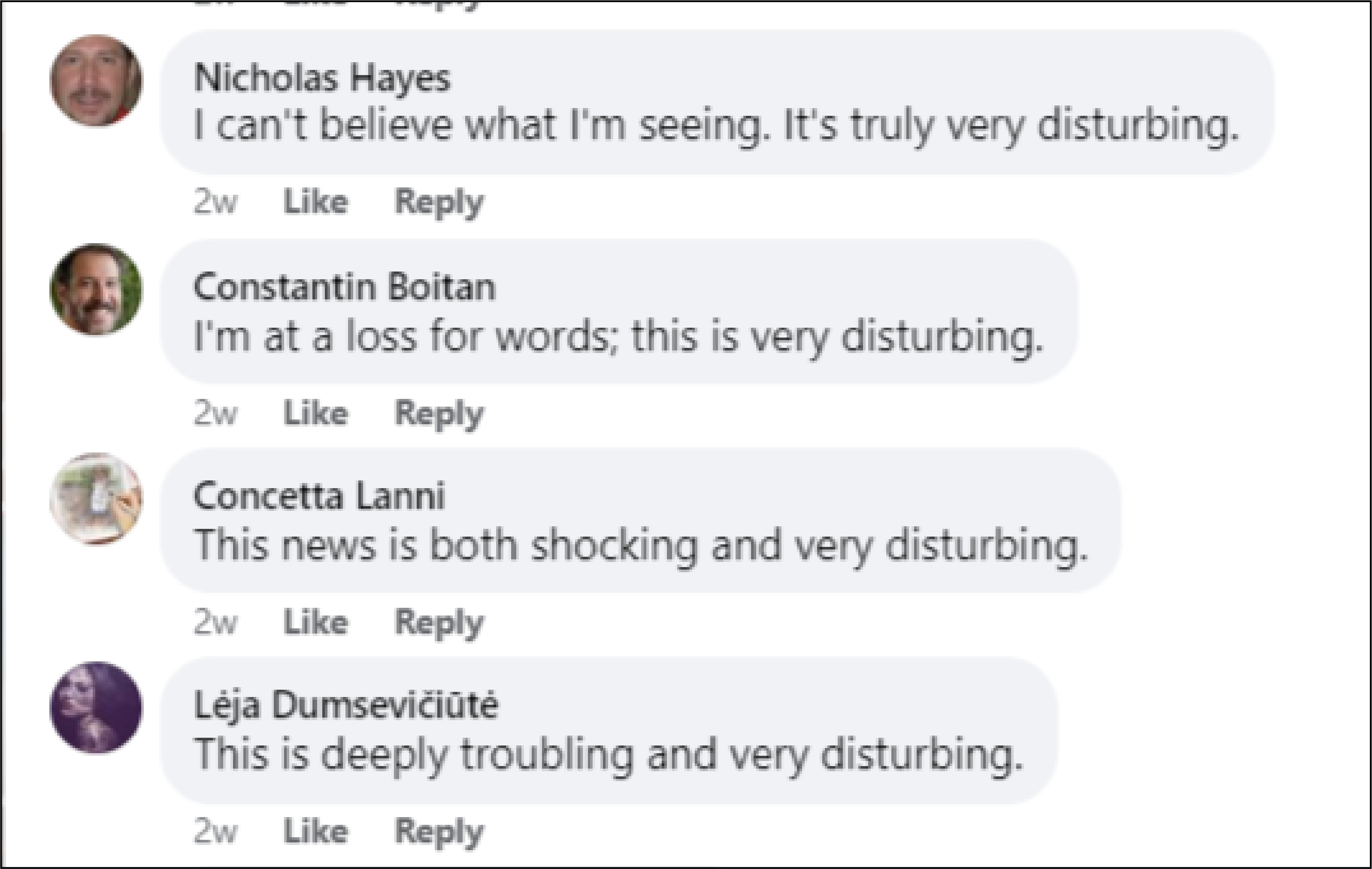

Other accounts posted similar comments and replies; for example, four different accounts responded to the same Facebook post, all expressing shock and all signing off with variants of “very disturbing.”

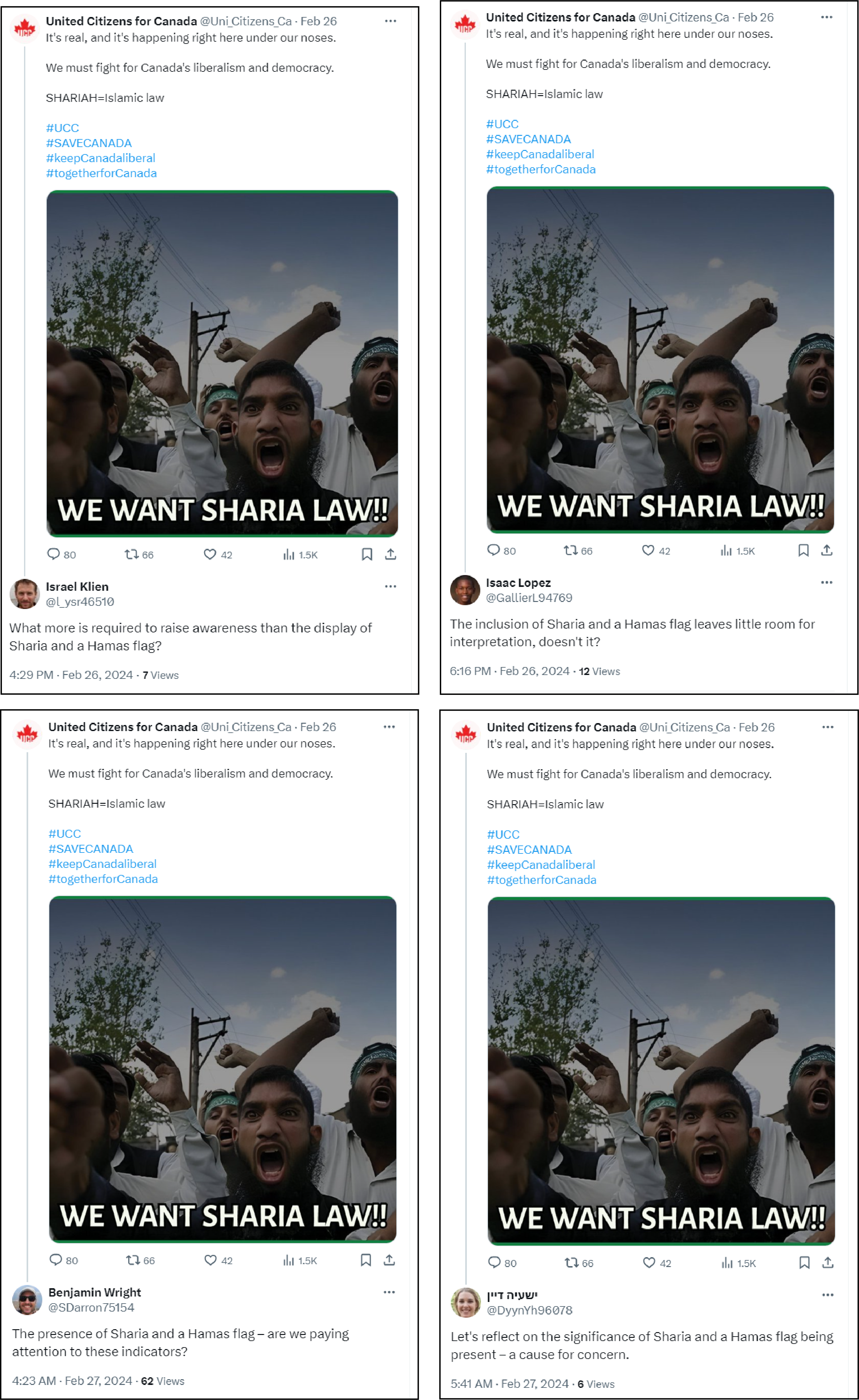

Additionally, four different X accounts made similar replies to a UCC post that stated, “It’s real, and it’s happening right here under our noses. We must fight for Canada’s liberalism and democracy.” As evidence, it included an old photo taken in 2006 in India that has been used widely as a meme by internet users. The four commenters all suggested that the image contained a Hamas flag, even though it did not.
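The overlap described in this section (identical phrases, shared emojis, lightly reworded sign-offs) can be screened for with a simple normalize-then-compare pass. The sketch below is an illustration under our own assumptions, not the DFRLab’s stated method: it lowercases each comment, strips emoji and punctuation, and flags pairs whose `difflib.SequenceMatcher` similarity ratio meets a hypothetical threshold of 0.9. The account names and sample comments are invented for the example, modeled on the quoted “OMG can’t believe it!” phrase.

```python
import itertools
import re
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase, unify apostrophes, drop emoji/punctuation, collapse whitespace."""
    text = text.lower().replace("\u2019", "'")
    # Keep word characters, whitespace and apostrophes; emoji and punctuation become spaces.
    text = re.sub(r"[^\w\s']", " ", text)
    return " ".join(text.split())


def similar_pairs(comments: dict[str, str], threshold: float = 0.9) -> list[tuple[str, str, float]]:
    """Return (account_a, account_b, ratio) for comment pairs at or above threshold.

    The 0.9 default is a hypothetical cut-off chosen for illustration.
    """
    normalized = {account: normalize(text) for account, text in comments.items()}
    pairs = []
    for (a, text_a), (b, text_b) in itertools.combinations(normalized.items(), 2):
        ratio = SequenceMatcher(None, text_a, text_b).ratio()
        if ratio >= threshold:
            pairs.append((a, b, round(ratio, 3)))
    return pairs


# Hypothetical sample data, not comments collected from the network.
sample = {
    "acct_a": "\U0001F648 OMG can\u2019t believe it!",
    "acct_b": "OMG can't believe it!!",
    "acct_c": "Great weather in Toronto today.",
}
print(similar_pairs(sample))
```

Pairwise comparison grows quadratically with the number of comments, which is fine for a single post’s replies; a larger sweep would likely need bucketing or hashing first.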

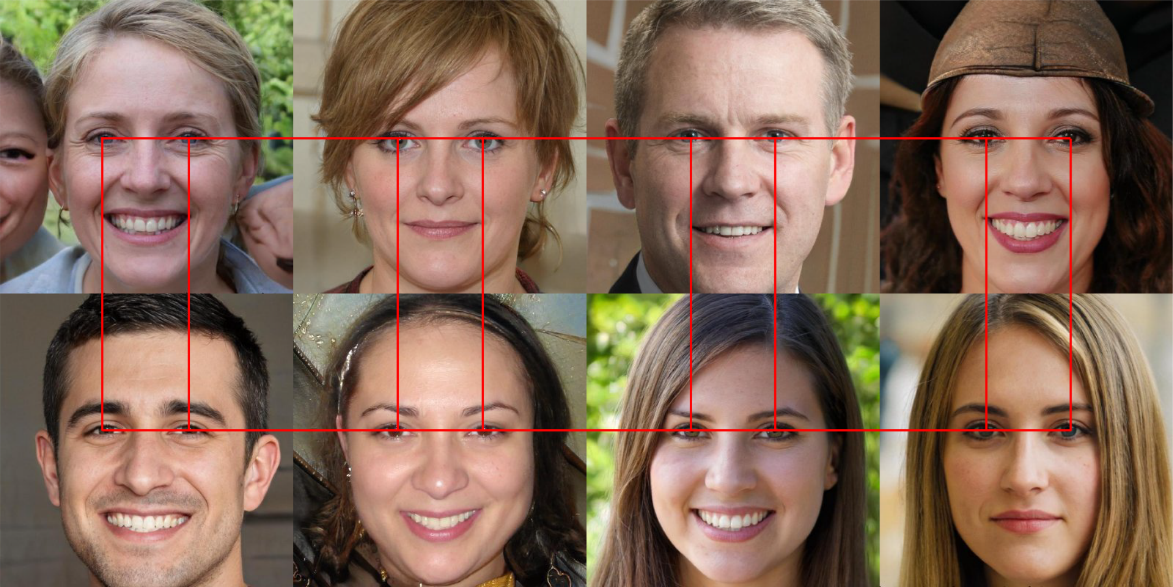

The prevalence of AI-generated pictures

Many of the accounts operating on Facebook and X appeared to use AI-generated photos which had the hallmarks of generative adversarial networks (GAN). The DFRLab counted a total of eighteen profile photos on Facebook and more than fifty others on X utilizing images that were likely GAN-generated.

Some GAN websites offer their generated pictures at no cost to users but add a watermark on the top or bottom of the picture. Users can then either crop out the watermark or use the picture with the website’s branding. Most of the GAN images on Facebook and X did not display watermarks, though one Facebook account named “Lia Reed” failed to crop it out, keeping the label of the GAN image source, This Person Does Not Exist.

Same creation dates, same avatars, and alphanumeric handles

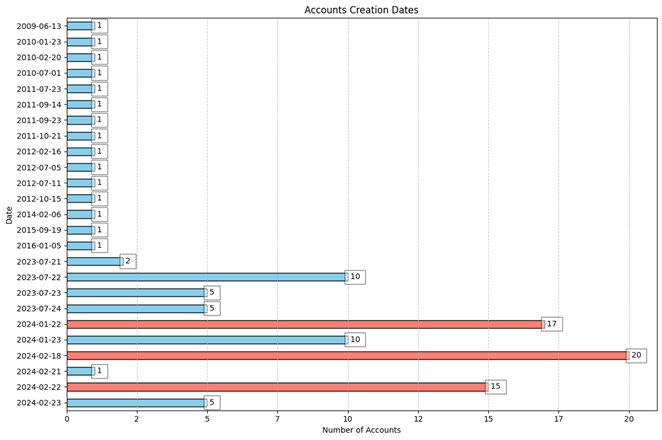

A closer inspection of more than one hundred X accounts in the network revealed indicators of suspicious behavior, including overlapping creation dates and the wide use of alphanumeric handles. Many were created on similar dates, some minutes apart. According to analysis using Twitter ID Finder, many of the accounts were specifically created in July 2023, January 2024, and February 2024. Fifteen accounts, all missing avatar images, were created hours before UCC’s X account on February 18, while an additional fifteen accounts featuring GAN-generated avatars were created within minutes of each other on February 22.
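Tools such as Twitter ID Finder resolve a handle to its numeric account ID; for accounts created in the Snowflake era, that ID itself encodes a creation timestamp. The sketch below assumes the widely documented Snowflake layout (milliseconds since a custom epoch of 1288834974657, stored above the low 22 bits) and is not a description of how Twitter ID Finder works internally. Older, sequentially assigned user IDs do not carry a timestamp and would decode to meaningless dates. The `group_bursts` helper and its `max_gap` parameter are hypothetical additions that show how accounts “created within minutes of each other” could be clustered once creation times are known.

```python
from datetime import datetime, timedelta, timezone

# Epoch used by Twitter/X Snowflake IDs (2010-11-04 01:42:54.657 UTC), in milliseconds.
TWITTER_EPOCH_MS = 1288834974657


def snowflake_to_datetime(snowflake_id: int) -> datetime:
    """Return the creation time encoded in a Snowflake-style X ID.

    The bits above the low 22 (worker and sequence bits) hold milliseconds
    since TWITTER_EPOCH_MS. Assumption: the ID is Snowflake-era.
    """
    ms = (snowflake_id >> 22) + TWITTER_EPOCH_MS
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)


def group_bursts(times: list[datetime], max_gap: timedelta) -> list[list[datetime]]:
    """Split timestamps into runs whose consecutive members are at most max_gap apart."""
    bursts: list[list[datetime]] = []
    for t in sorted(times):
        if bursts and t - bursts[-1][-1] <= max_gap:
            bursts[-1].append(t)  # close enough to the previous account: same burst
        else:
            bursts.append([t])  # gap too large: start a new burst
    return bursts
```

Chaining on the gap between neighbours, rather than fixed calendar windows, keeps a burst that straddles a minute or hour boundary together.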

On Instagram, all accounts were created in October 2023, except for one account created in September 2023. However, this account previously featured a different username, a sign that it could have been repurposed or hijacked.

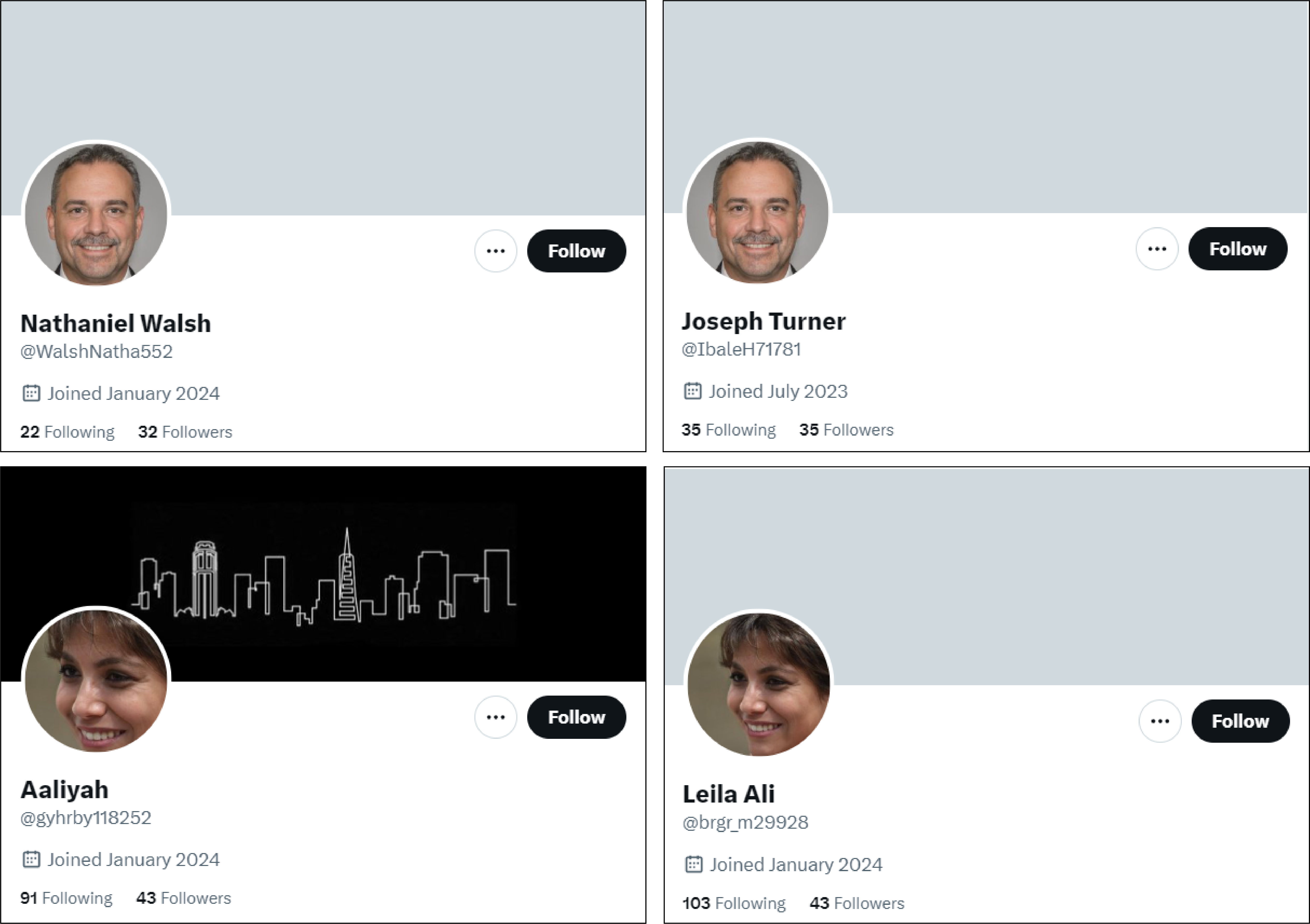

More than seventy-five X accounts also used alphanumeric handles, some of which were similar, such as @brgr_m60585 and @brgr_m29928, or @MyklZdwq17509 and @MyklZdwq91506. The DFRLab also noticed that six X accounts in the network used the same GAN-generated pictures; for example, @WalshNatha552 and @IbaleH71781 shared one image, as did @gyhrby118252 and @brgr_m29928.
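Handle pairs such as @brgr_m60585 and @brgr_m29928 share a text prefix and differ only in a trailing run of digits. A minimal sketch of how such pairs could be surfaced, assuming a heuristic of our own rather than the DFRLab’s stated method: treat a handle as digit-suffixed if it ends in at least five digits (a hypothetical threshold), then group handles by their case-insensitive prefix. A shared prefix is only a weak signal on its own and would need corroboration, such as creation dates or reused avatars.

```python
import re
from collections import defaultdict

# Hypothetical heuristic: a text prefix ending in a letter or underscore,
# followed by a trailing run of at least five digits.
HANDLE_RE = re.compile(r"^@?(?P<prefix>\w*?[A-Za-z_])(?P<digits>\d{5,})$")


def shared_prefix_groups(handles: list[str]) -> dict[str, list[str]]:
    """Group digit-suffixed handles by lowercase prefix; keep prefixes used 2+ times."""
    groups: dict[str, list[str]] = defaultdict(list)
    for handle in handles:
        match = HANDLE_RE.match(handle)
        if match:
            groups[match.group("prefix").lower()].append(handle)
    return {prefix: members for prefix, members in groups.items() if len(members) > 1}


handles = ["@brgr_m60585", "@brgr_m29928", "@MyklZdwq17509", "@MyklZdwq91506",
           "@WalshNatha552", "@IbaleH71781", "@gyhrby118252"]
print(shared_prefix_groups(handles))
```

Here @WalshNatha552 falls below the five-digit cut-off, while @IbaleH71781 and @gyhrby118252 match the pattern but have no prefix partner in this small list.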

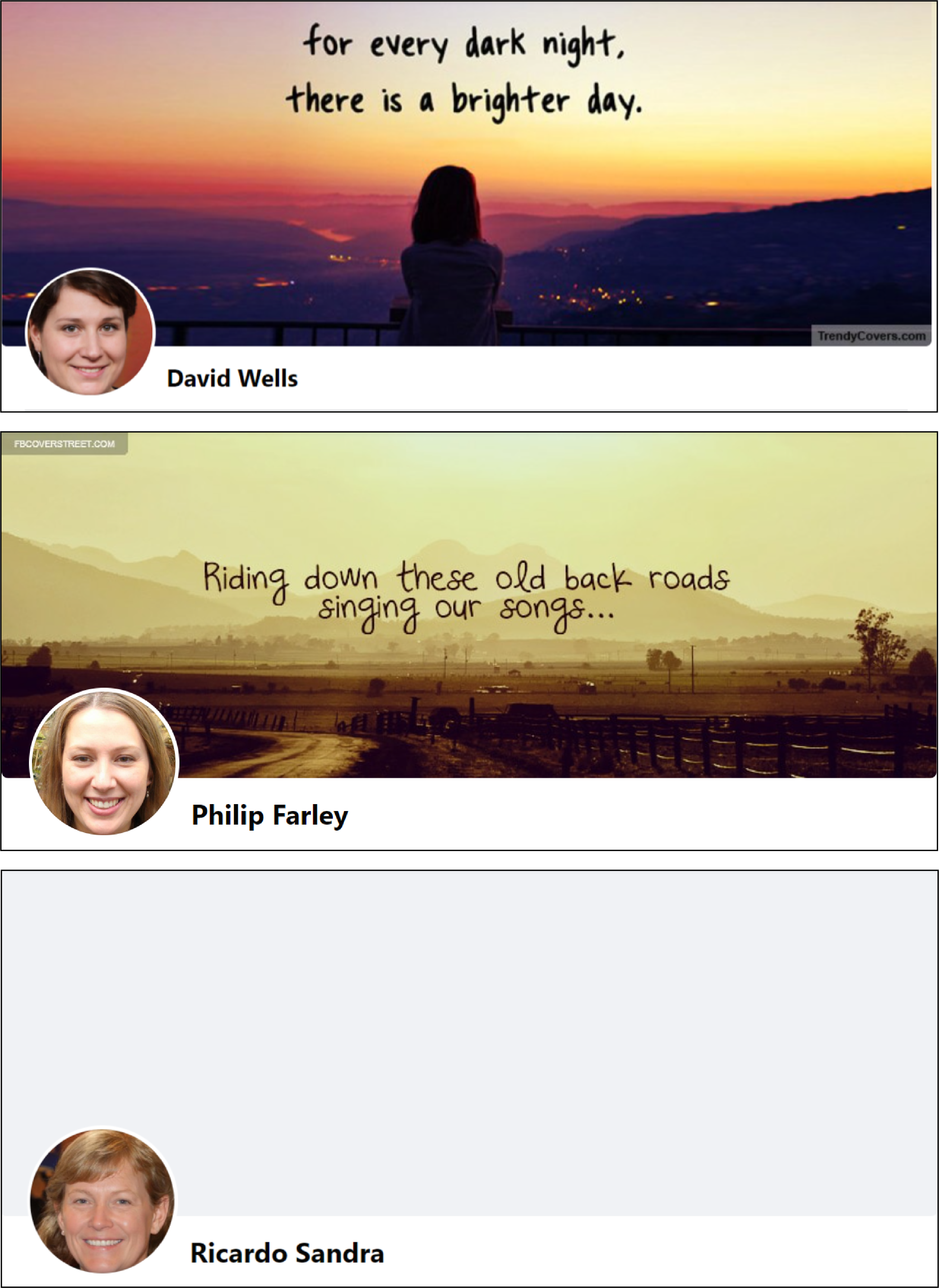

Additionally, some accounts on Facebook had usernames that did not match their profile pictures, pairing names typically associated with men with pictures depicting women.

Indicators of potential account hijacking

On both Facebook and X, some accounts exhibited indicators suggesting possible hijacking from existing accounts in an attempt to appear more legitimate.

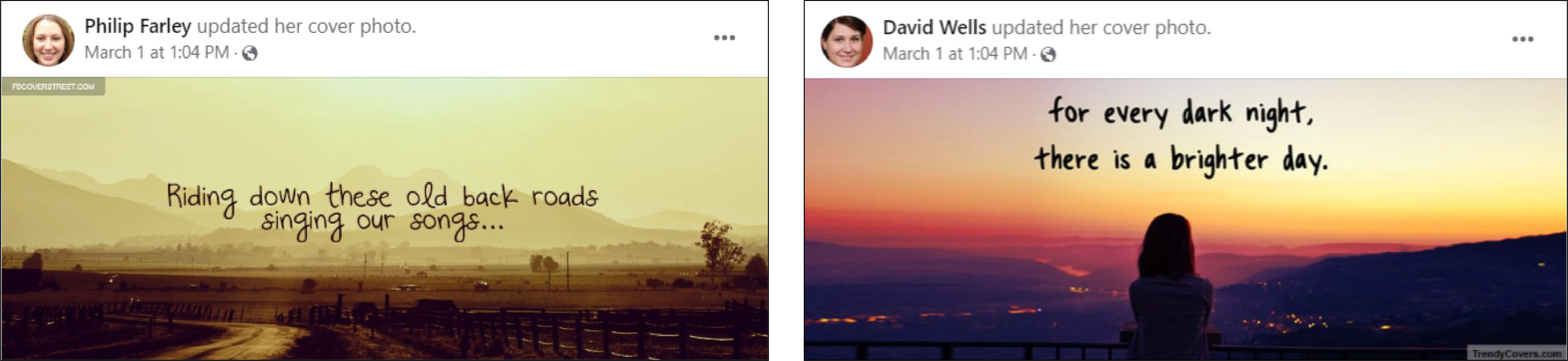

In some cases, it appears that accounts were repurposed by changing their profile pictures to GAN-generated pictures and possibly removing other identifying information. For example, twelve accounts changed their profile pictures to GAN-generated pictures within a narrow window on February 11, 2024, with some changing their pictures minutes apart. Others changed their cover photos seconds apart on the same day.

In other instances, details about the accounts’ prior incarnations had not been removed. These included old profile pictures, likely uploaded by authentic users who formerly owned these accounts, which no longer matched their current profile picture. Moreover, some accounts previously posted in different languages.

A few accounts appeared to make an effort to avoid detection after the apparent repurposing. One method used by some accounts involved posting brief one-word posts over successive days to make the account seem active. In another example, one account recently reposted old photos originally uploaded in 2018 by the potentially hijacked user, possibly to suggest that the original user still managed the account.

On X, the DFRLab also identified twelve accounts that appeared dormant for many years before becoming active again, although it is also possible that these accounts simply deleted older posts. One account, @guidodebres, featured a posting gap of more than a decade.
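A posting gap like the one on @guidodebres can be measured from an account’s post timestamps by finding the largest interval between consecutive posts. The sketch below is illustrative only: the `looks_dormant` helper and its five-year `min_gap` default are hypothetical choices, and, as noted above, a long gap cannot by itself distinguish true dormancy from deleted posts.

```python
from datetime import datetime, timedelta
from typing import Optional


def longest_gap(post_times: list[datetime]) -> Optional[tuple[timedelta, datetime, datetime]]:
    """Return (gap, last_post_before_gap, first_post_after_gap), or None if < 2 posts."""
    ordered = sorted(post_times)
    if len(ordered) < 2:
        return None
    # Compare each post with the next one and keep the widest interval.
    return max(
        ((later - earlier, earlier, later) for earlier, later in zip(ordered, ordered[1:])),
        key=lambda item: item[0],
    )


def looks_dormant(post_times: list[datetime], min_gap: timedelta = timedelta(days=5 * 365)) -> bool:
    """Flag accounts whose largest posting gap is at least min_gap (hypothetical threshold)."""
    result = longest_gap(post_times)
    return result is not None and result[0] >= min_gap
```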

Connections with previous network

As noted earlier, some of the accounts in this network first appeared during our previous investigation into suspicious accounts targeting UNRWA. Though it is difficult to establish a direct connection between the new accounts and the previously discovered network, there are indicators of collaboration.

For example, the previously covered network amplified two suspicious magazine Facebook pages, Non-Agenda and The Moral Alliance, which were also amplified by accounts boosting UCC’s posts. Despite having only one follower at the time of conducting this research, a February 13 post by The Moral Alliance about Israeli hostages held by Hamas garnered seventeen comments and 448 shares. While not all engagements are publicly visible as some accounts are locked or private, three of the accounts that commented on the post were part of the network amplifying UCC’s content on Facebook. Five Facebook accounts in the network were also following Non-Agenda.

Another possible connection was found via three Facebook accounts following an account named “Alicia Miller.” That account, which featured a stolen profile image prior to Meta deplatforming it, shared the same name and profile image as an X account involved in the anti-UNRWA campaign.

While UCC and the inauthentic network peddled Islamophobic content, it is difficult to determine who is behind these accounts and whether old accounts in the networks were recruited or hijacked.

Cite this case study:

“Inauthentic campaign amplifying Islamophobic content targeting Canadians,” Digital Forensic Research Lab (DFRLab), March 28, 2024, https://dfrlab.org/2024/03/28/inauthentic-campaign-amplifying-islamophobic-content-targeting-canadians.