Sockpuppet network impersonating Americans and Canadians amplifies pro-Israel narratives on X

A network of accounts on X using stolen and possibly AI-generated images coordinated to engage with accounts supportive of Israel


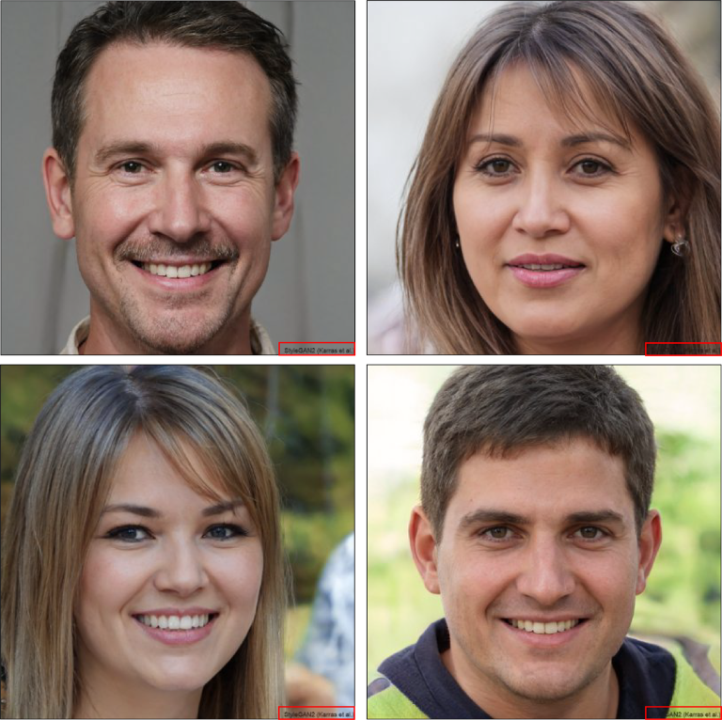

Banner: Montage of X avatars that appear to be AI generated, including one that spelled “Trump” as “Truip” (bottom row, second from the left). (Source: X)

The DFRLab identified a network of more than fifty X accounts exhibiting signs of inauthenticity and possible coordination to promote pro-Israel narratives. The accounts, which claimed to be Americans, Canadians, or individuals residing in those countries, engaged with pro-Israel accounts and amplified their posts using retweets, quote retweets, likes, and replies. Some of the accounts in the network were created within the same time span, while others appear to be pre-existing accounts that were hijacked or repurposed; they also inflated their follower counts by following each other. Some also used AI-generated images or publicly available images for avatars. While the content propagated by the network was primarily pro-Israel, it sometimes amplified partisan US election messaging in support of former President Donald Trump and against President Joe Biden.

This discovery follows previous research by the DFRLab and other investigators that exposed a similar network of inauthentic pro-Israel accounts, which became active on Facebook, Instagram, and X after the beginning of the war in Gaza. This previous network targeted US politicians with messages amplifying allegations against the United Nations Relief and Works Agency (UNRWA); it also targeted Canadian media with Islamophobic content. Following the DFRLab’s investigation, Meta announced the removal of over five hundred Facebook accounts, eleven pages, one group, and thirty-two Instagram accounts from this network and linked it to an Israeli political marketing and business intelligence firm named Stoic. OpenAI also found that the network used its AI tools to create content for the campaign. The New York Times, Haaretz, and FakeReporter wrote that Israel’s Ministry of Diaspora Affairs funded Stoic’s efforts to conduct these influence operations.

It is currently unknown whether the new network has any links to previous inauthentic campaigns such as the one reportedly orchestrated by Stoic, or if they merely share similar tactics and messaging goals.

AI-generated images and strikingly similar bios

Accounts in this network typically used photos that were publicly available or likely to have been generated by artificial intelligence using generative adversarial networks (GANs). The DFRLab counted at least thirty-five accounts using possible GAN-generated images and eighteen accounts using publicly available photos detected through a reverse image search, including images taken from the profiles of other internet users.

GAN-generated images usually contain telltale errors that a trained eye can detect, such as unmatched earrings, errors in hair details, and unmatched light reflections in the eyes; they also tend to place the eyes in the same position consistently across images. All of these traits were present in pictures used by the network. Moreover, websites and tools that create such images for free often add a watermark to the created images. Suspicious networks typically remove these watermarks to avoid detection, but at least four accounts in this network failed to do so; each of these displayed the same watermark at the bottom right corner of its avatar, suggesting that they were generated using the same tool.
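The eye-position trait lends itself to a simple numeric check. The following is a minimal sketch, not the DFRLab's method: it assumes eye centers have already been extracted from each avatar by some face-landmark tool and normalized to the 0..1 range, and the function names and the `tolerance` threshold are hypothetical choices for illustration.

```python
from statistics import pstdev


def eye_position_spread(eye_centers):
    """Measure how much eye positions vary across a set of avatars.

    eye_centers: list of ((left_x, left_y), (right_x, right_y)) tuples,
    one per avatar, in normalized 0..1 image coordinates (assumed input).
    Returns the largest population standard deviation among the four
    coordinates; a value near zero means the eyes sit in almost the
    same place in every image.
    """
    if len(eye_centers) < 2:
        raise ValueError("need at least two avatars to compare")
    rows = [(lx, ly, rx, ry) for (lx, ly), (rx, ry) in eye_centers]
    columns = zip(*rows)  # regroup values per coordinate
    return max(pstdev(col) for col in columns)


def eyes_look_aligned(eye_centers, tolerance=0.02):
    # tolerance is a hypothetical threshold, not a published standard.
    return eye_position_spread(eye_centers) <= tolerance
```

A low spread across many avatars is only one signal; ordinary cropped headshots can also cluster, so a result like this would need to be weighed alongside the visual artifacts described above.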

Furthermore, several accounts created around June 2016 appear to use AI-generated imagery produced without a GAN. These images are often more realistic or rendered in artistic styles that could theoretically be created by people. While it is difficult to verify that these images are synthetic, the DFRLab noted similarities between the four accounts, including consistent photographic style and composition, the use of portraits or headshots set against blurred backgrounds, and similar flattering lighting. Facial expressions and poses in the images also appear to be consistent, with subjects all looking directly at the camera with neutral or positive expressions.

Moreover, one of the accounts, @chrislaz87, used a photo of a bearded man wearing a white t-shirt with the word “TRUIP” on it, possibly a typo for the word “Trump.” Typos in images are common errors often associated with AI-generated imagery.

Other accounts such as @MeriWharton18 and @PhylissGalvin used stock photos and publicly available images for their avatars. They were created minutes apart from each other on April 30, 2024; at the time of publishing they had been suspended for violating platform rules.

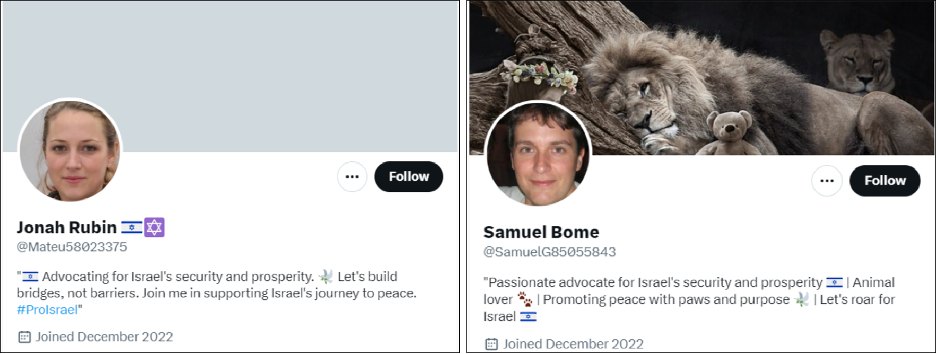

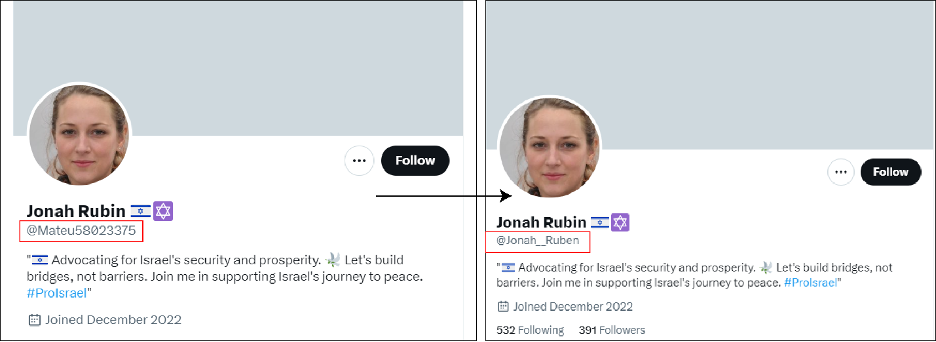

It is also possible that some accounts used AI to generate short descriptions for their bios. For example, @Jonah__Ruben and @Sam_Bome both had bios with similar text saying that they are either advocating or an advocate “for Israel’s security and prosperity.” Both bios had the Israeli flag and the dove emojis; notably, they also had quotation marks at the start of their bios, suggesting that the text might have been copied from another source.

Fake Americans, Canadians, and Brits, oh my!

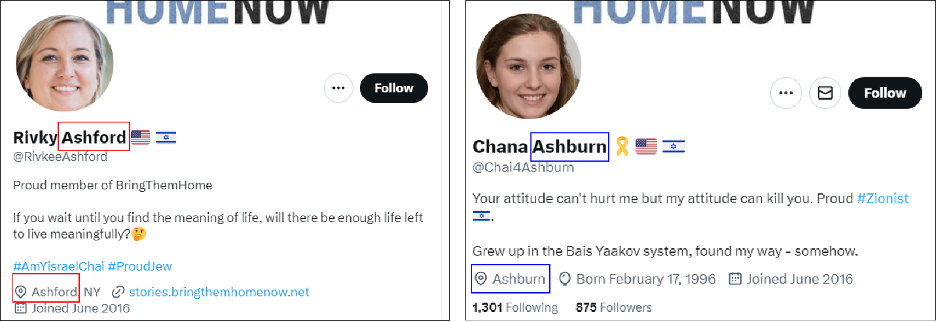

Judging by the locations listed in their bios, most of the accounts in the network attempted to portray themselves as Americans or residents of US cities. They also used common American surnames. At least two accounts, @RivkeeAshford and @Chai4Ashburn, listed the cities of Ashford and Ashburn as their locations, which suspiciously matched their last names as well.
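The overlap between a listed location and an account name can be checked mechanically. The sketch below is illustrative only and assumes the handle and location strings have already been collected; `location_in_handle` and its four-letter minimum are hypothetical choices, not part of the DFRLab's described workflow.

```python
import re


def location_in_handle(handle: str, location: str) -> bool:
    """Return True if the city part of a location appears inside a handle.

    Only the text before the first comma or slash is treated as the city,
    and both strings are reduced to lowercase letters before comparing.
    """
    city = re.split(r"[,/]", location)[0]
    city = re.sub(r"[^a-z]", "", city.lower())
    name = re.sub(r"[^a-z]", "", handle.lower())
    # Require at least four letters (hypothetical cutoff) to avoid
    # trivial matches on very short place names.
    return len(city) >= 4 and city in name


print(location_in_handle("@RivkeeAshford", "Ashford"))
print(location_in_handle("@Chai4Ashburn", "Ashburn"))
```

Both handles named in the article match their listed cities under this check. A match alone proves nothing, since real people can share a name with a town, but it is a cheap way to surface candidates for manual review.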

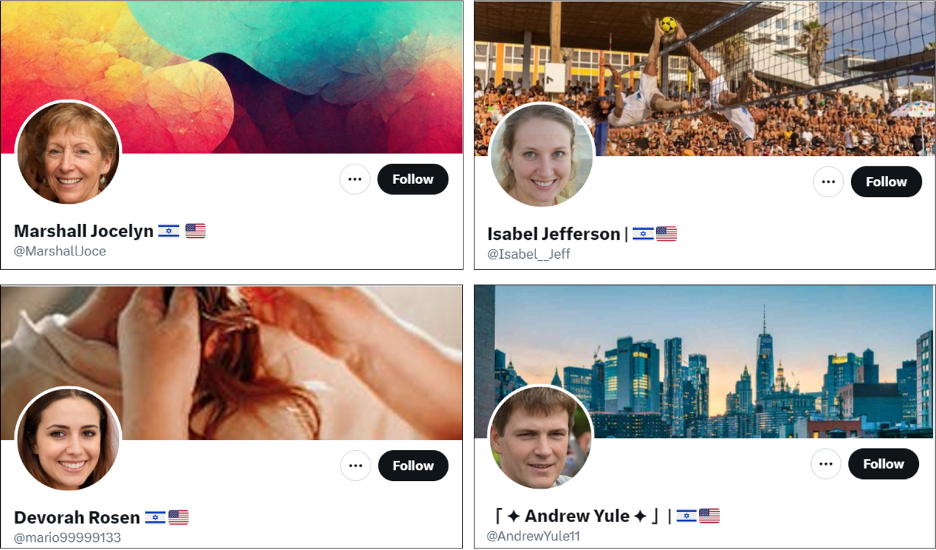

Moreover, twenty accounts used both the Israeli and US flag emojis next to their display names. Others, such as @PeggyWinnipeg and @Hennes1Theresia, used the Israeli and Canadian flag emojis. Accounts also often used these flag emojis in their bios.

Signs of hijacking and similar creation dates

Using the Twitter ID Finder and Converter tool, the DFRLab investigated the creation dates of accounts in the network, revealing a suspicious number of accounts created in short periods of time, some as recently as May 2024. Notably, more than twenty accounts were created in June and July 2016, eight on April 30, 2024, and five on May 7, 2024.
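Creation dates can be recovered from account IDs because X encodes a millisecond timestamp in the upper bits of its snowflake IDs. The sketch below is a minimal illustration of that decoding and of grouping accounts by creation day; it is not the code behind the Twitter ID Finder and Converter tool or the DFRLab's own tooling. It assumes every ID passed in is a snowflake ID (older sequential IDs carry no timestamp), and the function names and the `min_accounts` threshold are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Epoch used by X/Twitter snowflake IDs: 2010-11-04T01:42:54.657Z.
TWITTER_EPOCH_MS = 1288834974657
UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def snowflake_to_datetime(account_id: int) -> datetime:
    """Decode the UTC creation time embedded in a snowflake ID.

    The lower 22 bits hold worker and sequence data; the remaining
    upper bits are milliseconds since the Twitter epoch.
    """
    ms = (account_id >> 22) + TWITTER_EPOCH_MS
    return UNIX_EPOCH + timedelta(milliseconds=ms)


def creation_day_clusters(account_ids, min_accounts=5):
    """Return {date: count} for days with at least min_accounts creations.

    min_accounts is a hypothetical threshold chosen for illustration.
    """
    days = Counter(snowflake_to_datetime(i).date() for i in account_ids)
    return {day: n for day, n in days.items() if n >= min_accounts}
```

Run over the IDs of a suspected network, `creation_day_clusters` would surface days such as April 30, 2024, and May 7, 2024, where several accounts were registered together. Dates are computed in UTC, so accounts created close to midnight may fall on different calendar days than a local-time view would show.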

Many of the accounts created in 2016 and 2022 were particularly suspicious, as they appear to have been dormant for years before becoming active again around the same dates. These include the accounts @RivkeeAshford, created on June 7, 2016, and the now-suspended @PastorCurtisTX, created on June 8, 2016. Neither had posts until January 21, 2024, at which point they began posting four minutes apart. Other accounts had older personal posts, suggesting they belonged to real users whose accounts might have been hijacked, sold, or repurposed. This was the case for @KatzTalia613, an account that posted occasionally in 2023; one such post showed a poster with Bengali text supporting a political movement in Bangladesh.
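The pattern of long dormancy followed by near-simultaneous first posts can be expressed as a small pairwise check. This is a hedged sketch under stated assumptions: it presumes each account's creation time and first-post time are already known, and the function name, the one-year dormancy minimum, and the ten-minute window are hypothetical parameters rather than thresholds used by the DFRLab.

```python
from datetime import datetime, timedelta
from itertools import combinations


def synchronized_reactivations(
    accounts,
    min_dormancy=timedelta(days=365),
    window=timedelta(minutes=10),
):
    """Find pairs of long-dormant accounts that started posting together.

    accounts: dict mapping handle -> (created_at, first_post_at).
    Returns a list of (handle_a, handle_b) pairs where both accounts
    waited at least min_dormancy before their first post and those
    first posts fall within `window` of each other.
    """
    dormant = {
        handle: first_post
        for handle, (created, first_post) in accounts.items()
        if first_post - created >= min_dormancy
    }
    return [
        (a, b)
        for a, b in combinations(sorted(dormant), 2)
        if abs(dormant[a] - dormant[b]) <= window
    ]
```

With these defaults, two accounts created in June 2016 whose first posts appeared four minutes apart in January 2024, as described for @RivkeeAshford and @PastorCurtisTX, would be returned as a pair. The comparison is quadratic in the number of accounts, which is acceptable for a network of a few dozen handles.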

Other accounts tried harder to appear authentic. For example, the account @benjaminlibraty had been dormant since 2018 before becoming active again on January 21, 2024, when it began posing as an IRS worker; its first post after returning from dormancy contained a link to an article about taxes.

During its research, the DFRLab noticed at least five accounts changing their handles from autogenerated alphanumeric handles to more personalized handles that match their display names, possibly to appear more authentic.

Narratives, engagement, and the Israel Digital Army

The new network identified by the DFRLab focused on retweeting, quote retweeting, replying, and liking posts supportive of Israel from authentic accounts belonging to individuals and influencers. The content they amplified covered a range of topics including US politics towards Israel, Israeli hostages taken by Hamas, support for the Israeli government and military, criticism of the United Nations and the UNRWA, Islamophobic content, and other issues. Accounts in the network also occasionally created original posts related to the same topics.

It is worth noting that the network often posted and promoted content that included graphics with a watermark used by a group of online accounts on X calling itself the “Israel Digital Army” (IDA). A website with the domain name IsraelDigitalArmy.com previously redirected users to a Google Form with the title, “Israel Digital Army Volunteer Form,” created “to find volunteers and organiz[e] people’s skills in the most effective possible manner.” The form states that the goal of the IDA is “to mobilize lovers of Israel from around the world to become soldiers on the front lines in Israel’s battle in the war of ideas.” The website now redirects to Thelandofisraelpin.com, which sells yellow ribbon pins in solidarity with Israeli hostages held in Gaza.

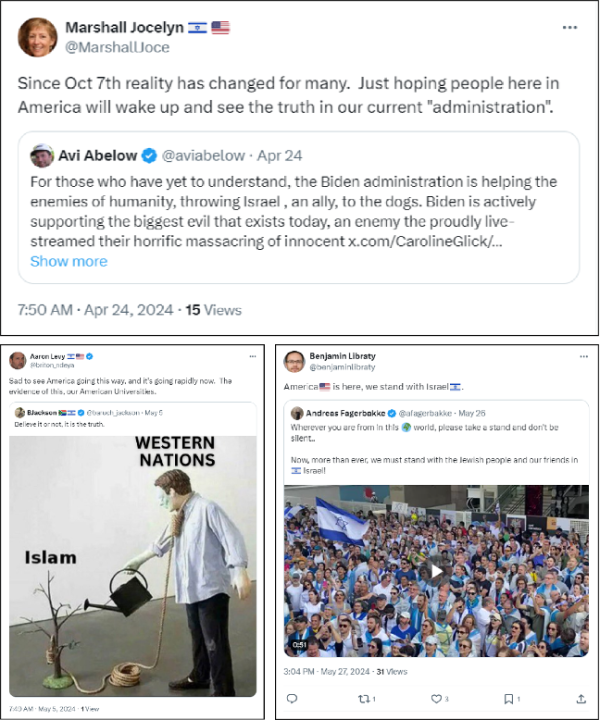

One example of this network’s activity involves a post from X user Avi Abelow, whose account received consistent engagement from the network; the post was quote tweeted by the account @MarshallJoce. The post criticized the Biden administration for not providing sufficient support for Israel, adding “hoping people here in America will wake up and see the truth in our current ‘administration.’”

In May 2024, another account in the network, now suspended, quote tweeted a post depicting Western nations as supporting Islam in a way that is harmful to them. “Sad to see America going this way, and it’s going rapidly now,” the post read. “The evidence of this, our American Universities.” That same month, a third account from the network quote tweeted another post affirming American support for Israel.

In other instances, accounts from the network created original posts containing graphics with the IDA’s watermark. For example, the accounts @RafiRaSlatovik and @KevinLarryDavid posted two different cartoon graphics on May 31, 2024, each of which included the watermark at the bottom left corner. These cartoons made fun of the US military’s temporary pier in Gaza and President Biden’s three-phase peace plan.

The account @RafiRaSlatovik was among the most vocal in terms of expressing partisan viewpoints. On May 5, 2024, it criticized President Biden, writing, “Yes, never again is now, even though Joe Biden seems to support, “Again as soon as Hamas regains its footing”.” The following day, it accused the US Congress of not being sufficiently pro-Israel: “Yes, it matters to me that Congress be pro Israel, but it should also matter to Congress, because if they are not, it is Congress that will be extinguished, not Israel!” Less than ten minutes later, it advocated the ethnic cleansing of Gaza, writing, “Yes, make Gaza Jewish again, and then make all of Israel safe for Jews, from the river to the sea!”

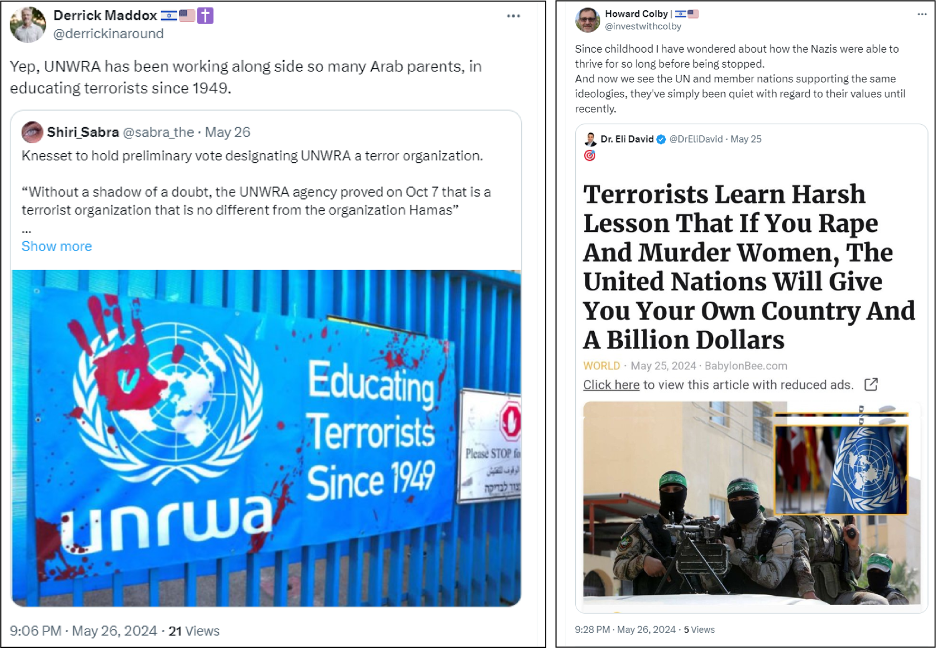

Some accounts also engaged with posts attacking the United Nations and UNRWA. Examples include quote retweets by @derrickinaround and @investwithcolby, minutes apart on May 26, 2024, claiming that UNRWA and the United Nations are supporting terrorists following a widely criticized bill in the Israeli parliament that would label the UNRWA a terrorist organization.

Other messages focused on criticizing proposals for implementing a two-state solution. On May 2, the account @HaviBernstein quoted another post by a former IDF soldier, writing, “If Trump is elected, the Two-State solution will be graveyard dead.” Others expressed favorable views of former President Donald Trump. “Everything will work out, as it always does. I trust Mr Trump will be remembered for all the good he has done for the American People,” @ryanairmilehigh wrote in a thread on maintaining support for Israel.

In addition to retweets, quote retweets, and likes, accounts also often replied to posts. For example, five accounts replied to a post by @aviabelow in less than an hour. Notably, these replies received a similar number of likes, with some coming from other accounts in the network as well.

While the accounts mostly focused on retweeting and liking posts by other pro-Israel accounts or by accounts in the network, some original posts reached audiences outside the network and received substantial engagement from apparently authentic accounts, many of which posted negative replies. For instance, a post by @IsraelWillWin_ was viewed over 90,000 times and received thousands of replies and likes.

Similarly, a post by @kimcohenCA, an account that claims to be a “French born Canadian living in Montreal” but uses a stolen avatar, was viewed more than 1.1 million times. This account had several posts that reached thousands of users and likely received engagement from authentic platform users, including one instance in which it lambasted President Biden and the UN, writing, “Nothing will hold us back. Biden, the UN and the whole world fail to realize that Israel has the strength of Samson. Their ropes are useless compared to our G-d-given power.” This was accompanied by an Israel Digital Army image also sporting the slogan, “Nothing will hold us back.” The post received more than 93,000 views.

Editor’s note: Ali Chenrose is a pen name used by DFRLab contributors in certain circumstances for safety reasons.

Cite this case study:

Ali Chenrose, “Sockpuppet network impersonating Americans and Canadians amplifies pro-Israel narratives on X,” Digital Forensic Research Lab (DFRLab), November 4, 2024, https://dfrlab.org/2024/11/04/sockpuppet-network-impersonating-americans-and-canadians-amplifies-pro-israel-narratives-on-x/.