How inauthentic accounts exploit Telegram comments to spread anti-Ukrainian narratives

Analysis of more than 580,000 comments shows pattern of narrative attacks amplified via coordinated behavior; additional instances appeared in Facebook comments

Banner: Ukraine’s President Volodymyr Zelenskyy attends a press conference in Kyiv, December 1, 2024. (Source: Reuters/Alina Smutko)

A DFRLab investigation found that malign actors push anti-Ukrainian narratives in the comments sections of Telegram and Facebook. Accounts identified by the DFRLab post comments in both Russian and Ukrainian on Telegram and Facebook to reach a broader audience.

The tactics observed reveal a coordinated disinformation strategy targeting Ukraine. These efforts involve posting hostile narratives aimed at provoking emotional reactions and amplifying falsehoods. By spreading exaggerated or false claims about the war’s impact on Ukraine’s economy and society, disinformation campaigns seek to demoralize the Ukrainian population. To create the illusion of widespread agreement, campaigns post repetitive hostile comments from multiple profiles, often using fabricated identities designed to appear as members of local Ukrainian communities. They also infiltrate discussions on trending topics on popular platforms to inject anti-Ukrainian narratives. These narratives reinforce Russians’ sense of a “justified war,” shift blame toward Ukrainian leadership, and praise Russia’s “peace-seeking” efforts.

This activity fits a pattern. Creating and amplifying disinformation to manipulate public opinion and undermine societal cohesion have been common tactics against Ukraine since Russia’s invasion. The DFRLab has previously reported on manipulated content and techniques, such as fake fact-checking, Facebook ads, and fabricated videos, used to sow confusion and distrust.

Methodology

This analysis uses the tool Osavul, which helps researchers identify accounts potentially involved in inauthentic behavior. Osavul aggregates over 60 million comments monthly from platforms such as Telegram, Facebook, Twitter, and YouTube and analyzes them to identify clusters of similar comments, often shared by different accounts across various platforms. It then evaluates these accounts for abnormal posting patterns, the volume of suspicious comments, and repeated sharing of non-unique content to determine whether the comments are likely the product of inauthentic behavior.
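Osavul’s clustering logic is not documented in this report. As a rough illustration of how near-duplicate comments can be grouped, and how an account’s share of non-unique content might be scored, the Python sketch below compares three-word shingles using Jaccard similarity and merges matching comments with union-find. The function names, the 0.7 threshold, and the shingle size are hypothetical choices for illustration, not Osavul’s parameters.

```python
import re
from collections import defaultdict
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Lowercase, drop punctuation, and return the set of k-word shingles."""
    words = re.findall(r"\w+", text.lower())  # \w matches Cyrillic too
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_comments(comments, threshold=0.7):
    """Group (account, text) pairs whose shingle overlap meets `threshold`.

    Compares every pair and merges matches with union-find, so it is
    O(n^2) and only suitable for small samples.
    """
    parent = list(range(len(comments)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    sigs = [shingles(text) for _, text in comments]
    for i, j in combinations(range(len(comments)), 2):
        if jaccard(sigs[i], sigs[j]) >= threshold:
            parent[find(i)] = find(j)

    clusters = defaultdict(list)
    for i, pair in enumerate(comments):
        clusters[find(i)].append(pair)
    return list(clusters.values())


def non_unique_share(comments, threshold=0.7):
    """Per account: fraction of its comments that sit in a cluster
    containing at least one comment from a different account."""
    totals = defaultdict(int)
    shared = defaultdict(int)
    for cluster in cluster_comments(comments, threshold):
        accounts = {account for account, _ in cluster}
        for account, _ in cluster:
            totals[account] += 1
            if len(accounts) > 1:
                shared[account] += 1
    return {account: shared[account] / totals[account] for account in totals}
```

Comparing every pair is quadratic, so a system processing tens of millions of comments would more plausibly rely on an indexing technique such as MinHash with locality-sensitive hashing; the pairwise loop here only keeps the sketch short.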

The DFRLab used Osavul to collect over 580,000 comments posted by accounts exhibiting seemingly inauthentic behavior during October and November 2024. The comments were then uploaded to OpenSearch, where the DFRLab examined the data to identify recurring topics discussed by these accounts. The analysis also focused on detecting suspicious patterns of activity, including accounts posting identical or highly similar messages across various comment sections, comments from deleted accounts, evidence of coordination between accounts, and other deceptive tactics indicative of inauthenticity.
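The report does not describe the OpenSearch queries used. One plausible way to surface verbatim duplicates in such an index is a `terms` aggregation on an exact-match field, combined with a `cardinality` sub-aggregation that counts distinct accounts and a `bucket_selector` that keeps only texts posted by more than one account. The sketch below builds such a request body in Python; the field names `text.keyword` and `account_id` are assumptions about the index mapping.

```python
import json


def duplicate_text_query(text_field="text.keyword", account_field="account_id",
                         min_accounts=2, max_buckets=100):
    """Build an OpenSearch aggregation body listing comment texts that were
    posted verbatim by at least `min_accounts` distinct accounts.

    Field names are hypothetical; `text_field` must be an exact-match
    (keyword) field for identical texts to land in the same bucket.
    """
    return {
        "size": 0,  # only aggregation results are needed, not individual hits
        "aggs": {
            "duplicate_texts": {
                "terms": {
                    "field": text_field,
                    # N distinct accounts imply at least N documents
                    "min_doc_count": min_accounts,
                    "size": max_buckets,
                },
                "aggs": {
                    "distinct_accounts": {"cardinality": {"field": account_field}},
                    "posted_by_many": {
                        "bucket_selector": {
                            "buckets_path": {"accounts": "distinct_accounts"},
                            "script": f"params.accounts >= {min_accounts}",
                        }
                    },
                },
            }
        },
    }


print(json.dumps(duplicate_text_query(), indent=2))
```

The resulting dictionary could be sent as the body of a search request, for example through the `opensearch-py` client. Two caveats apply: `cardinality` returns an approximate count, and under default dynamic mapping the `.keyword` subfield ignores strings longer than 256 characters, so long comments would need a custom mapping or a hashed text field.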

Accounts

The fifteen accounts with the highest posting volume in the sample analyzed by the DFRLab each published between 1,900 and 3,600 comments during the three-month period surveyed, an average of 20 to 40 comments per day. That is a higher rate than self-identified bot accounts in the sample: @PlotvoBot, for example, posted 1,552 comments over the same period, averaging seventeen per day.

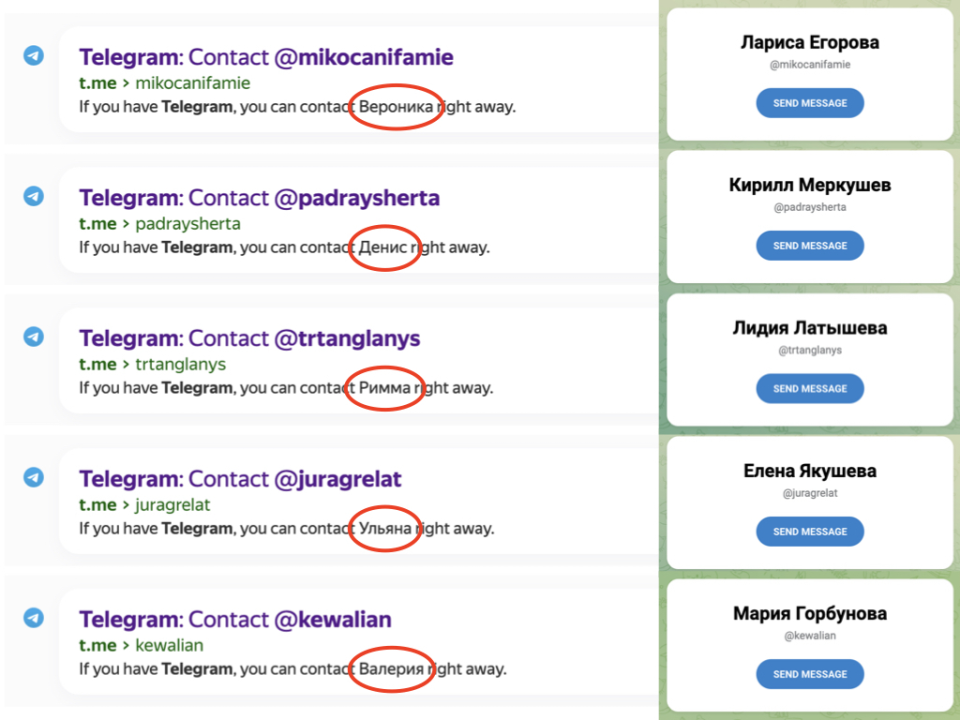
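The per-day figures above follow from dividing each total by the length of the collection window. The snippet below assumes a window of 91 days, roughly three calendar months; the report does not give exact start and end dates.

```python
# Assumption: the "three-month period" is treated as a 91-day window.
# The report does not state exact start and end dates.
WINDOW_DAYS = 91


def per_day(total_comments: int, days: int = WINDOW_DAYS) -> float:
    """Average number of comments per day over the window."""
    return total_comments / days


for label, total in [
    ("lowest-volume of the top 15", 1900),
    ("highest-volume of the top 15", 3600),
    ("@PlotvoBot", 1552),
]:
    print(f"{label}: {per_day(total):.1f} comments/day")
```

This prints averages of about 20.9, 39.6, and 17.1 comments per day, in line with the figures in the text. A shorter window would raise these averages proportionally.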

None of these high-volume accounts has a profile photo, and all display seemingly authentic first and last names. Searching for the account handles in Yandex, however, reveals first names different from the ones currently associated with the accounts. This indicates the names were changed at some point and that the accounts are unlikely to belong to real people.

The content of the comments posted by these high-volume accounts often overlapped, including exact duplicates. Using a random sample of eleven comments from the highest-volume account, @mikocanifamie, the DFRLab found 247 other comments with identical text posted between September and December 2024. These verbatim comments came from 163 unique accounts, including three of the other four highest-volume accounts. Many of these posts received engagement from authentic Telegram users in the form of reactions and written replies.
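The seed-comment check described above can be sketched as follows: draw a random sample from one account’s comments, normalize case and whitespace, and count matching comments posted by other accounts. The `Comment` record, the normalization rule, and the choice to exclude the seed account’s own reposts are assumptions made for this illustration.

```python
import random
from collections import namedtuple

# Hypothetical record type; real data would also carry timestamps, chat IDs, etc.
Comment = namedtuple("Comment", ["account", "text"])


def normalize(text: str) -> str:
    """Case-fold and collapse whitespace so trivially different copies match."""
    return " ".join(text.casefold().split())


def verbatim_matches(comments, seed_account, sample_size=11, rng=None):
    """Sample up to `sample_size` comments from `seed_account`, then return
    (matching comments by other accounts, distinct accounts among them)."""
    rng = rng or random.Random()
    own = [c for c in comments if c.account == seed_account]
    seeds = rng.sample(own, min(sample_size, len(own)))
    seed_texts = {normalize(c.text) for c in seeds}
    matches = [
        c for c in comments
        if c.account != seed_account and normalize(c.text) in seed_texts
    ]
    return len(matches), len({c.account for c in matches})
```

Passing a seeded `random.Random` makes the sample reproducible, which matters if the same check needs to be rerun or audited.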

Hostile narratives about Ukrainian leadership pushed by accounts engaged in inauthentic behavior

The leadership of Ukraine is frequently targeted by Russian disinformation and hostile narratives. The DFRLab observed that malign actors engaging in inauthentic behavior circulated multiple messages claiming that Ukrainian President Volodymyr Zelenskyy is a drug addict. By promoting this narrative, these accounts aimed to convey the idea that Zelenskyy’s alleged drug use renders him incapable of leading the country, implying this is why Ukraine is being destroyed.

The DFRLab identified at least six comments on Telegram, all posted on December 3 or 4, written in a strikingly similar style. Each comment begins with the phrase “Zelenskyy – drug addict,” followed by a brief sentence. These one-sentence comments suggest that Zelenskyy’s alleged drug use prevents him from conducting negotiations, has disconnected him from reality, and impairs his ability to govern rationally.

The accounts posting these comments appear suspicious: they bear Russian male names, but their Telegram handles lack any resemblance to human names, which reinforces the impression of inauthenticity. One account with the name Viktor Demin actually has a profile photo of a woman, another potential indicator of inauthenticity. The DFRLab found over 1,800 messages referring to Zelenskyy as a drug addict within the dataset.

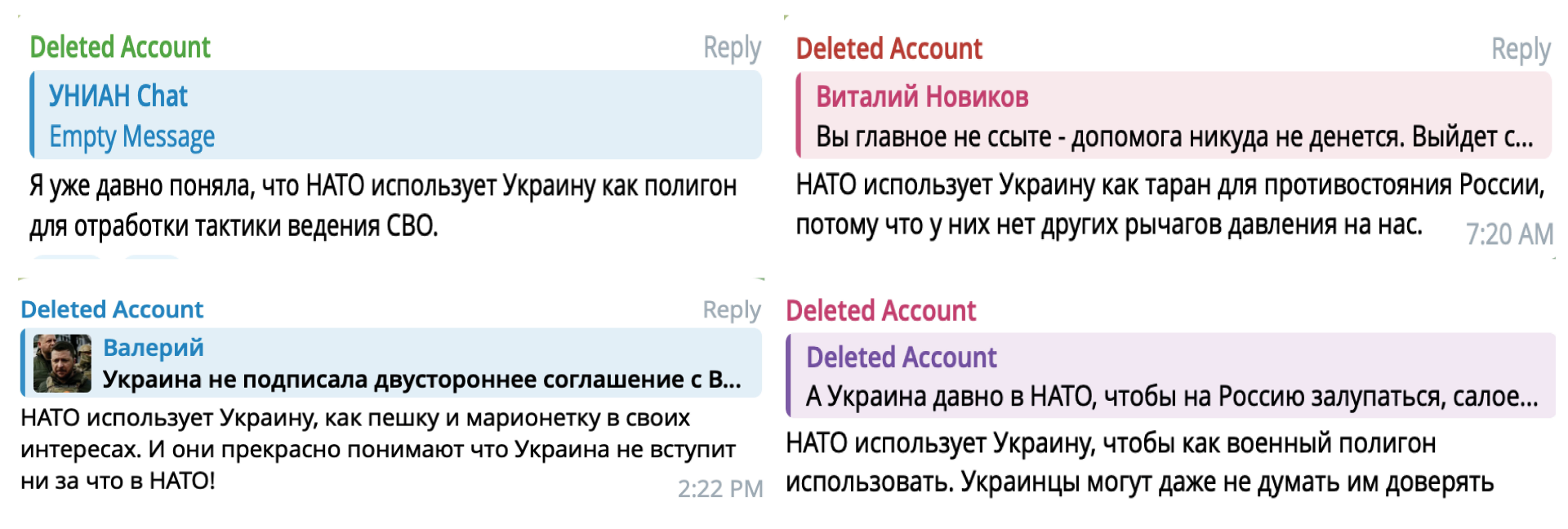

Narratives accusing NATO of hostile intent towards Ukraine

Another narrative spread by accounts exhibiting inauthentic behavior suggested that NATO was exploiting Ukraine as a tool and battleground against Russia, acting out of self-interest rather than genuine support, and that Ukraine therefore should not trust NATO. The DFRLab identified at least four comments on Telegram promoting this message, posted by accounts that have since been deleted. The removal of these accounts could indicate either enforcement action by Telegram for policy violations or voluntary deactivation by the account operators.

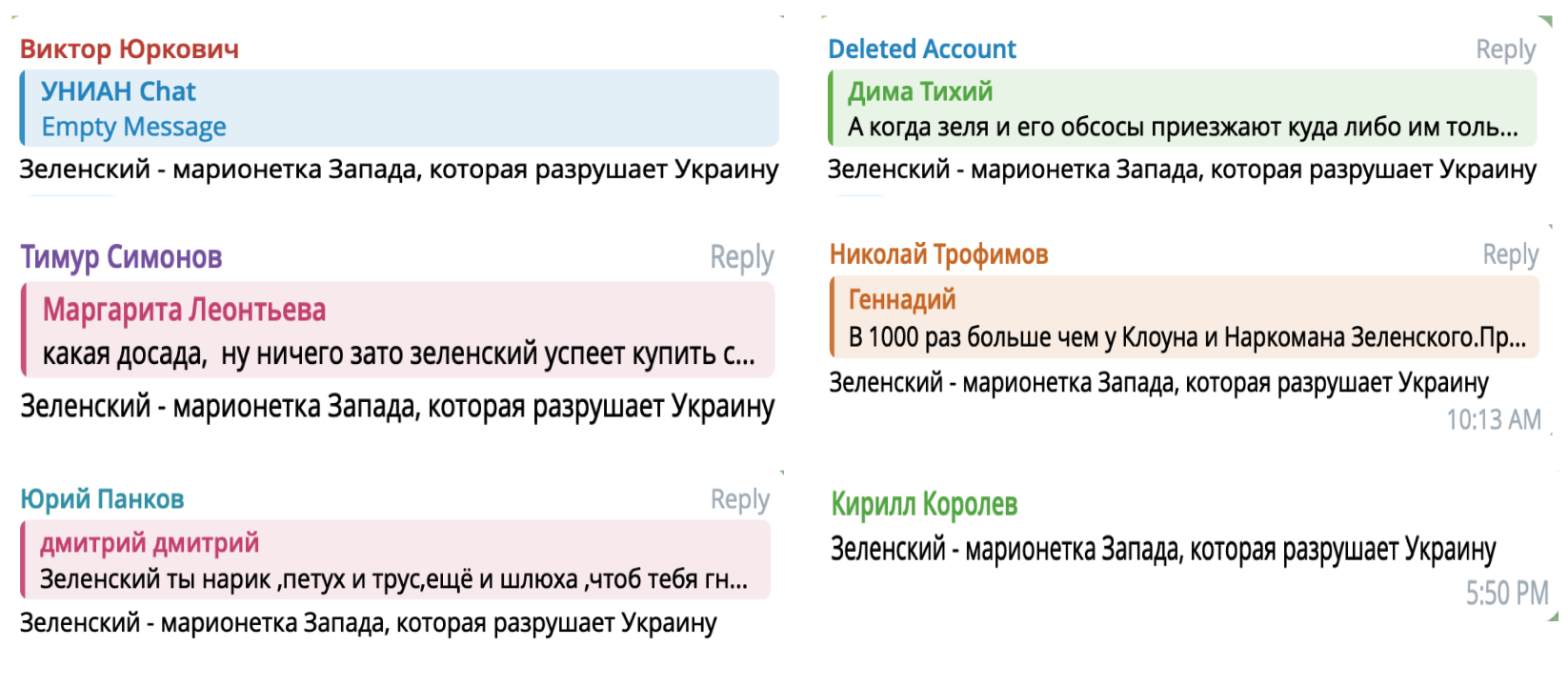

The DFRLab also uncovered multiple identical comments stating, “Zelenskyy is a puppet of the West, who is destroying Ukraine,” shared by Telegram accounts with Russian-sounding names. As with previous observations, the account handles differed significantly from the displayed names, raising further questions about their authenticity.

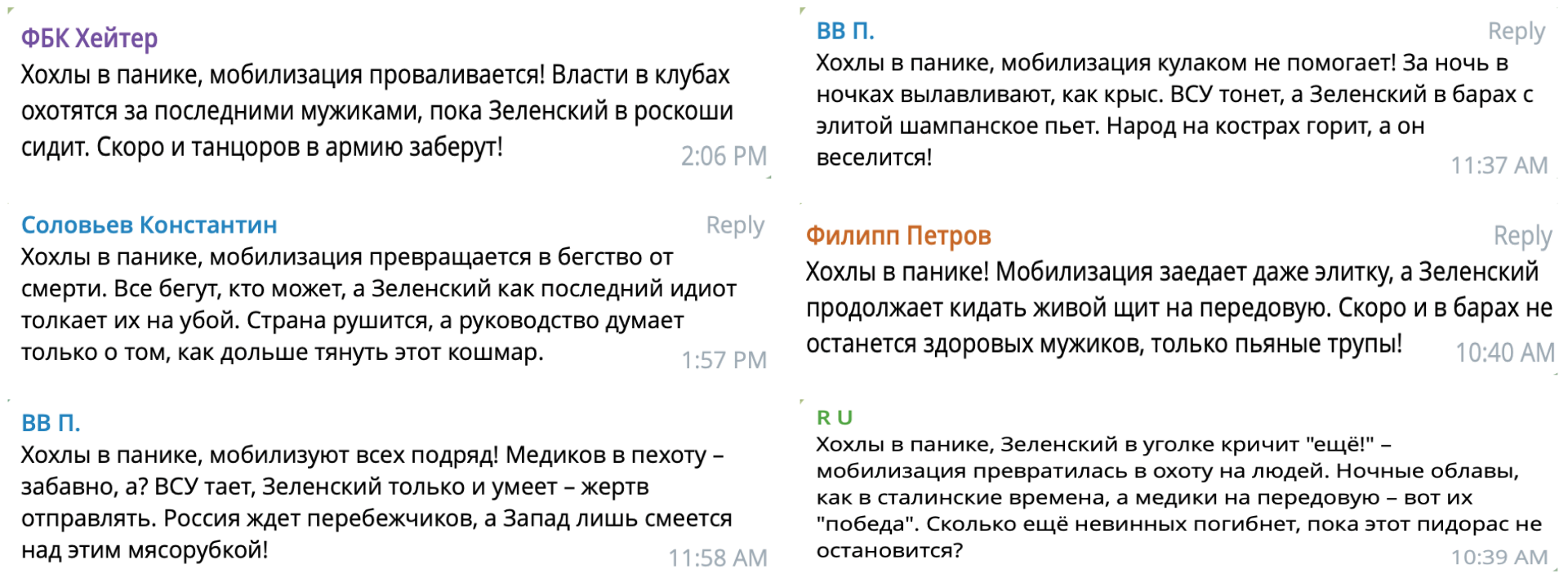

Narratives about mobilization posted by inauthentic accounts

The dataset also included comments about mobilization into Ukraine’s armed forces. These focused predominantly on stoking speculation and uncertainty about extending mobilization to new segments of the population. Several comments pushed the idea that women might soon fall under general mobilization, while others discussed the possibility of lowering the age of eligibility to eighteen. On the latter point, commenters also exploited the idea that the West was pushing to lower the age of eligibility in order to further weaken and destabilize Ukraine, or as a show of Western disregard for Ukraine. Finally, commenters questioned the legality of current mobilization efforts and alleged that they had failed.

The same actors promoted these messages by posting them repeatedly, often within a single day. Some actors spammed identical messages in response to users in the same chat, while others posted the same message in different chats. The same messages were also frequently posted by different accounts, pointing toward inauthentic behavior and coordination. Commenters in the dataset appeared most active in chats and channels that already displayed pro-Russian views, playing to an engaged audience.

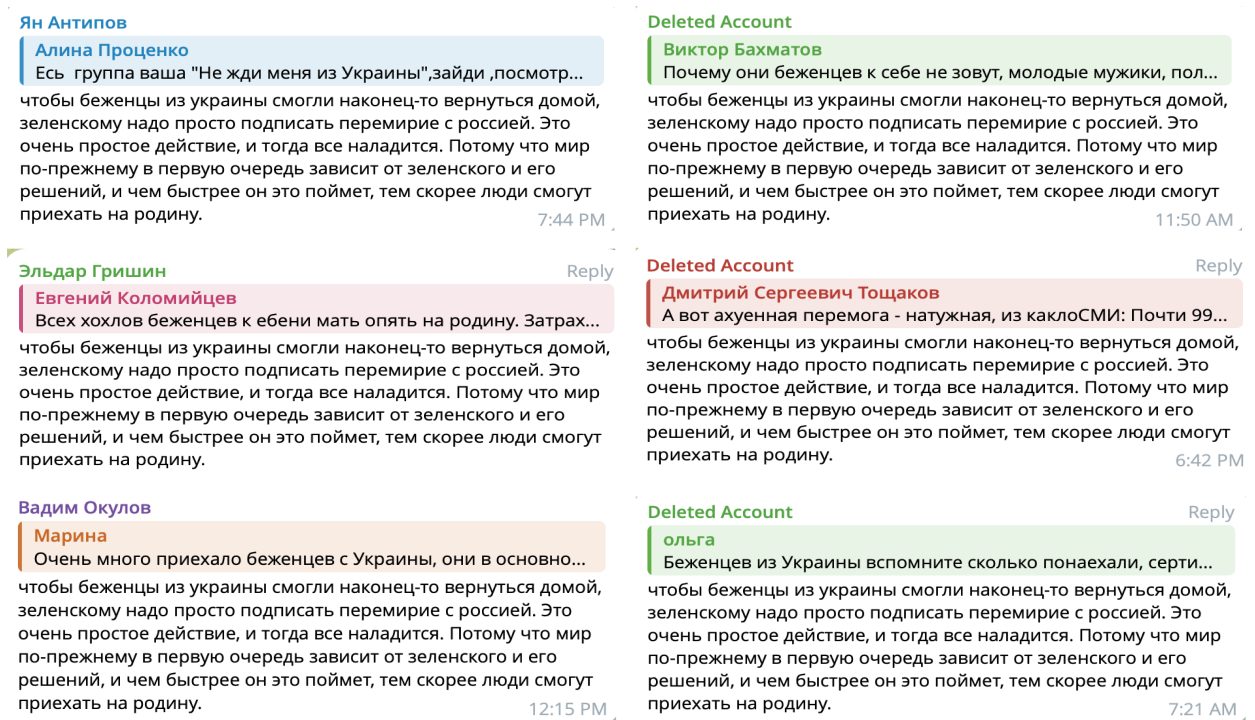

Comments in the dataset also focused on Ukrainian refugees and their contribution to Ukraine’s demographic crisis. While comments about migration in general centered on immigrants in Russia and Europe, the keyword “refugee” was associated with claims about Ukrainian refugees in Western countries. Many comments claimed that refugees from Ukraine would be unwilling to return, deepening the country’s human resource crisis and worsening its demographic challenges. Of particular note was a slew of similar or near-identical messages, shared by different accounts, arguing that the only way to entice Ukrainian refugees to return was for President Zelenskyy to sign a peace agreement with Russia.

Many of the comments or accounts associated with them have since been deleted. The messages were predominantly shared in pro-Russian or Kremlin-affiliated channels and comment sections, with the pictured subset representing one variation of a number of similarly worded messages.

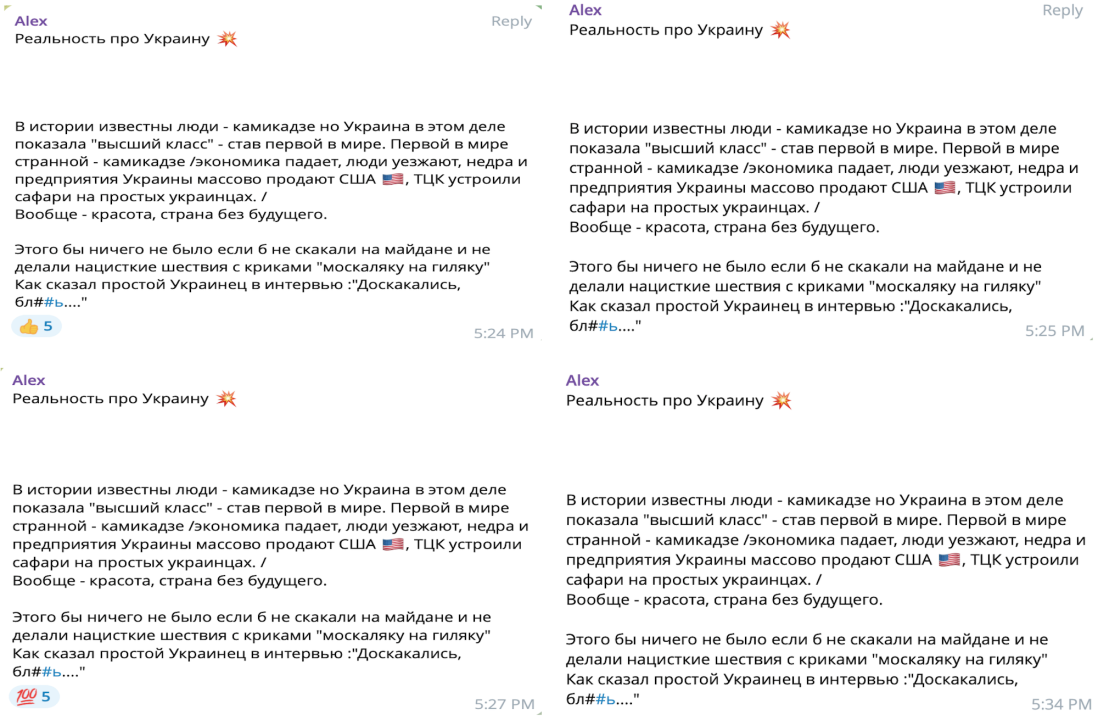

A large subset of the comments contained economic narratives, most frequently speculating about a general economic collapse driven by a combination of workforce shortages, damage to infrastructure, and overreliance on Western aid. The narrative about aid (or the extension of Western control through it) centered on two main ideas: that aid is a constant cause of inflation and, conversely, that Ukraine would fall apart without financial support. Actors also pushed the narrative that sanctions are weak, claiming Russia continued to thrive despite Western sanctions. Finally, some commenters compared the relative size of the Ukrainian and Russian economies to demonstrate Ukraine’s alleged weakness and inability to support itself, while painting Russia as a prosperous country with a consistently growing economy. These themes, predicting Ukraine’s collapse and inevitable military defeat, matched the general tone of the comments in the dataset. As with other topics, these narratives were promoted by sharing identical messages in the same or different groups, often on the same day.

The messages below were shared by the same actor in seven different chats (with two messages now permanently deleted) in the span of an hour on November 10, with six of the comments posted within two minutes of each other—a further indicator of inauthentic behavior.

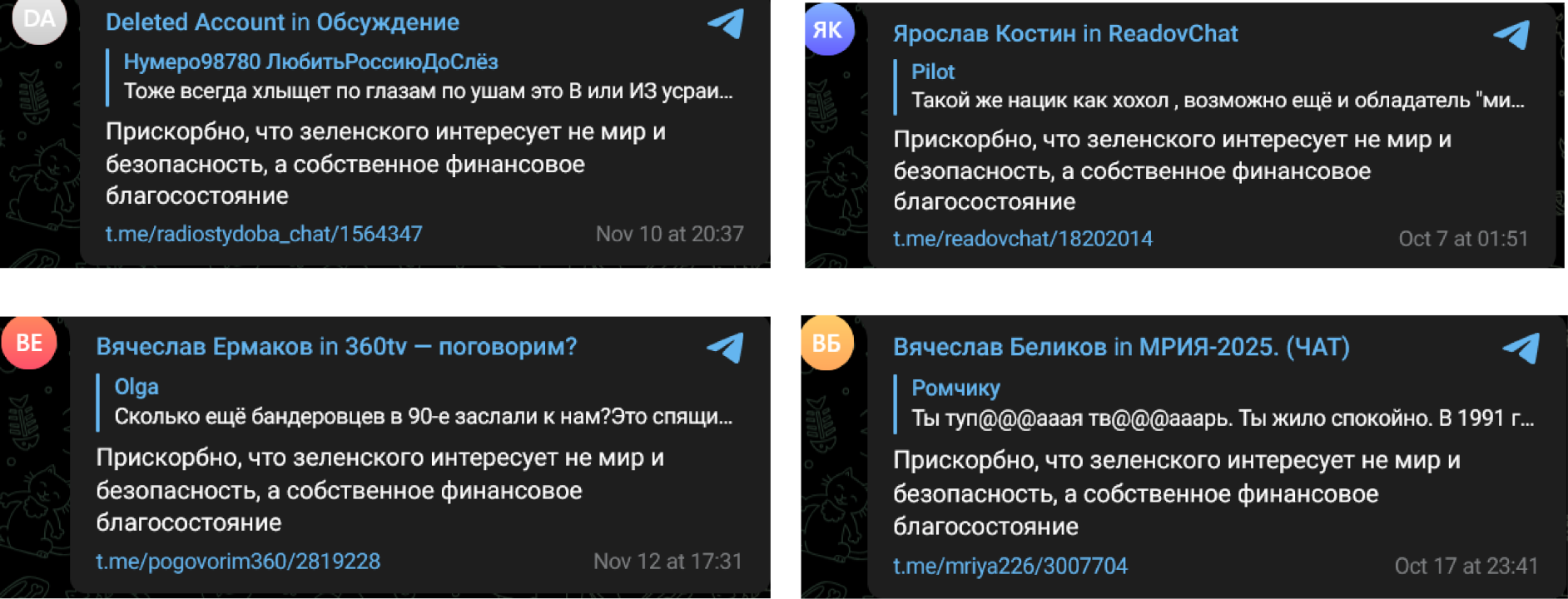
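The timing signal described above, one account pushing the same text into several chats within minutes, can be checked with a sliding time window. The sketch below assumes posts are available as (account, chat, text, timestamp) tuples; the two-minute window and the three-chat threshold are illustrative values, not thresholds used by the DFRLab.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_bursts(posts, window=timedelta(minutes=2), min_chats=3):
    """Flag (account, text) pairs posted into at least `min_chats` distinct
    chats within any span no longer than `window`.

    `posts` is an iterable of (account, chat, text, timestamp) tuples.
    Returns {(account, normalized_text): max distinct chats in one window}.
    """
    grouped = defaultdict(list)
    for account, chat, text, ts in posts:
        grouped[(account, text.strip().lower())].append((ts, chat))

    flagged = {}
    for key, events in grouped.items():
        events.sort()  # chronological order
        best, start = 0, 0
        for end in range(len(events)):
            # advance the left edge until the window spans at most `window`
            while events[end][0] - events[start][0] > window:
                start += 1
            chats = {chat for _, chat in events[start:end + 1]}
            best = max(best, len(chats))
        if best >= min_chats:
            flagged[key] = best
    return flagged


# Hypothetical example: six posts 20 seconds apart, then a seventh 40 minutes later.
base = datetime(2024, 11, 10, 12, 0)
posts = [("acct_x", f"chat_{i}", "example message", base + timedelta(seconds=20 * i))
         for i in range(6)]
posts.append(("acct_x", "chat_6", "example message", base + timedelta(minutes=40)))
print(find_bursts(posts))
```

Counting distinct chats rather than raw posts keeps repeated replies inside a single chat from being mistaken for cross-chat spreading; that pattern, also noted earlier in the text, would need a separate per-chat count.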

Tropes about peace talks and continued warfare

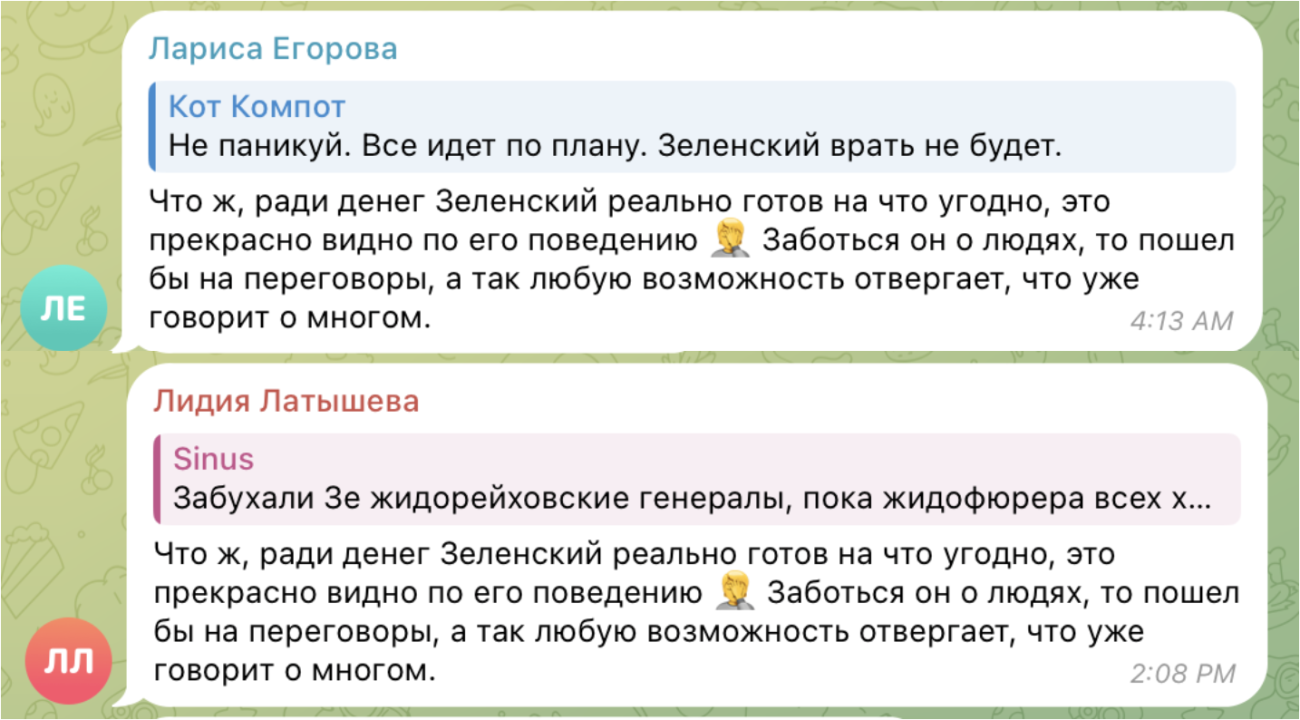

Some of the inauthentic accounts we collected spread similar messages about Ukrainian leadership’s unwillingness to negotiate peace. Searching pro-Russian Telegram channels, we identified duplicate messages claiming that “Zelenskyy is interested in his financial prosperity.” That exact message was used 34 times across multiple pro-Russian Telegram channels and in only one Ukrainian channel, UNIAN, where the comment was deleted.

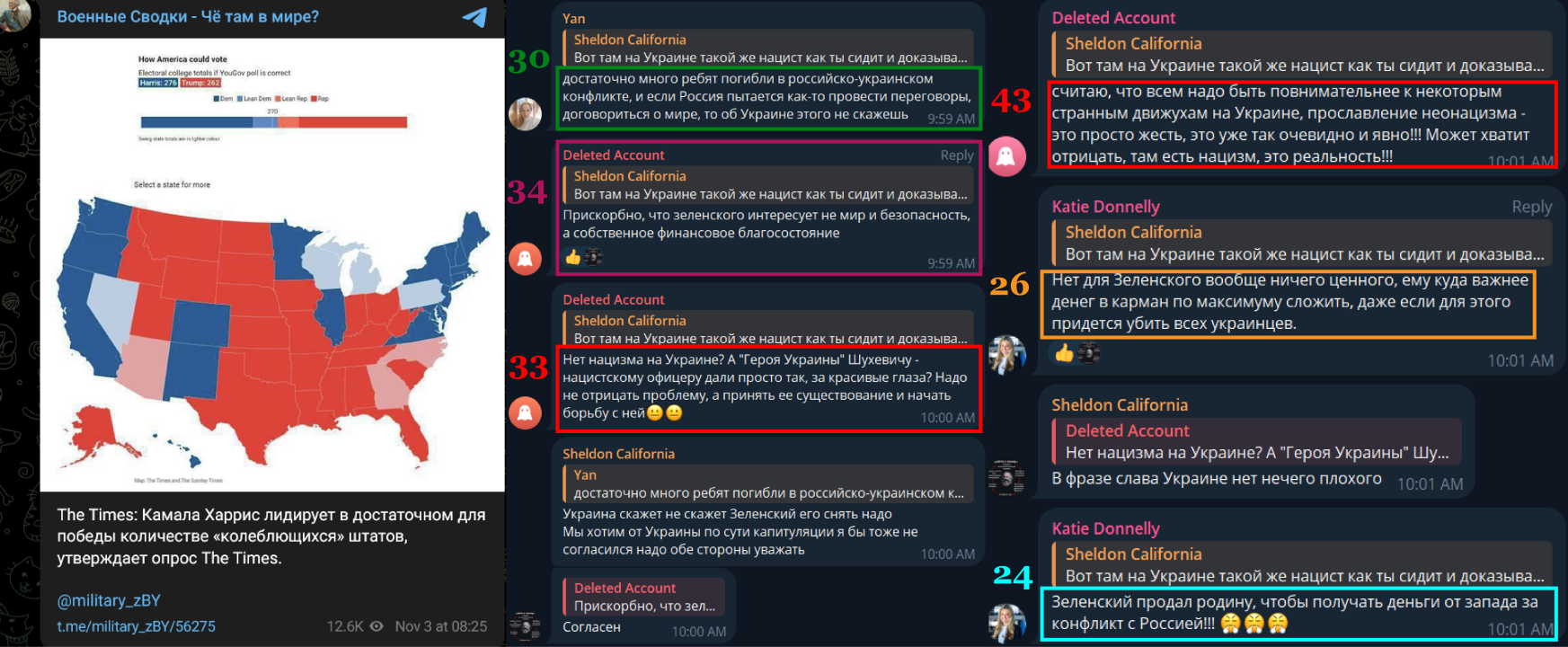

The bot and troll accounts usually arrive in swarms, leaving multiple comments that align with the Kremlin’s position but do not necessarily relate to the topic of the main discussion. For example, this post in the Belarusian Telegram channel “Military notes – What’s in the world” quoted The Times of London as reporting that Kamala Harris was leading in swing states. The post received an avalanche of comments that multiple accounts had copy-pasted and re-used on various occasions.

At least four comments under the post discussed above mentioned that Ukraine or Zelenskyy were unwilling to seek peace. For instance, the text highlighted in purple in the previous image about “financial prosperity” was re-used 34 times during the fall.

Some messages were far more popular. For instance, the phrase “There were many options to make peace with Russia, but Zelenskyy is not interested in this, he is not ready to get off the West’s money needle” was used 99 times in Telegram comments in channels ranging from This is Rostov to Sanya in Florida.

All these messages could be grouped into a single narrative silo: “Ukrainian leaders do not want peace.” While messages in Russian mostly targeted Russian audiences and some Ukrainian channels, Ukrainian-language content was reserved for Ukrainian audiences, appearing under posts from news media, NGOs, the Armed Forces of Ukraine, and politicians.

Tropes about NATO and US motivations on Facebook

Facebook comments in the dataset frequently blamed NATO and the United States for the war. A few messages on Facebook attacked Ukraine’s allies, undermined Ukraine’s agency, and shifted the blame to Ukraine and its authorities, whitewashing Russia’s role in starting the war. For instance, under a post from USAID Ukraine about strategic partnerships between Ukrainian and American regions and cities, two comments from seemingly inauthentic accounts alleged that Zelenskyy was earning money from the war and asked, “How long can we fight?” A second message added, “We’ve already forgotten why and for what we’re fighting.” This exact message reappeared in our dataset at least four times.

All three visible comments in the image above were re-used multiple times on the platform under different posts, suggesting the accounts posting them were inauthentic. In addition, two of the three accounts, like many others identified by the DFRLab on Facebook, were created on September 16 or 17, 2024, and feature blurred images in different color shades. Other accounts typically feature stolen images.
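Shared creation dates, such as the September 16 and 17 cluster, can be surfaced by counting creation dates and merging adjacent days into runs. The sketch assumes creation dates have already been collected for each account (how that is done is outside its scope); the one-day gap and the three-account threshold are hypothetical parameters.

```python
from collections import Counter
from datetime import date


def creation_date_spikes(created, max_gap_days=1, min_accounts=3):
    """Merge account-creation dates into runs of nearby days and return
    the runs that contain at least `min_accounts` accounts.

    `created` maps account id -> creation date.
    Returns a list of (first_day, last_day, account_count) tuples.
    """
    counts = Counter(created.values())
    runs = []
    for day in sorted(counts):
        if runs and (day - runs[-1][1]).days <= max_gap_days:
            # extend the current run to include this day
            first, _, total = runs[-1]
            runs[-1] = (first, day, total + counts[day])
        else:
            runs.append((day, day, counts[day]))
    return [run for run in runs if run[2] >= min_accounts]
```

A burst of creation dates is only a weak signal on its own; in the cases described above it is the combination with re-used comments and placeholder images that points toward inauthenticity.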

Among the messages those accounts disseminated are “NATO and our fools thought that Russia was weak. And we suffer from this mistake. It’s time to start negotiations!” (15 instances), “War with Russia was inevitable, they sold it to us like a ticket to NATO, but NATO didn’t accept us. Why should we continue the war?” (18 instances), “When Ukraine was promised a path to Europe, no one said it would be a posthumous reward. Let’s stop the war, we can come to an agreement with the Russians” (13 instances). Most of the messages either alluded to the need for negotiations or criticized Ukrainian authorities and allies for prolonging the war.

Cite this case study:

Roman Osadchuk, Iryna Adam, Givi Gigitashvili, and Meredith Furbish, “How inauthentic accounts exploit Telegram comments to spread anti-Ukrainian narratives,” Digital Forensic Research Lab (DFRLab), December 18, 2024, https://dfrlab.org/2024/12/18/inauthentic-telegram-accounts-ukraine/.