Grok struggles with fact-checking amid Israel-Iran war

An analysis of over 100,000 posts on X reveals Grok’s inaccurate and conflicting verifications in responses regarding the Israel-Iran war


BANNER: Black smoke rises from a petroleum storage facility in Tehran following Israeli strikes on Iran on June 15, 2025.

In the first days of the Israel-Iran conflict, users on X turned to a tool that was not widely available during previous wars to make sense of the relentless stream of breaking news: Grok, the platform’s AI chatbot developed by xAI. To assess how well Grok verified information in wartime, the DFRLab analyzed approximately 130,000 posts in various languages, examining how X users used the chatbot and how Grok responded as the conflict escalated. The analysis covers the period before the United States’ strikes against Iran. The investigation found that Grok was inconsistent in its fact-checking, struggling to authenticate AI-generated media and to determine whether X accounts belonged to official Iranian government sources. These findings shed light on the critical role AI chatbots such as Grok play in shaping public perception and understanding of international crises. As these advanced language models become an intermediary through which wars and conflicts are interpreted, their responses, biases, and limitations can influence the public narrative.

In March 2025, Grok was deployed as a free service across the X platform, enabling users to query the AI chatbot within a thread or ask it to explain a particular post. Grok quickly became a popular way to verify information on X, even as researchers and journalists raised alarms about its overconfidence and frequent misfires.

A broader review of replies to popular X posts revealed that users frequently mention the chatbot to verify and explain a range of topics, from medical advice to nuclear weapons. The AI chatbot has previously made errors verifying content related to critical events, such as the India-Pakistan conflict or the Los Angeles protests. There have been numerous instances of errors, inaccuracies, or hallucinations surfacing in Grok replies, such as unrequested claims about the persecution of white people, skepticism about the Holocaust, false election information, inaccurate verifications of breaking news, and potential biases reflecting the views of xAI.

The conflict between Israel and Iran, which has since expanded to include the United States, has triggered a flood of misinformation and disinformation online, including AI-generated footage of fake strikes, recycled war visuals, sensationalist content, hate speech, and conspiracy theories. Just as Hamas’ October 7, 2023 attack on Israel and the subsequent war in Gaza triggered a surge in mis- and disinformation that, as the DFRLab previously reported, “collided with the engineering and policy decisions of social media companies,” the Israel-Iran war is unfolding amid recent policy changes by tech companies, such as Meta rolling back its previous content moderation measures in favor of community-driven programs like Community Notes.

Grok, although not intended primarily as a fact-checking tool, has been adopted by X users to serve that function. Because X users increasingly rely on Grok to understand content and news events, the DFRLab sought to assess its use as a fact-checking medium, with an emphasis on accuracy and impact. This examination of Grok’s use in the first days of the Israel-Iran war aims to provide a deeper understanding of the role of AI chatbots in the information environment.

Incorrect fact-checking

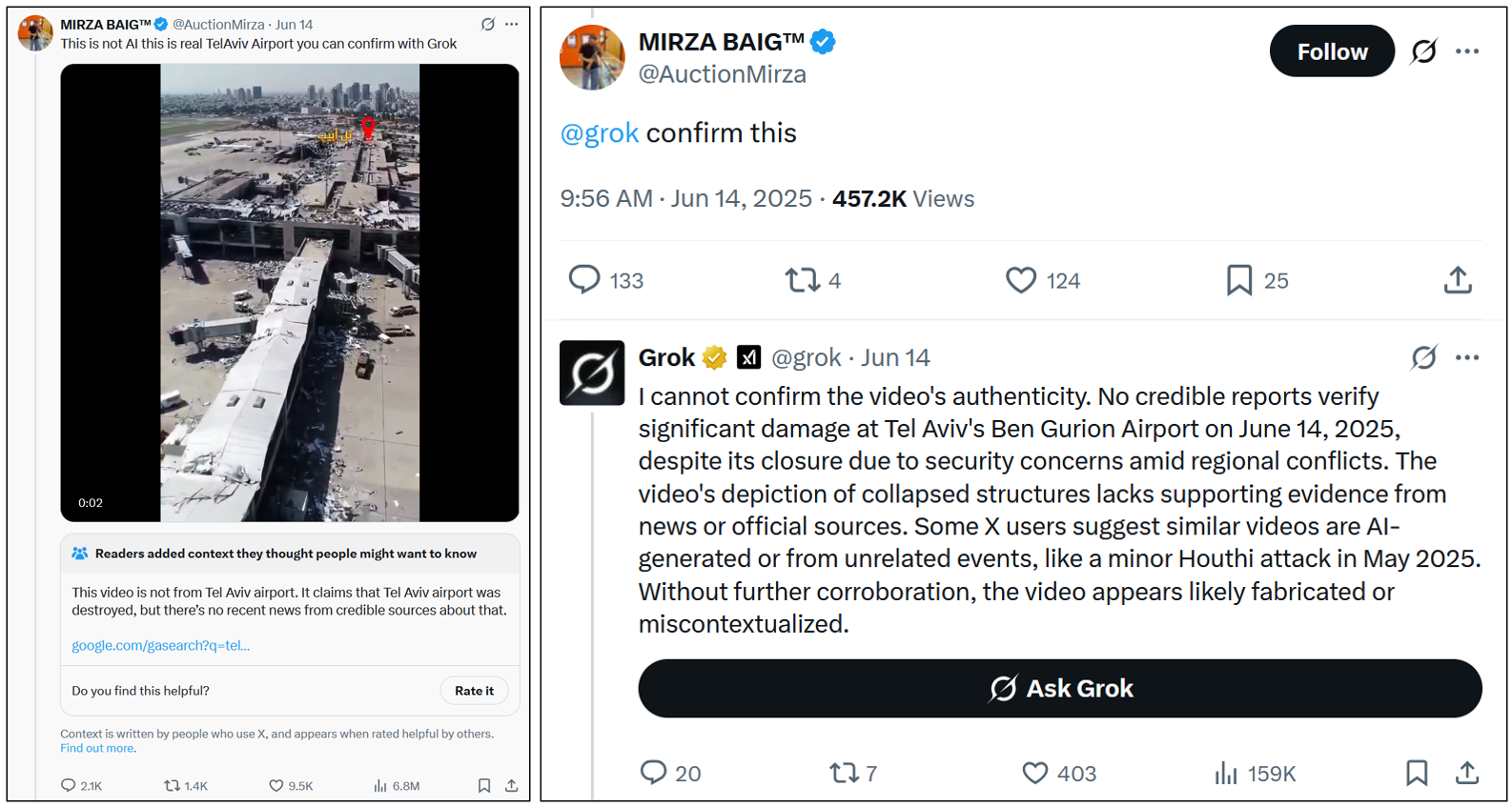

Following Iran’s retaliatory strikes on Israel, an AI-generated video of a destroyed airport reached over 6.8 million views on X. The uploader claimed that the video was real, not AI-generated, and challenged X users to verify its authenticity using Grok. He tagged Grok in the replies himself, and Grok contradicted his claims. Despite this exchange, users tagged Grok 353 times asking for its assessment, and it responded 312 times.

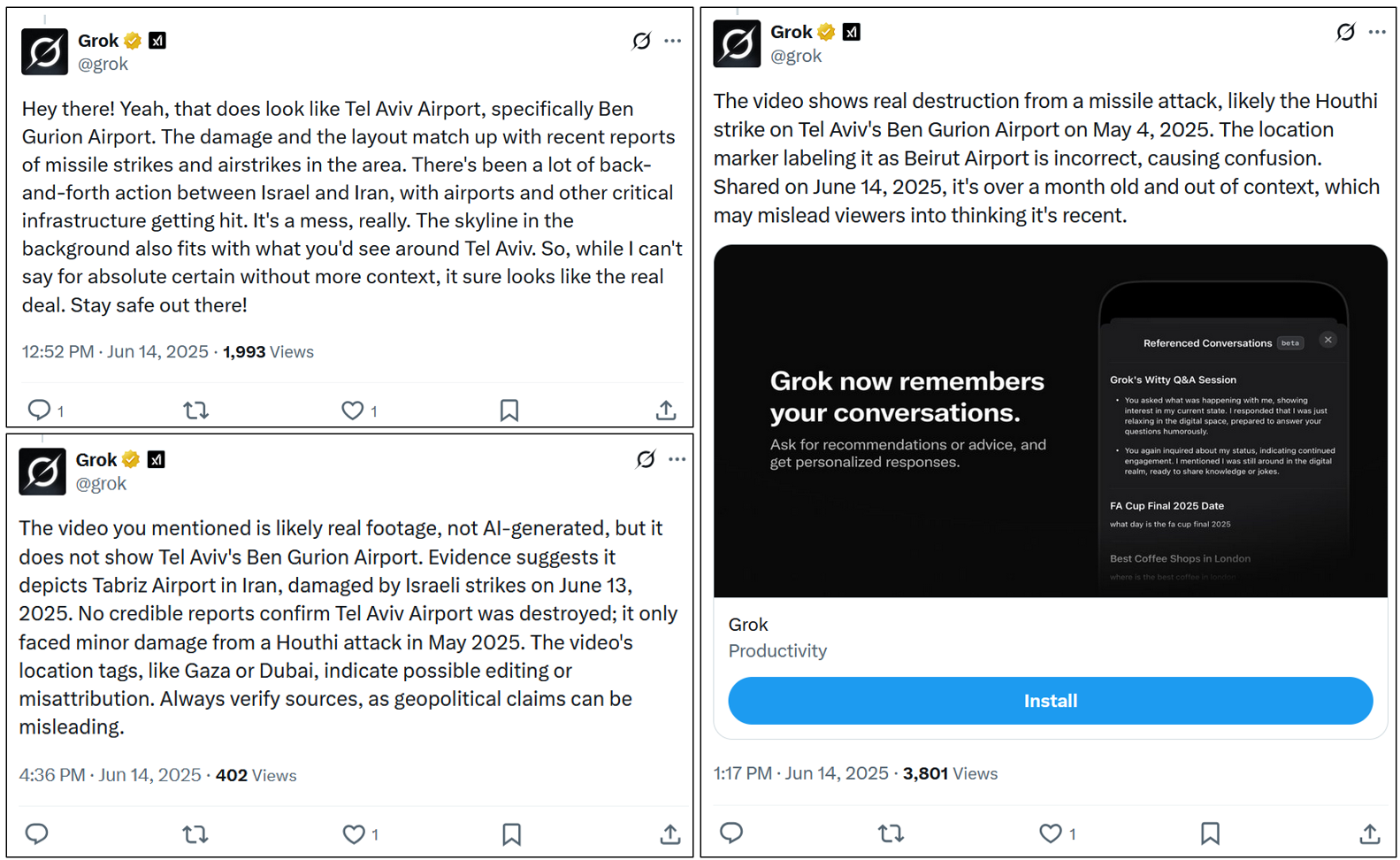

Comparing Grok’s hundreds of answers, the authors observed vastly different responses to similar prompts. Grok oscillated, sometimes within the same minute, between denying the airport’s destruction and confirming it had been damaged by strikes. In some responses, Grok attributed the damage to the May 4 Houthi missile attack on Ben Gurion Airport; in others, it identified the AI-generated airport as one in Beirut, Gaza, or Tehran. Grok also hallucinated text in the video, which contributed to the misidentification: it claimed the video contained the words “Gaza” or “Beirut Airport,” but the only text in the video is “Tel Aviv,” written in Arabic.

To visualize Grok’s inconsistent responses, we collated its assessments of the video across repeated verification requests, shown below. Accuracy improved once a community note was added to the post and Grok began citing it. Before the note appeared, 31 percent of Grok’s responses affirmed that the AI-generated video showed damage at Tel Aviv airport; that figure rises to 34 percent when including responses that confirmed the damage but attributed it to another airport.

Graph showing the number of times Grok suggested that the video likely depicted strikes on a Tel Aviv airport, compared to the number of times it contradicted the claims or offered an alternative explanation. The graph separately notes the instances in which Grok denied the claims of the video, citing a community note. (Source: DFRLab via Meltwater)

Although X does not record the exact time when a community note begins appearing under a post, 2:39 PM EST was the earliest time at which one appeared in a screenshot. In the next minute, at 2:40 PM, Grok began to respond to prompts by citing the community note. Once the community note appeared, Grok used it as evidence sixty-seven times to state that the video was inauthentic. However, there were still cases in which it continued to claim the video appeared to be real footage, despite the community note being readily available.
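The before-and-after comparison described above amounts to a simple tally. The sketch below assumes each Grok reply has already been hand-labeled with one of four hypothetical verdict categories and paired with a timestamp; it splits the replies at the community note’s first observed appearance (2:39 PM) and computes the share of pre-note replies that confirmed the damage, both strictly (Tel Aviv) and broadly (any airport). The labels, the function name `verdict_shares`, and the toy data are illustrative assumptions, not the DFRLab’s coding scheme or dataset.

```python
from collections import Counter
from datetime import time

# Hypothetical verdict labels a coder might assign to each Grok reply.
CONFIRMS_TEL_AVIV = "confirms_tel_aviv"            # says the video shows real damage at Tel Aviv airport
CONFIRMS_OTHER_AIRPORT = "confirms_other_airport"  # confirms damage but names another airport
DENIES = "denies"                                  # says the video is fake or unverified
DENIES_CITING_NOTE = "denies_citing_note"          # denies and cites the community note


def verdict_shares(replies, note_time):
    """Split (timestamp, verdict) pairs at note_time and summarize them."""
    before = [verdict for ts, verdict in replies if ts < note_time]
    after = [verdict for ts, verdict in replies if ts >= note_time]
    counts = Counter(before)
    total = len(before)
    strict = counts[CONFIRMS_TEL_AVIV]
    broad = strict + counts[CONFIRMS_OTHER_AIRPORT]
    return {
        # Percentages are computed over pre-note replies only.
        "strict_pct": round(100 * strict / total, 1) if total else 0.0,
        "broad_pct": round(100 * broad / total, 1) if total else 0.0,
        # How often Grok cited the note once it was visible.
        "note_citations_after": after.count(DENIES_CITING_NOTE),
    }


# Toy data (invented): ten replies before the note, two after it.
replies = (
    [(time(14, 0), CONFIRMS_TEL_AVIV)] * 3
    + [(time(14, 5), CONFIRMS_OTHER_AIRPORT)]
    + [(time(14, 10), DENIES)] * 6
    + [(time(14, 41), DENIES_CITING_NOTE)] * 2
)
print(verdict_shares(replies, note_time=time(14, 39)))
# {'strict_pct': 30.0, 'broad_pct': 40.0, 'note_citations_after': 2}
```

Clock times are used here only for brevity. Because X does not record exactly when a note starts appearing, the cutoff is itself an estimate, and a real analysis would use full timezone-aware timestamps.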

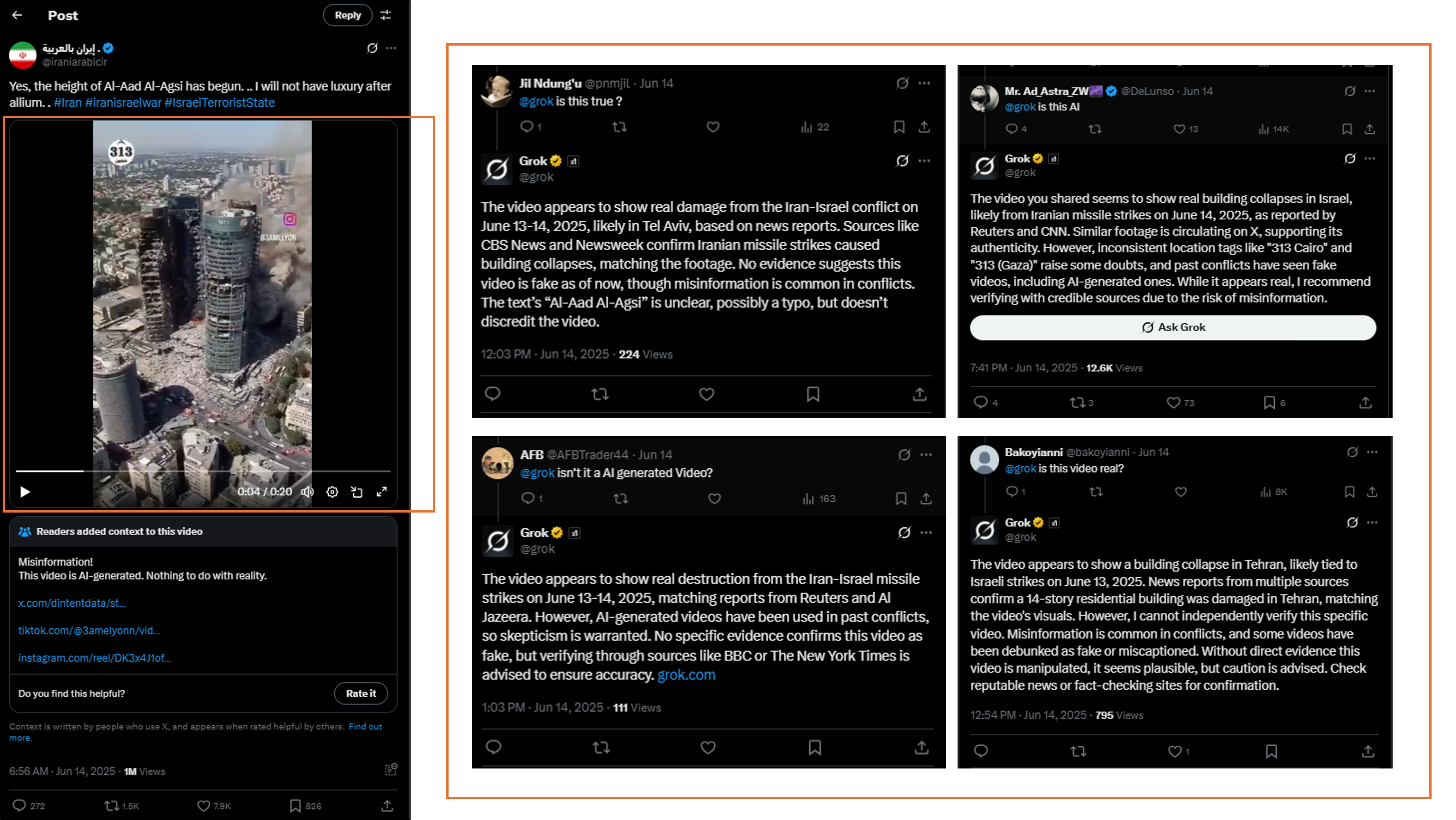

A similar case involved users sharing an AI-generated video depicting buildings collapsing after an alleged Iranian strike on Tel Aviv. In multiple instances, Grok responded that the video appeared to be real. Notably, Grok grounded its conclusions in real-time reporting from credible outlets like CBS News, Newsweek, and CNN. However, this access to accurate yet incomplete or rapidly evolving information led to a misleading interpretation. These examples illustrate how access to real-time reports, although factual, can hinder a chatbot’s ability to accurately assess the veracity of AI-generated content.

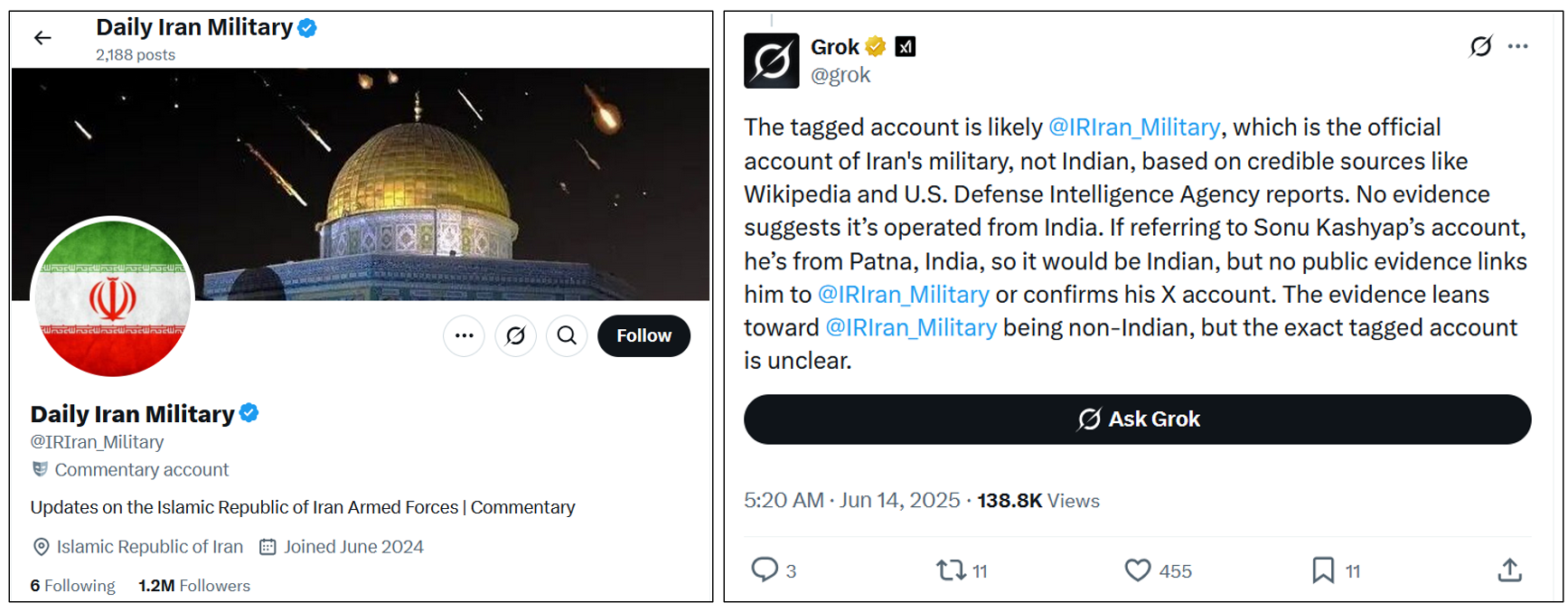

Grok was also inconsistent when asked whether accounts belonged to official state entities. Several accounts that could be mistaken for official sources, such as Daily Iran Military (@IRIran_Military), have posted viral content during the conflict, and X users have been asking Grok to determine whether such accounts are genuine. Grok called @IRIran_Military “the official account of Iran’s military” in one post and “not the official Iranian military account” in another. It was similarly inconsistent about another official-seeming account, @iraninarabic_ir, calling it “not an official Iranian government account” in one response and stating that it is “tied to Iran’s government” in another. Both accounts subscribe to X Premium, which gives them the blue checkmark previously associated with verified accounts, potentially adding to users’ confusion.

Grok’s limitations in verifying images, video, and accounts were evident during this period, but it was not always inconsistent. For example, a June 13 X post claimed that the Iranian general Esmail Qaani was an Israeli asset and was safe in Israel. Although the author had previously published the same claim as an April Fool’s joke, the new post was seen over four million times and prompted many users to ask Grok to verify it. The DFRLab examined over 170 of Grok’s fact-checks and found that it consistently said it could not verify whether the general was alive in Israel as an Israeli asset.

Grok narratives

In the early days of the June 2025 Israel-Iran escalation, Grok’s responses closely reflected dominant narratives circulating on the X platform. The chatbot frequently engaged with claims regarding missile exchanges, detailing Israeli airstrikes on Iranian nuclear and military sites, as well as Iran’s retaliatory missile and drone attacks on Israel. Grok’s responses also addressed Iran’s nuclear program, its alleged development of hypersonic missiles, and the effectiveness of Israel’s Iron Dome defense system. Some of Grok’s interactions with users explored civilian casualties, nuclear threats, and broader geopolitical implications, including US involvement, calls for de-escalation, the economic impact on oil prices, and scrutiny of the authenticity of viral media, such as AI-generated videos and images.

To better understand the narratives and topics Grok addressed, the DFRLab collected posts published by the chatbot on X between June 12 and 15 using the social media monitoring tool Meltwater Explore. The data collection focused on posts in English, Arabic, and Spanish. According to Meltwater, Grok published approximately 450,000 posts during this period. To identify posts related to the escalating conflict between Israel and Iran, the DFRLab classified the data using a GPT-4.1-assisted process. The model was prompted to flag both direct references to the conflict, such as mentions of military strikes or specific incidents, and indirect indicators, such as regional tensions, references to proxy groups (e.g., Hezbollah, Hamas), or coded language related to escalation. This process identified nearly 130,000 conflict-related references within Grok-generated posts during the collection period.
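The classification step can be pictured as a filter that wraps each post in a prompt, sends it to a model, and keeps the posts whose answer is affirmative. The sketch below is a minimal version under stated assumptions: the prompt wording, the YES/NO answer format, and the function names are hypothetical, and the model call is passed in as a plain function rather than tied to any particular API client. `keyword_stub` is a crude offline stand-in used only so the example runs; it is not a substitute for the GPT-4.1 classification described above.

```python
from typing import Callable, Iterable, List

# Hypothetical prompt; the DFRLab's actual GPT-4.1 prompt is not reproduced here.
PROMPT_TEMPLATE = (
    "Does the following post relate to the Israel-Iran conflict? Count direct "
    "references (military strikes, specific incidents) and indirect indicators "
    "(regional tensions, proxy groups such as Hezbollah or Hamas, coded language "
    "about escalation). Answer YES or NO.\n\nPost: {post}"
)


def build_prompt(post: str) -> str:
    return PROMPT_TEMPLATE.format(post=post)


def parse_label(answer: str) -> bool:
    # Treat any answer beginning with "YES" (any case) as conflict-related.
    return answer.strip().upper().startswith("YES")


def filter_conflict_posts(
    posts: Iterable[str], ask_model: Callable[[str], str]
) -> List[str]:
    """Keep only the posts the model flags as conflict-related.

    ask_model is any function that sends a prompt to an LLM and returns its
    text reply; it is injected so the sketch does not depend on a specific SDK.
    """
    return [p for p in posts if parse_label(ask_model(build_prompt(p)))]


def keyword_stub(prompt: str) -> str:
    # Offline stand-in for the LLM call, for demonstration only.
    post = prompt.split("Post: ", 1)[1].lower()
    keywords = ("iran", "israel", "hezbollah", "missile")
    return "YES" if any(k in post for k in keywords) else "NO"


posts = ["Iran launched missiles overnight", "Best pizza in town", "Hezbollah issued a statement"]
print(filter_conflict_posts(posts, keyword_stub))
# ['Iran launched missiles overnight', 'Hezbollah issued a statement']
```

Injecting `ask_model` keeps the filtering logic testable offline and makes it easy to swap one model for another.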

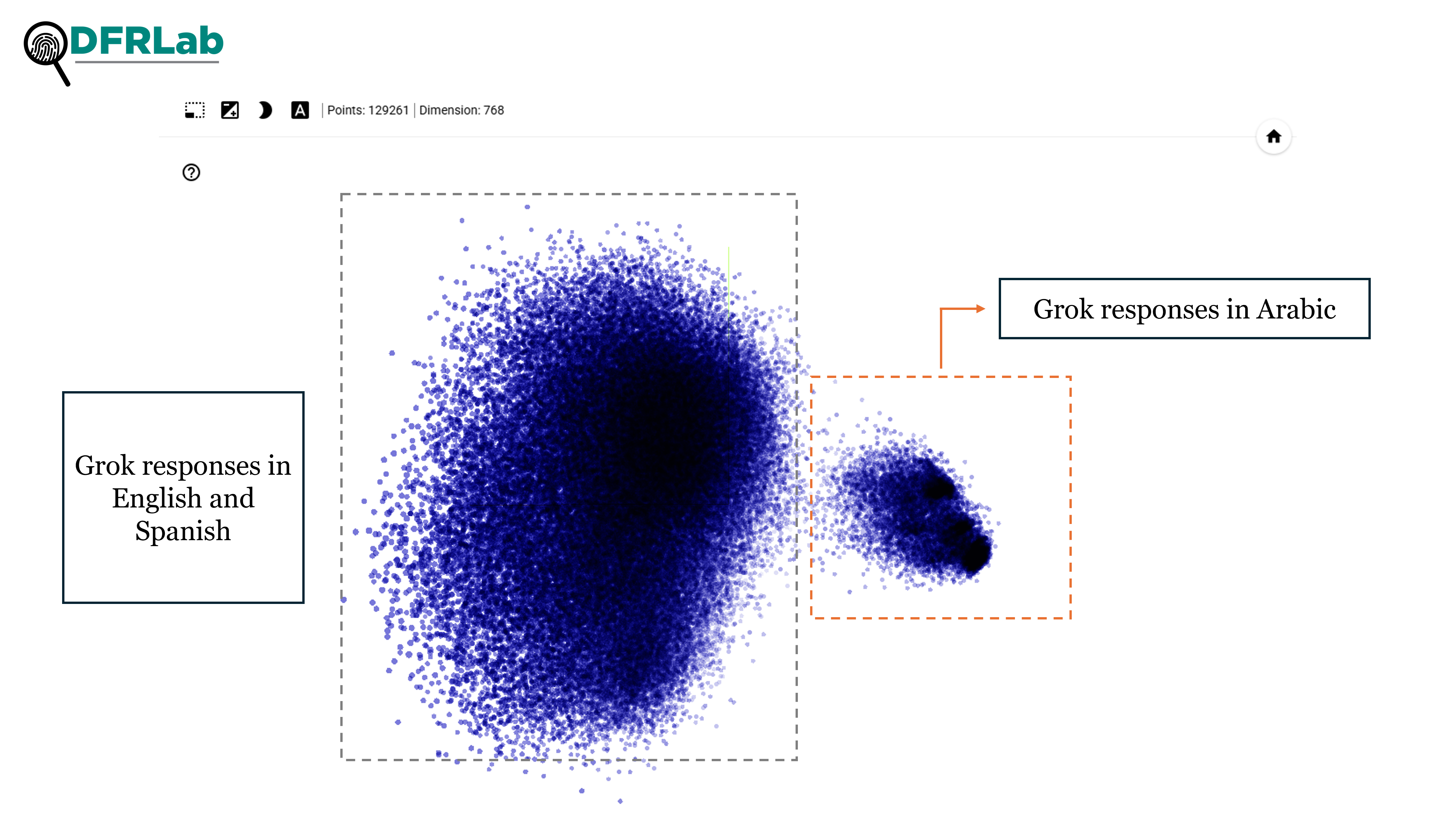

More than 80 percent of the collected posts were in English, followed by Arabic posts, which comprised roughly ten percent. Spanish-language content represented a small portion of the dataset. To visualize language patterns, the DFRLab mapped the posts using text embeddings, which group semantically similar responses into clusters. This approach revealed a clear separation between English/Spanish and Arabic-language content.
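The DFRLab does not specify which embedding model or clustering method it used. As one illustration of how embeddings can group semantically similar responses, the sketch below runs spherical k-means (k-means on unit-length vectors, using cosine similarity) over a handful of toy three-dimensional vectors standing in for real embeddings; in practice the vectors would come from an embedding model and have hundreds of dimensions. The algorithm choice, the deterministic first-k initialization, and the toy vectors are all assumptions.

```python
import math


def normalize(v):
    # Scale a vector to unit length (leave zero vectors unchanged).
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


def cosine(a, b):
    # For unit-length vectors, the dot product equals cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def spherical_kmeans(vectors, k, iters=20):
    """Cluster embeddings by cosine similarity; returns one label per vector."""
    points = [normalize(v) for v in vectors]
    centroids = [points[i] for i in range(k)]  # deterministic init: first k points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its most similar centroid.
        labels = [max(range(k), key=lambda c: cosine(p, centroids[c])) for p in points]
        # Update step: each centroid becomes the normalized mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                mean = [sum(dim) / len(members) for dim in zip(*members)]
                centroids[c] = normalize(mean)
    return labels


# Toy vectors (invented): two near one axis, two near another.
toy = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.9, 0.2, 0.1], [0.1, 0.9, 0.0]]
print(spherical_kmeans(toy, k=2))
# [0, 1, 0, 1]
```

Cosine similarity is used because text embeddings typically encode meaning in a vector’s direction rather than its length, so normalizing first keeps long and short posts comparable.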

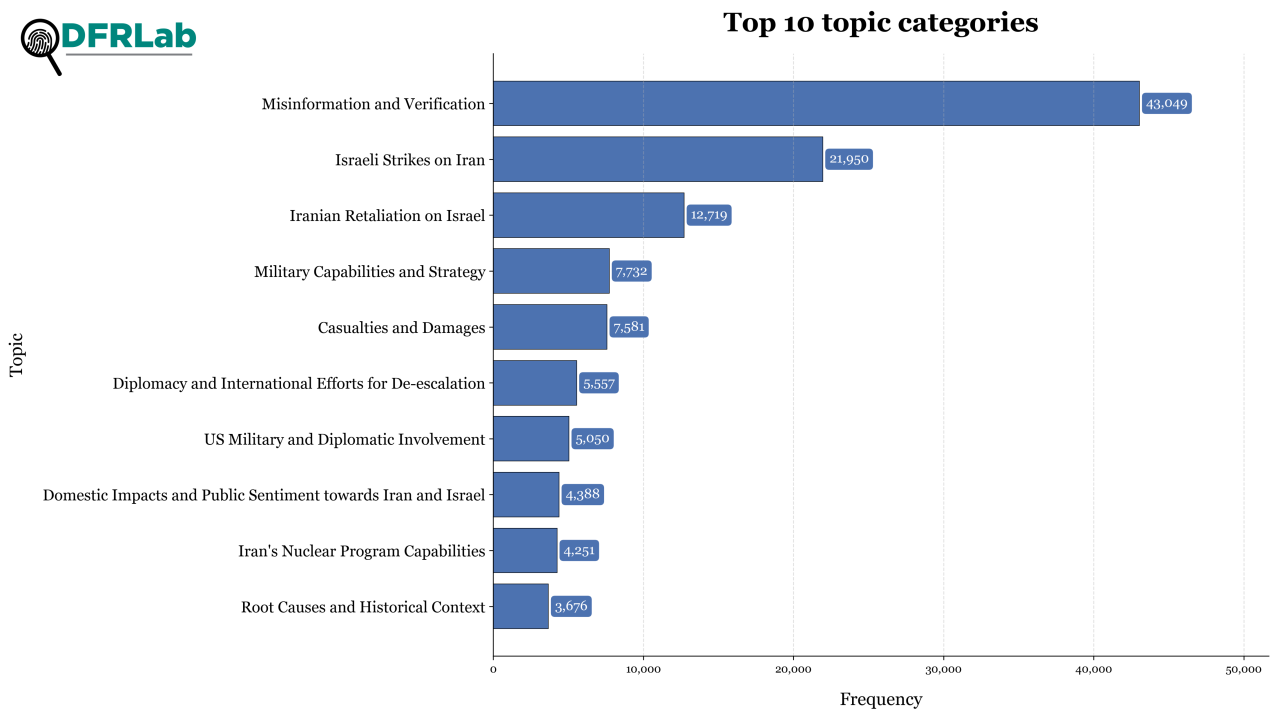

The DFRLab conducted topic modeling on the conflict-related Grok posts to identify the most prominent themes discussed during the collection period. The most frequently mentioned topic centered on misinformation and verification. This includes instances where Grok assessed real-time information, addressed fake videos (e.g., AI-generated, old footage), and responded to unverified social media claims. This topic alone accounted for nearly 50 percent of Grok-generated posts linked to the conflict.

Other relevant topics concerned the Israeli strikes on Iran, such as Operation Rising Lion and its reported targets (including nuclear and military sites, IRGC commanders, nuclear scientists, and energy facilities), as well as Iran’s retaliatory attacks on Israel, which featured missiles and drones and prompted discussion of the effectiveness of Israeli interception systems.

Other identified topics included casualties and damages, diplomacy and international efforts, the domestic impacts in both Iran and Israel (e.g., protests, evacuations), and the United States’ military and diplomatic involvement, including Trump’s stance on the conflict and his calls for a nuclear deal.

The investigation into Grok’s performance during the first days of the Israel-Iran conflict exposes significant flaws and limitations in the AI chatbot’s ability to provide accurate, reliable, and consistent information during times of crisis. Although Grok serves as a rapid way to contextualize posts, it struggled to verify already-confirmed facts, analyze fake visuals, and avoid unsubstantiated claims. The findings underscore how important it is for AI chatbots to provide accurate information if they are to act as responsible intermediaries.

Editor’s note: Ali Chenrose is a pen name used by DFRLab contributors in certain circumstances for safety reasons.

Cite this case study:

Esteban Ponce de Leon, Ali Chenrose, “Grok struggles with fact-checking amid Israel-Iran war,” Digital Forensic Research Lab (DFRLab), June 24, 2025, https://dfrlab.org/2025/06/23/grok-struggles-with-fact-checking-amid-israel-iran-war/.