Inauthentic X accounts targeted American and Canadian politicians amid student protests

The accounts pushed an anti-Qatar campaign and messages about Islamism

BANNER: In response to fears of a raid by the Detroit Police Department to clear the Wayne State University pro-Palestine student encampment in Detroit, MI, on May 27, 2024, student activists held a rally, teach-ins, and poster-making to garner larger community support. (Source: Adam J. Dewey/NurPhoto via Reuters)

A network of over fifty likely inauthentic X accounts targeted US politicians in April and May 2024 with narratives accusing Qatar of sponsoring disorder during student protests at US universities. The network also targeted Canadian politicians with different messages related to Islamism. The DFRLab found evidence possibly linking accounts in the network to a similar previously exposed inauthentic network that also targeted US politicians but with messages critical of the United Nations Relief and Works Agency (UNRWA). The accounts, which used seemingly AI-generated avatars and shared duplicated text, were created around the same times and focused on boosting two hashtags attacking Qatar in replies to politicians and popular accounts.

In February 2024, the DFRLab, building on the work of other researchers, investigated a network of accounts that exhibited several signs of coordinated inauthentic behavior in amplifying, via replies to US politicians and popular accounts, accusations that some UNRWA staff were connected to terror groups. In another investigation in March 2024, the DFRLab identified an inauthentic campaign operating on X, Facebook, and Instagram that amplified Islamophobic content targeting Canadians. Meta and OpenAI attributed the network to the Tel Aviv-based marketing firm Stoic. Reporting by the New York Times, Ha’aretz, and FakeReporter found that Israel’s Ministry of Diaspora Affairs funded the campaign.

In this investigation, the DFRLab uncovered more than fifty undetected accounts that appear to have some connection to the previously exposed network. Throughout the course of our research, many of the accounts were suspended.

Connections to previous network

The DFRLab observed similarities between accounts in the newly identified network and the previously exposed network operated by Stoic. All accounts in the newly identified network stopped posting as of May 28, 2024, months after the DFRLab first analyzed the Stoic network, but only days after Meta and OpenAI confirmed the network’s inauthenticity. While some accounts halted their activities earlier in April, the majority of the dataset, forty-three accounts, were last active on May 28.

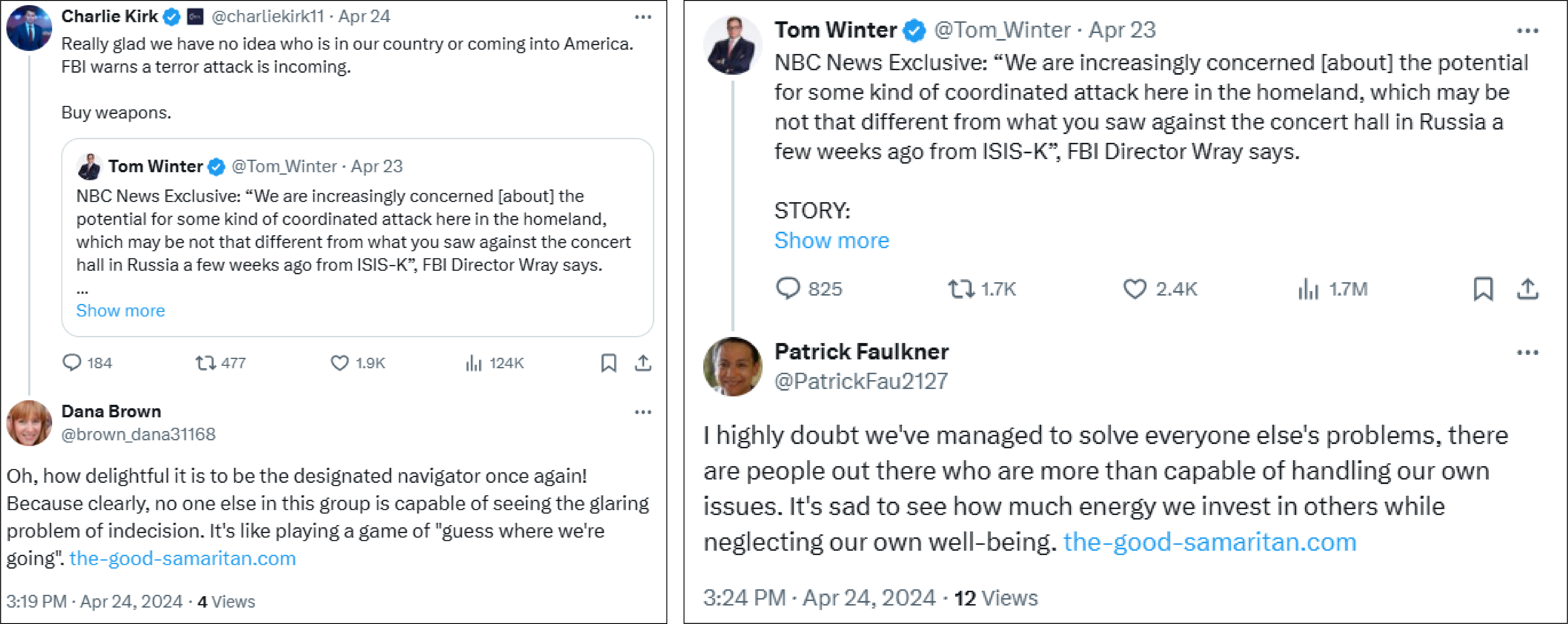

Other suspicious behavior that links these accounts to the previous network is the promotion of websites previously associated with Stoic. For example, at least twelve accounts, such as @brown_dana31168, @PatrickFau2127, and @MelissaSma70032, promoted the Good Samaritan website, which ranks safety on American university campuses based on hate-related incidents. According to Meta and OpenAI, the Good Samaritan website was created to support Stoic’s operation.

One account in the new network followed the X account of Moral Alliance, the account for another domain connected to Stoic. Moral Alliance’s X account is among the accounts that last posted on May 28. In addition, three accounts shared a link to the Non Agenda website, which was also created by Stoic.

Other accounts amplified the X account of The Human Fellowship, another website connected to Stoic that was first identified by FakeReporter. The Human Fellowship X account also stopped posting on May 28. The account, created in May 2024, had two followers and published only four posts during the research period. Yet its posts received significant engagement, primarily in the form of likes and replies from accounts in the network.

Anti-Qatar campaign

The identified accounts focused on student protests and encampments at American universities that called for schools to divest from military weapons manufacturers in response to the war in Gaza. The accounts, which claimed to be concerned citizens, used replies to convey their disapproval of the campus protests, raise concerns about antisemitism and the safety of students, and promote the two hashtags #QatarBuysAmerica and #QatarBuysUSA alongside messages that suggested Qatar was financially supporting the protests. In addition to the hashtags blaming Qatar, many accounts also used the phrase “#Qatar’s Dollars Transforming America” at the end of their replies.

Data from the social media listening tool Meltwater Explore shows that the campaign had limited impact. The hashtags #QatarBuysAmerica and #QatarBuysUSA were most active in April and May 2024. During this period, #QatarBuysAmerica received 289 mentions, while #QatarBuysUSA received a total of sixty-six mentions.

Graph shows the volume of mentions for #QatarBuysAmerica and #QatarBuysUSA from March to June 2024, the period during which these hashtags were most active. (Source: DFRLab via Meltwater Explore via Flourish)

Many accounts in the network used the same text in replies to different posts. For instance, three accounts replied to posts by popular accounts with the same text emphasizing that “Universities should be havens for learning, not battlegrounds for ideological warfare.” One of the accounts added a link to the Good Samaritan website at the end of their post.
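The kind of duplicated-text check described above can be sketched in a few lines of Python. This is a minimal illustration and not the DFRLab's actual tooling: the function names, the normalization rules, and the placeholder handles (`account_a` and so on) are all assumptions made for the example. It strips links, mentions, and hashtags before comparing, so that a reply with an appended link or hashtag still matches the others.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Drop URLs, @mentions, hashtags, and punctuation; lowercase; collapse spaces."""
    text = re.sub(r"https?://\S+", " ", text)
    text = re.sub(r"[@#]\w+", " ", text)
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def duplicated_replies(replies, min_accounts=2):
    """Group (handle, text) pairs by normalized text.

    Returns {normalized_text: sorted list of handles} for every text that
    was posted by at least `min_accounts` distinct handles.
    """
    by_text = defaultdict(set)
    for handle, text in replies:
        key = normalize(text)
        if key:  # skip replies that contained only links, mentions, or hashtags
            by_text[key].add(handle)
    return {t: sorted(h) for t, h in by_text.items() if len(h) >= min_accounts}


# Hypothetical sample data: the handles and the URL are placeholders;
# only the quoted sentence comes from the replies described in this article.
QUOTE = ("Universities should be havens for learning, "
         "not battlegrounds for ideological warfare.")
sample_replies = [
    ("account_a", QUOTE),
    ("account_b", QUOTE + " #QatarBuysAmerica"),
    ("account_c", QUOTE + " https://example.org"),
    ("account_d", "An unrelated reply about something else."),
]
groups = duplicated_replies(sample_replies)
```

Exact matching after normalization only catches verbatim reuse; lightly paraphrased replies would need a fuzzy comparison instead.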

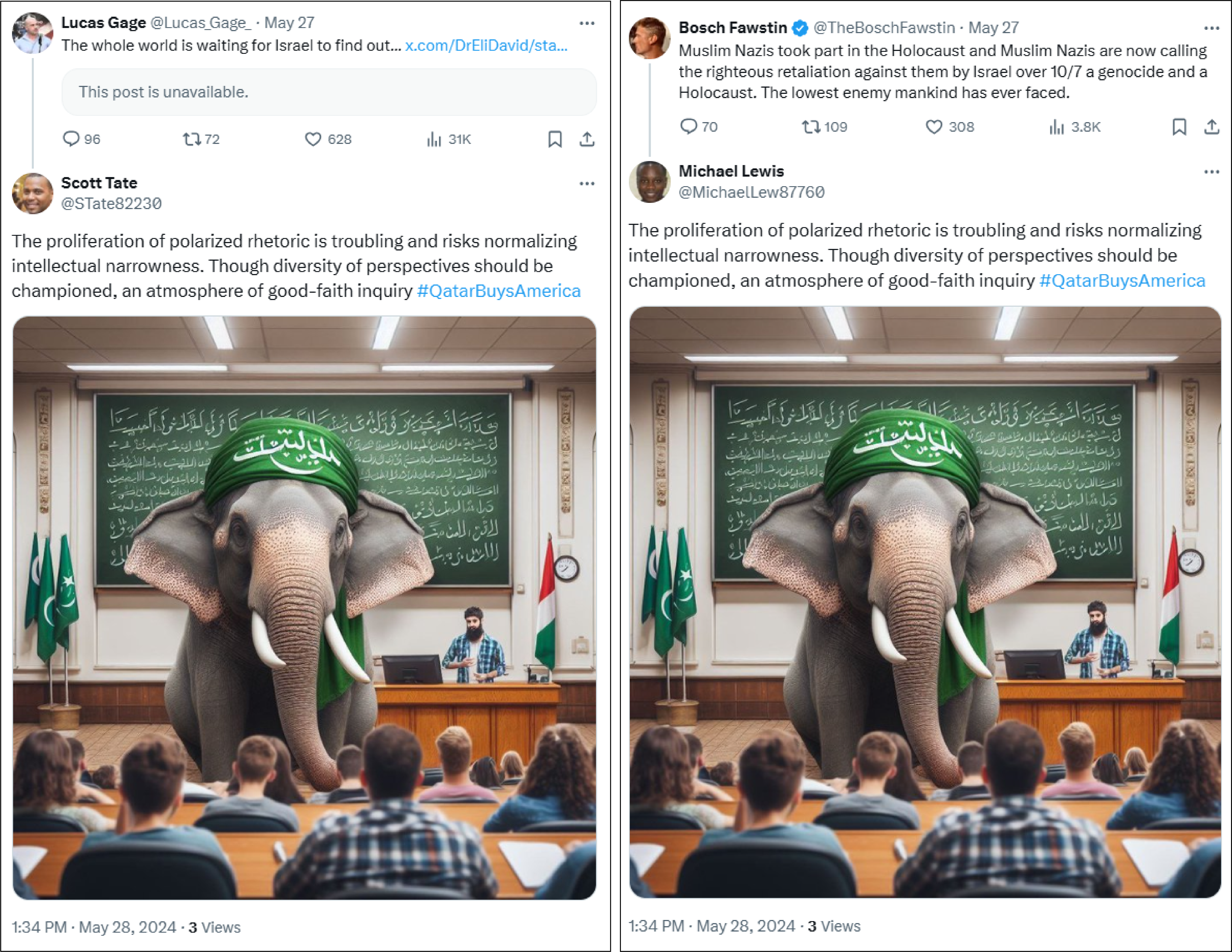

While accounts primarily relied on text and hashtags, some included visuals as well. The accounts @STate82230 and @MichaelLew87760 shared identical text, posted seconds apart, in replies to two different posts, ending the posts with the same hashtag. They also used the same visual featuring a big elephant in a classroom wearing a green headband with distorted Arabic text. The incomprehensible text showing random Arabic characters suggests that the image was generated by AI, as it is common for AI models to generate nonsensical text.

It is worth noting that one of the clusters of the network identified in OpenAI’s report was “focused on Qatar, portraying its investments in the US as a threat to American way of life.” The report cited some examples but did not explore the cluster in greater detail. Meanwhile, in July 2024, researchers Sohan Dsouza and Marc Owen Jones exposed a separate cross-platform social media influence operation that targeted Europeans and other audiences with “anti-Qatar propaganda and disinformation,” among other messages.

Targeting politicians

US politicians

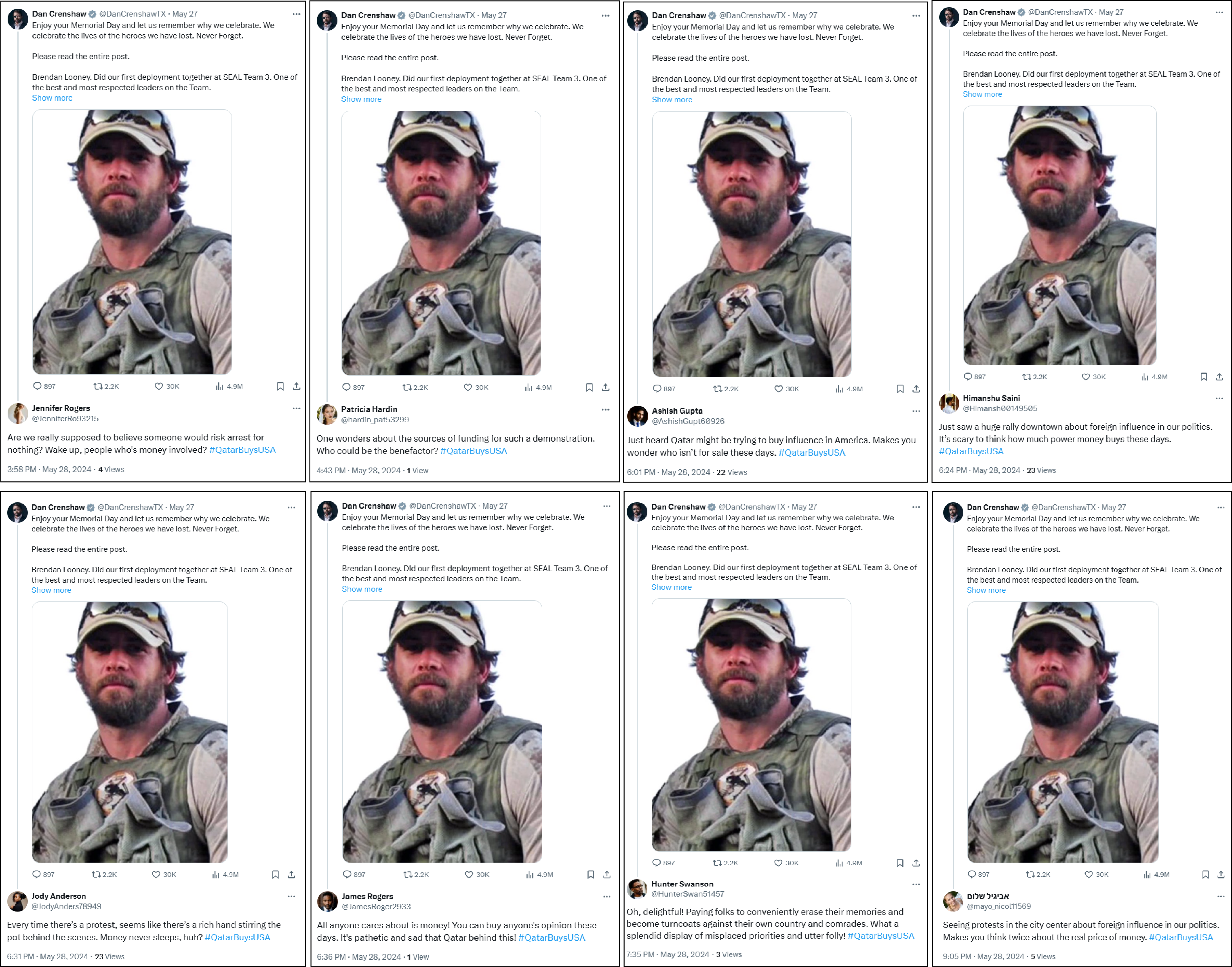

Accounts in the network targeted popular accounts on X in addition to the accounts of several US politicians, including President Joe Biden, Ted Cruz, Tom Cotton, David Kustoff, Lindsey Graham, Mike Lawler, Mike Johnson, and Dan Crenshaw. The network also targeted Member of the European Parliament Assita Kanko.

The accounts attempted to impersonate concerned citizens claiming to have found evidence of foreign funding. For example, in reply to a post by Senator Lindsey Graham, @KmberlySe16396 said, “Seeing protests in the city center about foreign influence in our politics. Makes you think twice about the real price of money.”

The account @roth_court73616 replied to several posts by different politicians, including one by the US president, with the same text saying, “Qatar’s quest for dominance in America: Are we just pawns in their game?” The account ended the post with another hashtag, #QatarPowerplay, and a link to the Stoic-associated website Non Agenda.

The accounts also directed narratives about student safety on campuses to politicians. In one case, at least four accounts replied to a video posted by Congressperson Elise Stefanik in which she questioned Minouche Shafik, former president of Columbia University, about antisemitic professors. The replies from the network highlighted the importance of ensuring campus safety.

Canadian politicians

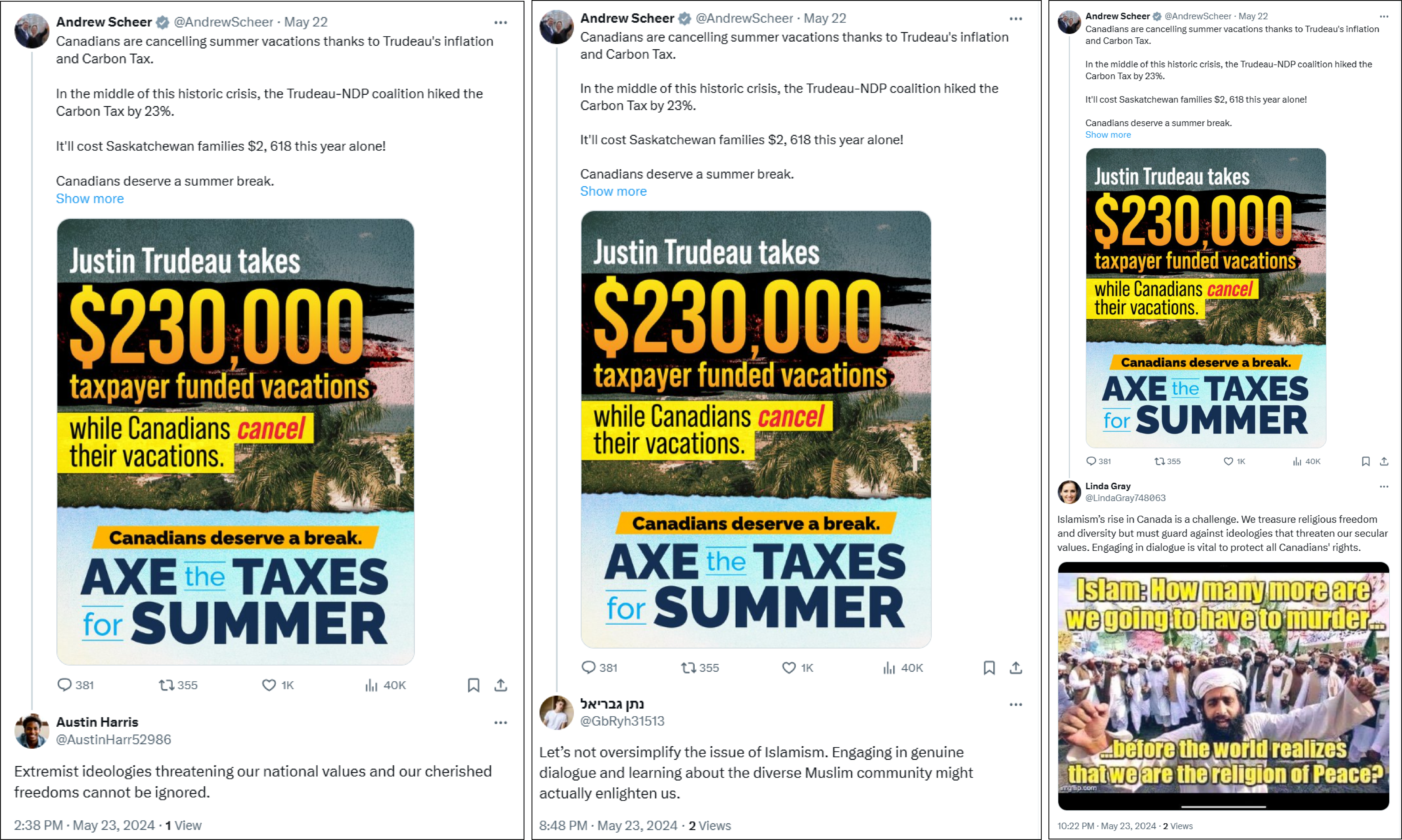

Some accounts also targeted politicians in Canada but with messages related to Islamism, extremism, and other vague messages. Among the politicians targeted were New Democratic Party (NDP) leader Jagmeet Singh and Conservative Party leader Pierre Poilievre, both candidates for Prime Minister in Canada’s 2025 federal election. Other targeted politicians include Minister of Housing Sean Fraser, Minister of Canadian Heritage Pascale St-Onge, and Members of Parliament Irek Kusmierczyk, Andrew Scheer, Kevin Vuong, Heather McPherson, Pablo Rodriguez, Omar Alghabra, and Anthony Housefather, among others.

Some of this targeting resembles that of the Stoic network, which launched an inauthentic campaign targeting Canadians with Islamophobic content via a falsified Canadian non-profit organization. In the new campaign, accounts targeted politicians directly by replying to their posts.

At least two accounts targeted Poilievre, the leader of the Conservative Party of Canada, on May 27, 2024, with two similar replies emphasizing the importance of shared “hopes” that bridge differences. Both accounts also included a post from the anti-Liberal Party group Canada Proud in the replies, reporting on an increase in the number of refugees from Gaza permitted to resettle in Canada. The DFRLab also observed two accounts targeting the leader of the New Democratic Party, Jagmeet Singh, on different days in April 2024, with unclear replies discussing taxes and child protection. Notably, the accounts tagged a non-existent account; this specific account was also tagged in replies by other accounts in the network.

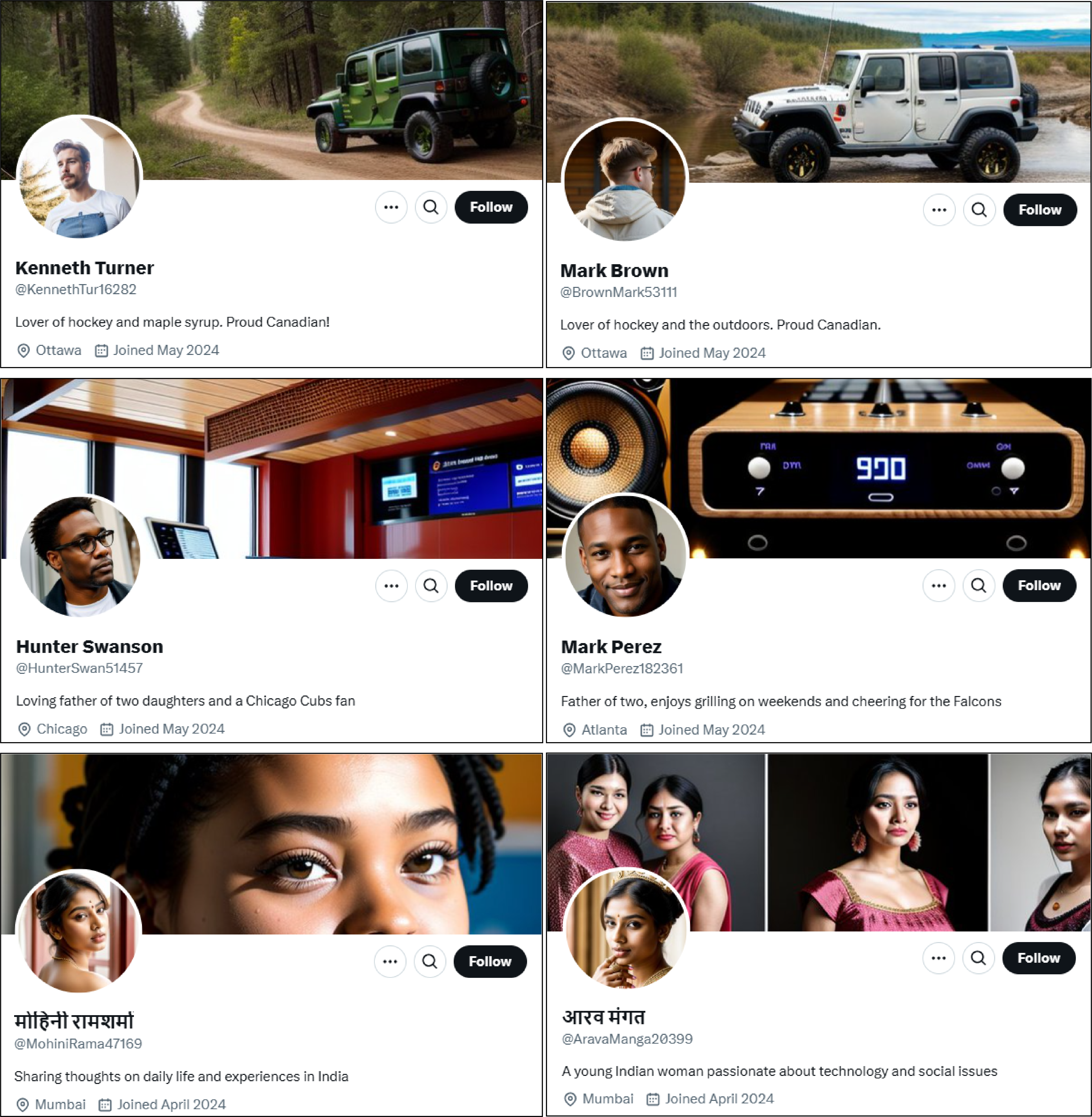

In other examples, the accounts @BrownMark53111 and @KennethTur16282 replied to posts by Member of Parliament Anthony Housefather and a post by the account of the Liberal Party of Canada. In reply to the post by Housefather, in which he asks the presidents of four Canadian universities about antisemitic speech on campus, @BrownMark53111 said, “It’s insane how rapidly extremist Islamists are growing in urban areas. We must ensure this doesn’t threaten the democratic principles we hold dear.” In reply to the post by the Liberal Party account, @KennethTur16282 said, “It’s alarming to see the rise in radicalism in our cities. We value our freedoms, but we must not let them compromise our democratic values.”

Three accounts also replied to a post by Canadian Member of Parliament Andrew Scheer with messages related to extremism and Islamism, with one account saying, “Islamism’s rise in Canada is a challenge. We treasure religious freedom and diversity but must guard against ideologies that threaten our secular values.”

Other politicians were targeted with vague messages. For example, one account warned of embracing “conservatism and oppression” in a reply to Member of Parliament Omar Alghabra, while another account replied to a post by Member of Parliament Heather McPherson, saying, “Let us descend into the abyss of irony! A nation built on freedom now flirts with conservatism and oppression. What profound lessons await us in this journey through the labyrinth of human folly?”

Indian politicians

While the accounts primarily focused on US and Canadian politicians, five accounts with Indian names also targeted Indian politicians with English-language messages in a manner resembling Stoic’s activities targeting politicians from the country. For example, two accounts shared different messages, seconds apart, critical of the ruling Bharatiya Janata Party (BJP) to BJP MP Tejasvi Surya on May 12, 2024. Others replied to the account of opposition leader Rahul Gandhi, sharing support for the politician and critiques of Prime Minister Narendra Modi.

Fictitious personas

The accounts used different profile details to create diverse personas impersonating male and female accounts of different ethnic backgrounds, including White, Black, and Indian. Most of the accounts claimed to be US residents, but others claimed to be from Canadian, Israeli, or Indian cities by listing these locations in their profiles. In one example, fourteen accounts that listed Atlanta, Georgia, as their city used avatars showing Black individuals.

The network exhibited multiple suspicious indicators, such as accounts being created within a specific timeframe in March, April, or May 2024, with many created in apparent batches on the same day. In addition, almost all accounts used alphanumeric handles featuring a combination of letters and numbers automatically generated by the platform.
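Both indicators lend themselves to simple checks. The sketch below is illustrative only: the regular expression is a heuristic inferred from the handles named in this article (letters or underscores followed by four or more digits), not a documented specification of how X generates default handles, and the creation dates are hypothetical placeholders, since the article does not publish per-account dates.

```python
import re
from collections import Counter
from datetime import date

# Heuristic assumption: a default-style handle is letters/underscores
# followed by a run of at least four digits, e.g. "brown_dana31168".
HANDLE_PATTERN = re.compile(r"^[A-Za-z_]+\d{4,}$")


def looks_auto_generated(handle: str) -> bool:
    """Return True if the handle fits the name-plus-digits heuristic."""
    return bool(HANDLE_PATTERN.match(handle.lstrip("@")))


def creation_batches(accounts, min_batch=3):
    """Count accounts per creation day and keep days with >= min_batch accounts.

    `accounts` is an iterable of (handle, created_on) pairs, where
    created_on is a datetime.date.
    """
    counts = Counter(created_on for _, created_on in accounts)
    return {day: n for day, n in counts.items() if n >= min_batch}


# Handles are taken from this article; the dates are invented for illustration.
sample_accounts = [
    ("@brown_dana31168", date(2024, 4, 2)),
    ("@PatrickFau2127", date(2024, 4, 2)),
    ("@MelissaSma70032", date(2024, 4, 2)),
    ("@MarkPerez182361", date(2024, 5, 1)),
]
flagged = [h for h, _ in sample_accounts if looks_auto_generated(h)]
batches = creation_batches(sample_accounts)
```

Neither signal is conclusive on its own, since many genuine users keep a default handle; the indicators are meaningful mainly in combination with the others described here.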

The accounts also used their bios to add details about the personas to their profiles. For example, @BrownMark53111 and @KennethTur16282, which both claimed to reside in Ottawa, had similar bios, with one account describing itself as a “Lover of hockey and the outdoors. Proud Canadian,” while the other’s bio reads, “Lover of hockey and maple syrup. Proud Canadian!”

In another example, the accounts @HunterSwan51457 and @MarkPerez182361 both presented themselves as fathers of two and fans of sports teams in their respective cities. One account said that he is “a Chicago Cubs fan,” while the other account said that he is “cheering for the Falcons” in reference to the Atlanta Falcons.

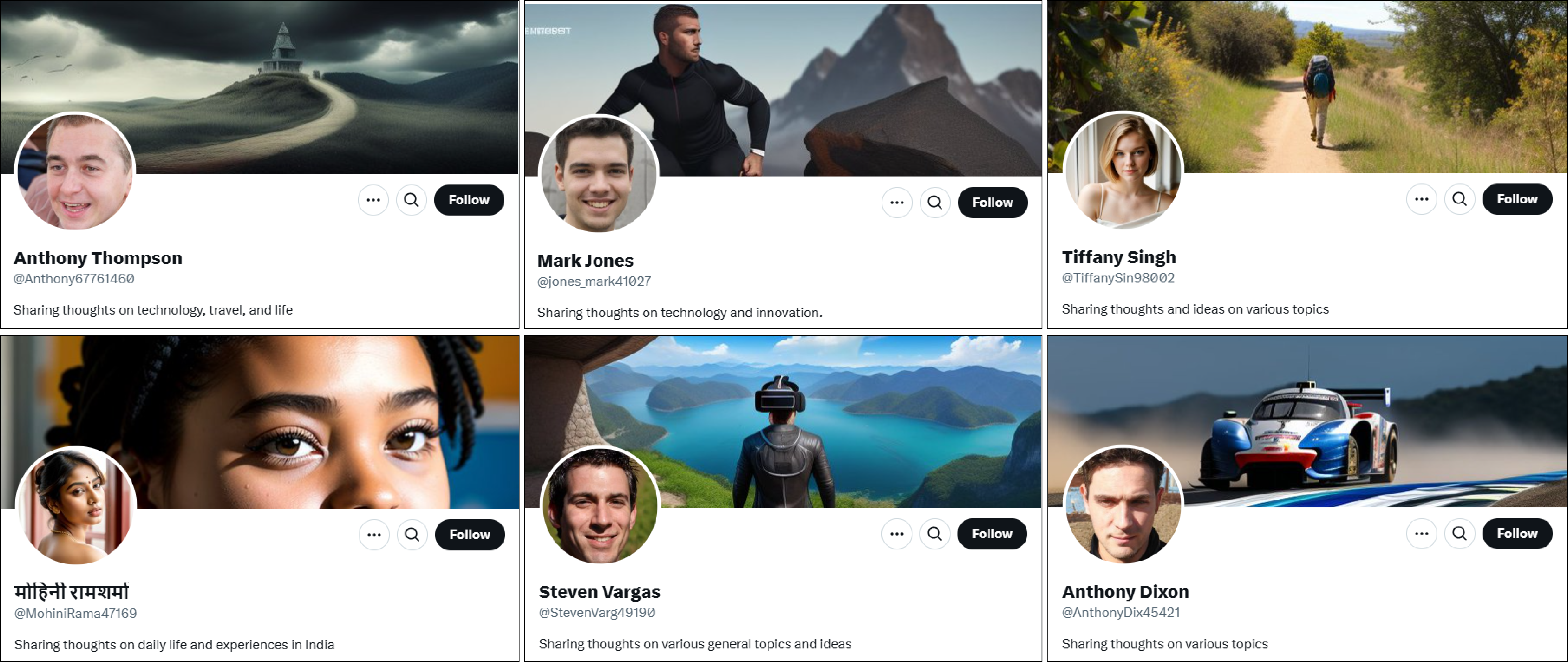

Most of the account bios were similar and generic. For example, seven accounts started their bios by claiming to be “Tech enthusiasts,” and four started their bios by claiming to be “Passionate about technology and innovation, always looking for….”
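Near-identical bios like these can be surfaced with a pairwise string-similarity pass. The following is a rough sketch using Python's standard-library `difflib`; the 0.7 threshold and the third bio are assumptions chosen for the example, not values used in this investigation.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similar_bios(bios, threshold=0.7):
    """Return (handle_a, handle_b, ratio) for every pair of bios whose
    SequenceMatcher ratio is at or above `threshold`.

    `bios` maps handle -> bio text. The comparison is case-insensitive.
    """
    pairs = []
    for (h1, b1), (h2, b2) in combinations(sorted(bios.items()), 2):
        ratio = SequenceMatcher(None, b1.lower(), b2.lower()).ratio()
        if ratio >= threshold:
            pairs.append((h1, h2, ratio))
    return pairs


# The first two bios are quoted in this article; which bio belongs to which
# account is assigned arbitrarily here. The third entry is hypothetical.
sample_bios = {
    "@BrownMark53111": "Lover of hockey and the outdoors. Proud Canadian",
    "@KennethTur16282": "Lover of hockey and maple syrup. Proud Canadian!",
    "@hypothetical_user": "Coffee addict. Writer.",
}
matches = similar_bios(sample_bios)
```

Pairwise comparison grows quadratically with the number of accounts, which is acceptable for a dataset of a few dozen profiles but would need a different approach at scale.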

AI-generated avatars

Almost all accounts in the dataset relied on seemingly AI-generated pictures for avatars, and sometimes for header photos. In avatars, accounts appeared to use a generative adversarial network (GAN) to create realistic human faces. However, such images often contain minor errors detectable by a trained human eye. For instance, the avatars of @brown_dana31168, @JeffreySum90231, @ChristianH2688, and @GregoryTho48702 all had notable errors, such as inconsistencies with hair, errors in accessories, and unnatural blending or odd textures and items near the face.

Unlike the accounts in Stoic’s network, many accounts in this network appeared to use another unidentified AI generator to create other styles of hyper-realistic images for their avatars. These images exhibited similar artificial characteristics, such as overly smooth skin texture, perfectly balanced lighting, and similar poses and compositions. Two accounts featured images of men in business attire, framed from the back, with one of them wearing a tie backwards. Another account in the network used a picture of a man wearing overalls without shoulder straps, a type of error common in AI-generated images.

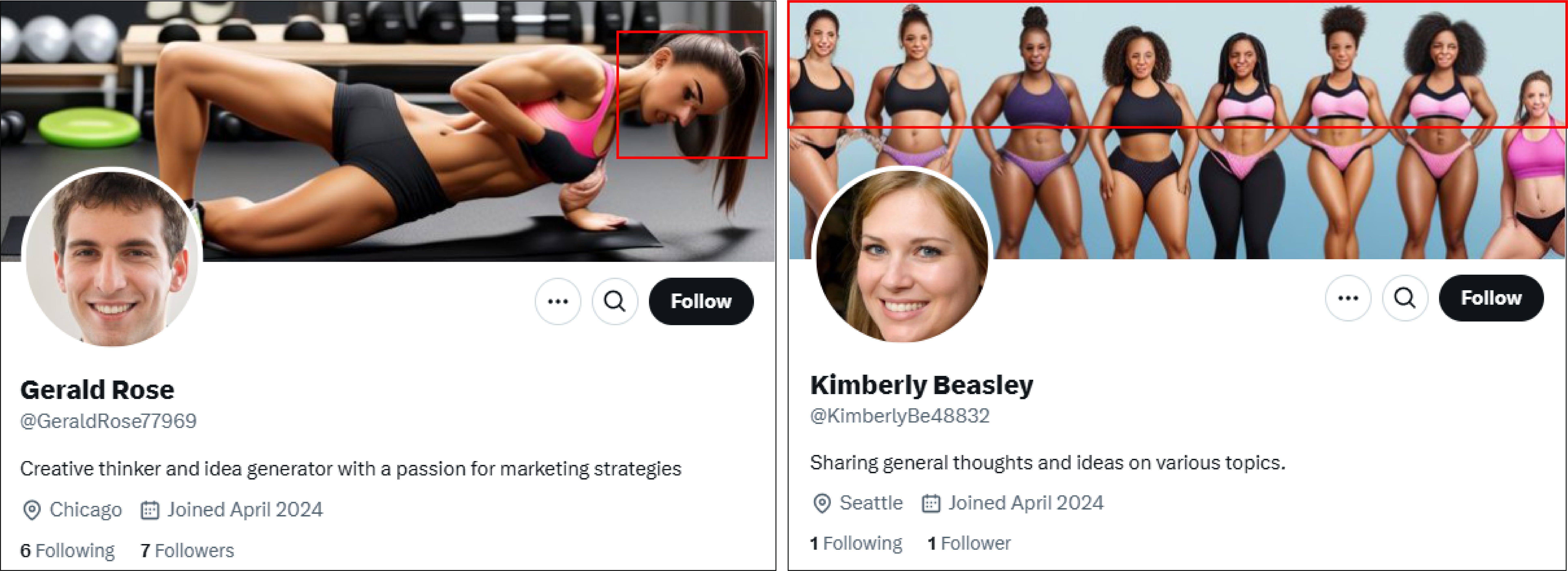

In addition to avatars, some accounts used likely AI-generated images as header photos. Accounts such as @GeraldRose77969 and @KimberlyBe48832 both used photos showing women in workout clothes. Yet, these photos had similar distortions and malformations in the facial features, which resulted in faces that appeared as though they were melting.

It is difficult to prove with certainty that these accounts are part of the previously exposed network linked to the Israeli government, especially as most accounts from the previous network have now been suspended. However, this investigation details similarities in tactics and the promotion of specific inauthentic websites, raising the possibility that the networks are connected.

Editor’s note: Ali Chenrose is a pen name used by a DFRLab contributor for safety reasons.

Cite this case study:

Ali Chenrose, “Inauthentic X accounts targeted American and Canadian politicians amid student protests,” Digital Forensic Research Lab (DFRLab), December 18, 2024, https://dfrlab.org/2024/12/18/inauthentic-x-accounts-targeted-american-and-canadian-politicians-amid-student-protests/.