AI tools usage for disinformation in the war in Ukraine

How and what technology Russia used to spread disinformation after the full-scale invasion of Ukraine

BANNER: A woman speaks on the phone as civilians flee Odesa on March 9, 2022. (Source: REUTERS/Alexandros Avramidis)

Since initiating its full-scale invasion of Ukraine in February 2022, Russia has continued its efforts across multiple domains to undermine Ukraine, including in the information space. Russia’s arsenal of tactics and techniques remains diverse, ranging from doctored videos to false-flag operations and fake fact-checking services.

Over the same period, AI-powered tools have proliferated enormously. Generative AI (GAI) in particular caught the world’s attention: GAI platforms lowered the barrier to entry, making it possible to create sophisticated fake or manipulated content at scale, whether for disinformation or propaganda.

This article presents an overview of AI use in Russian disinformation and influence operations over the course of the war against Ukraine. The overview is by no means exhaustive, but we believe it is representative of the most notable tactics and techniques Russia has used.

AI-generated and assisted videos

Deepfakes entered the scene almost immediately after the full-scale invasion, allowing Russia to use AI to manipulate pre-existing video. On March 16, 2022, pro-Russian actors hacked a Ukrainian media website and uploaded a poorly made deepfake of Ukrainian President Volodymyr Zelenskyy. In the video, a fake Zelenskyy claimed that he had resigned and fled Kyiv, and called on the Armed Forces of Ukraine to surrender and save their lives. The video was heavily amplified on Telegram, and the narrative also spread over the airwaves after a Russian hack altered the news chyron of television channel Ukraine 24 to repeat the same message. Because the video’s exceptionally poor quality made it obvious that it was a deepfake, pro-Kremlin Telegram sources began referring to it as fake while simultaneously bragging that it had still succeeded in causing some Ukrainian troops to surrender.

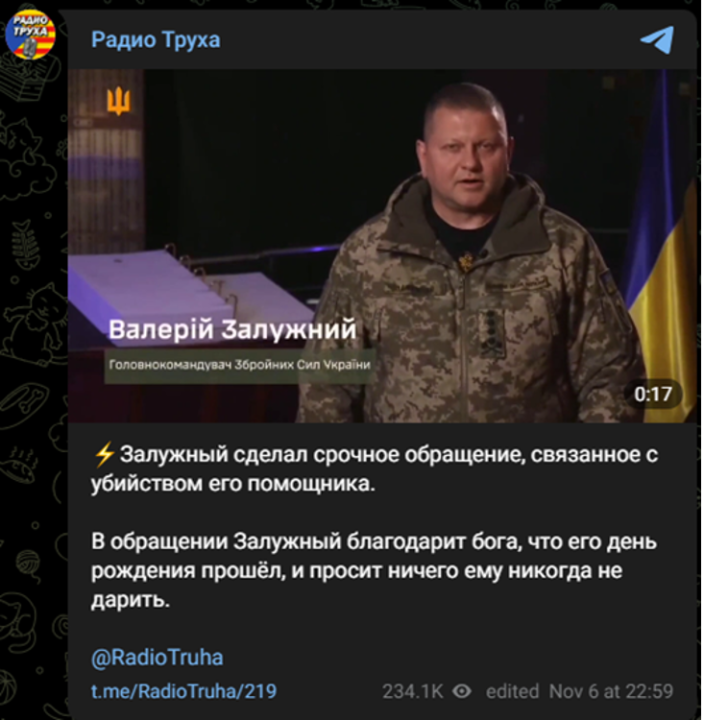

In November 2023, pro-Kremlin sources created three videos in which General Valerii Zaluzhnyi, then Commander-in-Chief of the Armed Forces of Ukraine, supposedly blamed Zelenskyy for killing his aide and warned that he himself might die prematurely. Although the falsified videos had visible tells, such as awkward gestures and facial expressions, they nonetheless demonstrated how much deepfakes had evolved since the Zelenskyy fake at the beginning of the war.

A more targeted case of AI-enabled deception took the form of a leaked Zoom call between members of the International Legion in Ukraine and an inauthentic version of former Ukrainian President Petro Poroshenko. During the call, hackers used doctored footage of Poroshenko to deceive the legion members into agreeing with inflammatory comments about Zelenskyy. The Kyiv Independent determined that Russian disinformation actors were behind the campaign and noted that a purported “poor connection” on the call made it possible to explain away the artifacts in the Poroshenko footage. The operation demonstrated how effectively AI-assisted technology can be used to target a small group of people with a tailored campaign.

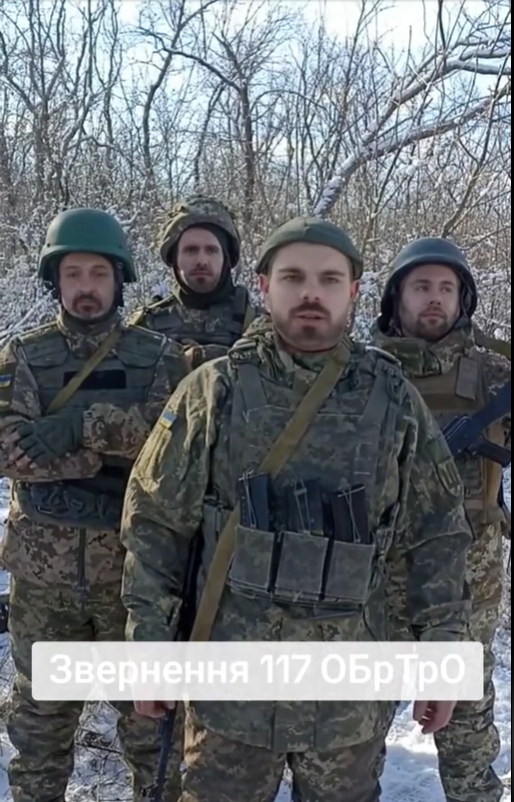

In a similar case, a video purported to show members of the 117th Separate Territorial Defense Brigade appealing to General Zaluzhnyi to oust the current government. The brigade subsequently denied the video’s authenticity and published a statement declaring that the people portrayed in it had never been part of the brigade. Ukraine’s Center for Countering Disinformation suggested that the video had employed deepfake technology.

While the AI-manipulated videos described above demonstrate the technology’s progress and its potential to spread disinformation, they have yet to fool a broad audience, which likely explains why more recent examples have narrowly targeted specific audiences. Meanwhile, it remains easy to repurpose real footage and present it in a false context, or even to hire actors to stage a scenario and present it as fact. In one notable instance, a video clip purported to show Ukrainian soldiers firing at a woman and child. OSINT researchers geolocated the footage and determined that it had actually been filmed in Russian-occupied territory, with actors wearing Ukrainian uniforms.

AI-generated audio

In the first year of Russia’s invasion, synthetic audio was relatively uncommon in Russian information operations targeting Ukraine, as malign actors relied on other media formats for explicit disinformation. This began to change in the second year of the war, which produced several notable examples.

One instance involved manipulated audio of Texas Gov. Greg Abbott, taken from a Fox News interview on US immigration policy. Russian sources published an altered version of the interview on Telegram, inserting a fake voiceover of the governor at moments when the footage cut away from him to other shots. In the fake audio, Abbott appeared to say that US President Joe Biden should learn from Russian President Vladimir Putin “how to work for national interests.” In the original interview, the governor did not mention Putin or Biden, as spokespersons for Fox News and the governor’s office confirmed.

Another important audio tactic is lip-syncing, in which real video is manipulated so that the speaker’s facial movements match new, fabricated speech. One such case followed the Crocus City Hall terrorist attack in Moscow on March 22, 2024. Seeking to prove “Ukrainian involvement” in the attack, pro-Kremlin sources produced multiple false stories and pieces of fabricated evidence. One featured a supposed interview with Oleksii Danilov, the former secretary of the National Security and Defense Council of Ukraine, in which he allegedly declared during Ukraine’s national telethon that Moscow was “having a lot of fun” in reference to the attack. Russian channel NTV, which is owned by state-controlled energy company Gazprom, broadcast the supposed Danilov interview. Danilov, however, had not appeared on Ukrainian TV during the telethon. The video was stitched together from two different Danilov interviews and overlaid with a fake voice; BBC Verify’s Shayan Sardarizadeh confirmed that the voice had been AI-generated.

In another instance, a video appeared to show a person under arrest confessing to having been told by Ukrainian intelligence to assassinate US far-right media personality Tucker Carlson during his visit to Moscow in February 2024. Later reporting by Polygraph found that the voice heard in the video bore indicators of “digital manipulation.”

Synthetic audio is also used to narrate videos, obscuring the producer’s accent or nationality. Notably, this tactic appeared in what was later determined to be the largest influence operation uncovered on TikTok, in which the DFRLab and BBC Verify jointly investigated thousands of propaganda videos in seven languages accusing Ukrainian leadership of corruption. AI narration with neutral accents helped the operation’s Russian producers avoid attribution.

AI-generated text

AI-generated text remains a quandary for the open-source intelligence and counter-disinformation community, as publicly available tools cannot reliably detect it, especially in short texts such as social media comments. In some instances, however, AI-generated text can be identified through other means.

One of the most evident use cases is translation. Early in the invasion, written Russian disinformation targeting Ukraine was routinely ridiculed for its grammatical errors, but its quality later improved significantly. For instance, the Doppelganger campaign pushed negative stories about Ukraine with translations that, while imperfect, were believable and better than prior efforts. According to Recorded Future, generative AI has been used to produce English-language articles for Doppelganger-associated websites such as Electionwatch.info. The full extent of AI-generated text use remains uncertain, however, as better tools are still needed to detect it.

Implications

AI technology has lowered the entry barriers for malign actors, with the potential to make fake content production faster, cheaper, and more sophisticated. At the moment, however, AI technology cannot outperform more traditional tactics and techniques, such as amplifying existing media footage out of context or editing it in ways that deceive target audiences.

Generative AI will likely appear more often in Russian information operations as additional generative models become available. For now, it is an imperfect but potentially powerful tool in Russia’s broader informational arsenal. Whether it ultimately dominates that toolkit remains to be seen.

This article covers the period until April 24, 2024, and does not account for later developments.

Cite this case study:

Roman Osadchuk, “AI tools usage for disinformation in the war in Ukraine,” Digital Forensic Research Lab (DFRLab), July 9, 2024, https://dfrlab.org/2024/07/09/ai-tools-usage-for-disinformation-in-the-war-in-ukraine.