An existential threat: Disinformation ‘single biggest risk’ to Canadian democracy

The DFRLab reviews Canada’s 2025 Public Inquiry into Foreign Interference

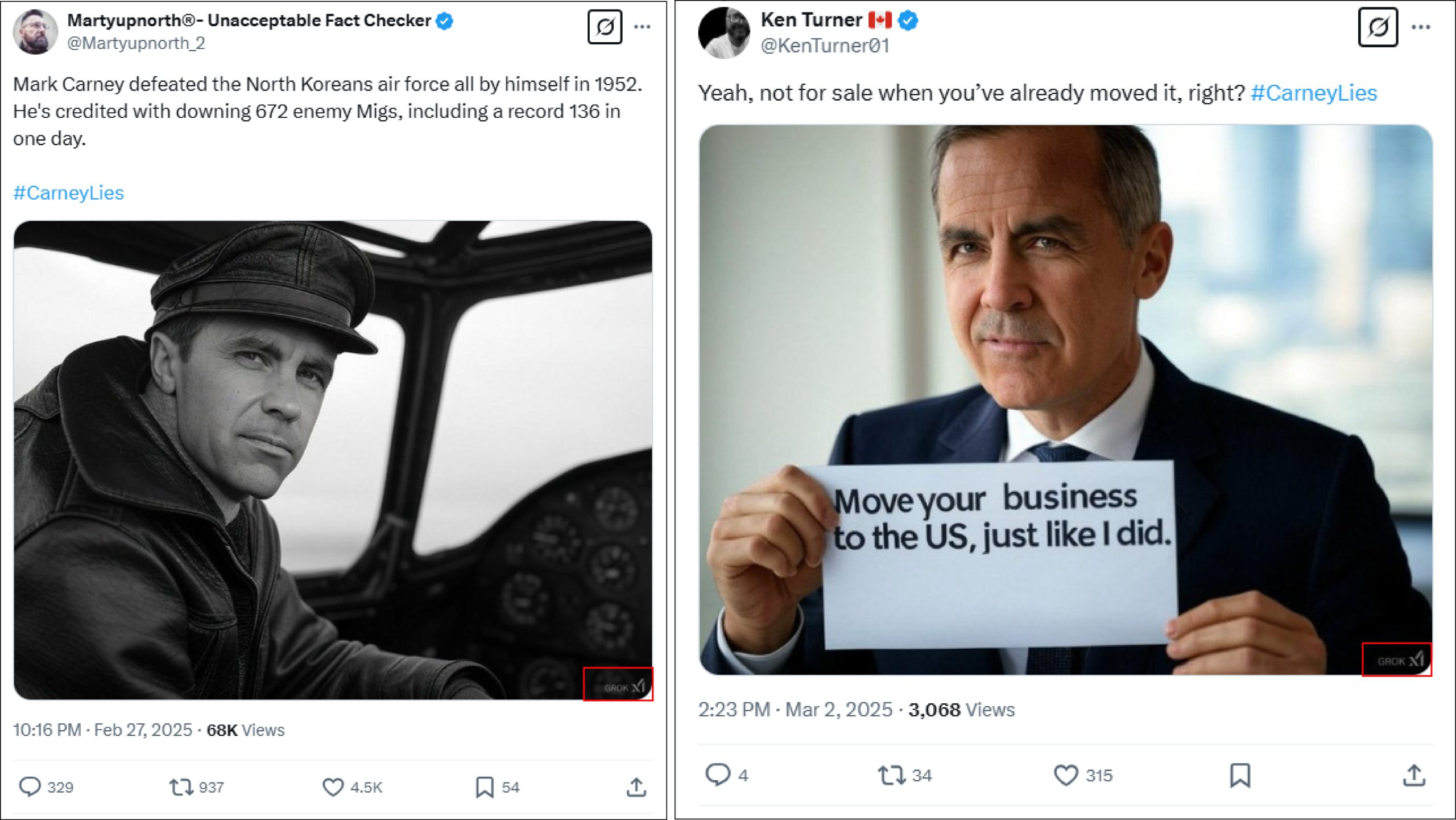

Banner: AI-generated image of Canadian PM Mark Carney used in the trending hashtag #CarneyLies, produced with X’s Grok tool. Grok watermark is highlighted in red. (Source: @KEnTurner01/archive)

A public inquiry examining the impact of foreign interference in Canada found that disinformation poses the “single biggest risk” to Canadian democracy. In January 2025, Canada’s Public Inquiry into Foreign Interference in Federal Electoral Processes and Democratic Institutions released its final report, examining the effect of foreign interference on the 2019 and 2021 federal elections. The Foreign Interference Commission’s findings arrive as the country prepares for a federal election in 2025.

Many of the report’s findings about threat actors, like China and Russia, align with the trends observed in the DFRLab’s research on how these actors have deployed influence operations targeting other electoral cycles around the globe. In this piece, we examine the report’s main findings regarding the threat of misinformation and disinformation, review the main case studies of the report, and discuss emerging concerns, including the growing threat posed by artificial intelligence in the disinformation landscape. We also reflect on the report’s potential to inform future investigations of foreign interference.

Although Canada’s democratic institutions remain robust at the national level, the localized impacts of disinformation are deeply concerning. The report indicates that foreign interference had only a small impact on past federal election results, though it may have affected outcomes in a small number of ridings. While disinformation has not been enough to alter national results, its cumulative effect is a gradual erosion of public confidence in Canada’s democratic system; even if the measurable impact is minimal, it should be taken seriously. As Justice Marie-Josée Hogue, commissioner of the public inquiry, warned, “Information manipulation (whether foreign or not) poses the single biggest risk to our democracy. It is an existential threat.”

While foreign interference is not a new phenomenon, its methods have transformed in recent years. The inquiry emphasized that contemporary disinformation campaigns use social media to disseminate misleading narratives at a rapid pace. As Hogue explained, “While allegations of interference involving elected officials have dominated public and media discourse, the reality is that misinformation and disinformation pose an even greater threat to democracy.” Moreover, the report finds that these campaigns are neither short-lived nor aimed solely at elections; they are designed to create persistent uncertainty and distrust in democratic processes.

The inquiry identified significant systemic shortcomings that hindered an effective response to disinformation. These vulnerabilities included delays in inter-agency communication, unclear thresholds for intervention during elections, and inadequate public transparency. These deficiencies underscore the urgent need for enhanced detection systems, refined attribution methods, and more proactive public communication strategies.

Targeted campaigns in recent elections

Some of the report’s key findings center on disinformation in the 2021 federal election. Specific instances include:

Campaigns against political figures

False narratives and misleading reports circulated by Chinese-language media outlets and WeChat accounts targeted former Conservative Party leader Erin O’Toole and Conservative Party candidate Kenny Chiu. These reports misrepresented the candidates’ policy positions and sought to discredit them, focusing on Chinese diaspora communities in Canada. O’Toole believed that WeChat was used to target him and his party in a foreign interference effort orchestrated by the Chinese government, and that this effort cost his party seats. When interviewed as part of the public inquiry, O’Toole said his party had tracked narratives intended to undermine its support among Chinese Canadians, including claims that he intended to ban WeChat if his party was elected and that he was the Canadian version of Donald Trump. The commissioner’s report highlighted the circulation of such narratives in Chinese provincial and state media outlets and by popular WeChat accounts.

Chiu, a former MP, also believed that he lost his 2021 race because of Chinese foreign interference. The DFRLab first investigated the campaign against Chiu in November 2021. Chiu had previously introduced a bill calling for a foreign influence registry. He described how WeChat was used to spread narratives against him, and the commissioner’s report discussed the role of Canada’s Chinese-language media ecosystem, which published articles targeting Chiu. In 2024, investigators for the Commissioner of Canada Elections concluded that the Chinese government did try to persuade Chinese Canadians to vote against Chiu, with narratives circulating on WeChat claiming that the former MP and his party held an “anti-China” stance, although these efforts did not break elections law.

The Trudeau–Nijjar incident

The report also notes that a disinformation campaign followed then-Prime Minister Justin Trudeau’s announcement that Canada suspected India was involved in the killing of Sikh activist Hardeep Singh Nijjar in British Columbia. Relations between the two countries deteriorated following the accusation and after Canada accused Indian agents of engaging in other criminal activities targeting Canadians, leading both countries to expel each other’s diplomats. Shortly after Nijjar’s assassination and the start of the diplomatic row, warnings emerged from the US State Department that the issue might be exploited by malign actors. While the report makes clear that no connection between the disinformation campaign and the Indian state could be proven, the case illustrates how political actions can provoke attacks in the information space and how disinformation can be “used as a retaliatory tactic, to punish decisions that run contrary to a state’s interests.” Rapid Response Mechanism Canada reported observing narratives in pro-government Indian outlets and from influencers on social media that portrayed Canada, Trudeau, Canadian politicians, and Canadian security agencies negatively. Some of these Indian outlets had far larger followings than Canadian media outlets, giving them the ability to reach millions of people in India and across the diaspora.

Also of note, in May 2024, Meta detected another inauthentic network, originating in China and targeting Canada, that used Facebook and Instagram to promote content in English and Hindi about Nijjar’s assassination and Khalistan independence.

Anticipating the next online foreign interference wave

As the report notes, disinformation campaigns are not limited to elections; they can interfere with other parts of the democratic process. India, Russia, China, and, to a lesser extent, Iran have the capacity to amplify specific narratives, especially given the large diaspora communities from all four countries living in Canada.

China

The report states that “China is the most active perpetrator of state-based foreign interference targeting Canada’s democratic institutions” and that it is “party agnostic,” prioritizing China’s interests over support for any particular Canadian party. As recently as February 2025, Canada’s Security and Intelligence Threats to Elections Task Force (SITE TF) revealed that a campaign targeting Liberal leadership candidate Chrystia Freeland on WeChat was linked to China. Monitoring Chinese media outlets and messaging apps such as WeChat can offer insights into disinformation narratives and messages targeting the Chinese diaspora in Canada. These media outlets also host offline political events that introduce candidates to Chinese Canadian voters, a privilege that could, in theory, be withheld based on a candidate’s views on China. One example cited in the report involves New Democratic Party (NDP) MP Jenny Kwan, who believed she was excluded from key events hosted by Chinese Canadian community organizations after she took positions critical of China, despite having previously received invitations to such events.

Additionally, the report notes that the Chinese information operation Spamouflage targeted various MPs in 2023. Spamouflage is a large-scale operation that uses a network of fake social media accounts to spread spam-like content and propaganda across platforms, portraying China positively and denigrating its democratic competitors. The campaign is still active and could be used to influence Canadian elections. For example, the DFRLab found that Spamouflage assets on X, which primarily target the United States, also posted narratives about Canada in 2025. And in March, Canada’s Rapid Response Mechanism (RRM) attributed “with high confidence” to China a new Spamouflage campaign targeting Canada-based individuals and Canadian politicians.

India

The report also found that India is “the second most active country” targeting Canada with electoral foreign interference. As documented by the CBC in December 2024, online attacks against Indian Canadian communities and Canadian politicians and institutions were rife following Nijjar’s killing and the resulting diplomatic dispute. When violent clashes broke out offline between pro-Khalistan and pro-Narendra Modi groups in Canada, suspicious social media accounts and pro-government Indian media outlets spread false information about the clashes, highlighting the parallel battle that emerged online. Following Nijjar’s assassination, members and activists of the Sikh community also described being targeted by online threats and doxxing, alongside offline threats.

Notably, following the release of the commission’s report, many articles published by English-language Indian media outlets used a quote from the report in a way that appeared intended to mislead readers. Discussing the disinformation campaign that targeted Trudeau after he announced suspected Indian involvement in Nijjar’s killing, the report said that “no definitive link to a foreign state could be proven.” However, media outlets misrepresented the statement, claiming that it referred to Trudeau’s accusations regarding Nijjar’s murder rather than to the disinformation campaign. This follows an earlier pattern: previous articles published by Indian outlets falsely claimed that the case had collapsed because the individuals accused of murdering Nijjar had been released from Canadian custody.

Russia

Russia was cited in the report as a prime example of a country that pushes disinformation campaigns to stir up division. Although it found no evidence of Russian interference in the 2021 election, the report noted that Russia is engaged in disinformation efforts related to its war against Ukraine and highlighted that “Canada’s strong support of Ukraine could affect whether Russia tries to influence the next election.”

As the DFRLab has extensively documented, Russian influence operations, such as Doppelganger and Operation Undercut, target several countries in different languages and across different platforms to spread distrust of those countries’ leaders and sow doubt about efforts to support Ukraine during the war. Such manipulation also includes spreading false narratives about elections. During the 2024 US election, for example, the DFRLab examined how Russia-linked media used Russian-language and English-language outlets to amplify false voter fraud claims and undermine the electoral process.

In September 2024, officials in the United States and Europe revealed that Canada was a target of Russia’s Operation Doppelganger, with fake articles attacking Trudeau and the Liberal Party. Furthermore, the US Department of Justice issued an indictment linking the Canadian-owned company Tenet Media to pro-Kremlin disinformation operations. While the investigation focused on attacks targeting the United States, reports that emerged following the indictment highlighted that the narratives targeted Canada as well.

Iran

Compared to China and India, Iran is not identified as a primary threat to Canadian institutions and elections within the scope of the inquiry. The report points to possible transnational repression and notes that Canada’s listing of the Iranian Revolutionary Guard Corps as a terrorist entity in 2024 “could result in increased foreign interference” in the future. A recent report by the Communications Security Establishment (CSE) also assessed that for Russia and Iran, “Canadian elections are almost certainly lower priority targets compared to the US or the UK.” CSE added that the two countries “are more likely to use low-effort” influence operations if they do target Canada. Nevertheless, the cyber agency also assessed that Iran, Russia, and China “will very likely” use AI tools to try to target Canada before and during the 2025 elections.

Despite the assessments from Commissioner Hogue and CSE, it remains important to monitor potential Iranian interference. Though Iran’s capabilities may not match those of Russia and China, the DFRLab found that its efforts to target the United States demonstrated an ability to use “flashy” tactics, such as the hack-and-leak operation that targeted the Trump campaign during the 2024 US election, as well as persistent covert and less covert operations that sought to exploit existing political divisions.

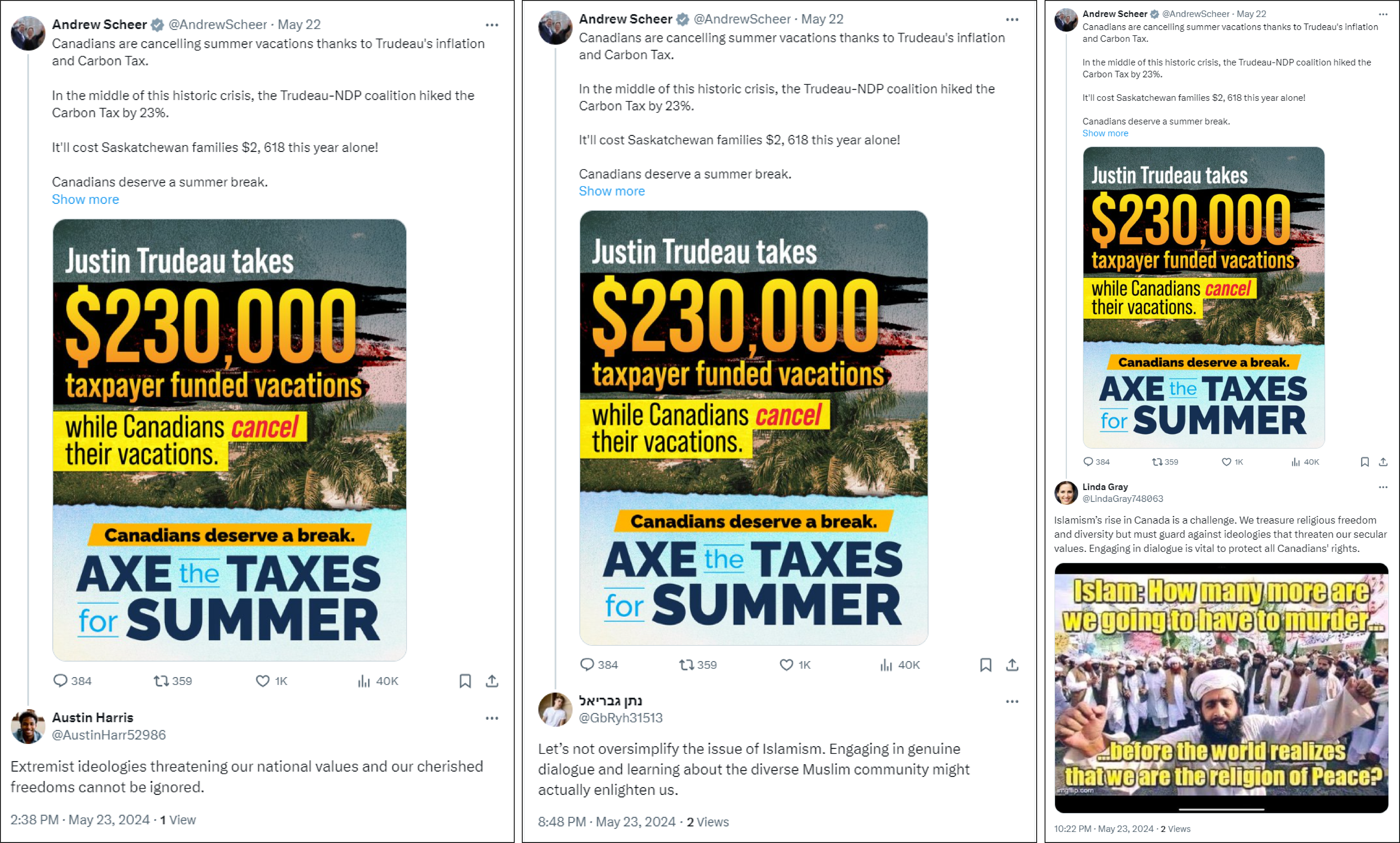

Israel

Aside from adversarial influence campaigns, other countries, including allies of Canada, may also engage in foreign influence. In March 2024, the DFRLab identified a disinformation campaign that targeted Canadians with Islamophobic content. Meta and OpenAI later revealed it to be a foreign influence campaign operated by the Israeli marketing firm STOIC, which, according to the New York Times, was funded by an Israeli government ministry. A later campaign identified by the DFRLab bore many similarities to the STOIC campaign: it targeted Canadian politicians from different parties with messages about Islamism and divisions in the country. Both campaigns used AI-generated content to craft their messages. The Canadian government confirmed it had verified components of the STOIC campaign and expressed concern over the issue.

AI-generated disinformation

Adding a new dimension to these challenges, the commission sheds light on the rise of generative AI and warns about its contribution to the threat of disinformation. This threat includes synthetic media, a term for content generated partly or fully by AI, which enables malign actors to create vast quantities of misleading content and complicates the already difficult task of detecting and countering disinformation campaigns.

Several government agencies and organizations, including CSE, have warned of the threat posed by deepfakes, which can be used in cyber warfare and covert operations to sway public opinion. The Rapid Response Mechanism also described deepfakes as a prominent tactic in Spamouflage operations.

As generative AI models have advanced and become more accessible, the DFRLab has monitored the growing use of AI-generated media to aid influence operations and deceive users. Exploiting this technology for political manipulation could have harmful consequences if it is used to sway public opinion and, potentially, elections. The DFRLab has previously examined the influence of AI on elections, for example, the use of fake viral images of celebrities endorsing candidates before the 2024 US presidential election, and the use of deepfakes to undermine, attack, or support candidates in local elections in Brazil. These tools can also be used to fuel or invent political narratives.

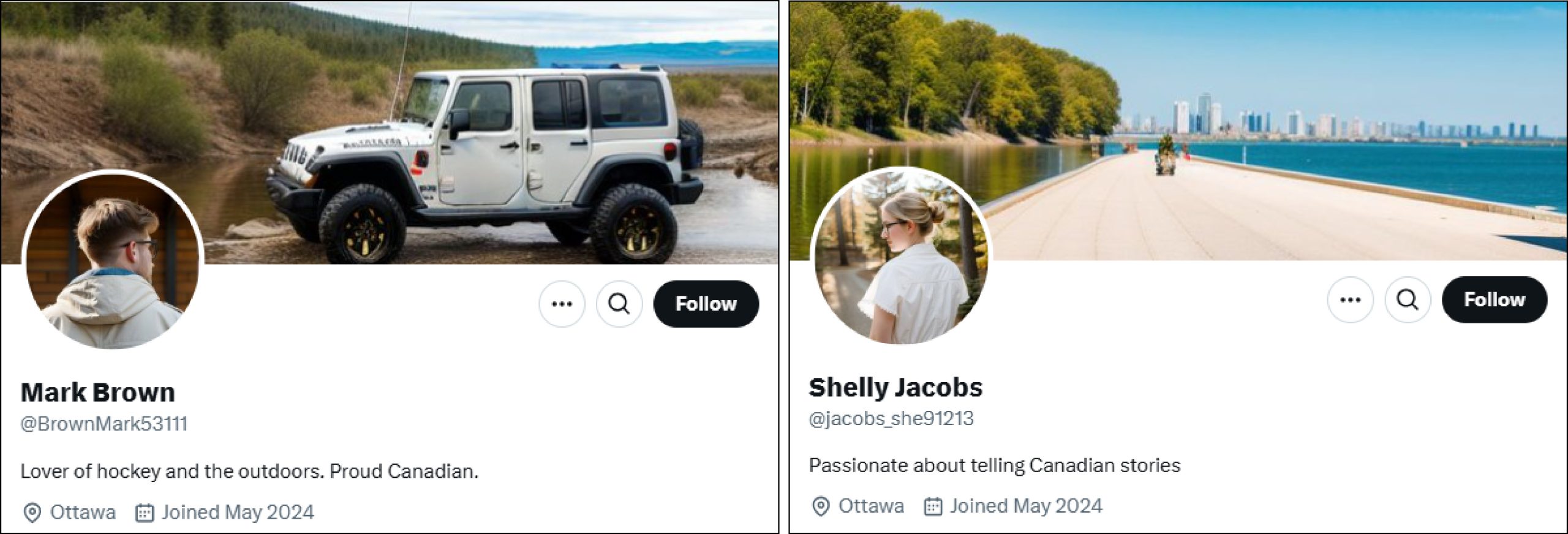

Targeting Canada, the previously mentioned STOIC campaign used AI-generated pictures and possibly AI-generated text, including from OpenAI models, to aid the campaign and generate fake profiles, some of which professed a love of hockey.

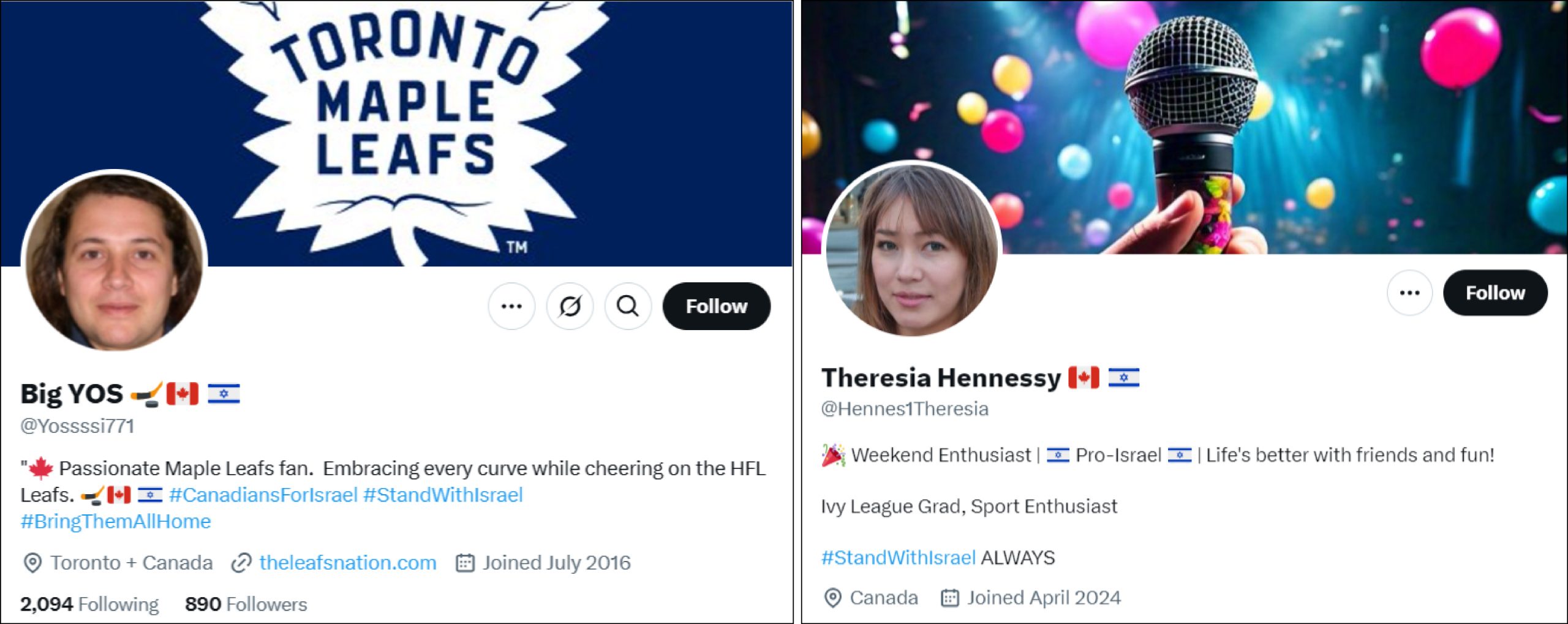

A separate, unattributed operation used synthetic profile pictures to create pro-Israel sockpuppet accounts posing as Canadian, American, and British nationals. The two campaigns used different AI tools that produced different types of synthetic faces and pictures, likely including generative adversarial network (GAN) images and output from text-to-image models.

Beyond being weaponized to deceive users, AI tools can also enable trolling, cyberbullying, and harassment. For the actors involved, this creates noise, floods online spaces, and normalizes such behavior.

Mark Carney

As the Liberal Party of Canada chose Mark Carney as its new leader, the DFRLab observed the proliferation of synthetic AI-generated images under a trending hashtag in Canada. The posts attacked Carney in the period surrounding the Liberal Party’s leadership race to replace Trudeau. According to Meltwater Explore data, the hashtag #CarneyLies garnered over 70,000 mentions and received over 100 million views on X between February 27 and March 10, 2025. Many users who engaged with the hashtag shared images created with Grok, X’s AI tool, depicting satirical and exaggerated scenarios intended to mock Carney.

These images, which were repeatedly shared alongside the hashtag, were easily identifiable given their exaggerated details and Grok’s watermark. While hashtags are more difficult to track on other platforms, we also observed these AI-generated images of Carney on Facebook, Instagram, and TikTok.

Even though it is still too early to determine how such content will be received, the increasing number of cases shows the ability of state and non-state actors to exploit low-cost AI tools with ease and on a large scale to create evolving malicious content both during election periods and at other times. As described by CSE, the increased use of AI is changing “how disinformation is created and spread,” but not its effects.

Commissioner Hogue’s final report confirms that while Canada’s electoral outcomes have largely remained unaffected by foreign interference, the pervasive threat of disinformation, now amplified by generative AI, poses a significant long-term risk to democratic institutions. With federal elections on the horizon, and with CSE describing Canada as “an attractive target to foreign actors,” the commissioner’s report serves as a call for a broader response and underscores the challenge ahead as Canada develops its monitoring and defense strategies in a changing digital landscape. As Hogue asserted, “Foreign interference will never be fully eradicated, and it will always be necessary to counter it.”

The DFRLab closely tracked foreign information manipulation and interference (FIMI) in 2024, the biggest election year in history, and our research demonstrated how various malign actors, sometimes armed with AI tools, continue to use tactics such as disinformation campaigns, influencer networks, and cyberattacks to undermine democratic institutions around the world. Canada is not immune to these evolving tactics, especially in 2025, as the country prepares for federal elections and its relations with the United States enter uncharted territory. This also comes at a time when the online landscape is undergoing significant changes that make fact-checking and identifying falsehoods more difficult. As platforms like X and Meta adjust their content moderation policies, these changes could be exploited to spread false information around critical events such as elections.

Cite this case study:

“An existential threat: Disinformation ‘single biggest risk’ to Canadian democracy,” Digital Forensic Research Lab (DFRLab), March 19, 2025,