How social media manipulation fuels anti-Ukraine sentiment in Poland

How inauthentic social media activity exploits political and security events to seed division between Poland and Ukraine

BANNER: Polish President Karol Nawrocki and Ukraine’s President Volodymyr Zelenskyy address the media during a press conference at the Presidential Palace in Warsaw, Poland, on December 19, 2025. (Source: Aleksander Kalka via Reuters)

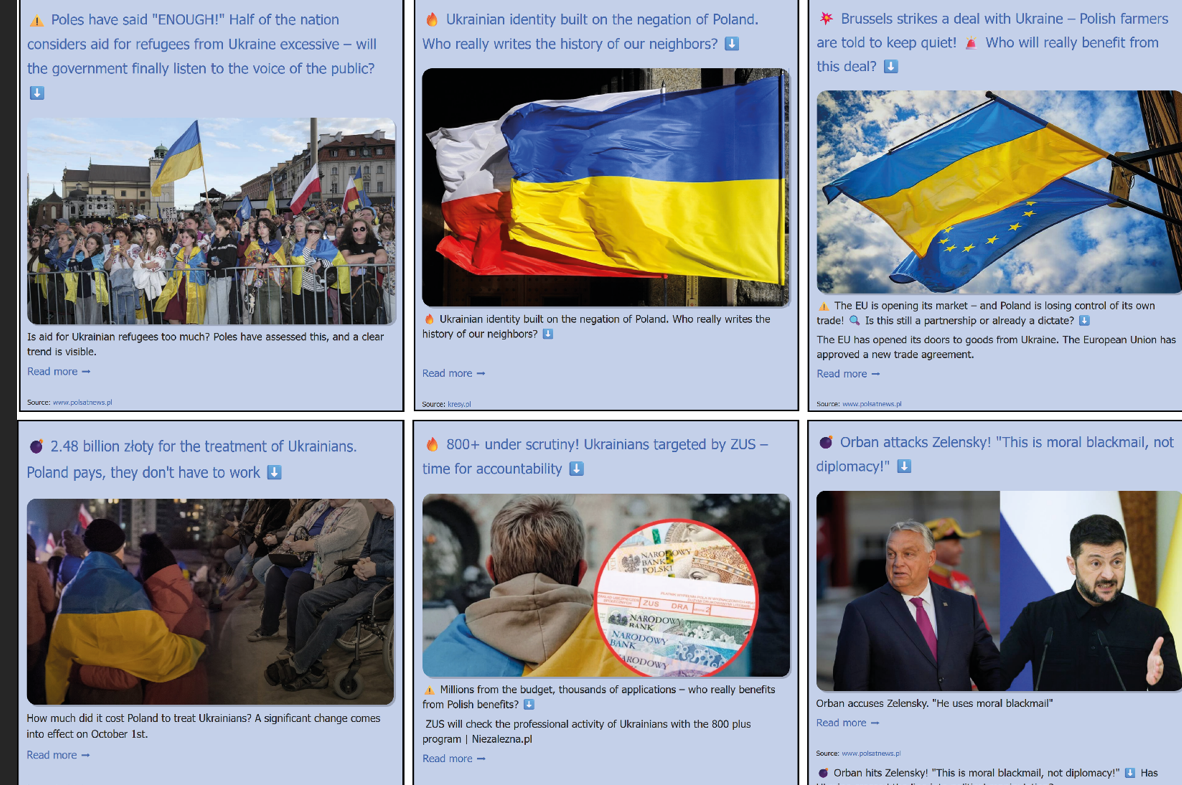

Anti-Ukraine narratives have recently gained traction in Poland, sparked by two events: President Karol Nawrocki’s veto of a bill on support for Ukrainian refugees, and the incursion of Russian drones into Polish airspace. These events prompted a surge of misleading social media narratives blaming Ukrainians for Poland’s economic burdens and security threats. Coordinated networks manipulated social media platforms to amplify this hostility, seeking to fracture public support for Ukraine and destabilize Poland-Ukraine relations.

Poland currently hosts around one million Ukrainian refugees, mostly women and children, but public attitudes toward them have shifted since the war began. According to Public Opinion Research Center (CBOS) data, 51 percent of Poles viewed Ukrainians positively in 2022, but by early 2025 that figure had dropped to 30 percent, with 38 percent expressing negative views. The anti-Ukraine narratives circulating in Poland seek to exploit genuine grievances to widen existing divisions, a tactic common in Russian-backed information operations.

On August 25, President Nawrocki vetoed an amendment to the Act on Assistance to Ukrainian Citizens that would have extended support for Ukrainians in Poland. He argued that the Family 800+ benefit should be available not to all Ukrainians, but only to those who work and pay taxes in Poland. The benefit provides families with PLN 800 (roughly USD 200) per child per month to help cover basic living costs. The government and the president subsequently agreed to allow foreign families to receive the benefit only if the adults are working and the children are enrolled in school, and Nawrocki signed the revised bill into law in late September.

Following this, malicious narratives circulated online describing Ukrainians as people who live off Poland’s generosity while giving little in return. These messages claimed that refugees depend on benefits rather than working, exploit social programs, take money meant for citizens, and drain state resources. The language was often dehumanizing, portraying Ukrainians as freeloaders who take everything for free, at the expense of Poles.

Although concerns about the economic and social costs of hosting Ukrainians are widespread, and intensified after Nawrocki’s veto, 78 percent of Ukrainians in Poland are employed. According to a report by the United Nations High Commissioner for Refugees (UNHCR), their work and spending contributed to a 2.7 percent increase in Poland’s gross domestic product (GDP) in 2024, and 80 percent of refugee households’ income comes from their own earnings. In 2024, Ukrainians also contributed PLN 15.2 billion (around USD 4.23 billion) to the Polish state budget while receiving PLN 2.8 billion in Family 800+ benefits, according to a report by Poland’s state development bank, Bank Gospodarstwa Krajowego. Nevertheless, false claims of freeloading and parasitism continue to spread.
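Setting the two Bank Gospodarstwa Krajowego figures side by side shows the scale of the gap. Note that this compares budget contributions only against Family 800+ payments, not against all public spending related to refugees:

$$
\frac{\text{PLN } 15.2 \text{ billion (contributions to the state budget)}}{\text{PLN } 2.8 \text{ billion (Family 800+ benefits)}} \approx 5.4
$$

On these figures, Ukrainians paid in roughly five times what they received through the program, a net difference of about PLN 12.4 billion.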

Anti-Ukrainian sentiment grew even stronger following the incursion of Russian drones into Poland on the night of September 9 to 10. A recurring narrative blamed Ukraine, accusing it of trying to drag Poland and NATO into war with Russia.

Poland’s military and political support for Ukraine plays an important role in Ukraine’s resistance to Russian military aggression, and this alliance represents a significant priority for Poland as well. Ukraine’s defeat in the war with Russia would pose a serious national security threat to Poland. At the same time, rising hostility toward Ukrainian refugees within Poland creates risks to social cohesion and public safety.

For this investigation, the DFRLab compiled more than eighteen keywords, phrases, and hashtags related to Nawrocki’s veto and the drone incursion and analyzed their mentions on Facebook, YouTube, and TikTok from August 25 to September 25. Using these terms, the DFRLab collected more than 400,000 posts, comments, and video transcripts and used OpenSearch to analyze the data and identify patterns of coordination and platform manipulation. We found that likely coordinated networks, often consisting of a small number of accounts, used social media manipulation to entrench anti-Ukraine views and undermine the Polish-Ukrainian alliance.
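The collection step described above can be sketched as a simple keyword filter over a date window. This is a minimal illustration under stated assumptions, not the DFRLab’s pipeline: the `Post` structure and the `KEYWORDS` list are hypothetical, and the actual analysis was run in OpenSearch rather than in plain Python.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Post:
    """Hypothetical record for one collected post, comment, or transcript."""
    platform: str
    published: date
    text: str


# Hypothetical example terms (Polish for "veto", "800 plus", "drones",
# "this is not our war"); the DFRLab's actual keyword list is not reproduced here.
KEYWORDS = ["weto", "800 plus", "drony", "to nie nasza wojna"]

# Study window from the investigation: August 25 to September 25, 2025 (inclusive).
START, END = date(2025, 8, 25), date(2025, 9, 25)


def mentions_keyword(post: Post, keywords: list[str]) -> bool:
    """Case-insensitive substring match against any keyword."""
    text = post.text.casefold()
    return any(keyword.casefold() in text for keyword in keywords)


def collect(posts: list[Post], keywords: list[str] = KEYWORDS) -> list[Post]:
    """Keep posts published inside the window that mention at least one keyword."""
    return [
        post for post in posts
        if START <= post.published <= END and mentions_keyword(post, keywords)
    ]
```

Substring matching is deliberately crude: it misses inflected Polish word forms and any message carried only in images or video.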

A key limitation of this investigation is the lack of access to transparency data that would confirm with certainty that the accounts behind such activity are inauthentic. Some accounts and pages pushing anti-Ukrainian narratives appear real, share ordinary content, and are active in ordinary groups. Yet their posting patterns at times diverge from the norm and synchronize with those of other accounts, raising suspicions that they could be coordinated or hijacked profiles. Greater transparency and data access would help researchers identify inauthenticity more effectively. The need is all the more pressing because malicious actors increasingly use AI to create unique, seemingly authentic profiles at scale, making it harder to distinguish authentic user behavior from organized manipulation.

A ‘security threat’

One dominant narrative framed Ukraine as the primary threat to Poland, while dismissing the threat from Russia. Dehumanizing language portrayed Ukrainians as “enemies” or “historical adversaries.” Narratives called for stricter border controls and the deportation of Ukrainians living in Poland.

On August 23, the Facebook page of the clickbait website udostepnij.pl posted, “Poland shows no mercy to lawbreakers! A Ukrainian was deported for hooliganism.” A link in the comments section led to an article on udostepnij.pl that inaccurately reported on a real case involving the deportation of a Ukrainian man with a history of police encounters. A few hours later, seven Facebook pages (here, here, here, here, here, here, and here) published the exact same post with identical visuals, all within the same minute, indicating that posting across these pages was likely automated. The pages had names unrelated to politics, such as “We are breaking the record of likes for John Paul II” or “Hug me like you’ve never hugged anyone before.”
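The same-minute signal described above can be checked mechanically. The sketch below is a hypothetical illustration, assuming posts are available as `(page_id, timestamp, text)` tuples; it groups posts by normalized text and by the minute in which they were published, and flags groups posted by several distinct pages. It compares text only; matching identical visuals would require an additional step such as image hashing, which is not shown.

```python
from collections import defaultdict
from collections.abc import Iterable
from datetime import datetime


def same_minute_clusters(
    posts: Iterable[tuple[str, datetime, str]], min_pages: int = 3
) -> dict[tuple[str, datetime], set[str]]:
    """Return {(normalized_text, minute): page_ids} for texts that at least
    `min_pages` distinct pages published within the same clock minute."""
    groups = defaultdict(set)
    for page_id, timestamp, text in posts:
        normalized = " ".join(text.split()).casefold()  # collapse whitespace, ignore case
        minute = timestamp.replace(second=0, microsecond=0)  # truncate to the minute
        groups[(normalized, minute)].add(page_id)
    # Keep only texts pushed by enough distinct pages in the same minute.
    return {key: pages for key, pages in groups.items() if len(pages) >= min_pages}
```

Fixed one-minute buckets are a simplification: posts published at 12:00:59 and 12:01:01 land in different buckets, so a fuller analysis would more likely use a sliding time window.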

These seven pages have a combined 700,000 followers. The comment section of each post linked to an article on Fast-news.pl whose title and banner image matched those of the udostepnij.pl article, although the main text differed. The DFRLab found at least two other instances in which udostepnij.pl and the seven Facebook pages published identical posts. While udostepnij.pl may not belong to the network of seven pages, it benefits from the network’s amplification.

The seven coordinated pages primarily amplify content from Fast-news.pl. In 2024, this website shared the same Google AdSense publisher ID (CA-PUB-6371535130732086) as News.pl, suggesting a connection or even shared ownership. As of October 2025, News.pl automatically redirects visitors to Gazeta.pl, which suggests that News.pl could be owned by the same entity as Gazeta.pl or that the two have some form of partnership.
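Shared publisher IDs of this kind can be surfaced by scanning page source for the `ca-pub-` pattern. The sketch below is illustrative, assuming the HTML of each site (for example, from archived 2024 snapshots) has already been retrieved into a dictionary, and assuming the 16-digit format of the ID cited above. A shared ID is a lead worth investigating, not proof of common ownership.

```python
import re
from collections import defaultdict

# Assumed format: "ca-pub-" followed by 16 digits, as in the ID cited in the text.
ADSENSE_ID = re.compile(r"ca-pub-\d{16}\b", re.IGNORECASE)


def shared_publisher_ids(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each AdSense publisher ID to the domains whose HTML contains it,
    keeping only IDs found on more than one domain.

    `pages` maps a domain name to previously retrieved HTML (hypothetical input).
    """
    domains_by_id = defaultdict(set)
    for domain, html in pages.items():
        for pub_id in ADSENSE_ID.findall(html):
            domains_by_id[pub_id.lower()].add(domain)  # normalize case: CA-PUB == ca-pub
    return {pub_id: domains for pub_id, domains in domains_by_id.items() if len(domains) > 1}
```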

Udostepnij.pl is a clickbait-style website that promotes its content on Facebook to draw users to its external website. The website and Facebook page are run by Piotr Bodek Art Unlimited Studio Projektowe, according to Facebook transparency data. The presence of a comprehensive Google advertising and analytics setup (adsbygoogle, google_tag_manager, gtag, googletag_data) on the website indicates that it seeks to monetize traffic through ads. The site also uses a Facebook tracking pixel (_fbq, FB) that sends visit and engagement data back to Facebook, allowing operators to track users and retarget them with similar content.

The site’s outgoing links show that udostepnij.pl primarily directs users to a cluster of right-wing and conservative Polish websites, most frequently Wpolityce.pl, Niezalezna.pl, Fronda.pl, Kresy.pl, Tysol.pl, Wpolsce24.pl, Dorzeczy.pl, Wmeritum.pl, and Se.pl. The site operates a feed-style homepage, and its newest posts still carry the SEO data of the original source material, including copied meta tags and image filenames that contain the original domain identifiers.

Udostepnij.pl also runs a monetized YouTube channel, udostepnijpl, which the website links to and which offers a “Join” option for paid memberships. Created on October 10, 2021, the channel has over 1,060 videos and more than 6 million views. There is no publicly available data on how much money udostepnij.pl has earned from the channel. YouTube analytics tools can provide estimated income ranges; the vidIQ extension, for example, estimated the @udostepnijpl channel’s monthly earnings at between USD 413 and USD 1,000. These figures are highly approximate, unconfirmed, and vary with video performance in a given month, so they are not reliable indicators of actual revenue.

The activities of the Facebook pages promoting udostepnij.pl exemplify coordinated amplification. Synchronized posts across multiple misleadingly named pages indicate automated posting and inauthentic behavior.

‘Freeloaders’

Following Nawrocki’s veto, the DFRLab identified more than 2,800 comments on Facebook, YouTube, and TikTok portraying Ukrainians in Poland as “freeloaders,” “parasites,” or “idle.” The DFRLab also uncovered a network of Facebook accounts and groups spreading anti-Ukraine narratives about the veto, including claims that only Poles work and pay taxes while Ukrainians live off state benefits. One video referred to Ukrainians in Poland as “parasites,” falsely alleged that the veto would lead to the forced deportation of one million Ukrainians from Poland, and suggested that Ukrainian President Volodymyr Zelenskyy would gain “fresh meat” for the frontlines of the war against Russia. This network is affiliated with a fringe spiritual and esoteric sect, the Bright Side of the Force (“Jasna Strona Mocy,” or JSM), which shares mystical teachings, promotes its own system of “Laws of Life,” and positions itself as a source of hidden or superior knowledge.

As of October 16, one video had been shared more than 770 times, primarily by JSM-linked accounts, in Facebook groups unrelated to politics, including groups centered on running, self-improvement, real estate, and entertainment. Despite the high share count, the post drew only 146 reactions, indicating minimal authentic engagement.

Under the guise of a spiritual movement, JSM promotes conspiracy theories and anti-Ukrainian narratives. The organization operates several Facebook pages (here, here, here, here, here, here) and groups (here, here, here). The DFRLab identified more than ten Facebook profiles (here, here, here, here, here, here, here, here, here, here, here, here, here) tied to JSM, based on their use of “jsm” in account names and their active sharing of JSM content across multiple groups. Beyond Facebook, JSM maintains a presence on other social media platforms, including TikTok, YouTube, and X.

This tactic indicates that a small but active network linked to JSM used multiple Facebook pages, groups, and accounts to push inflammatory, conspiratorial, and anti-Ukrainian content into non-political groups, reaching broader audiences. The JSM network illustrates how much harder narratives are to track when political content circulates in spaces that appear entirely apolitical on the surface. JSM’s accounts push anti-Ukrainian videos into hobby and lifestyle groups, where they are more likely to evade detection, and because much of the material is visual, it also evades keyword searches.

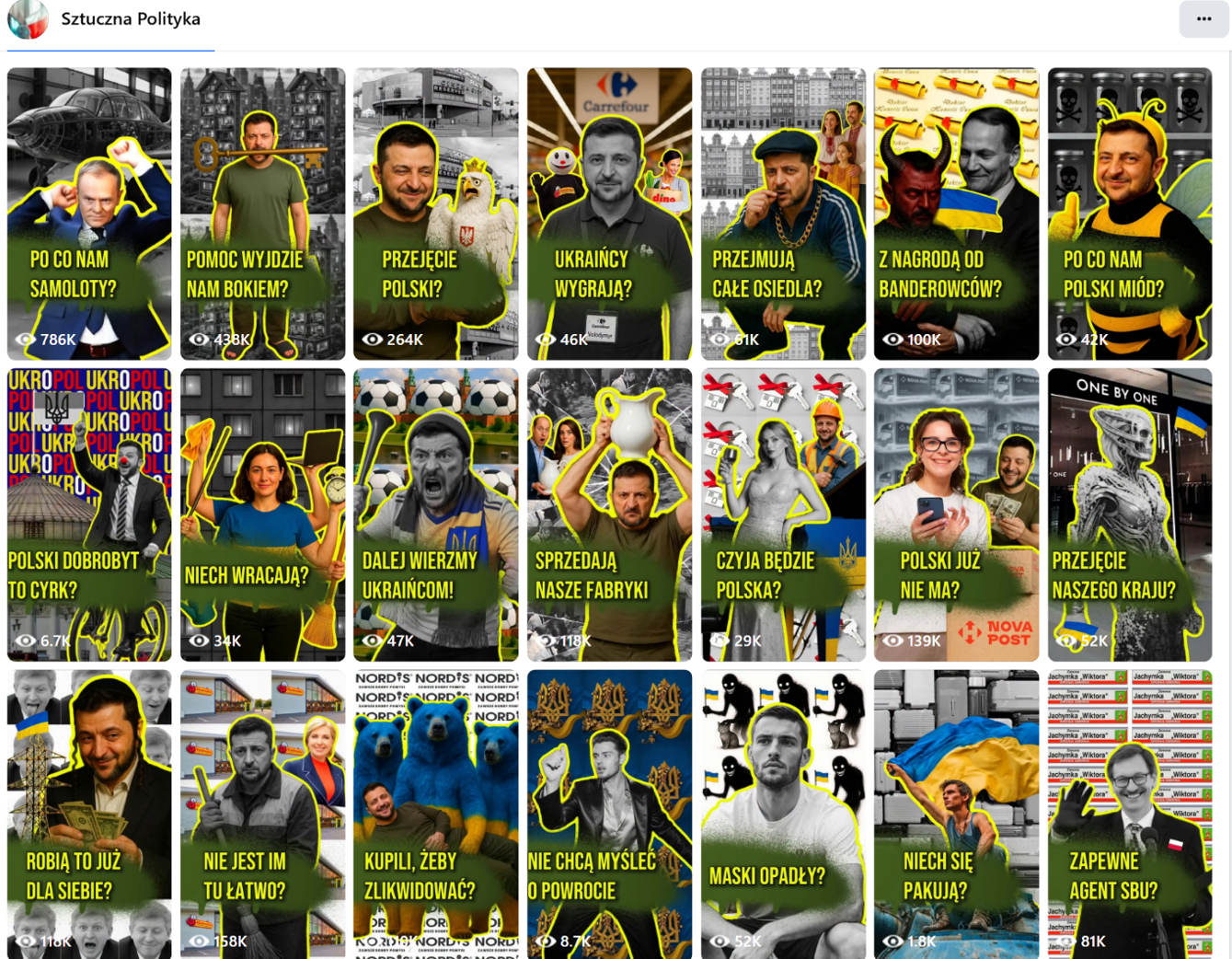

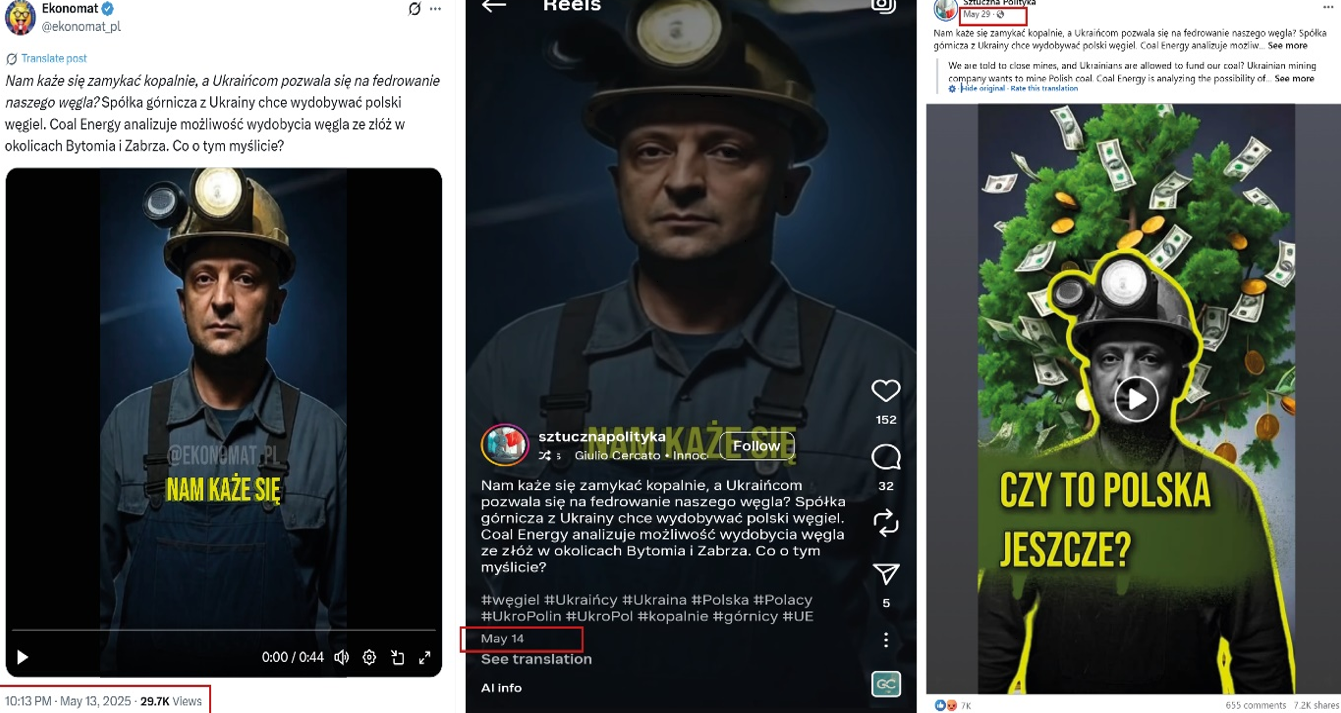

The DFRLab also identified a Facebook page, Sztuczna Polityka (“Artificial Politics”), which consistently portrays the expansion of Ukrainian companies in Poland in a negative light and depicts Ukrainians, particularly President Zelenskyy, as “greedy.” The page regularly posts anti-Ukrainian AI-generated videos dubbed in Polish. It also has an Instagram account under the same name. The Facebook page began posting AI-generated videos in May 2025, while the Instagram account started posting in April 2025.

The DFRLab conducted a reverse image search of Sztuczna Polityka’s video captions and found that the X account Economat_pl, which frequently posts anti-Ukraine content, had earlier published the same AI-generated videos with an economat_pl watermark. The findings suggest that Economat_pl published some of these videos first (examples here, here, and here), after which Sztuczna Polityka repackaged them with slightly altered cover frames (here, here, and here). However, there is no consistent posting pattern between the two accounts or other evidence of a direct relationship, and the time gap between posts ranges from a few hours to several days, suggesting indirect amplification.

Sztuczna Polityka’s Facebook videos attract tens of thousands of views, but their engagement patterns appear suspicious. The first three videos, posted in May, received between zero and six shares each, but the fourth, posted on May 22, reached 908 shares. Engagement then dropped until May 29, when a video about Polish mines spiked to 7,200 shares. These sudden surges, with no corresponding ads, suggest inauthentic activity on the page.
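A sudden jump in shares relative to a page’s own history can be flagged with a simple baseline rule. In this hypothetical sketch, a post is flagged when its share count exceeds a multiple of the median share count of the page’s earlier posts; the defaults `factor=10.0` and `min_history=3` are arbitrary assumptions, and ruling out paid promotion would still require checking ad transparency data separately.

```python
from statistics import median


def share_spikes(shares: list[int], factor: float = 10.0, min_history: int = 3) -> list[int]:
    """Return indices of posts whose share count exceeds `factor` times the
    median share count of all earlier posts on the same page.

    Posts with fewer than `min_history` predecessors are never flagged.
    """
    spikes = []
    for i in range(min_history, len(shares)):
        baseline = max(median(shares[:i]), 1)  # floor at 1 so an all-zero history still works
        if shares[i] > factor * baseline:
            spikes.append(i)
    return spikes
```

For an illustrative sequence such as `[0, 3, 6, 908, 40, 25, 7200]` (invented values, not the page’s actual counts), the function returns `[3, 6]`.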

Drones in Poland

On the night of September 9 to 10, around nineteen reportedly Russian drones crossed into Polish territory, prompting Poland to invoke Article 4 of the North Atlantic Treaty. Article 4 allows any NATO member to request urgent consultations with allies when it considers its security threatened. The incursion became a flashpoint for a wave of conspiracy theories. Online networks amplified three main narratives: that the drone incursion was a Ukrainian false flag operation, that it was a Ukrainian provocation, and that the war in Ukraine is “not Poland’s war.”

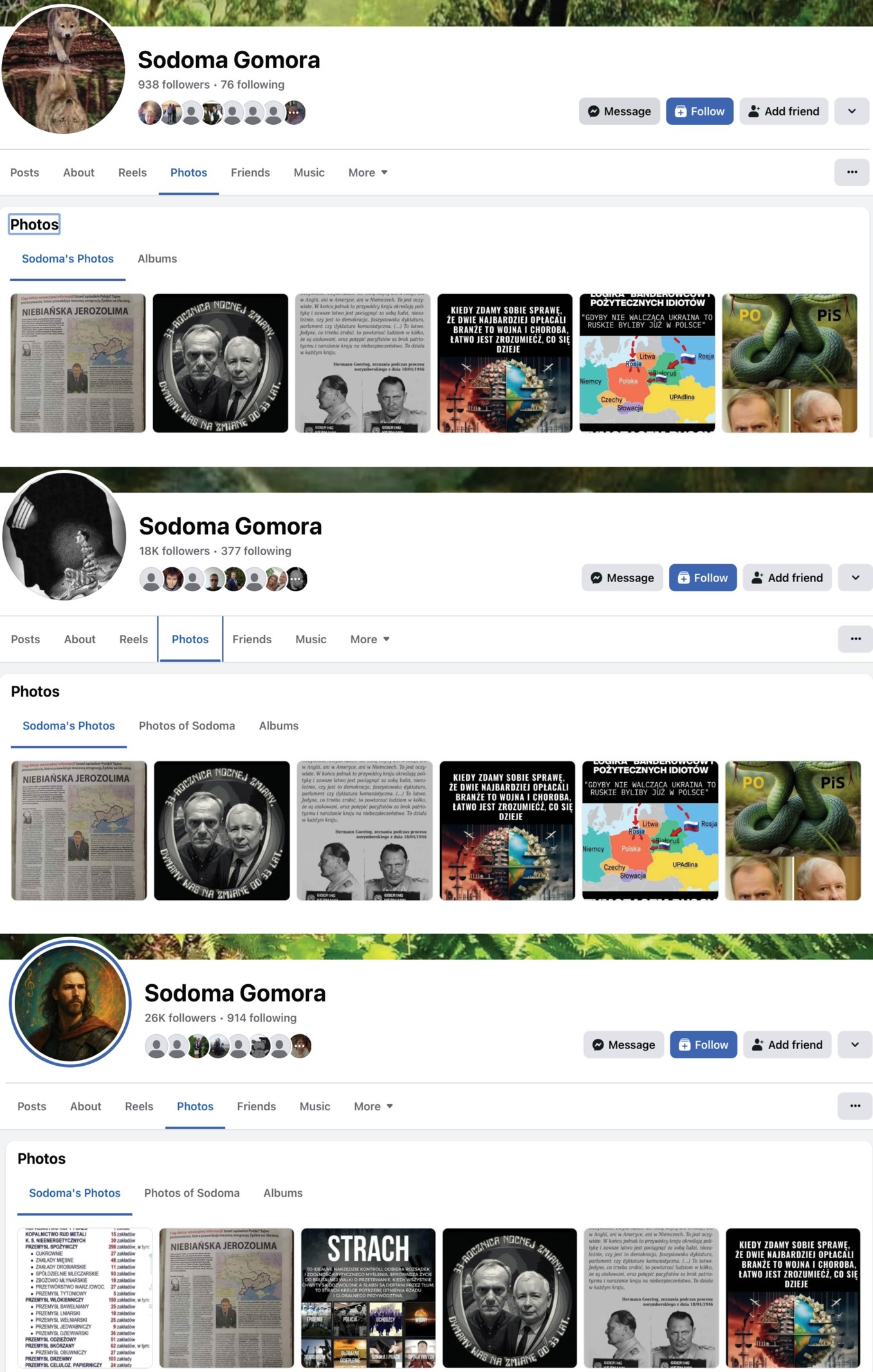

The DFRLab identified a small network of Facebook accounts pushing the narrative that the drone attack was a Ukrainian false flag operation. According to their posts, Ukraine’s supposed goal was to draw Poland into open conflict with Russia. Three accounts sharing the same name, a reference to Sodom and Gomorrah, posted nearly identical content across Facebook; the shared name is further evidence of the accounts’ inauthenticity. The intervals between identical posts across the accounts were sometimes several minutes long, suggesting manual rather than automated coordination. Together, the three accounts have almost 40,000 followers.
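The distinction drawn above between near-simultaneous and minutes-apart posting can be expressed as a timing heuristic. This hypothetical sketch labels a set of identical posts by the span between the first and last publication; the one-minute cutoff is an assumption, and timing alone cannot prove whether activity is automated or manual.

```python
from datetime import datetime, timedelta


def classify_timing(
    timestamps: list[datetime], automated_within: timedelta = timedelta(minutes=1)
) -> str:
    """Label a set of identical posts by the span between first and last publication."""
    if len(timestamps) < 2:
        return "single post"
    span = max(timestamps) - min(timestamps)
    # Near-simultaneous publication across accounts points to scheduling or automation;
    # gaps of several minutes are more consistent with someone posting by hand.
    return "likely automated" if span < automated_within else "possibly manual"
```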

Another network of three interconnected nationalist Facebook pages also amplified the claim that the drone incursion was a Ukrainian provocation intended to drag Poland and NATO into war with Russia. These pages are “Polska i Ukraina” (“Poland and Ukraine”), “Polski Ruch Patriotyczny” (“Polish Patriotic Movement”), and “Nie dla banderyzmu” (“No to Banderism”). The pages frequently share each other’s anti-Ukrainian posts. Many of their posts feature screenshots of articles from Kresy.pl, a website that has for years published material portraying Ukrainians in a negative light.

Two pages, Polska i Ukraina and Nie dla banderyzmu, share the same crowdfunding profile and link to the same fundraising page, suggesting a possible connection between them. Although these pages appear to exhibit coordinated behavior, stronger confirmation of a structural connection is not possible with the publicly available data.

On September 10, the Polska i Ukraina Facebook page published a screenshot from TVN24 alongside text speculating that the drone attack could be “another Ukrainian provocation” and suggesting that the drones might have been redirected through GPS interference. Posts amplifying the Ukrainian provocation narrative received thousands of shares.

TikTok amplification

The DFRLab identified more than ten English-language TikTok accounts (here, here, here, here, here, here, here, here, here, here, here) posting videos claiming that Ukraine attacked Poland. The first identified video promoting this narrative was created by Ethan Levins, a self-described independent journalist who frequently posts anti-Ukraine content. The narrative was later amplified by other accounts that independently created and edited content to further the claim’s spread. Despite the variations, all the videos included the same caption: “Ukraine attacked Poland.” Most of the accounts sharing these clips had a history of amplifying content from Levins.

‘This is not our war’

Suspicious accounts also spammed the Facebook comment sections of Polish media outlets and politicians. These accounts further demonstrate how difficult it is to prove inauthenticity based solely on publicly available data. In information operations, we increasingly encounter accounts that closely resemble real users and raise suspicions of account hijacking.

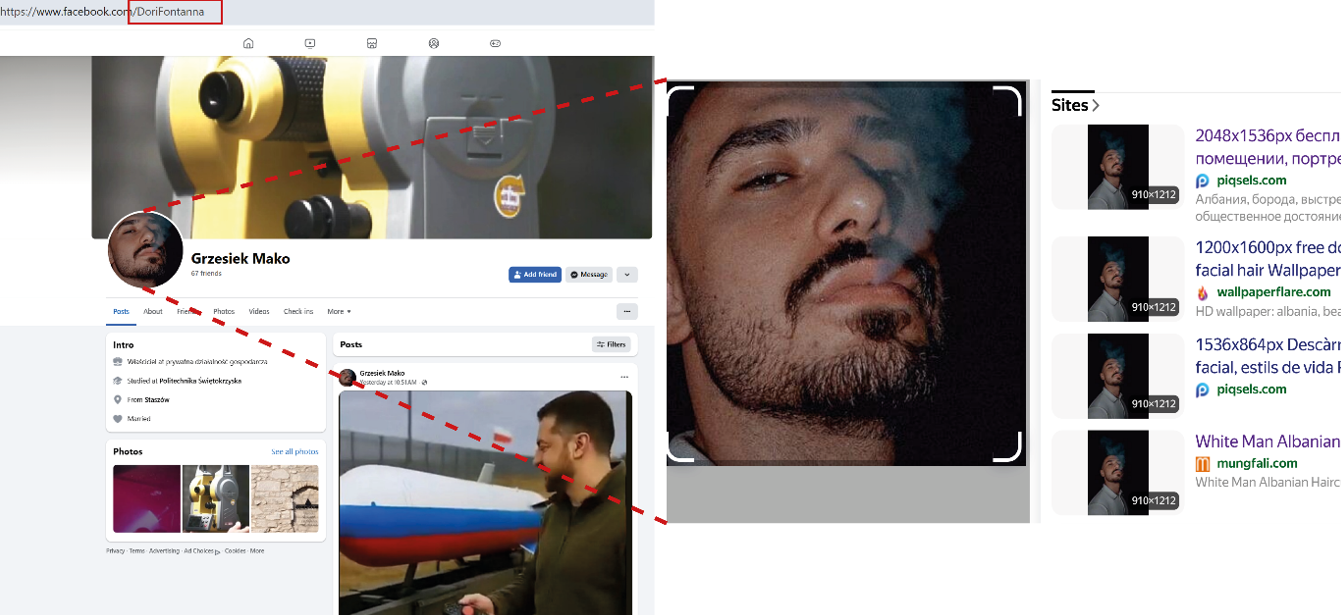

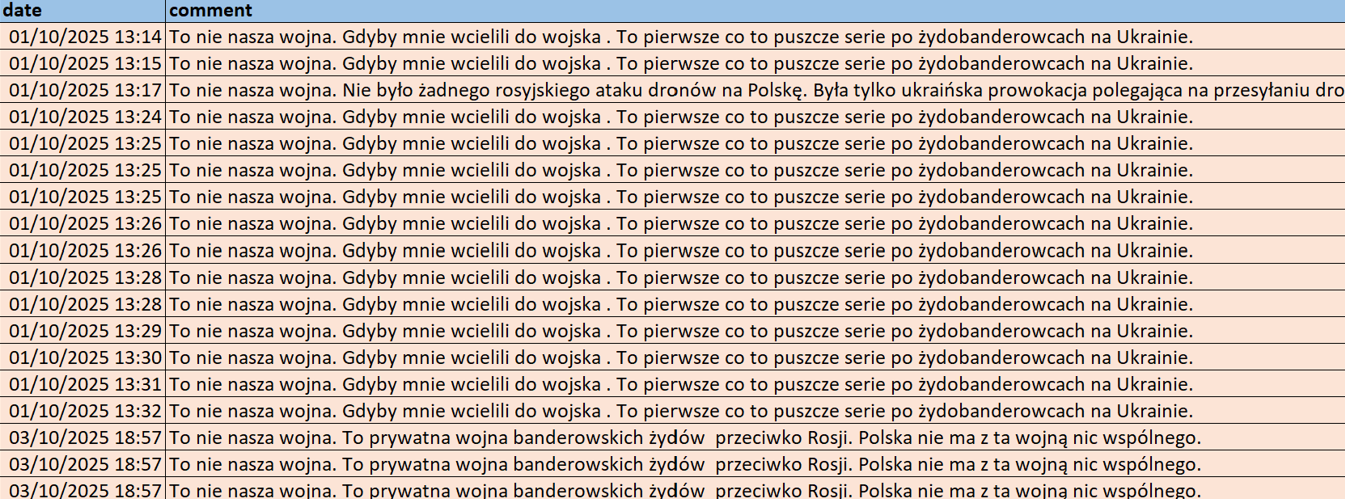

Two suspicious accounts we analyzed promoted an anti-Ukrainian narrative claiming that the war in Ukraine is not Poland’s war, using the phrase “to nie nasza wojna” (“this is not our war”). For both accounts, the display name differs from the name in the profile URL. For example, the account Grzesiek Mako has the URL name “DoriFontanna.” A reverse image search of its profile picture indicates that the account is inauthentic, as the image is available on wallpaper and stock imagery websites. The account posted eighteen similar comments on the Facebook pages of news outlets; fourteen of them were identical and contained violent language.

These comments appeared under posts by two Polish news outlets, Polityka and Fakt, on October 1 between 13:14 and 13:32. One comment also addressed the September 2025 Russian drone incursion, claiming that there had been no Russian drone attack on Poland and that the incident was a Ukrainian provocation in which Ukrainians sent drones into Poland to draw it into a war with Russia.

This investigation reveals how coordinated networks in Poland are using inauthentic amplification, platform manipulation, and emotionally charged messaging to spread anti-Ukrainian narratives. These campaigns appear to be part of a broader strategy to erode public support for Ukraine, distort political discourse, and undermine Poland’s role as a key ally of Ukraine.

Cite this case study:

Givi Gigitashvili, Sopo Gelava, “How social media manipulation fuels anti-Ukraine sentiment in Poland,” Digital Forensic Research Lab (DFRLab), December 21, 2025, https://dfrlab.org/2025/12/21/how-social-media-manipulation-fuels-anti-ukraine-sentiment-in-poland/.