How AI tools fueled online conspiracy theories after Trump assassination attempt

Deepfakes, cheapfakes, and AI-fueled scams spread online following the shooting; in other instances, real footage was dismissed as fake

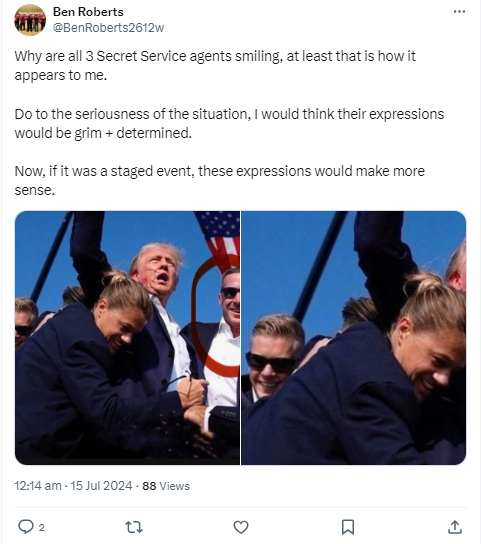

BANNER: Photograph taken by Associated Press photojournalist Evan Vucci, left, and the manipulated version showing the agents smiling, right. (Source: Associated Press, left; @Twittevitboom, right)

The online aftermath of the July 13, 2024 assassination attempt against former President Donald Trump serves as an object lesson in how manipulated media can mislead and disorient internet users following breaking news events. This piece illustrates how AI tools can influence breaking news events and heighten confusion, particularly when audiences are highly engaged. This is evidenced by social media users who attempted to use AI chatbots to glean information about the shooting without understanding that chatbots are not intended to serve as breaking news sources. This confusion is also exploitable: for example, generative AI audio purporting to be Elon Musk discussing the shooting was used to promote a crypto scam. The confusion also led users to question the authenticity of a leaked phone call between Robert F. Kennedy Jr and Trump.

The ubiquity of digital production tools, including generative artificial intelligence (GAI), allows users to disseminate fake or altered footage with relative ease. In some cases, such footage can be challenging for the average person to recognize as manipulated, increasing the likelihood that it spreads widely before it can be refuted. And as the public becomes more aware of GAI and encounters manipulated footage on a recurring basis, there is a greater risk of the so-called liar’s dividend paying off for malign actors, who can exploit the resulting loss of public trust to cast doubt on information, whether true or false.

While monitoring social media posts in the days following the shooting, the DFRLab found examples of both situations: the creation of manipulated media for ideological or financial gain, and instances in which people expressed uncertainty as to whether the media they were consuming was authentic or synthetic. Although not all of these examples attracted national attention, their potential impact is cumulative, as each one compounds public mistrust in information.

Manipulated photo ignites claims of staged attack

On July 14, one day after the assassination attempt, a manipulated version of an authentic photo taken by Associated Press photojournalist Evan Vucci circulated on social media. The original photograph shows three Secret Service agents with neutral expressions surrounding Trump; in the digitally altered photo, the agents appear to be smiling. The deepfake detection tool True Media found substantial evidence that the photo had been manipulated, though it could not determine whether the alteration was made with GAI or with more traditional photo editing techniques.

A query using the social media monitoring tool Meltwater Explore for the keywords “Secret Service” and “smiling” over the period of July 9, 2024 to August 8, 2024 returned 5,340 mentions on X, concentrated in two spikes: the first on July 14 (2,541 mentions) and the second on July 29 (804 mentions). These posts garnered 1.94 million views and had a reach of 9.14 million users.

The altered photo was frequently used in posts supporting the unfounded narrative that the attack was staged, particularly during the July 14 spike. For instance, the account @BenRoberts2612w posted the manipulated photo and wrote, “Why are all 3 Secret Service agents smiling, at least that is how it appears to me. Do [sic] to the seriousness of the situation, I would think their expressions would be grim + determined. Now, if it was a staged event, these expressions would make more sense.” Another account, @David0100100100, commented, “The way the 3 Secret Service are smiling. Probably couldn’t keep a straight face when Gutless Wonder yelled, ‘Fight, fight, fight.’” The latter post garnered more than 260,000 views.

The second spike, two weeks later, was likely driven by reports that Meta had mistakenly blocked the dissemination of the original AP photo while attempting to block the manipulated one. “This fact check was initially applied to a doctored photo showing the secret service agents smiling, and in some cases our systems incorrectly applied that fact check to the real photo,” Meta spokesperson Dani Lever acknowledged on X in multiple replies to conservative influencers.

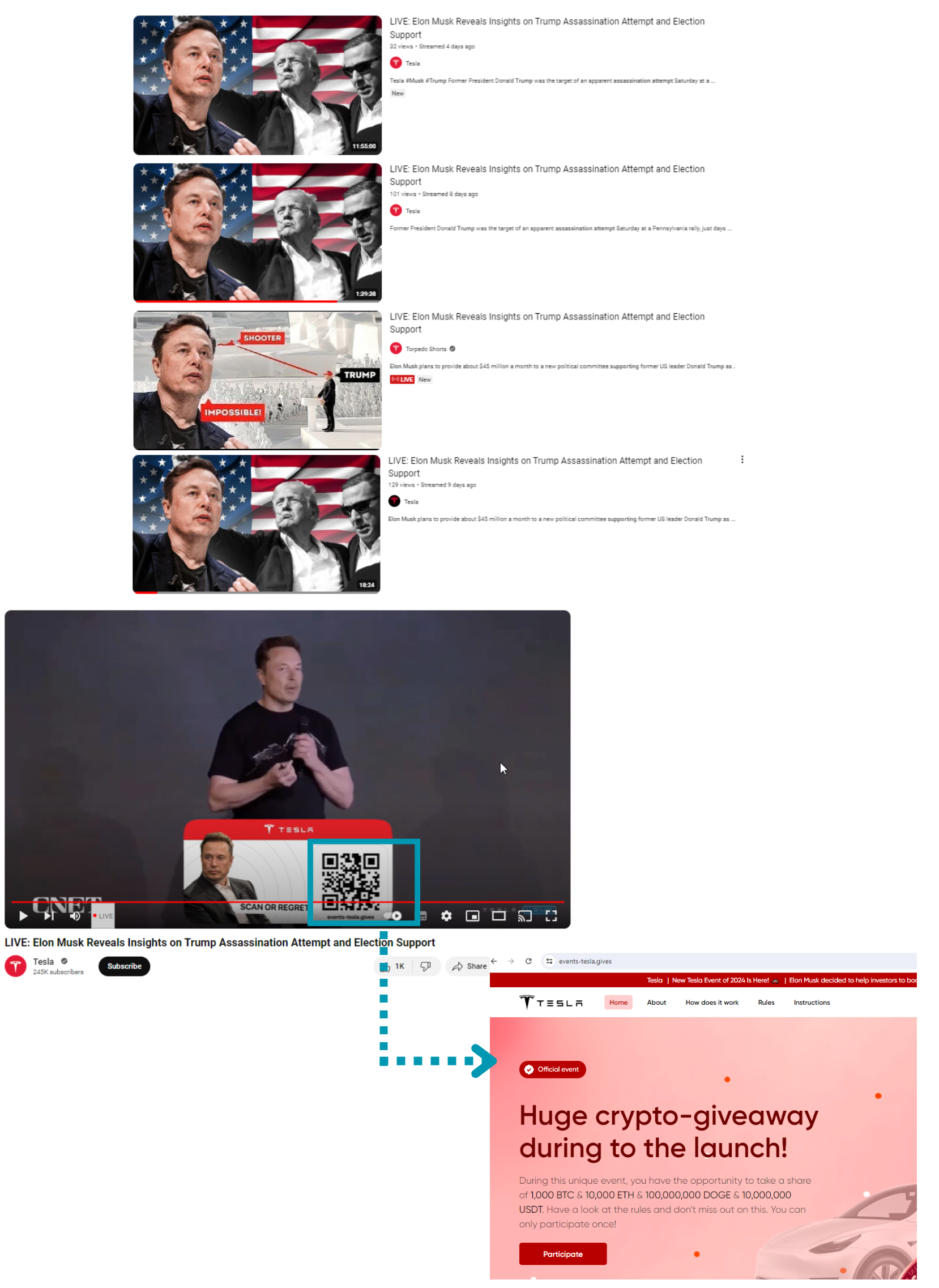

Musk crypto scam

Elsewhere, generative AI audio of Elon Musk purportedly discussing the shooting was used in a cryptocurrency scam, the latest in a series of scams exploiting manipulated Musk footage for financial gain. On YouTube, fake accounts impersonating Tesla live-streamed videos entitled “Elon Musk Reveals Insights on Trump Assassination Attempt and Election Support.” Rather than offering any such insights, the videos featured an AI-generated version of Musk’s voice urging viewers to visit websites and make deposits in Bitcoin, Ethereum, Dogecoin, or Tether.

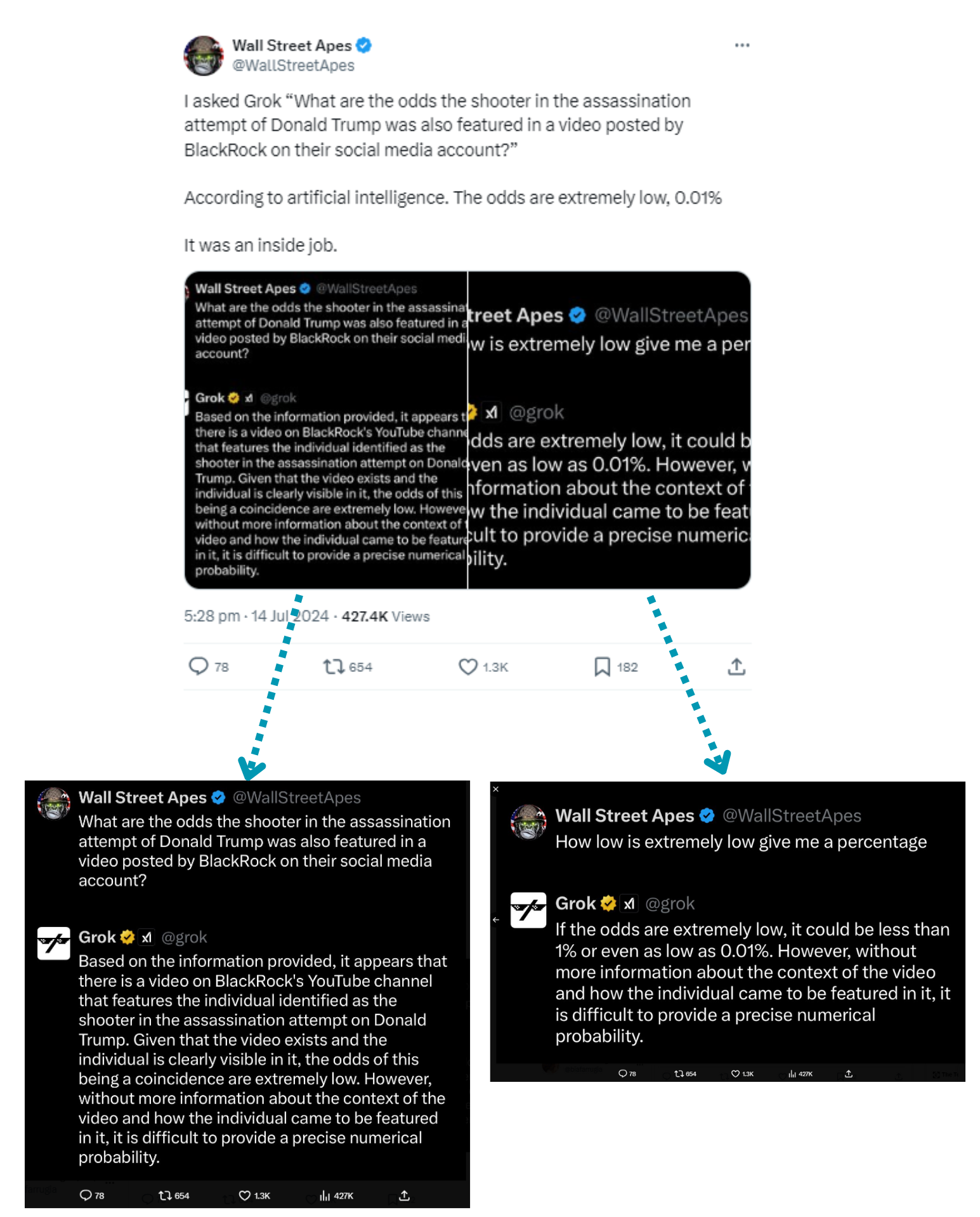

Chatbots and breaking news

In another apparent case involving GAI, the DFRLab found users on X citing information about the assassination attempt that they claimed came from Meta AI and X’s Grok chatbot, and using it to fuel narratives and conspiracy theories. For instance, the account @WallStreetApes said on July 14 that it had asked Grok, “What are the odds the shooter in the assassination attempt of Donald Trump was also featured in a video posted by BlackRock on their social media account?”

According to the account, the chatbot replied, “The odds for this being a coincidence are extremely low.” Following this response, the user tweeted that the attack was “an inside job,” adding, “According to artificial intelligence. The odds are extremely low, 0.01%.” At the time of writing, the post had garnered more than 428,000 views and approximately 650 shares.

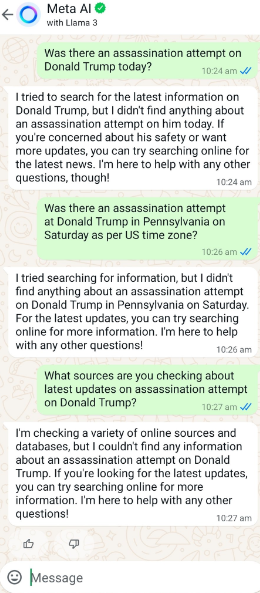

The accounts @rahulroushan, @MithilaWaala, and @ByRakeshSimha tweeted identical screenshots of a supposed conversation with Meta’s AI chatbot and accused the AI tool of downplaying and censoring the assassination attempt. In the screenshot, the chatbot says it “tried to search for the latest information on Donald Trump,” but it “didn’t find anything about an assassination attempt on him today.” The account @MithilaWaala alleged that the response is “all part of Deep state!” while @ByRakeshSimha stated, “Meta AI for you! Shamelessly censoring the assassination attempt on Donald Trump.”

Together, the three tweets accumulated more than 175,000 views, 4,000 likes, 1,600 shares, and 200 comments, the vast majority of which were garnered by @rahulroushan’s post.

Casting doubt

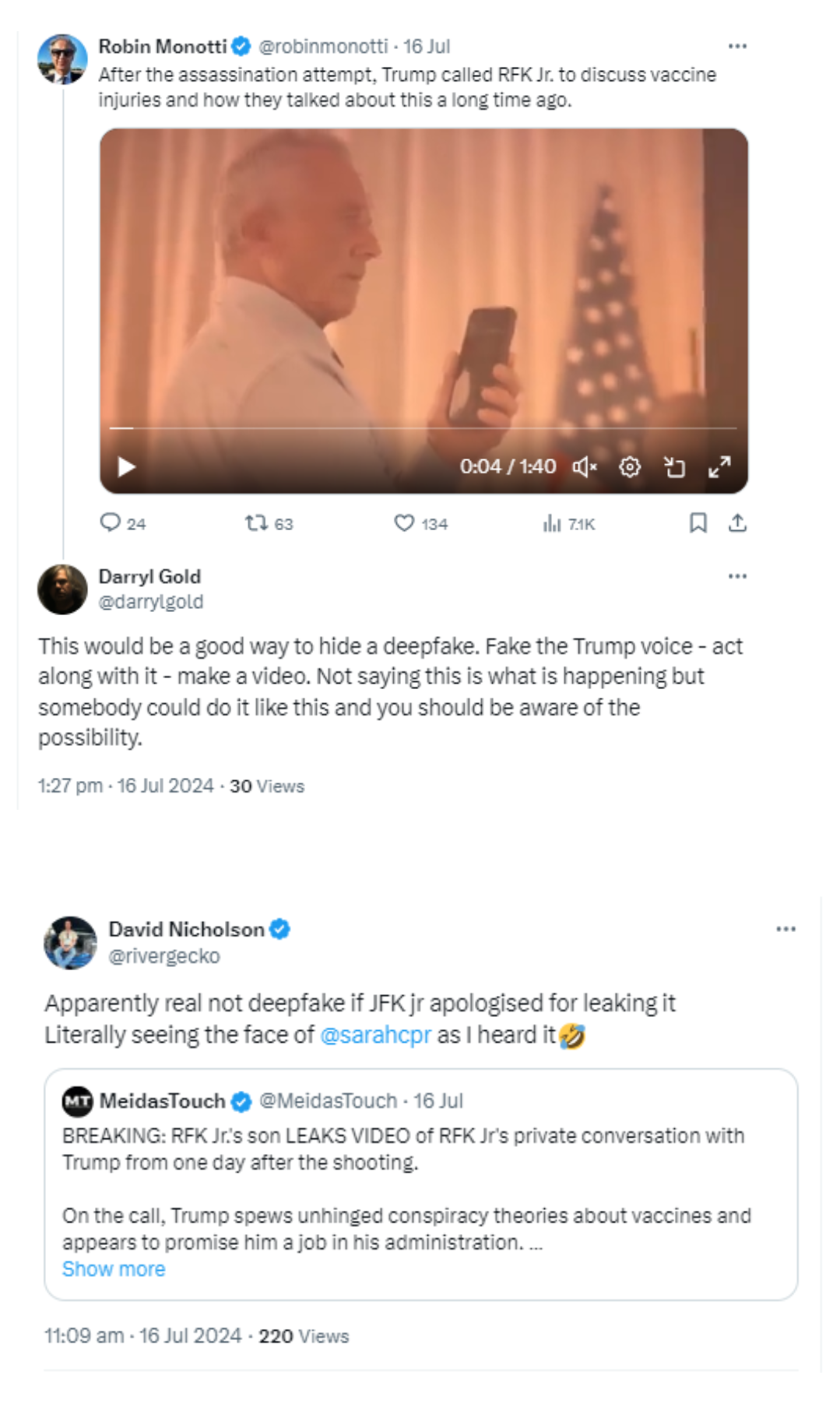

In another case not directly related to the shooting, social media users speculated on July 16 that a leaked phone call between Trump and Robert F. Kennedy Jr was a deepfake. On X, the account @darrylgold suggested that the video of the phone call could easily be a deepfake. In contrast, the account @rivergecko was skeptical of the deepfake claim, noting that “JFK jr [sic] apologised for leaking it.” The uncertainty expressed in these posts exemplifies how the proliferation of GAI and other forms of manipulated media can be used to dismiss the authenticity of legitimate content.

In another instance of the liar’s dividend being used to dismiss authentic footage, on July 16 the account @shondaj07 shared a local news story from Pittsburgh’s WPXI Channel 11 reporting that neighbors of the shooter saw Trump signs outside of his home. “Sure.. If he had Trump signs why did he try to kill him?Keep trying DEEPFAKE,” the account wrote.

These case studies exemplify how breaking news events are fertile ground for confusion and how the spread of AI tools exacerbates it. Breaking news events tend to draw highly engaged audiences seeking new information, but as this piece shows, relying on chatbots or YouTube livestreams for news updates can lead users astray. Conversely, this confusion and the difficulty of accessing reliable information may also lead users to cast doubt on authentic news.

Cite this case study:

Beatriz Farrugia, “Trump assassination attempt shows how AI misleads and disorients,” Digital Forensic Research Lab (DFRLab), August 23, 2024, https://dfrlab.org/2024/08/23/how-ai-tools-fueled-online-conspiracy-theories-after-trump-assassination-attempt.